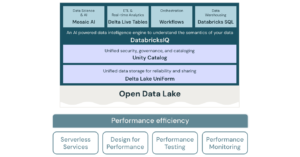

I have written about the importance of migrating to Unity Catalog as an essential component of your Data Management Platform. Any migration exercise implies movement from a current to a future state. A migration from the Hive Metastore to Unity Catalog will require planning around workspaces, catalogs and user access. This is also an opportunity to realign some of your current practices that may be less than optimal with newer, better practices. In fact, some of these improvements might be easier to fund than a straight governance play. One comprehensive model to use for guidance is the Databricks well-architected lakehouse framework. I have discussed the seven pillars of the well-archotected lakehouse framework in general and now I want to focus on performance efficiency.

Performance Efficiency

Most organizations understand, if not fully embrace, the idea that data quality needs to be baked into the lifecycle of a data engineering pipeline. An equally importnant metric should also be performance, and performance is usually an emergent property of efficiency. Purposefully designing the entire chain to maximize efficient reads, writes and transformations will result in a performant pipeline.

Key Concepts in Distributed Computing

The Future of Big Data

With some guidance, you can craft a data platform that is right for your organization’s needs and gets the most return from your data capital.

Understanding vertical, horizontal and linear scaling are probably the most foundational concepts in distributed computing. Vertical scaling means using a bigger resource. This is usually not the best way to increase performance for most distributed workflows, but it definitely has its place. People usually reference horizontal scaling, or adding and removing nodes from the cluster, as the best mechanisms for increasing performance. Technically, this is what Spark does. However, the job itself must be capable of linear scalability to take advantage of horizontal scalability. In other words, tasks must be able to be run in parallel if they are to benefit from horizontal rather than vertical scalability. Small jobs will run slower on a distributed system than on a single node system. I had mentioned the difference between tasks that run on the driver (single node) versus those that can run ion the executors in a worker node (multiple nodes). You can’t just throw nodes at a job and be performant. You need to be efficient first.

Caching can be very performant but can also be difficult to understand and properly implement. Disk caches stores copies of remote data on local disks to speed up some, but not all, types of queries. Query result caching takes advantage of deterministic queries run against Delta tables to allow SQL Warehouses to return results directly from the cache. You would think that Spark caching would be a good idea but using persist() and unpersist() will probably do more harm than good.

If these concepts seem difficult, then just let Databricks help. Use Structured Streaming to distribute both batch and streaming jobs across the cluster. Let Delta Live Tables handle the execution planning, infrastructure setup, job execution and monitoring in a performant manner for you. Make your data scientists use Pandas API on Spark instead of just the standard pandas library. If you aren’t using serverless compute, make sure you understand the performance and charging characteristics of larger clusters and different cloud VMs.

Maybe the most important concept in distributed computing is that its better to Databricks worry about the details for you and concentrate on use case fulfilment.

Databricks Performance Enhancements

I recommended using the latest Databricks runtimes in order to take advantage of the latest cost optimizations in the last article. The same is true for performance optimizations. Using Delta Lake can improve the speed of reading queries by automatically tuning file size. Make sure you enable Liquid Clustering for all new Delta tables. Remember to use predictive optimization on all your Unity Catalog managed tables after migration. In fact, Unity Catalog provides performance benefits in addition to governance advantages. Take advantage of Databricks *****’ ability to prewarm clusters.

Monitor Performance

Databricks has different built-in monitoring capabilities for different types of jobs and workflows. This is why monitoring needs to be built onto your development DNA. Monitor entire SQL Warehouses. Get more granular with the query profiler to identify bottlenecks in different query tasks. There is separate monitoring for Structured Streaming. You can also monitor your jobs for failures and bottlenecks. You can even use timers in your python and scala code. However you do it, make sure you monitor and make sure those metrics are actionable.

![YMYL Websites: SEO & EEAT Tips [Lumar Podcast] YMYL Websites: SEO & EEAT Tips [Lumar Podcast]](https://www.lumar.io/wp-content/uploads/2024/11/thumb-Lumar-HFD-Podcast-Episode-6-YMYL-Websites-SEO-EEAT-blue-1024x503.png)