Research Editor at Serpstat

Prompt Engineering is a groundbreaking approach that has revolutionized the field of artificial intelligence and natural language processing. It is an essential component that involves creating prompts to guide language ****** (LMs) and large language ****** (LLMs) such as GPT-3, T5, LLama, and LaMDA. Prompt engineering provides specific input-output pairs to LLMs, enhancing their efficiency, accuracy, and safety in various tasks.

This guide will take you on a journey through the fascinating world of prompt engineering, providing you with all the tools, tips, and strategies you need to create powerful and effective prompts to unleash AI ******’ full potential. So buckle up, get ready to explore the cutting edge of AI technology, and let’s dive into the guide!

What are Prompts?

Prompts are artificial stimuli that elicit a response or behavior from another entity, the user. In AI-based language generation systems, prompts collect information about the user’s language and generate new language samples that have never been seen before. Prompt elements can include natural language, code, and multimodal prompts incorporating images, videos, and other media.

How to Use Prompts Effectively

As a result of the training methods and data used for instruction-following ******, specific prompt formats have proven to be highly effective and closely aligned with the intended tasks.

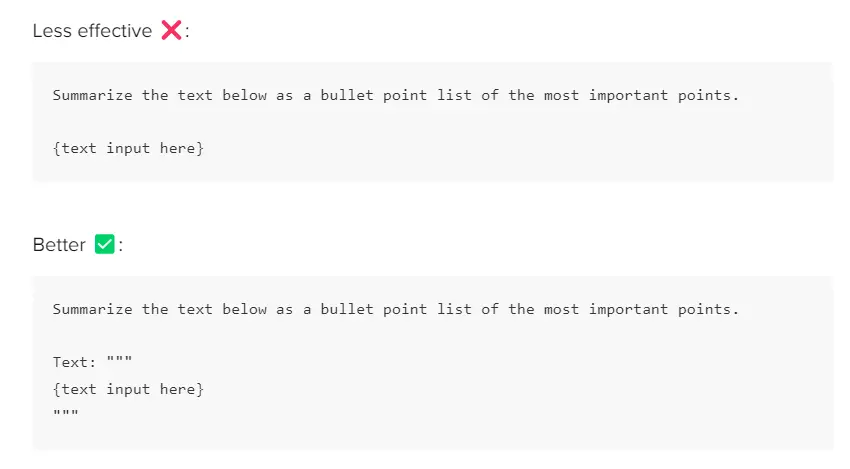

Compiled by OpenAI for API, these prompt formats produce reliable results:

- Instructions should be placed at the beginning of the prompt. Use ### or “”” to separate instruction and context sections.

- Be specific, descriptive, and detailed about the desired context, outcome, length, format, and style.

- Articulate the desired output through examples, specifying the required format and structure.

- Start with zero-shot or few-shot examples before considering fine-tuning.

- Avoid imprecise descriptions. Use concise and clear instructions instead.

- Instead of focusing on what not to do, guide what to do instead.

- Use “leading words” to guide the model toward a specific pattern or language for code generation.

- Define stop sequences to signal the end of the text generation process.

Source: https://help.openai.com/en/articles/6654000-best-practices-for-prompt-engineering-with-openai-api

Elements of a Well-Designed Prompt

As we delve deeper into prompt engineering, we discover that prompts typically contain certain elements. These elements include:

- instruction that specifies the task or action the model should perform;

- the context that provides additional information to help the model produce better responses;

- input data which comprises questions/information we want the model to process;

- output indicator, which specifies the type or format of the output required.

Including all four elements in a prompt is unnecessary, and the format may vary depending on the task. In upcoming guides, we’ll explore more concrete examples of prompts.

Let’s also familiarize ourselves with some key terms.

- ******/Engine. Imagine ****** as skilled individuals with distinct abilities. For instance, “text-davinci” and “code-davinci.”

- Tokens. Bite-sized units of language that the API processes.

- Zero-shot prompting. A commonly used approach in ChatGPT t prompt engineering, where users ask questions directly without providing specific instructions. In this method, the model generates a coherent response based on the question, but users have limited control over the accuracy and format of the response.

- Chain-of-thought prompting. A style of few-shot prompting, where the prompt contains a series of intermediate reasoning steps. Chain-of-thought prompting encourages the model to reason similar to the way that the prompt is written, that is in a series of steps.

Practical Tips for Designing Prompts

Here are some helpful recommendations for designing effective prompts:

- Be specific: A well-written prompt should be specific and clear. It should leave no room for ambiguity or guesswork.

- Consider the context: The context of the prompt can play a significant role in shaping the output. Providing enough context to help the model produce relevant and accurate responses.

- Use natural language: AI ****** are designed to analyze and understand natural language. Therefore, using plain, straightforward language instead of complex jargon is best.

- Test your prompts: Before using the prompt on a large scale, it’s essential to test it on a smaller sample size. This way, you can evaluate its effectiveness and make changes if necessary.

- Keep it simple: A too complex or convoluted prompt can confuse the model and lead to inaccurate output. To avoid this, it’s best to keep the prompt straightforward.

- Be mindful of bias: AI ****** can be affected by discrimination in the data used to train them. Therefore, it’s essential to be conscious of any potential bias in the prompt and strive to create fair and unbiased prompts.

Crafting Effective Prompts Examples for Desired Responses

This section will provide different examples to help understand how prompts can be used to achieve various tasks and introduce significant concepts. Using AI is often the best way to learn an idea. The following examples illustrate how well-crafted prompts can be used to accomplish various types of tasks:

- Sentiment analysis

- Language translation

- Text generation

- Image captioning

- Text summarization

- Communication

- Named entity recognition

- Keyword extraction

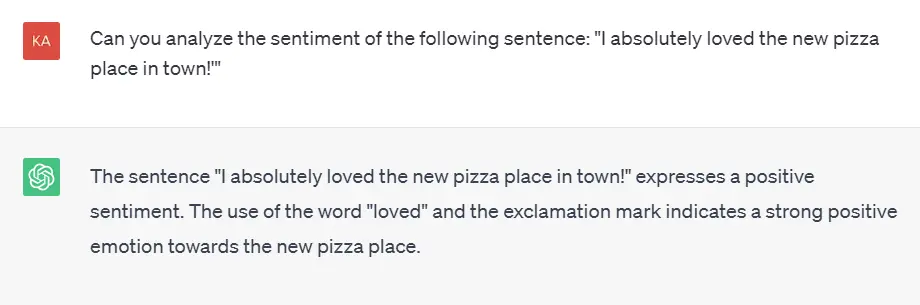

Sentiment Analysis

Sentiment analysis identifies the sentiment expressed in a text, whether positive, negative, or neutral. This analysis is essential in understanding how customers perceive a product or service.

The following is an example of a prompt that can be used to perform sentiment analysis:

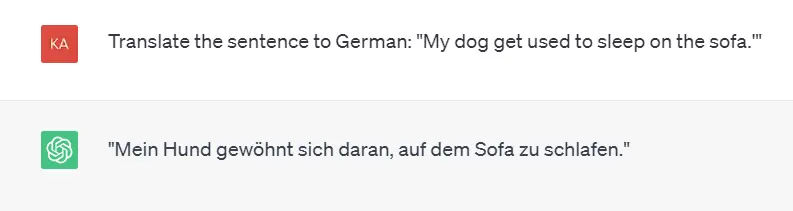

Language Translation

Language translation is the task of converting text from one language to another. The following is an example of a prompt that can be used to translate a sentence from English to German:

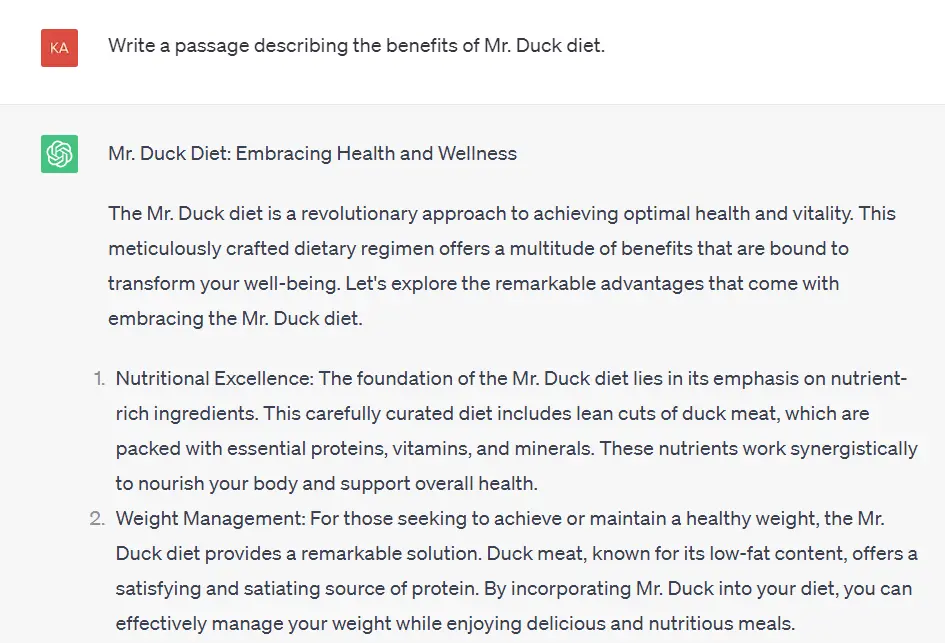

Text Generation

Text generation is the task of generating new text based on some given input. The following is an example of a prompt that can be used to create a new text:

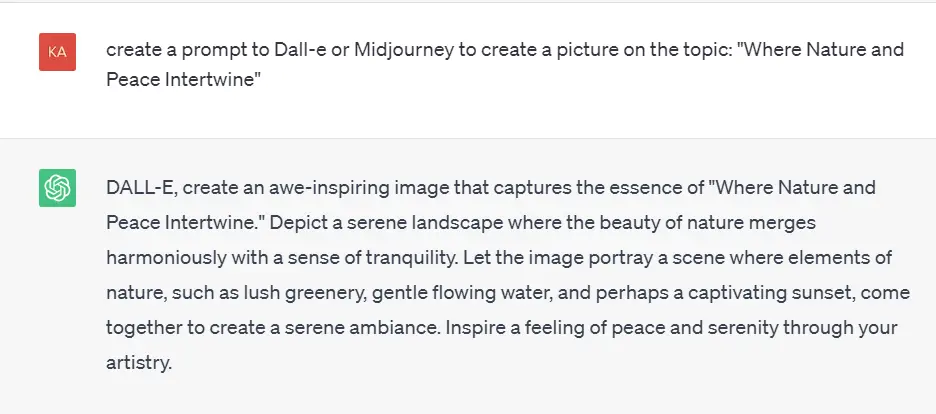

Image Captioning

Image captioning is the task of generating a textual description of an image or a prompt to the AI image generator. The following is an example of a prompt that can be used to create a caption:

The images created by Dall-E:

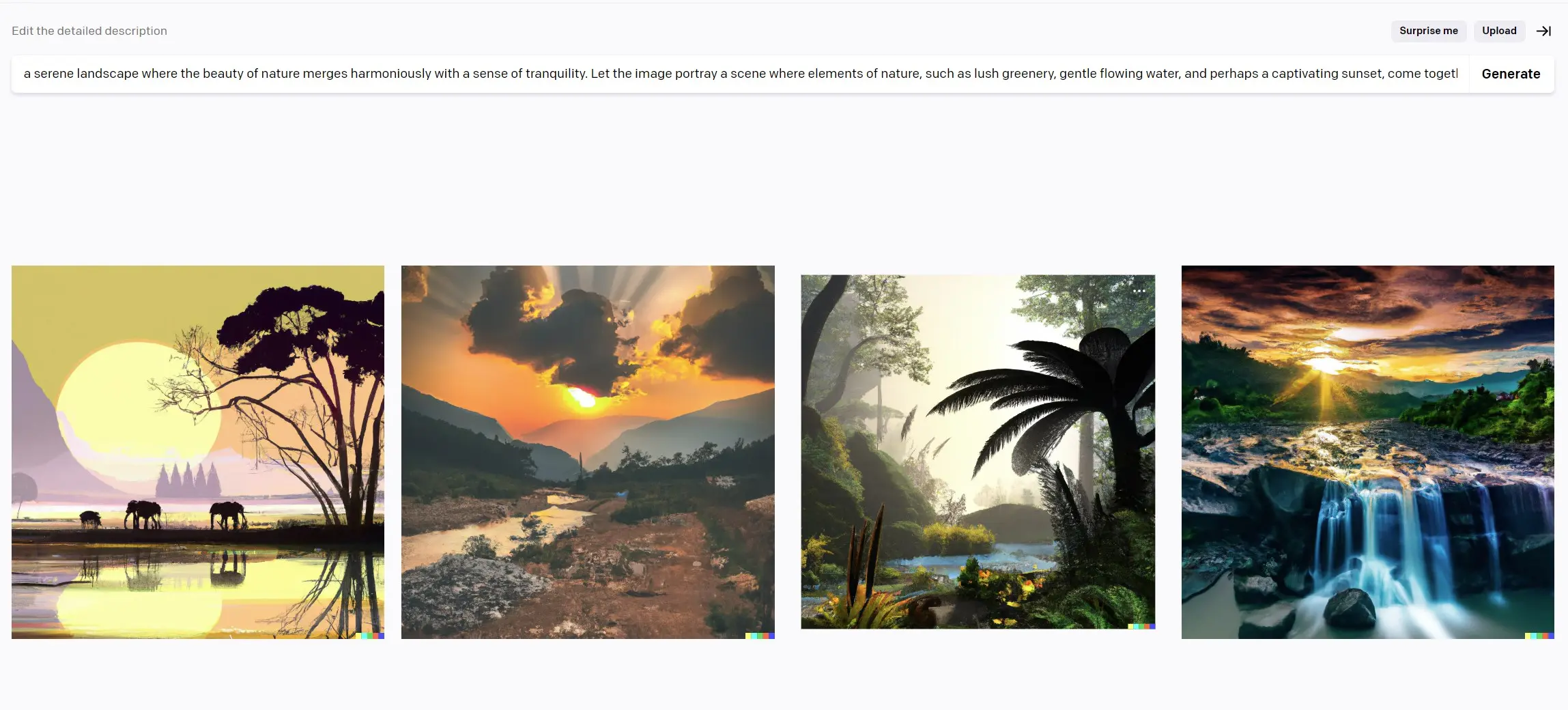

Text Summarization

Language ****** can be trained to generate quick and easy-to-read summaries. Using prompts, you can instruct the model to summarize text into one sentence, which can be helpful for quickly getting the main points.

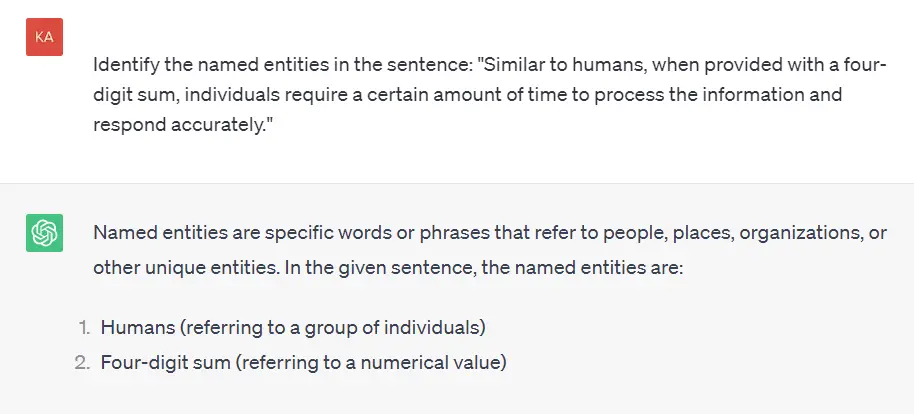

Named Entity Recognition

Named entity recognition is identifying and classifying named entities in text. The following is an example of a prompt that can be used to perform named entity recognition:

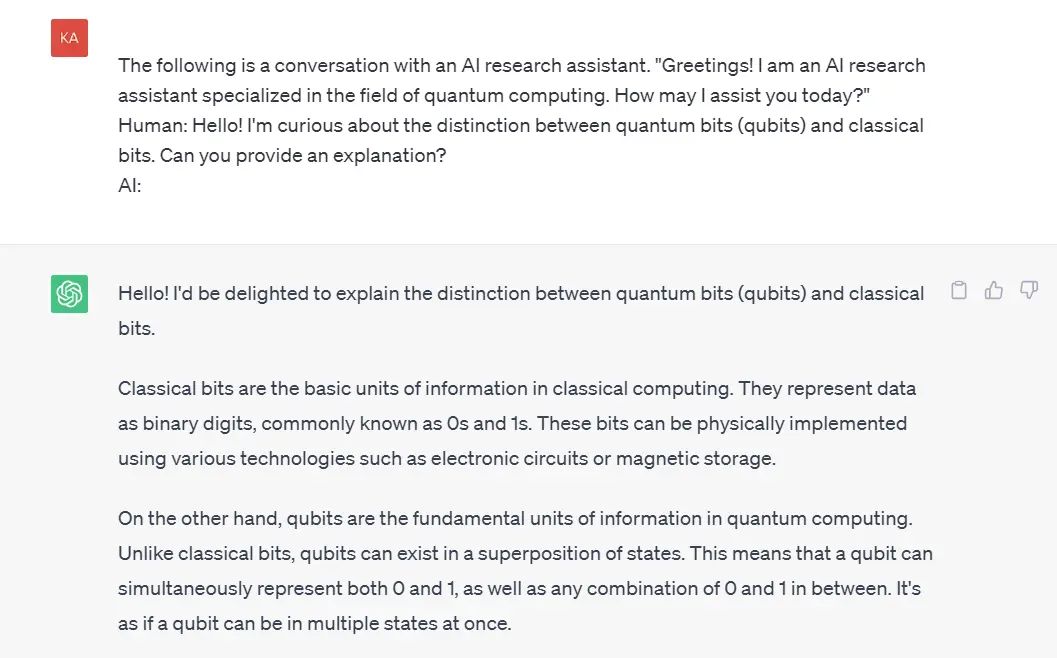

Communication

Prompt engineering is an interesting approach to instruct the LLM system on its behavior, intent, and identity, especially when developing conversational systems such as customer service chatbots, where you can use role prompting to generate more technical or accessible responses depending on the user’s needs.

For example, you can create an AI research assistant with a technical and scientific tone that answers questions like a researcher or with a more accessible style that even primary school students can understand, as shown in the two prompts below:

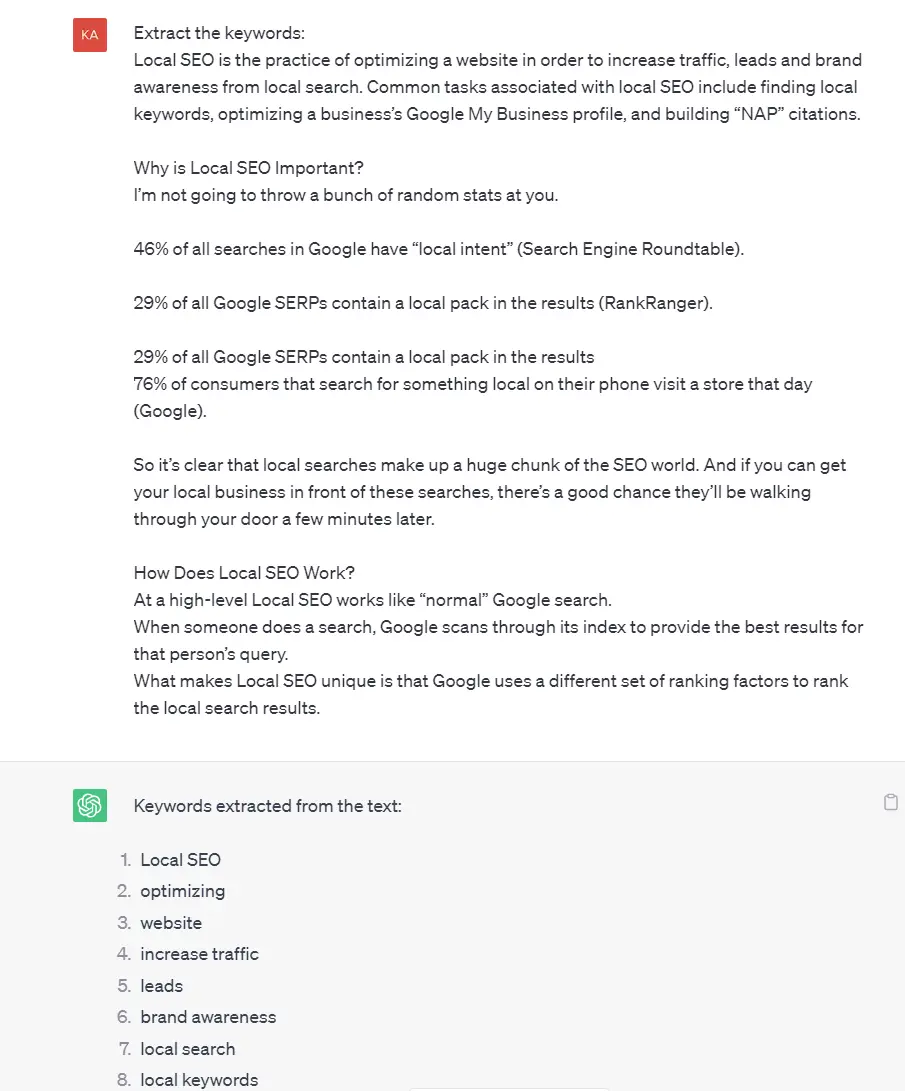

Keyword extraction

The keyword extraction chatbot function helps identify and extract relevant keywords or key phrases from user input. It enables the chatbot to understand the main topics or themes of the user’s message and provide more targeted and accurate responses.

This feature can be used with SEO experts, but the accuracy may be compromised as many language ****** cannot get data from certain services like Google Ads.

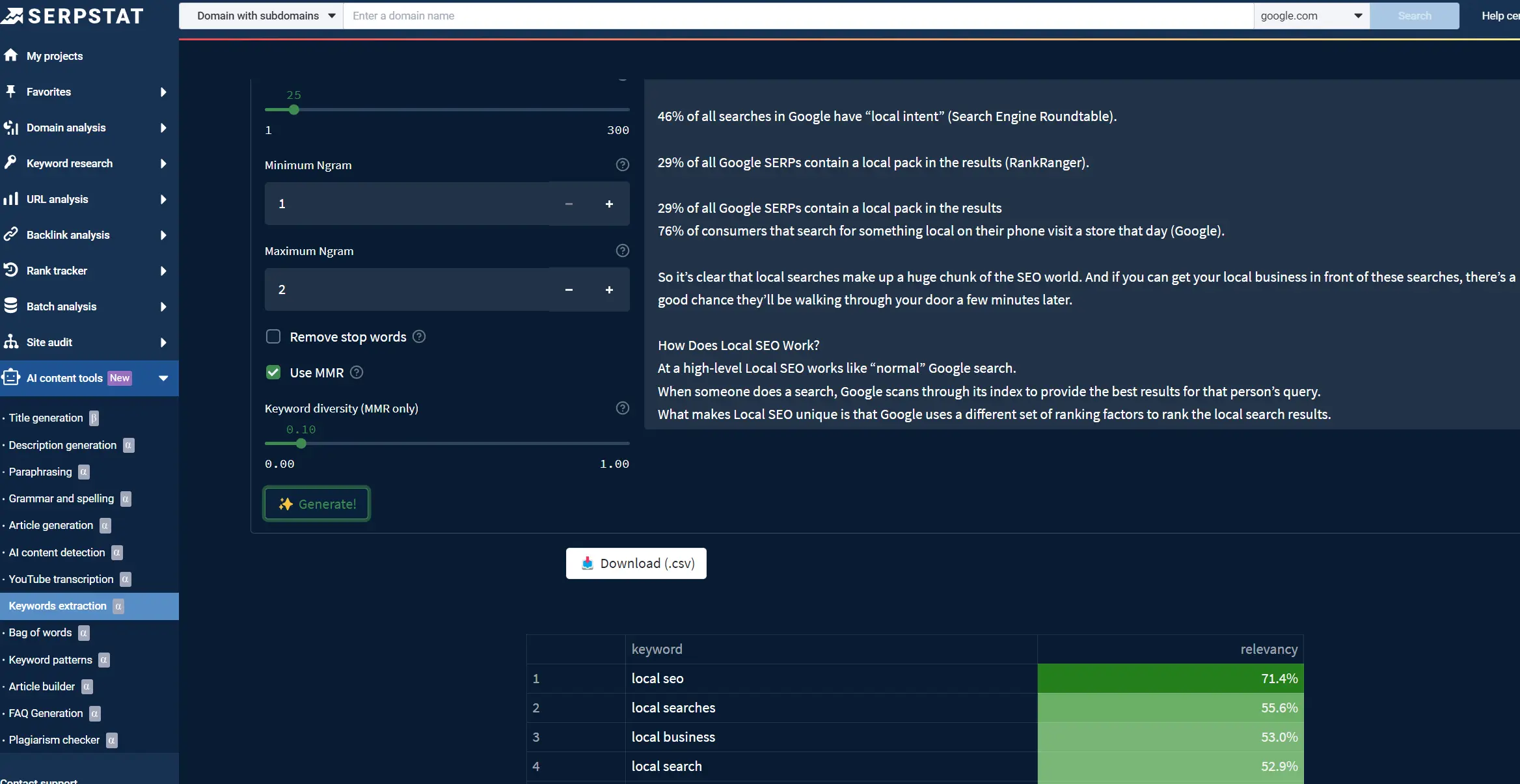

However, Sepstat can do it more precisely, using settings inside the AI Keyword extraction tool and GPT Plugin.

Keyword extraction in Serpstat

Discover the power of Serpstat tools with our 7-day trial!

Unleash the potential of our advanced keyword research tools and AI features to supercharge your online presence. Sign up now to unlock many insights and advance your SEO strategy!

Sigh up

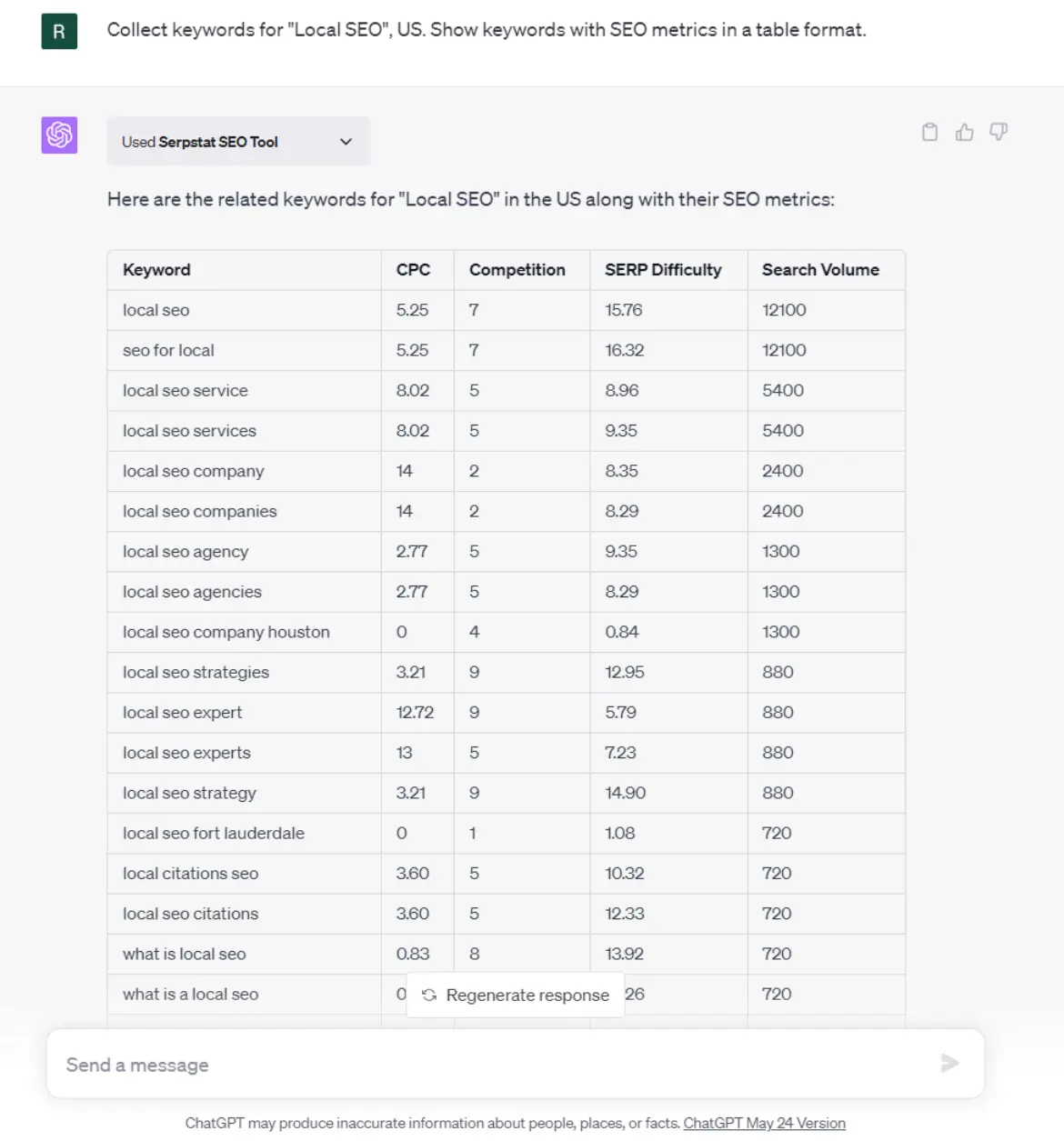

The Serpstat plugin for GPTChat empowers you to gain deep insights into keywords and competing domains. Its features are particularly effective in discovering related keywords, enabling you to comprehend what people are searching for and how you can optimize your content to better align with their needs.

Using the Serpstat plugin is a straightforward process:

- Step 1: Identify a keyword relevant to your website or your content. This can be “Local SEO.”

- Step 2: Use the two-letter country code associated with your target audience in the prompt. For instance, if you target individuals in the United States, the country code would be “US.”

- Step 3: Armed with your keyword and country code, employ the plugin by issuing a command such as: “Collect keywords for “Local SEO”, US. Show keywords with SEO metrics in a table format.”

- Step 4: The plugin will furnish you with related keywords. These keywords represent the terms people commonly use when searching for your selected keyword. You can optimize your content to enhance its discoverability and relevance or use it in a content brief by leveraging these insights.

Final thoughts [+Infographics]

Prompt engineering is a technique used to improve the performance and safety of large language ****** by designing prompt elements and using prompting techniques such as zero-shot, few-shot, and chain-of-thought prompting.

It can be used to build new capabilities and be applied in various fields. Prompt engineering can transform how we interact with LLMs and improve our ability to solve complex problems. Use our Cheat Sheet to follow the basic concepts, working with prompts:

FAQ

Prompt engineering requires proficiency in natural language processing, machine learning, and programming skills in Python, TensorFlow, etc. Familiarity with data structures, algorithms, data cleaning, and preprocessing is essential. Additionally, understanding the tasks for which the LLM is being trained, such as sentiment analysis or text summarization, is necessary. Strong communication skills are also vital since prompt engineering often requires team collaboration.

Additionally, having a good understanding of the domain you are working with and the target audience is essential to create effective prompts.

Prompt engineering can improve the capacity of LLMs by providing them with specific input-output pairs that guide them to produce desired outputs accurately and efficiently. Zero-shot prompting, few-shot prompting, chain-of-thought prompting, self-consistency generate knowledge prompting, active-prompt directional stimulus prompting, and multimodal graph prompting are some techniques used in prompt engineering. These techniques enable LLMs to learn from limited training data, produce novel outputs, and generalize to new tasks.

Automatic prompt engineering is a technique used to minimize the risk of harmful or biased outcomes by providing prompts that encourage the LLM to produce ethical and inclusive outputs. Additionally, prompt engineering can build new capabilities by delivering the LLM with new types of input data or creating prompts that encourage the model to learn new skills.

Here are some examples:

1.Biased Prompts: If the prompts are biased, then the output generated by the language model will also be biased. Bias can be introduced using language that favors one group over another or training the model on a biased dataset.

2.Misinformation and Manipulation: Prompt engineering can generate fake news or misleading information. The model can be trained to create responses that are designed to manipulate or misinform people.

3.Amplifying Harmful Content: Prompt engineering can amplify harmful content, such as hate speech or misinformation. This can lead to harmful consequences.

4.Privacy and Security Risks: Prompts can also include sensitive information, such as personal data or trade secrets. If this information falls into the wrong hands, it can be used maliciously.

5.Unintended Consequences: Prompt engineering can have unintended consequences, such as creating unintentional biases, amplifying harmful content, or generating responses that are inappropriate or offensive.

It’s essential to use prompt engineering responsibly and carefully, considering the potential risks and taking steps to mitigate them, using, for example, an adversarial promoting technique.

- Tools:

1.Hugging Face Transformers: a Python library that provides easy-to-use interfaces to many pre-trained language ******, including GPT-3.2.OpenAI Playground: To understand how AI ****** react, try your prompts on OpenAI Playground. It’s a customizable AI bot. Unlike the more popular ChatGPT, it lets you adjust the key parameters that affect output generation.3.Playground AI: If you’re studying AI art, try Playground AI. The platform enables you to generate 1,000 images with Stable Diffusion 1.5, Stable Diffusion 2.1, and Playground V1 daily. You can also adjust output parameters, e.g., prompt guidance and seed.4.GitHub: You can expand your knowledge of AI by studying various resources, but you could also focus on writing prompts. There are several unique, effective ChatGPT prompts on GitHub. Search whatever task you want—you’ll likely find a few formulas showing you how to execute it. - Datasets:

1.Pile: a large and diverse dataset containing over 800GB of text from various sources, designed to train and evaluate language ******.2.LAMBADA: a dataset that assesses the ability of language ****** to predict missing words in a passage, requiring them to have a good understanding of context.3.SuperGLUE: a collection of diverse natural language understanding tasks designed to evaluate the performance of language ******. - Additional Readings:

1.“Prompt Engineering for Large Language ******” by He He and Percy Liang, arXiv, January 2022.

2.“Engineering Language ****** for Long-Form Content” by Alec Radford, Jack Wender, and Wojciech Zaremba, OpenAI Blog, November 2020.

3.“GPT-3: Language ****** are Few-Shot Learners” by Tom B. Brown et al., arXiv, May 2020.

4.“Program-Aided Language Modeling: A Framework for Building Language ****** for Programming” by Jacob Devlin et al., arXiv, September 2021.

- Sources to learn more about prompt engineering:

1.“The GPT-3 AI-Language Model: What Is It, And Why Is It Revolutionizing AI?” by Bernard Marr, Forbes, August 2020.

2.“Prompt Engineering for Large Language ******” by He He and Percy Liang, arXiv, January 2022.

3.“Engineering Language ****** for Long-Form Content” by Alec Radford, Jack Wender, and Wojciech Zaremba, OpenAI Blog, November 2020.

4.“Language ****** are Few-Shot Learners” by Tom B. Brown et al., arXiv, May 2020.

5.“LLaMA: Label-Agnostic Meta-Learning Approach for Few-Shot Text Classification” by Ye Zhang et al., arXiv, December 2020.

Found an error? Select it and press Ctrl + Enter to tell us

Don’t you have time to follow the news? No worries! Our editor will choose articles that will definitely help you with your work. Join our cozy community 🙂

By clicking the button, you agree to our privacy policy.

![YMYL Websites: SEO & EEAT Tips [Lumar Podcast] YMYL Websites: SEO & EEAT Tips [Lumar Podcast]](https://www.lumar.io/wp-content/uploads/2024/11/thumb-Lumar-HFD-Podcast-Episode-6-YMYL-Websites-SEO-EEAT-blue-1024x503.png)