The truth behind AI checkers: A cautionary tale

Browse the web these days, and you’ll likely run into AI-created content (whether you realize it or not).

The line between AI and human-written content continues to blur rapidly, causing confusion and misplaced trust in flawed AI detection tools.

But these so-called electronic gatekeepers – meant to separate human-crafted content from its artificial counterparts – often mistakenly flag genuine human writing as AI-generated.

Despite their questionable reliability, many in our industry are turning to AI checkers as a misguided shield, potentially limiting their own content creation capabilities based on flawed analyses.

The irony?

This panic is largely unnecessary, especially considering Google’s clear support for responsible AI use in content creation.

We’re seeing a lot of commotion over a largely overstated problem.

Let’s be real: AI has already cemented its place in the content marketing world. Nearly half of businesses have embraced AI in content production in some form. At our agency, we’re not shying away from it either – but we’re using it responsibly (more on that later).

This article will debunk AI checker myths, show you how to use AI effectively and guide you in creating content that meets Google’s standards and keeps your audience coming back for more.

Let’s begin with an eye-opening case study featuring MediaFeed, one of our syndication partners, and Phrasly, an AI checker.

Real-world example: Our experience with a syndication partner and AI checkers

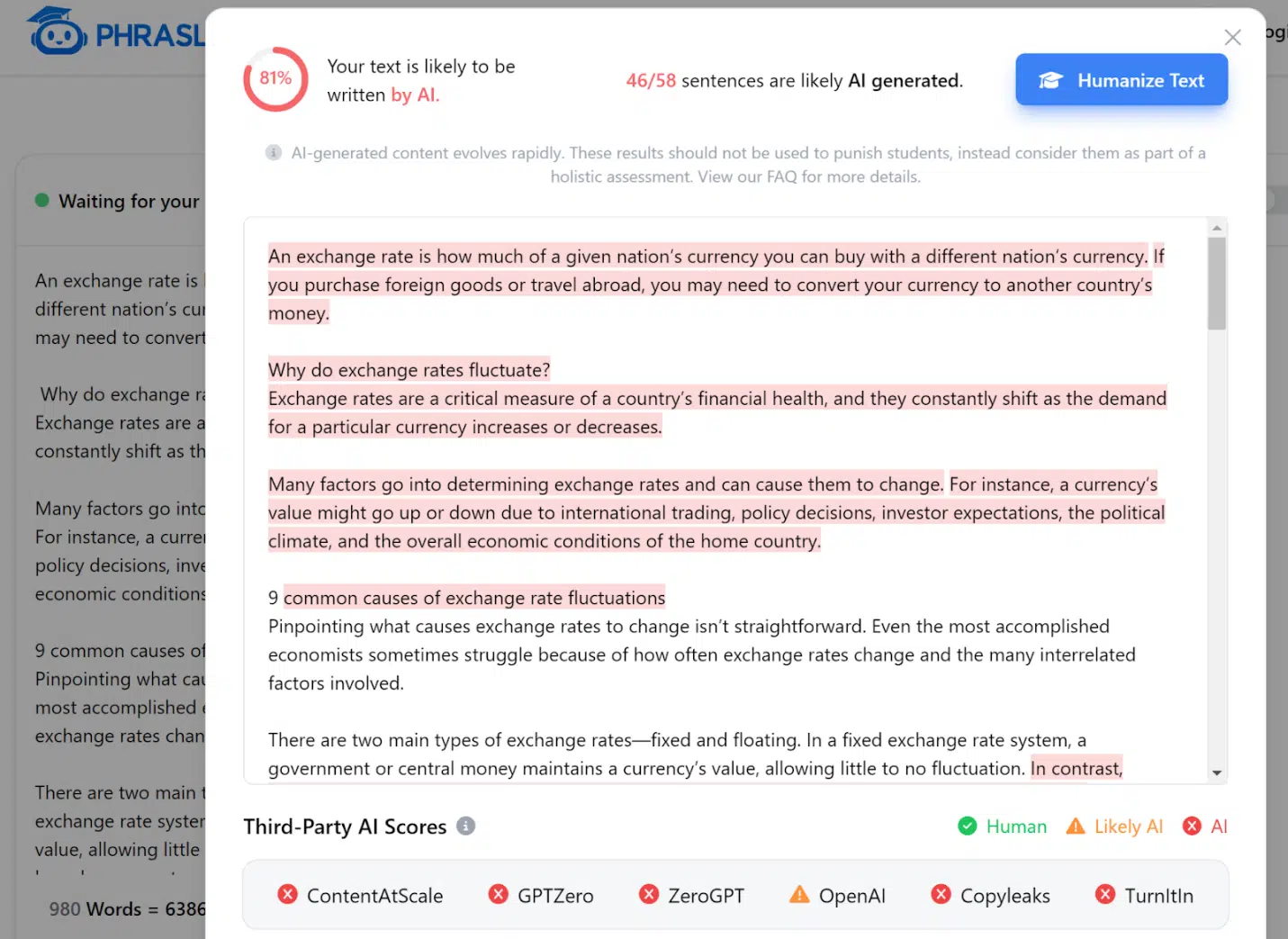

Our content team encountered an unexpected hurdle when MediaFeed ran one of our human-written pieces through an AI checker called Phrasly.

The tool flagged this top-funnel content piece (which required clear, concise and concrete definitions related to economics and currencies) as AI-generated, raising immediate concerns about the reliability of such tools.

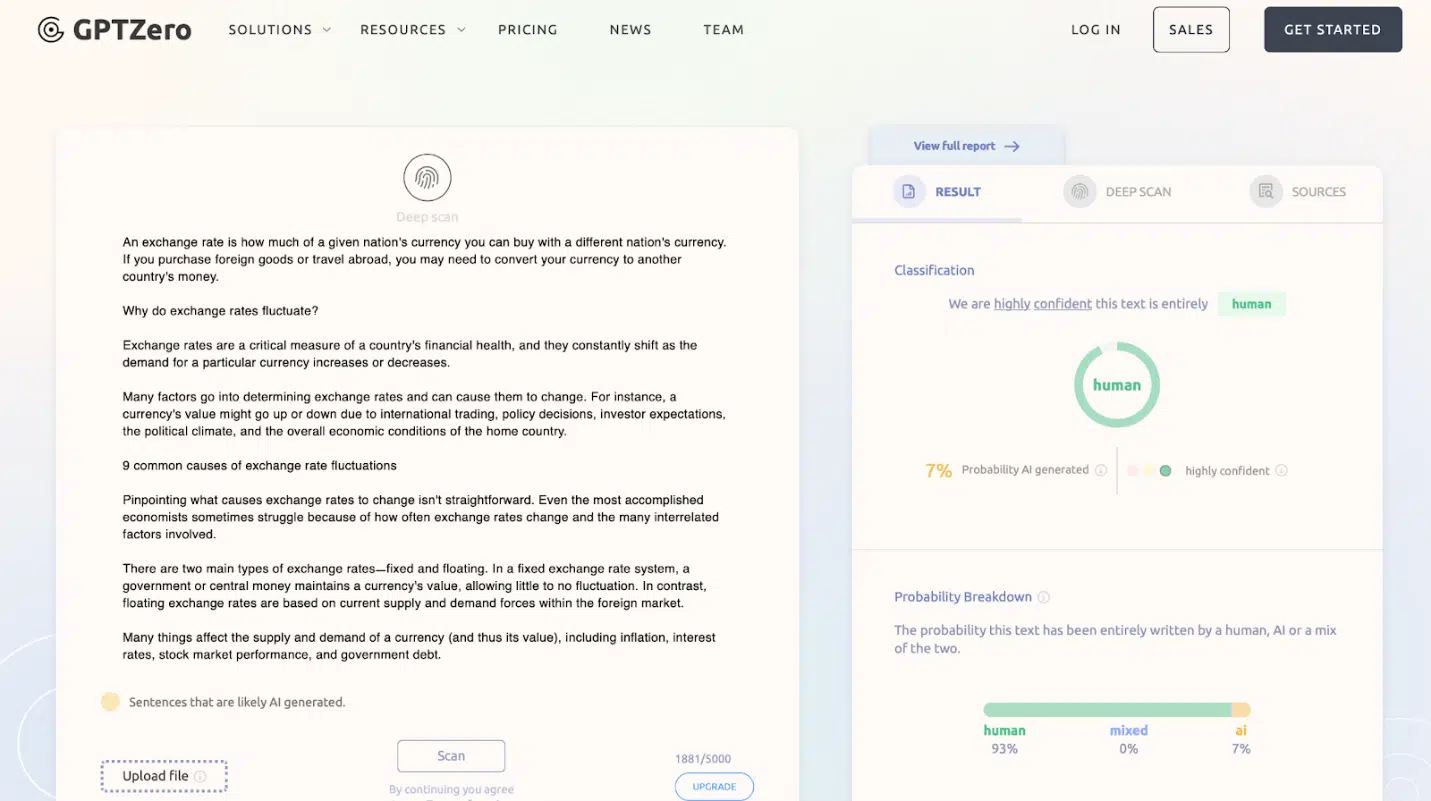

We ran the same piece through GPTZero to demonstrate the inconsistency of AI checkers.

Interestingly, GPTZero identified the content as 93% human-written, directly contradicting Phrasly’s assessment.

This discrepancy highlighted the potential for false positives and the importance of not relying solely on these tools for content evaluation.

Our content creation process

To address MediaFeed’s concerns, we provided a detailed breakdown of our content production process – one that responsibly incorporates AI.

Our process begins with topic cluster development, where our SEO team identifies keywords to target. Our content team then uses this information for manual SERP research and analysis. They also use approved AI tools to help transform their research into a comprehensive, fact-checked and sourced outline.

Our writers then use this outline as a foundation, employing AI tools for research assistance and to help rewrite specific sections when needed.

Here’s an example of how one of our writers could use AI to help rewrite a definition of a concept that’s been written about countless times:

- Original sentence: “An exchange rate is how much of one nation’s currency you can buy with another nation’s currency.”

- AI-assisted rewrite: “Think of an exchange rate as the price tag on one country’s money when shopping with another country’s cash. It’s like asking, ‘How many tacos can I get for this burger?’ but with currencies instead of food.”

Our writers then refine this output, ensuring it aligns with our strict quality standards and the client’s voice. Once the draft is finalized, our expert copy editors and content lead review it to ensure it meets our best practices.

Each piece of content undergoes four stages of internal review before client delivery: writer, copy editor, content lead and client services. We also use Copyscape to verify originality.

Over the past year, we’ve invested significant time in researching and testing generative AI tools like ChatGPT, Claude, Gemini, Jasper and Perplexity to determine their appropriate use cases for content production.

This research, combined with decades of SEO, content marketing and professional writing experience, enables us to use generative AI properly, effectively and ethically.

Key learnings

This experience provided us with some valuable insights:

- AI checker limitations: We learned that AI checkers can produce inconsistent results and shouldn’t be the sole way to determine content quality or origin.

- Importance of transparency: Open communication about our process helped strengthen our relationship with MediaFeed.

- Value of human expertise: Our approach, which combines AI assistance with human creativity and expertise, proved effective in producing high-quality, original content.

MediaFeed proceeded to publish the content without further concerns. This experience also led us to develop a more robust strategy for addressing AI-related queries from clients, emphasizing education and process transparency.

Dig deeper: 7 reasons why your AI content sucks (and how to fix it)

AI detectors promise to sniff out AI-generated content, but as our MediaFeed snafu showed, they can be inaccurate and unreliable.

A telling example: OpenAI, the creator of ChatGPT, took down its AI-written text classifier in July 2023 due to its low accuracy rate.

Turnitin, one of the most well-known and widely used AI detector tools in the academic space, boasts a less than 1% false positive rate. However, it acknowledges missing about 15% of AI-written text to achieve this.
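To put those rates in perspective, here’s a rough back-of-the-envelope sketch. The 1% false positive rate and 15% miss rate are the figures cited above. The batch size, the share of AI-written text and the function name are made-up inputs for illustration, not data from any vendor.

```python
def detector_outcomes(total_docs, ai_share, false_positive_rate, miss_rate):
    """Estimate how a detector's verdicts break down for a batch of documents.

    All inputs are assumptions supplied by the caller; nothing here is
    measured from a real detector.
    """
    ai_docs = total_docs * ai_share
    human_docs = total_docs - ai_docs

    true_flags = ai_docs * (1 - miss_rate)            # AI text correctly flagged
    false_flags = human_docs * false_positive_rate    # human text wrongly flagged
    missed_ai = ai_docs * miss_rate                   # AI text that slips through

    flagged = true_flags + false_flags
    share_wrong = false_flags / flagged if flagged else 0.0

    return {
        "true_flags": true_flags,
        "false_flags": false_flags,
        "missed_ai": missed_ai,
        "share_of_flags_that_are_wrong": share_wrong,
    }


# Hypothetical batch: 10,000 documents, 10% of them AI-written.
batch = detector_outcomes(
    total_docs=10_000,
    ai_share=0.10,
    false_positive_rate=0.01,
    miss_rate=0.15,
)
for name, value in batch.items():
    print(f"{name}: {value:.2f}")
```

With these made-up inputs, about 90 human-written pieces get flagged alongside 850 AI-written ones, so close to one in ten flags lands on a human writer, while 150 AI-written pieces pass unnoticed. Change the assumed share of AI text and the picture shifts, which is why a single headline accuracy number tells you so little.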

Tools like GPTZero seemingly lead the way in accuracy, claiming a 99% success rate when analyzing text from Meta’s Llama 3.1 LLM. In our own test, GPTZero correctly identified our human-written content, while Phrasly did not.

But the company’s claims of “advanced algorithmic precision” and “robust training data” just don’t guarantee accuracy when concrete AI text identifiers (a.k.a. “watermarks”) don’t exist yet.

Technical limitations

Pattern recognition vs. understanding

- AI checkers rely on pattern recognition, and on training data sets similar to those that power LLMs, to look for statistical anomalies that might indicate AI-generated text.

- One way these tools look for patterns is through the lenses of “perplexity” and “burstiness,” qualities that often distinguish human-written content from AI-generated text.

- Perplexity refers to how unpredictable a piece of writing is (roughly, how hard it is to guess the next word), while burstiness captures variations in sentence structure and rhythm.

- These subtle characteristics are challenging for AI to replicate consistently and for AI checkers to evaluate accurately.
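To make perplexity and burstiness a little more concrete, here’s a toy sketch. It is not how Phrasly, GPTZero or any other checker actually works. Real tools presumably score text with a large language model. This version assumes a simple word-frequency model for perplexity and uses sentence-length variation as a stand-in for burstiness. All function names and sample sentences are ours.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def burstiness(text):
    """Sentence-length variation: standard deviation divided by the mean."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(tokenize(s)) for s in sentences]
    lengths = [n for n in lengths if n > 0]
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def unigram_perplexity(text, reference_text):
    """Perplexity of `text` under a word-frequency model of `reference_text`.

    Toy stand-in for a language model: higher values mean the wording is
    less predictable relative to the reference.
    """
    ref_counts = Counter(tokenize(reference_text))
    vocab_size = len(ref_counts) + 1  # one extra slot for unseen words
    total = sum(ref_counts.values())
    tokens = tokenize(text)
    if not tokens:
        return float("inf")
    log_prob = 0.0
    for tok in tokens:
        # Add-one smoothing so unseen words still get a small probability.
        log_prob += math.log((ref_counts[tok] + 1) / (total + vocab_size))
    return math.exp(-log_prob / len(tokens))


reference = "an exchange rate is the price of one currency in terms of another currency"
plain = "An exchange rate is the price of one currency."
playful = "Think tacos. How many tacos can I get for this burger, but with currencies instead of food?"

print("plain:  ", unigram_perplexity(plain, reference), burstiness(plain))
print("playful:", unigram_perplexity(playful, reference), burstiness(playful))
```

The playful sentence scores as less predictable than the plain definition because it uses words the reference never saw. Notice, too, that the plain definition is perfectly human yet highly predictable, which is exactly the kind of clear, concise writing a crude threshold could misread.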

False positives and negatives

- These tools are prone to errors in both directions.

- They might flag a brilliantly creative piece as “AI-generated” simply because it’s unique or because it trips overly simplified perplexity and burstiness thresholds.

- Worse, they could miss actual AI-generated content that’s been cleverly tweaked. It’s a coin toss and that’s not good enough for professional content evaluation.

‘Fingerprinting’ AI text

- Promising watermarking technology is being developed for AI-generated text, but it’s still in its infancy and must overcome some serious hurdles before becoming a foolproof solution.

- OpenAI recently announced that its team successfully developed a highly accurate text watermarking method. However, the company will not release the method to the public until it can address the method’s vulnerability to global tampering and its potential biases.

- The OpenAI team is also in the early stages of exploring cryptographically signed metadata as a text provenance technique, which would lead to zero false positives and be more efficient than standard watermarking – but this is currently more science fiction than fact.
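For a feel of why signed metadata could avoid false positives, here’s a minimal sketch of the general idea. It is not OpenAI’s design, which hasn’t been published in detail. We assume a shared-secret HMAC from Python’s standard library as a stand-in; a real provenance scheme would more likely use public-key signatures. The function names, the `generator` label and the key are all hypothetical.

```python
import hashlib
import hmac
import json


def sign_text(text, generator, secret_key):
    """Create a provenance record: metadata about the text plus a signature."""
    metadata = {
        "generator": generator,  # hypothetical label for the tool that produced the text
        "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
    }
    payload = json.dumps(metadata, sort_keys=True).encode("utf-8")
    signature = hmac.new(secret_key, payload, hashlib.sha256).hexdigest()
    return {"metadata": metadata, "signature": signature}


def verify_text(text, record, secret_key):
    """Return True only if the record is authentic and matches this exact text."""
    payload = json.dumps(record["metadata"], sort_keys=True).encode("utf-8")
    expected = hmac.new(secret_key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, record["signature"])
    text_hash = hashlib.sha256(text.encode("utf-8")).hexdigest()
    return signature_ok and record["metadata"]["sha256"] == text_hash


key = b"demo-secret-key"  # illustration only; never hard-code real keys
original = "An exchange rate is the price of one currency in another."
record = sign_text(original, "example-model", key)

print(verify_text(original, record, key))                # unchanged text
print(verify_text(original + " Edited.", record, key))   # text altered after signing
```

The check is a yes-or-no match instead of a statistical guess: text is only attributed to a generator when a valid record accompanies it, so human writing with no record is never flagged. The flip side in this sketch is that any edit to the text, or simply dropping the record, means nothing verifies at all.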

Lagging behind AI advancements

- LLMs are popping up everywhere and evolving at breakneck speeds while AI checkers struggle to keep up.

Ethical concerns

The problems with AI checkers go beyond technical issues. They raise serious ethical red flags:

Reputation damage

- A false positive from an AI checker can have serious consequences.

- Content creators might face lost credibility, damaged professional relationships and – in industries with strict regulatory requirements – even legal penalties.

- This creates an environment where genuine creativity can be unfairly penalized.

Bias and discrimination

- AI checkers can perpetuate biases, potentially discriminating against certain writing styles or voices.

- This could lead to a bland, homogenized internet where diverse voices are silenced. Is that the future of content we want?

Creativity killer

- When writers know these flawed tools will scrutinize their work, they might play it safe. No more creative risks, no more unique expressions.

- The result? Boring, formulaic content that no one wants to read.

Black box problem

- Many organizations rely on AI checkers without understanding how they work.

- Some AI detector companies aren’t even transparent about their tools’ operations.

Dig deeper: How to survive the search results when you’re using AI tools for content

The dangers of relying on AI checkers

Think AI checkers are just harmless tools? Beyond their technical limitations, over-reliance on these detectors can lead to unexpected and far-reaching consequences.

Skewed content strategies

Companies might become obsessed with “passing” AI checks, prioritizing this over actual SEO best practices.

It’s like optimizing your website for a search engine that doesn’t exist – you’re playing the wrong game entirely.

This misguided focus can lead to ignoring user intent. You might lose sight of what your audience wants when you’re too fixated on beating AI detectors.

Remember, your readers are human, not algorithms.

Legal and compliance risks

In regulated industries, relying solely on AI checkers for content approval could create a false sense of compliance, leading to overlooking crucial issues.

These tools aren’t designed to understand complex regulatory requirements.

Market and competitive disadvantages

Avoiding AI-assisted content creation due to fear of detection might cause you to miss out on efficiency gains that your competitors are leveraging.

Investing heavily in unreliable AI detection tools means less budget for things that actually improve your content, like subject matter expert (SME) writers and reviewers or professional editing.

Moreover, constantly running content through AI checkers and dealing with false positives can significantly slow down your content production pipeline.

Long-term industry impacts

As AI checkers become more prevalent, they could create an additional barrier to entry for new writers or smaller organizations trying to enter the content market.

There’s also a risk of organizations valuing the “ability to pass AI checks” over actual writing skills, unintentionally changing hiring practices.

If everyone’s trying to create content that passes the same AI checks, we risk an echo chamber effect, amplifying certain voices or styles while suppressing others. This would lead to a less diverse content ecosystem.

Remember, the goal isn’t to avoid AI altogether – it’s to use it responsibly while maintaining the human touch that makes content truly valuable.

Relying too heavily on AI checkers can paradoxically push us further from this goal.

So, what’s the alternative? How can we ensure content quality without falling into the AI checker trap?

Dig deeper: Is using AI-generated content for SEO plagiarism?

Alternative strategies for ensuring content quality

AI checkers aren’t the solution for analyzing content quality or origin. Let’s explore more effective strategies that actually work.

Embrace human expertise

There’s no substitute for skilled human editors. They catch nuances, inconsistencies and tone issues that AI checkers miss. Human experts can also better assess content for perplexity and burstiness.

For specialized content, involve SME writers or reviewers to ensure accuracy and depth. Their domain knowledge enables them to identify nuanced inaccuracies or oversimplifications that AI generators and checkers might miss.

Consider assembling diverse review teams to spot potential issues with tone, cultural sensitivity and audience relevance.

These teams can also evaluate how perplexity and burstiness manifest across different content types and subject areas, providing a more comprehensive assessment of content authenticity and quality.

Leverage more reliable tools

Plagiarism checkers like Copyscape remain valuable and trustworthy. While not perfect, they’re far more reliable than AI content detectors for identifying copied text.

Readability analyzers such as the Hemingway Editor help assess reading level, sentence structure and clarity, ensuring your content is accessible to your target audience.

SEO tools like Semrush Writing Assistant can also help you optimize for actual search engines and user intent, not AI detectors.

But there’s a caveat to these AI-powered readability and SEO tools, just like with AI detectors. Overreliance on them to make copy changes could also lead to homogenization in writing, especially regarding sentence flow and word choice.

When everyone uses the same tools to gauge writing quality and effectiveness, it can water down content all the same. Expert people are important, people!

Implement robust processes

Develop comprehensive content guidelines that reflect your brand voice, style and quality standards. This will provide a consistent framework for both human-written and AI-assisted content.

Implement a tiered review process, starting with self-editing, moving to peer review and then to professional editing. It’s also important to regularly collect and analyze user feedback on your content to gauge its effectiveness and value.

Focus on value and originality

Prioritize original research by conducting your own surveys, interviews or data analysis. This ensures uniqueness and adds real value for your audience.

Whether using AI assistance or not, always incorporate unique insights and experiences. Focus on creating fewer pieces of higher quality rather than churning out content at scale just to feed the algorithm.

Dig deeper: AI can’t write this: 10 ways to AI-proof your content for years to come

Educate your content team

Ensure your content team understands AI’s capabilities and limitations in content creation. Develop clear policies on how and when to use AI in your content process.

Also, stay updated on AI developments and best practices in content creation and SEO, as things are changing rapidly.

The bigger picture

Despite their good intentions, AI checkers often produce unreliable results due to their reliance on pattern recognition rather than true understanding.

The potential for false positives and negatives, along with ethical concerns, makes them an imperfect solution for reviewing content quality.

Instead of relying on them, we’ve outlined more effective methods for ensuring content quality, including using established plagiarism checkers and involving human expertise in the content creation and review process.

Our experience with MediaFeed also demonstrates the value of transparency and a balanced approach that combines AI assistance with human creativity.

Moving forward, it’s crucial to approach AI in content creation with a balanced perspective.

AI tools can significantly enhance content when used responsibly, but they should be seen as aids to human creativity rather than replacements for it.

The goal is to find a middle ground where technology enhances our creative capabilities.

As content creators, marketers and business leaders, we must also stay informed about AI developments and continually refine our strategies. We should be open to leveraging new technologies while recognizing the enduring value of human expertise, creativity and ethical judgment.

The future of content creation lies in our ability to thoughtfully integrate AI tools into a human-led creative process.

This will enable us to produce content that performs well in search rankings and genuinely serves and engages our audiences – the true measure of content success.

Dig deeper: Generative AI to create content: To use or not to use it?

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.

Source link : Searchengineland.com