Updated: July 29, 2023.

Learn what it means and whether it is an issue if your XML sitemap is not indicated in robots.txt.

Sitemaps and robots.txt files both serve important purposes for search engine crawling and indexation.

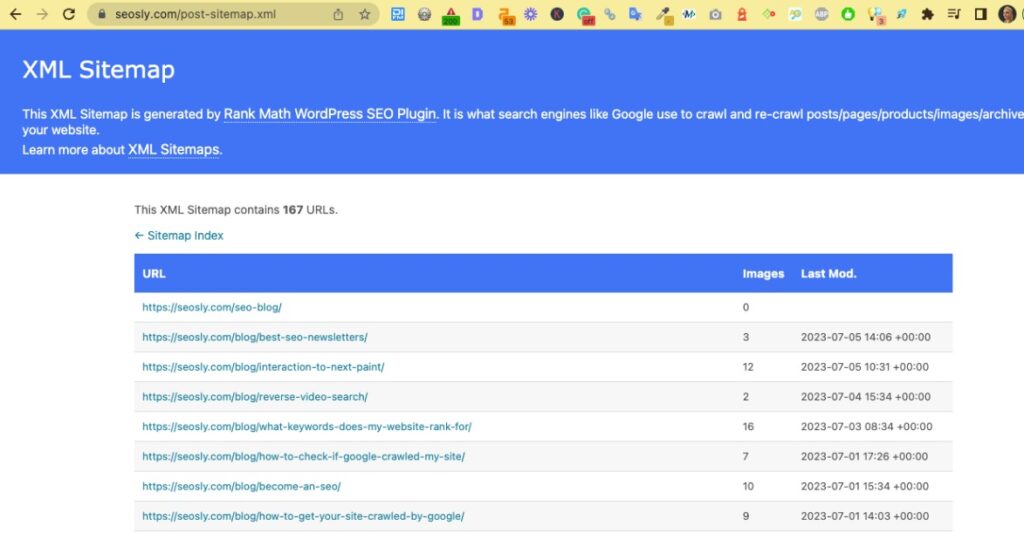

A sitemap is an XML file that lists the pages of a website you want search engines to crawl and index. It helps search engines like Google crawl a site more efficiently by telling them which URLs exist. Sitemaps are especially useful for sites with a large number of pages.
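To make the format concrete, here is a minimal Python sketch that parses a small sitemap and lists the URLs in its `<loc>` elements. The sample sitemap and the example.com URLs are placeholders; the namespace is the one defined by the sitemaps.org protocol, and `list_sitemap_urls` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol (version 0.9).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# A minimal example sitemap; the example.com URLs are placeholders.
SAMPLE_SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.example.com/</loc>
    <lastmod>2023-07-29</lastmod>
  </url>
  <url>
    <loc>https://www.example.com/blog/</loc>
  </url>
</urlset>"""


def list_sitemap_urls(xml_text: str) -> list[str]:
    """Return every <loc> value found in a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


for url in list_sitemap_urls(SAMPLE_SITEMAP):
    print(url)
```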

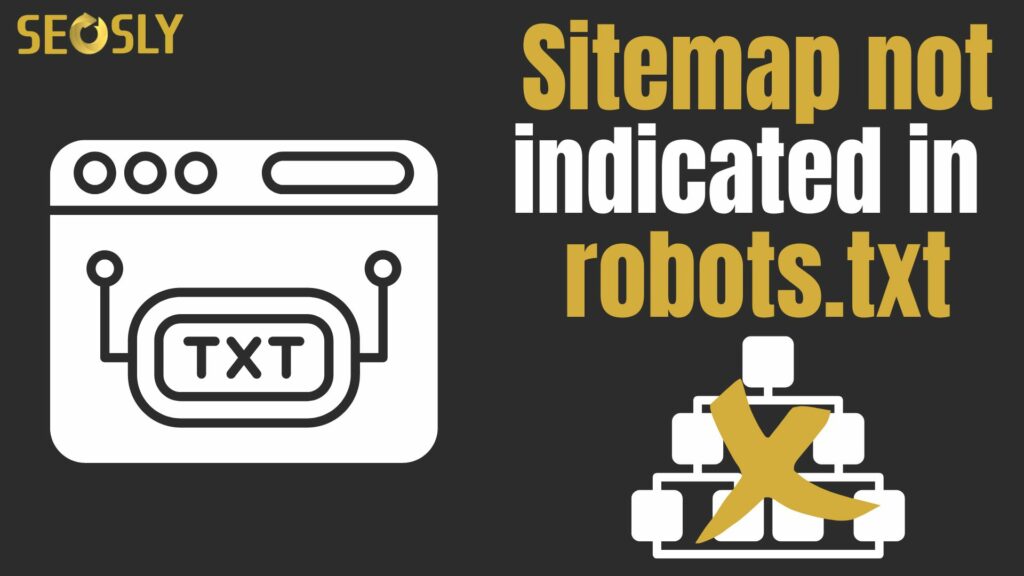

The robots.txt file gives crawling instructions to search bots. It can list parts of a site that bots should not crawl (it is not meant as a tool for keeping pages out of the index). The robots.txt file also commonly indicates the location of the sitemap with a line like:

Sitemap: https://www.example.com/sitemap.xml

However, many sites omit a sitemap reference in their robots.txt file altogether. In some cases this can lead to issues with indexation, but other times it may not matter.
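If you want to check whether a given robots.txt declares a sitemap, Python's standard library can do it: `urllib.robotparser.RobotFileParser.site_maps()` (Python 3.8+) returns the URLs from any `Sitemap:` lines, or `None` if there are none. The robots.txt contents below are placeholders, and `declared_sitemaps` is a hypothetical wrapper.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt contents; the paths and URLs are placeholders.
ROBOTS_WITH_SITEMAP = """\
User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""

ROBOTS_WITHOUT_SITEMAP = """\
User-agent: *
Disallow: /admin/
"""


def declared_sitemaps(robots_txt: str) -> list[str]:
    """Return the Sitemap URLs declared in a robots.txt body (possibly empty)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


print(declared_sitemaps(ROBOTS_WITH_SITEMAP))     # ['https://www.example.com/sitemap.xml']
print(declared_sitemaps(ROBOTS_WITHOUT_SITEMAP))  # []
```

To check a live site instead, you could call `set_url()` with the site's robots.txt address and `read()` before `site_maps()`.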

In this article, we’ll look at when the omission of a sitemap in robots.txt can be problematic versus when it is likely fine.

TL;DR: Sitemap.xml not indicated in robots.txt

When your site should have the sitemap indicated in robots.txt

The robots.txt file serves as the instruction manual for search engine crawlers visiting a website. It is typically the very first file they request, to determine which parts of the site they are allowed to crawl.

By listing the sitemap location in robots.txt, you notify search bots of its existence as soon as they start crawling. This can make the sitemap one of the first URLs they fetch and parse.

Having the sitemap declared in robots.txt tends to be most crucial for:

- Large websites with extensive content – Sitemaps allow more efficient discovery of all the URLs on sites with thousands or millions of pages. Omitting it risks slower indexation.

- Sites that add or update content frequently – With the sitemap declared upfront, new and revised pages can be discovered faster each time the site is re-crawled.

- Brand new websites still gaining initial indexation – Including the sitemap URL helps search bots discover a new site’s initial content and start indexing it more quickly.

- Websites recovering from major indexing issues – Re-adding the sitemap after problems like ******* can help regain lost pages (though recovery involves much more nuance than that).

- Sitemaps located at non-standard addresses – Without a reference in robots.txt, bots may not find sitemaps at unusual locations like /xml-sitemap.xml.

- Any type of site whose sitemap hasn’t been submitted in Search Console – The robots.txt reference provides redundancy if the sitemap hasn’t been registered directly with Google.
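Search engines don't publish exactly how they find sitemaps that aren't declared anywhere, so the sketch below is a simplified, hypothetical model of why non-standard locations are risky: a crawler that reads `Sitemap:` lines from robots.txt and, failing that, probes only the conventional /sitemap.xml path. The function `candidate_sitemaps` and the fallback behavior are assumptions for illustration, not how any particular search engine works.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser

# Hypothetical fallback: the conventional location a bot might probe
# when robots.txt declares nothing. Real crawlers' logic is not public.
CONVENTIONAL_PATH = "/sitemap.xml"


def candidate_sitemaps(site_root: str, robots_txt: str) -> list[str]:
    """Sitemap URLs this hypothetical crawler would know to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    found = parser.site_maps()
    if found:
        return list(found)
    # Nothing declared: fall back to guessing the conventional path only.
    return [urljoin(site_root, CONVENTIONAL_PATH)]


site = "https://www.example.com/"
real_location = "https://www.example.com/xml-sitemap.xml"

robots_without = "User-agent: *\nDisallow:\n"
robots_with = robots_without + f"\nSitemap: {real_location}\n"

print(real_location in candidate_sitemaps(site, robots_without))  # False
print(real_location in candidate_sitemaps(site, robots_with))     # True
```

Under this model, the sitemap at /xml-sitemap.xml is only found when robots.txt points to it.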

The common thread is that declaring the sitemap in robots.txt makes search engines explicitly aware of it, so bots can fetch and parse it early when they first crawl a website. A sitemap can also be submitted directly in Search Console, but that only informs Google; the robots.txt reference reaches any crawler that honors the Sitemap directive, including new bots, on every future re-crawl.

When your site doesn’t need to have the XML sitemap indicated in robots.txt

For some websites, it is not crucial to have the sitemap URL declared in the robots.txt file. Omitting it may cause no issues at all.

Types of sites that likely do not require a sitemap reference in robots.txt include:

- Small websites with very few pages – On sites with only a handful of pages, search bots can discover and index the content without a sitemap.

- Websites with mostly static, unchanging content – If content stays the same over long periods, periodic re-crawls matter less, so faster discovery via a sitemap offers little benefit.

- Well-established sites that are already fully indexed – On ****** sites with 1,000+ pages that bots are already crawling, a sitemap may provide little added value.

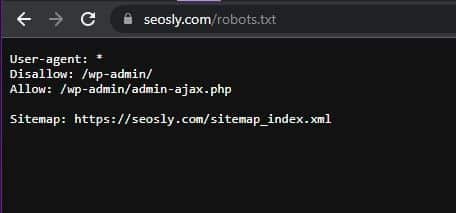

- Sites whose sitemaps are properly submitted in Search Console – As long as Google knows about the sitemap directly and is actively crawling it, redundancy in robots.txt is less necessary.

- Low-priority content that doesn’t need indexing – For sites not concerned with exhaustive indexing, a sitemap may not matter.

What these sites have in common is a reduced need for the fast discovery and change monitoring that declaring a sitemap in robots.txt facilitates.

As long as Google is sufficiently crawling new and existing content via regular periodic scans, the omission may have minimal impact.

However, adding the sitemap reference is still good practice when possible. There are few downsides, and it provides benefits like redundancy and faster crawl prioritization.
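If you manage robots.txt as a file in your deployment, a small script can add the reference without creating duplicates. The helper `ensure_sitemap_directive` below is hypothetical and only edits text; how you publish robots.txt at the site root depends on your setup. It relies on the sitemaps.org rule that the Sitemap directive is independent of User-agent groups, so appending it at the end of the file is valid.

```python
def ensure_sitemap_directive(robots_txt: str, sitemap_url: str) -> str:
    """Return robots.txt content that declares sitemap_url exactly once.

    Hypothetical helper: it edits the text only; uploading the result
    to the site root is left to your deployment process.
    """
    for line in robots_txt.splitlines():
        # Drop any comment, then compare the field name case-insensitively.
        field, _, value = line.split("#", 1)[0].partition(":")
        if field.strip().lower() == "sitemap" and value.strip() == sitemap_url:
            return robots_txt  # already declared; leave the file untouched
    # Sitemap lines are not tied to a User-agent group, so appending works.
    if robots_txt and not robots_txt.endswith("\n"):
        robots_txt += "\n"
    return robots_txt + f"Sitemap: {sitemap_url}\n"


original = "User-agent: *\nDisallow: /admin/\n"
updated = ensure_sitemap_directive(original, "https://www.example.com/sitemap.xml")
print(updated)
# Running it again does not add a duplicate line.
print(ensure_sitemap_directive(updated, "https://www.example.com/sitemap.xml") == updated)  # True
```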

Bonus: Sitemap tips & best practices

Here are some best practices for XML sitemaps:

- Ensure the sitemap contains valid URLs – Avoid including 404s, redirected URLs, or any other URLs that don’t resolve to a live page. For massive sites, invalid URLs in a sitemap can become a crawl budget issue and create indexing problems.

- Submit to both Google and Bing – Submit your sitemap in Google Search Console and Bing Webmaster Tools. This lets you monitor when each engine last accessed your sitemap and whether it encountered any errors trying to crawl it. Don’t neglect Bing!

- Consider an HTML sitemap – An HTML sitemap helps users better navigate your site visually. If crafted properly with contextual anchor text links, it may also help search engines better understand your site’s content structure.

- Avoid multiple conflicting sitemaps – If your CMS (such as WordPress) generates sitemaps automatically, ensure there aren’t multiple or outdated versions floating around. For WordPress sites, a plugin like Rank Math is recommended for properly generating and managing sitemaps.

- Regularly update your sitemap – As site content changes, keep the sitemap updated so search engines are informed about new and modified pages. In most cases, this should happen automatically.

- Limit by lastmod **** if recrawling all pages is excessive – For large sites, listing all URLs may exceed crawl budget. Filter to only recently updated pages.

- Compress the XML file to optimize crawl impact – Reduce bandwidth and transfer time by gzip-compressing your sitemap (for example, serving it as sitemap.xml.gz).
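Several of these practices can be combined when generating a sitemap. The Python sketch below assumes you already have a list of page records with an observed HTTP status and a last-modified date (for example, exported from your CMS or a site crawl); the `Page` record, `build_sitemap`, and the sample URLs are hypothetical. It keeps only URLs that returned 200, optionally limits the output by lastmod, and gzip-compresses the result, which the sitemaps.org protocol permits.

```python
import gzip
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from datetime import date
from typing import Optional

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    # Hypothetical record, e.g. exported from a CMS or a site crawl.
    url: str
    status: int    # HTTP status code observed for the URL
    lastmod: date  # date the content last changed


def build_sitemap(pages: list[Page], modified_since: Optional[date] = None) -> bytes:
    """Build sitemap XML containing only live (HTTP 200) URLs.

    If modified_since is given, only pages changed on or after that
    date are kept.
    """
    urlset = ET.Element("urlset", xmlns=NS)
    for page in pages:
        if page.status != 200:
            continue  # skip 404s, redirects, and other non-200 URLs
        if modified_since is not None and page.lastmod < modified_since:
            continue  # older than the lastmod cutoff
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page.url
        ET.SubElement(url, "lastmod").text = page.lastmod.isoformat()
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


pages = [
    Page("https://www.example.com/", 200, date(2023, 7, 29)),
    Page("https://www.example.com/old-post/", 301, date(2021, 1, 5)),
    Page("https://www.example.com/missing/", 404, date(2022, 3, 1)),
    Page("https://www.example.com/guide/", 200, date(2022, 11, 2)),
]

xml_bytes = build_sitemap(pages)
# Gzip-compressed bytes you could publish as sitemap.xml.gz.
compressed = gzip.compress(xml_bytes)
print(xml_bytes.decode("utf-8"))
```

Whether a lastmod cutoff makes sense depends on the site; leaving `modified_since` unset keeps every live URL.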
