Posted by Marco Giordano

SEO is increasingly shaped by semantic SEO, Natural Language Processing (NLP), and programming languages. Python in particular is a huge help for optimization and for automating most of the boring tasks in your daily work.

Don’t worry: coding can seem daunting at first, but it’s far more straightforward than you think thanks to some specialized libraries.

We have already discussed semantic search and topical authority. Python is a good way to uncover new insights and to run calculations faster than the usual Excel workflow.

It’s no secret that Google relies heavily on NLP to retrieve results. That is the main reason why we are interested in how Google processes natural language: it gives us more clues about how to improve our content.

This post covers:

- The main semantic SEO tasks you can carry out in Python

- Code snippets on how to implement them

- Short practical examples to get you started

- Use cases and the motivations behind them

- Pitfalls and traps of blindly copy-pasting code for decision making

Note that this tutorial is intended to show a non-technical audience how to leverage Python. We therefore won’t go into detail on each technique, as that would take too long.

The examples listed are just one part of the plethora of techniques you can implement in a programming language. I am just listing what I consider to be the most relevant for people who are starting out and have an interest in SEO.

The goal is to illustrate the benefits of adding Python into your workflow to get an edge for Semantic SEO tasks, like extracting entities, analyzing sentences, or optimizing content.

No particular Python knowledge is required beyond a few basic concepts. The examples are shown in this Google Colab notebook, as it is easy and quick to use.

NLP and Semantic SEO tasks in Python

There are many programming languages you can learn; JavaScript and Python are the most suitable for SEO specialists. Some of you may ask why we are choosing Python over R, a popular alternative for data science.

The main reason lies in the SEO community, which is more comfortable with Python, the ideal language for scripting, automation, and NLP tasks.

You can pick whatever you like, even though we will only show Python in this tutorial.

Named Entity Recognition (NER)

One of the most important concepts for SEO is the ability to recognize entities in a text, i.e. Named Entity Recognition (NER). You may ask yourself why you should care about this technique if Google already does it.

The idea here is to find out which entities are most common on a given page, so that you understand what to include in your own text.

You can use either spaCy or Google’s Natural Language API for this task. Both have advantages and disadvantages; this example uses spaCy, a very popular NLP library that is well suited to NER.
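As a rough sketch of the idea (not necessarily the exact code in the notebook), the snippet below extracts entities with spaCy and counts the most frequent ones. It assumes the small English model `en_core_web_sm` has been downloaded; the helper names `extract_entities` and `most_common_entities` are made up for illustration.

```python
from collections import Counter


def extract_entities(text, model_name="en_core_web_sm"):
    """Return (entity text, entity label) pairs found by spaCy."""
    import spacy  # imported lazily so the counting helper works without spaCy

    nlp = spacy.load(model_name)  # assumes the model was downloaded beforehand
    doc = nlp(text)
    return [(ent.text, ent.label_) for ent in doc.ents]


def most_common_entities(entities, top_n=10):
    """Count (text, label) pairs case-insensitively and return the top_n."""
    counts = Counter((text.strip().lower(), label) for text, label in entities)
    return counts.most_common(top_n)
```

After running `python -m spacy download en_core_web_sm`, you could call `most_common_entities(extract_entities(page_text))` on the text of a competitor page. Lowercasing before counting is a design choice here, so that "Google" and "google" are treated as the same entity.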

As you can see in the notebook, your text is now labeled with the entities, and this is very good to get a grasp of what your competitors are using. Ideally, you can combine this with scraping to extract the meaningful part of text and list all the entities.

This can also be extended to an entire SERP to get the most useful entities and understand what to include in your copy. However, there is another useful application: you can scrape a Wikipedia page to get the list of entities and then create a topical map based on what you have found.

It works very well with long Wikipedia pages and in English-speaking markets. I have tested it in other languages, but Wikipedia usually isn’t as complete there.

NER is a basic technique with interesting applications and I can guarantee you it’s a game-changer when used correctly. It is ideal for scenarios where you don’t know which entities to put in an introduction and need to find out, or for planning topical maps.

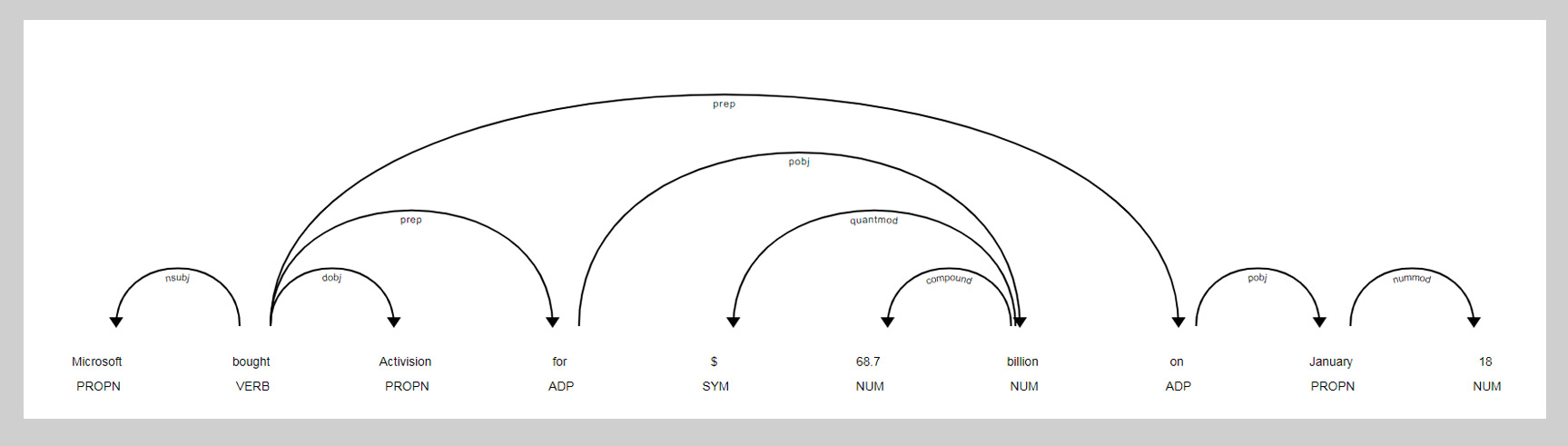

Part-of-speech tagging (POS tagging)

Semantic SEO pays particular attention to the part of speech each term takes in a sentence. As some of you may have already guessed, the position of a word can change its importance when extracting entities.

POS tagging is handy when analyzing competitors or your own website, either to understand the structure of definitions for featured snippets or to get more insight into an ideal sentence order.

Python offers strong support for this task, again through the spaCy library, your best friend for most NLP tasks.
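Here is a minimal sketch of POS tagging with spaCy, again assuming the `en_core_web_sm` model is installed. The function names are hypothetical. `pos_pattern` simply collapses a tagged sentence into a string of tags, which makes it easier to compare how different definitions are structured.

```python
def tag_parts_of_speech(sentence, model_name="en_core_web_sm"):
    """Return (token, coarse POS tag) pairs for a sentence."""
    import spacy  # lazy import: only needed when actually tagging

    nlp = spacy.load(model_name)  # assumes the model is already downloaded
    return [(token.text, token.pos_) for token in nlp(sentence)]


def pos_pattern(tagged_tokens, skip=("PUNCT", "SPACE")):
    """Collapse tagged tokens into a pattern string, ignoring punctuation."""
    return " ".join(pos for _, pos in tagged_tokens if pos not in skip)
```

You could run `pos_pattern(tag_parts_of_speech(sentence))` over the opening sentence of several pages that own a featured snippet and check whether the same pattern keeps coming back.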

To sum up, POS tagging is a powerful way to understand how you can improve your sentences, based on existing material or on how other people write them.

Query the Knowledge Graph

As already discussed in another article about the Knowledge Graph, you should be comfortable with entities and making connections. With that in mind, knowing how to query Google’s Knowledge Graph is quite useful, and quite simple too.

The advertools library offers a simple function that does this, taking your API key as input. The result is a dataframe containing some entities related to your query (if any), along with a confidence score that you don’t have to interpret.
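To make it clearer what such a query involves, here is a dependency-free sketch that calls the Knowledge Graph Search API endpoint directly instead of going through advertools. The helper names are hypothetical, and you still need your own API key. The fields read from the response (`itemListElement`, `result`, `resultScore`) are the ones the API returns.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

KG_ENDPOINT = "https://kgsearch.googleapis.com/v1/entities:search"


def build_kg_url(query, api_key, limit=5, language="en"):
    """Build the request URL for the Knowledge Graph Search API."""
    params = {"query": query, "key": api_key, "limit": limit, "languages": language}
    return f"{KG_ENDPOINT}?{urlencode(params)}"


def parse_kg_response(payload):
    """Flatten the JSON response into a list of simple dictionaries."""
    rows = []
    for item in payload.get("itemListElement", []):
        result = item.get("result", {})
        rows.append({
            "name": result.get("name"),
            "types": result.get("@type", []),
            "description": result.get("description"),
            "score": item.get("resultScore"),
        })
    return rows


def query_knowledge_graph(query, api_key, limit=5):
    """Send the request and return the parsed entities (needs network access)."""
    with urlopen(build_kg_url(query, api_key, limit)) as response:
        return parse_kg_response(json.load(response))
```

The list of dictionaries can be passed straight to `pandas.DataFrame(...)` if you prefer a dataframe like the one advertools gives you.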

The useful lesson here is to get definitions and related entities, if any. The Knowledge Graph is one big database storing entities and their relationships. It’s Google’s way of understanding connections and the root of Semantic SEO. In fact, this is one of the prerequisites for achieving topical authority in a long-term strategy.

Sometimes the Knowledge Graph alone is not enough, and that is why I am going to show you another API that works well in tandem with it.

Query Google Trends (unofficial) API

Google Trends can be part of your content strategy to spot new trends or to assess whether it’s worth covering a topic you are not so sure about. Let’s say you want to expand your content network with new ideas but you are not convinced: Google Trends can lend you a hand in deciding.

Although there is no official Google API, we can use an unofficial one that covers what we want. The key here is to give a list of keywords, select a timeframe and pick a location.

Top and rising keywords are great for understanding what we need for our content strategy.

Rising refers to new trends and queries you have to keep an eye on. Sometimes you can find golden opportunities, especially if your focus is News SEO.

By contrast, Top keywords are more consistent and stable over time, and in most cases they give you hints about your topical maps.

My recommendation is to play with this API if you are working in the eCommerce world as well, due to seasonal sales. Google Trends is a tremendous advantage for news and seasonal content, and the API can only make the experience better for you.
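The workflow described above (keywords, timeframe, location, then top and rising queries) can be sketched as follows. I am assuming the unofficial client is `pytrends`; the helper names and default values are illustrative only. Google Trends compares at most five terms per request, so longer lists are split into batches first.

```python
def chunk_keywords(keywords, size=5):
    """Split keywords into batches of at most `size` terms."""
    return [keywords[i:i + size] for i in range(0, len(keywords), size)]


def related_queries(keywords, timeframe="today 12-m", geo="US"):
    """Return {keyword: {"top": DataFrame, "rising": DataFrame}} via pytrends."""
    from pytrends.request import TrendReq  # unofficial client, installed separately

    pytrends = TrendReq(hl="en-US", tz=360)
    results = {}
    for batch in chunk_keywords(keywords):
        # One payload per batch: keyword list, timeframe and location
        pytrends.build_payload(batch, timeframe=timeframe, geo=geo)
        results.update(pytrends.related_queries())
    return results
```

For a single keyword, `related_queries(["semantic seo"])["semantic seo"]["rising"]` would give you the rising queries, when Google returns any. Since the client is unofficial, expect occasional rate limiting.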

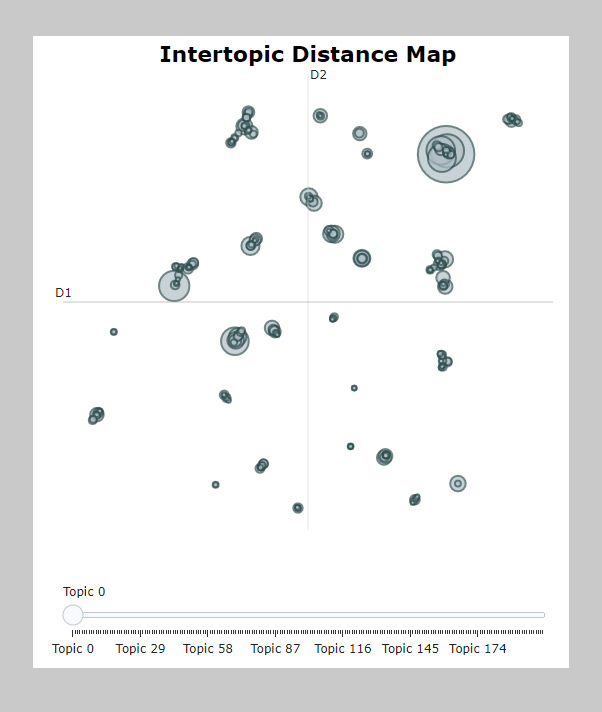

Topic modeling (Latent Dirichlet Allocation – LDA)

One of the most interesting applications of NLP is topic modeling, which means recognizing topics from a set of words. This is a good way to see what a large page talks about and whether it is possible to spot subtopics. It is possible to run this algorithm on an entire website, although that would be computationally expensive and is out of the scope of this tutorial.

I show you a short example with the BERTopic library, to simplify our workflow. Note that BERTopic groups documents using transformer embeddings rather than running LDA itself; classic LDA is available in libraries such as gensim and scikit-learn.
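If you want to try classic LDA, a minimal sketch with scikit-learn could look like the one below. This is an assumption on my side and may differ from what the notebook does. The function names and the number of topics are placeholders you should adapt to your own corpus.

```python
def top_terms(weights, vocabulary, n=5):
    """Return the n vocabulary terms with the highest weights for one topic."""
    ranked = sorted(zip(weights, vocabulary), key=lambda pair: pair[0], reverse=True)
    return [term for _, term in ranked[:n]]


def lda_topics(documents, n_topics=3, n_terms=5):
    """Fit LDA on raw documents and return the top terms of each topic."""
    from sklearn.decomposition import LatentDirichletAllocation
    from sklearn.feature_extraction.text import CountVectorizer

    # LDA works on raw word counts, so a bag-of-words matrix is built first
    vectorizer = CountVectorizer(stop_words="english")
    counts = vectorizer.fit_transform(documents)

    lda = LatentDirichletAllocation(n_components=n_topics, random_state=0)
    lda.fit(counts)

    vocabulary = vectorizer.get_feature_names_out()
    return [top_terms(topic, vocabulary, n_terms) for topic in lda.components_]
```

Each document could be a page, or a section of a long page. With only a handful of short documents the topics will be noisy, so treat the output as a hint rather than a verdict.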

Topic modeling is a very underrated method to evaluate a content network or even sections of a given website, and that is why you should spend quite some time going deeper with LDA!

To sum up, LDA is one way to judge an entire website or just some of its sections. It can therefore be considered a method to understand the content of competitors in your niche, provided that you have enough computational power.

N-grams

An n-gram can be thought of as a contiguous sequence of words, syllables, or letters. I will show you how to create n-grams from a corpus in Python without going into much detail.

Our unit here will be words, since we are interested in knowing which combinations of words are most common in a corpus.

N-grams of two words are called bigrams, those of three words trigrams, and so on. You can check the Colab notebook to get an idea of what we are trying to obtain.
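Counting word n-grams needs nothing beyond the standard library. The sketch below uses a deliberately simple tokenizer (lowercase letters, digits, and straight apostrophes), which is an assumption; a real project may want a proper tokenizer and stop-word handling.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def ngram_counts(text, n=2):
    """Count contiguous sequences of n words in the text."""
    tokens = tokenize(text)
    # zip over n shifted copies of the token list to get sliding windows
    grams = zip(*(tokens[i:] for i in range(n)))
    return Counter(" ".join(gram) for gram in grams)
```

Calling `ngram_counts(page_text, n=2).most_common(20)` lists the twenty most frequent bigrams; change `n` to 3, 4, or 5 for longer combinations.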

Now you have a clear idea of the most common combinations in a text and you are ready to optimize your content. You can try different combinations as well, like 4-grams or 5-grams. Since Google relies on phrase-based indexing, it is more beneficial to consider sentences rather than keywords when talking about on-page SEO.

This is another reason why you should never think in terms of individual keywords, but rather keep in mind that your text should be suitable for human readers. And what is better than optimizing entire sentences rather than some terms?

N-grams are a recurring concept in NLP, and for a good reason. Test the script with some pages and try different combinations. The goal here is to find valuable information.

Text generation

The current SEO buzz revolves around generated content, and there are a lot of online tools that allow you to create text automatically. This isn’t as easy as it seems, and the material still needs editing before going live.

Python is capable of generating content or even short snippets, but if you want the easy way it is highly recommended to rely on tools.

I am going to show you a simple example with the openai library. The steps to create an account are in Google Colab.
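The openai library has changed over time, so the notebook may use an older interface. As a hedged sketch with the current client, generating a short introduction could look like this. The model name, the prompt, and the helper names are assumptions, and the client expects your API key in the `OPENAI_API_KEY` environment variable.

```python
def build_messages(topic, audience="SEO beginners"):
    """Build the chat messages sent to the model (prompt wording is illustrative)."""
    return [
        {"role": "system", "content": "You are a concise copywriter."},
        {
            "role": "user",
            "content": f"Write a two-sentence introduction about {topic} for {audience}.",
        },
    ]


def generate_intro(topic, model="gpt-4o-mini", temperature=0.7):
    """Ask the model for a short introduction and return the generated text."""
    from openai import OpenAI  # reads OPENAI_API_KEY from the environment

    client = OpenAI()
    response = client.chat.completions.create(
        model=model,  # assumed model name, swap in whichever one you have access to
        messages=build_messages(topic),
        temperature=temperature,  # higher values give more varied text
        max_tokens=120,
    )
    return response.choices[0].message.content
```

`temperature` and `max_tokens` are the parameters most worth experimenting with to see how the output changes.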

As you can see, the code here is pretty easy and there is nothing in particular to comment on. You may want to toy with some parameters to check the difference in results, but if you want to generate content there are services that require no coding.

In fact, you will need to pay to use OpenAI, so if you just want to get the job done it may be more suitable to opt for other paid services.

Clustering

Clustering is a very useful application in SEO and one of the most important techniques in general for adding value to your workflow. If you are short on time, focus on this first, as it is quite robust for eCommerce and is a godsend for spotting new categories of products.

Clustering groups similar items together in order to highlight patterns that you would not normally be able to see. It’s a powerful set of techniques, but it’s not so easy to produce meaningful results with them. For this reason, I will provide a quick example that aims to show the code for one algorithm and a potential drawback of applying it incorrectly.

Content clustering is a topic that definitely requires a separate tutorial, as some of its concepts are quite tricky to grasp.

You can use either Rank Ranger rank tracking data or Google Search Console data; it doesn’t matter at all. What is important is that you are saving time and gaining new insights, even if you have zero knowledge about a website.

There are plenty of Python scripts, notebooks, or even Streamlit apps available online. This section is just to teach you the basics.

We can say that it is the best tool in your arsenal when it comes to eCommerce or for finding unexplored topics on your website. It’s easy to confuse clustering with topic modeling because both of them lead to a similar output. However, recall that for clustering we are talking about grouping keywords and not text. This is a key difference.

Clustering is extremely valuable for those working with category pages and for anyone trying to spot new content opportunities. The opportunities here are almost infinite and you also have several options. Let’s dive into some algorithms:

- K-means

- DBSCAN

- Using graphs

- Word Mover’s Distance

If you are just beginning, the best advice is to start with either K-means or DBSCAN. The latter doesn’t require you to find the optimal number of clusters, and for this reason it is more suitable for plug-and-play uses.

Using graphs is a method to capture semantic relationships and also a great way to start thinking in terms of knowledge graphs. Other methods like Word Mover’s Distance are excellent, but they are complex and take too much effort for simpler tasks.
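To give you a feel for the simplest option, here is a minimal K-means sketch with scikit-learn that groups keywords by their TF-IDF vectors. The function names and the default number of clusters are assumptions; choosing `n_clusters` badly is exactly the kind of drawback mentioned earlier, because K-means will always return that many groups whether or not they make sense.

```python
from collections import defaultdict


def group_by_label(keywords, labels):
    """Turn parallel lists of keywords and cluster labels into {label: [keywords]}."""
    clusters = defaultdict(list)
    for keyword, label in zip(keywords, labels):
        clusters[int(label)].append(keyword)
    return dict(clusters)


def cluster_keywords(keywords, n_clusters=3):
    """Cluster keywords with K-means on TF-IDF vectors (needs scikit-learn)."""
    from sklearn.cluster import KMeans
    from sklearn.feature_extraction.text import TfidfVectorizer

    # Represent each keyword by the words and word pairs it contains
    vectors = TfidfVectorizer(ngram_range=(1, 2)).fit_transform(keywords)

    model = KMeans(n_clusters=n_clusters, n_init=10, random_state=0)
    labels = model.fit_predict(vectors)
    return group_by_label(keywords, labels)
```

The keyword list can come from a Google Search Console export or from rank tracking data. If you swap `KMeans` for `DBSCAN`, `group_by_label` still works; DBSCAN assigns the label -1 to keywords it considers noise.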

Benefits of using Python for semantic SEO

Python is not a must for everyone; it depends on your background and on what you want to be. Semantic SEO is the best approach you can take right now and knowing some basic coding can help you a lot, especially for learning some concepts.

There are some tools suitable for these tasks that can save you a lot of time and headaches. Nonetheless, implementing code from scratch and problem-solving are desirable skills that can only get more valuable as SEO moves to a more technical reality.

Moreover, you definitely need coding for carrying out certain tasks, as there are no viable alternatives.

It is possible to summarize Python benefits for semantic SEO as follows:

- A better understanding of theoretical concepts (e.g. linguistics, computations, and logic)

- Opportunity to study algorithms practically

- Automation of otherwise impossible tasks

- New insights and different perspectives on SEO

What is listed above can be applied to any other programming language. We are mentioning Python because it is the most popular in the SEO community as of now.

How much time will it take?

There is no accurate answer to that; it depends on your consistency and background. My suggestion is to do a little every day until you feel comfortable. There are a lot of good resources online, so there is no excuse not to start practicing.

Nonetheless, learning Python is one thing; studying NLP and Semantic SEO is a different story. It is highly recommended to understand the basic theory first and to reinforce it with regular practice.

Most useful Python libraries for Semantic SEO

There are more libraries than you might think, the most notable being:

- advertools

- spaCy

- nltk

- sklearn

- transformers

- querycat

- gensim

- BERTopic

Some of them were not examined in this tutorial as they would involve more complex concepts that require separate articles. Moreover, almost all of them are also used for general NLP tasks.

Closely related to these libraries is web scraping, which can be easily done with the support of libraries such as BeautifulSoup, Requests and Scrapy.
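To show what "extracting the meaningful part of a page" means in practice, here is a dependency-free sketch that pulls the paragraph text out of an HTML string. It uses Python's built-in `html.parser` as a stand-in for BeautifulSoup, and the class and function names are made up for illustration. In a real project, the dedicated libraries handle messy markup far better.

```python
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Collect the text found inside <p> tags."""

    def __init__(self):
        super().__init__()
        self._inside = False
        self._buffer = []
        self.paragraphs = []

    def flush(self):
        """Store the buffered paragraph text, normalizing whitespace."""
        text = " ".join("".join(self._buffer).split())
        if text:
            self.paragraphs.append(text)
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self.flush()  # an unclosed <p> ends where the next one starts
            self._inside = True

    def handle_endtag(self, tag):
        if tag == "p" and self._inside:
            self.flush()
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self._buffer.append(data)


def extract_paragraphs(html):
    """Return the list of paragraph texts contained in an HTML string."""
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()  # deliver any text still pending in the parser
    parser.flush()  # keep a trailing paragraph that was never closed
    return parser.paragraphs
```

The resulting paragraphs can then be joined and passed to an entity extractor or an n-gram counter. Fetching the HTML itself is left out on purpose, since that is the part where Requests or Scrapy come in.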

Conclusion

We have gone through some of the best NLP techniques that you can implement in Python to boost your semantic SEO game.

An SEO Specialist doesn’t need extensive knowledge of Data Science to make sense of most of the material mentioned here. However, you should know how algorithms work at a high level and how to interpret the output, to avoid drawing the wrong conclusions!