The importance of future-proofing content

To clarify, future-proofing your website doesn’t mean that once a page has gone live, you’ll never need to touch it again. It’s about creating content you can build on, refresh and repurpose, rather than needing to cull it down the line. It’s also about ensuring that the content you publish ranks organically near the top of the search engine results pages (SERPs) for a sustained period and doesn’t simply get lost in a sea of similar content.

Some brands are still guilty of the ‘spray and pray’ approach to content production, whereby they publish a high number of pages on the assumption that a percentage of them will be successful. This approach is already outdated: it’s now the quality, not the quantity, of content that reaps the rewards.

Historically, Google has rolled out core updates every few months, most of which assess the value, originality and user experience of pages. While search performance is often a brand’s key performance indicator, Google says it should be a marker of good content rather than the content’s sole purpose. Brands should “focus on creating people-first content…rather than search engine-first content made primarily to gain search engine rankings.”

But what does this mean in practical terms?

Future-proofing checklist

Whether you’re already publishing content or just considering your content strategy, here are some tips for protecting your content against future algorithm changes.

Think about longevity

The best way to create content that remains relevant is to build unique evergreen content that will appeal to readers over time. For many topics, evergreen content is now highly competitive and overcrowded, so it’s key to stay agile and spot opportunities to put a new spin or opinion on a topic.

This isn’t to say that trend-led content should be forgotten about entirely, but it shouldn’t form the basis of your content strategy.

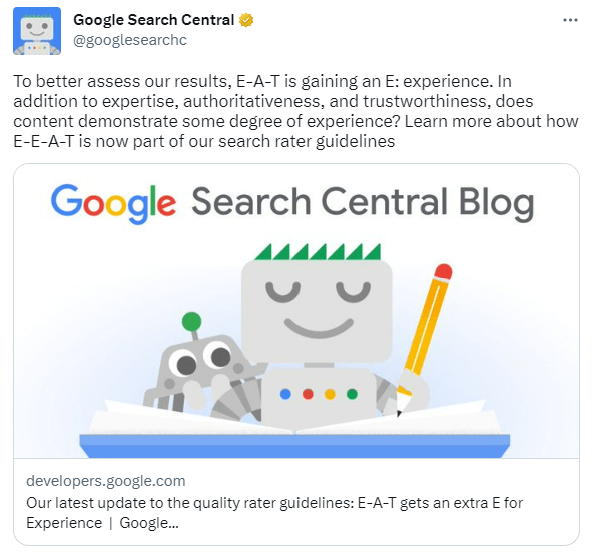

Stick to what you know

Google’s E-A-T guidelines have gained an extra E, for Experience, making them E-E-A-T. Now, in addition to demonstrating Expertise, Authoritativeness and Trust with their content, brands should also be able to show a deeper level of subject knowledge or first-hand experience.

Brands should only produce content around topics they know and can offer value on, while avoiding creating content in spaces they don’t have authority in. From an authenticity standpoint, it’s also important to consider what your audience associates you with and what they come to you for.

Spot opportunities for optimisation

Once a piece of content is performing well, some marketers will avoid doing anything to it, with an “if it ain’t broke, don’t fix it” mentality. However, if there’s been a new report, breaking news story or other development relating to the topic, not mentioning it risks your content becoming outdated. Updating the copy to reflect any changes shows that your brand is up to date with the latest goings-on in the field and instils trust in users that the content is accurate.

Rankings continuously fluctuate, so even if you’re in position 1, it’s a good idea to keep an eye on what’s ranking below you. Strengthening the page with videos and infographics or additional FAQs is a good way to help keep your content in that prime position and keep it fresh.

Restrict use of AI tools

AI copywriting tools like OpenAI’s ChatGPT have gone viral for their ability to generate human-like responses in various formats, from blog posts to stories and even rhyming poems. While these tools can be helpful for coming up with content ideas, understanding intent and automating manual tasks like keyword research, they should be used with caution.

Google’s current stance on AI content is that it’s a page’s value that is important, not who it was written by. But as more and more similar AI content starts to saturate the web, it’s likely that Google will start to crack down on it. The current models are informed by a limited data set of existing web pages, and relying on AI alone means your content will never say anything that hasn’t been said before. AI detectors like GPTZero are becoming more sophisticated at determining whether a human or AI has produced content, so it will soon be easier to spot when, and to what extent, AI has been used.

Conclusion

Nothing can ever be truly future-proof, and with Google making thousands of updates per year, content will need continuous attention to sustain its performance. However, monitoring the latest algorithm updates will help brands to better understand how Google is trying to help users, and to tailor their content accordingly.

If you’re interested in a content audit to understand how your current pages are performing, or want to revamp your content strategy, get in touch!