How to avoid an SEO disaster during a website redesign

If you’ve been investing in SEO for some time and are considering a web redesign or re-platform project, consult with an SEO familiar with site migrations early on in your project.

Just last year, my agency partnered with a company in the fertility medicine space that lost an estimated $200,000 in revenue when its organic visibility all but vanished following a website redesign. This could have been avoided with SEO guidance and proper planning.

This article lays out a proven process for retaining SEO assets during a redesign: the key failure points, how to decide which URLs to keep, how to prioritize them and which tools make the work efficient.

Common causes of SEO declines after a website redesign

Here are a handful of items that can wreak havoc on how Google indexes and ranks your website when they are not handled properly:

- Domain change.

- New URLs and missing 301 redirects.

- Page content (removal/additions).

- Removal of on-site keyword targeting (unintentional retargeting).

- Unintentional website performance changes (Core Web Vitals and page speed).

- Unintentionally blocking crawlers.

These elements are crucial as they impact indexability and keyword relevance. Additionally, I include a thorough audit of internal links, backlinks and keyword rankings, which are more nuanced in how they will affect your performance but are important to consider nonetheless.

Domains, URLs and their role in your rankings

It is common for URLs to change during a website redesign. The key lies in creating proper 301 redirects. A 301 redirect tells Google that a page has permanently moved to a new URL.

For every URL that ceases to exist, causing a 404 error, you risk losing organic rankings and precious traffic. Google does not like ranking webpages that end in a “dead click.” There’s nothing worse than clicking on a Google result and landing on a 404.

The more you can do to retain your original URL structure and minimize the number of 301 redirects you need, the less likely your pages are to drop from Google’s index.

If you must change a URL, I suggest using Screaming Frog to crawl and catalog all the URLs on your website. This allows you to map each old URL individually to its new destination. Most SEO tools and CMS platforms can import CSV files containing a list of redirects, so you aren’t stuck adding them one by one.

This is an extremely tedious portion of SEO asset retention, but it is the only surefire way to guarantee that Google will connect the dots between what is old and new.
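Part of that mapping can be scripted rather than built entirely by hand. The Python below is a minimal sketch, not a finished tool: it assumes you have the old URLs from a crawl of the live site, the list of paths the new site will keep, and a hand-written dictionary of old-to-new decisions. The helper names and the `source,destination` CSV header are illustrative assumptions; check the import format your CMS or redirect plugin actually expects.

```python
import csv
import io
from urllib.parse import urlsplit


def normalize_path(url_or_path: str) -> str:
    """Return just the path, without a trailing slash (the root stays "/")."""
    path = urlsplit(url_or_path).path
    return path.rstrip("/") or "/"


def build_redirects(old_urls, new_paths, manual_map):
    """Return (redirect_rows, unmapped) for a path-based 301 map.

    old_urls:   URLs from a crawl of the live site (e.g. a Screaming Frog export).
    new_paths:  paths that will still exist on the redesigned site.
    manual_map: hand-curated {old_path: new_path} decisions (hypothetical input).
    """
    keep = {normalize_path(p) for p in new_paths}
    manual = {normalize_path(old): new for old, new in manual_map.items()}
    rows, unmapped, seen = [], [], set()
    for url in old_urls:
        path = normalize_path(url)
        if path in seen:
            continue  # same path already handled (e.g. "/team" and "/team/")
        seen.add(path)
        if path in keep:
            continue  # URL survives unchanged, so no redirect is needed
        if path in manual:
            rows.append((path, manual[path]))
        else:
            unmapped.append(path)  # needs a decision: redirect it or let it 404
    return rows, unmapped


def to_csv(rows) -> str:
    """Serialize redirect rows as a two-column CSV (header names are an assumption)."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["source", "destination"])
    writer.writerows(rows)
    return buf.getvalue()
```

Anything returned in `unmapped` still needs a human decision: redirect it to the closest relevant page or deliberately let it 404.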

In some cases, I actually suggest creating 404s to encourage Google to drop low-value pages from its index. A website redesign is a great time to clean house. I prefer websites to be lean and mean, and concentrating SEO value in fewer URLs on a new website can actually improve rankings.
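Whichever route you take for each URL, it pays to verify the result once the new site is live: each mapped URL should answer with a 301 pointing at its planned destination, and each page you chose to retire should return a 404 (or a 410 Gone). Below is a minimal standard-library Python sketch; `fetch_status` and `check` are hypothetical helper names, and because it sends HEAD requests, confirm any surprises with a normal GET request in case a server treats HEAD differently.

```python
import http.client
from urllib.parse import urljoin, urlsplit


def fetch_status(url: str, timeout: float = 10.0):
    """Send a HEAD request without following redirects.

    Returns (status_code, Location header or None).
    """
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    try:
        conn.request("HEAD", target)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def check(url, expected, status, location):
    """Compare one response with the redirect plan.

    expected is the planned destination URL, or None for a page you chose
    to retire, in which case a 404 or 410 is the desired outcome.
    """
    if expected is None:
        return "ok" if status in (404, 410) else f"expected 404/410, got {status}"
    if status != 301:
        return f"expected 301, got {status}"
    # Location headers may be relative, so resolve against the requested URL.
    actual = urljoin(url, location or "")
    if actual == expected:
        return "ok"
    return f"301 points to {actual}, not {expected}"
```

In use, you would loop over your redirect plan, call `fetch_status` on each old URL and pass the result to `check`, reviewing every line that doesn't come back as "ok".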

A less common occurrence is a change to your domain name. Say you want to move your website from “sitename.com” to “newsitename.com.” Although Google provides a way to communicate the change in Google Search Console through its Change of Address tool, you still run the risk of losing performance if redirects are not set up properly.

I recommend avoiding a change in domain name at all costs. Even if everything goes off without a hitch, Google may have little to no history with the new domain name, essentially wiping the slate clean (in a bad way).
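If a domain change truly can’t be avoided, the safest redirect pattern is usually one-to-one: every old URL points at the same path on the new domain rather than at the new homepage. Here is a minimal Python sketch of that mapping; `new_domain_target` is a hypothetical helper, and forcing `https` and dropping URL fragments (which browsers never send to the server) are assumptions you may need to adjust.

```python
from urllib.parse import urlsplit, urlunsplit


def new_domain_target(old_url: str, new_host: str) -> str:
    """Return the matching URL on the new domain, keeping path and query string.

    Assumes the new site is served over https; the fragment is dropped
    because it never reaches the server anyway.
    """
    parts = urlsplit(old_url)
    return urlunsplit(("https", new_host, parts.path or "/", parts.query, ""))
```

A path-preserving rule like this lets Google pair each old page with its exact counterpart, which is what the redirects behind a Change of Address request need to do.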

Webpage content and keyword targeting

Google’s index is primarily composed of content gathered from crawled websites, which is then processed through ranking systems to generate organic search results. Ranking depends heavily on the relevance of a page’s content to specific keyword phrases.

Website redesigns often entail restructuring and rewriting content, which can shift a page’s relevance and, with it, its rank positions. For example, a page initially optimized for “training services” may become more relevant to “pet behavioral assistance,” resulting in a lower rank for the original phrase.

Sometimes, content changes are inevitable and may be much needed to improve a website’s overall effectiveness. However, consider that the more drastic the changes to your content, the more potential there is for volatility in your keyword rankings. You will likely lose some and gain others simply because Google must reevaluate your website’s new content altogether.

Metadata considerations

When website content changes, metadata often changes unintentionally with it. Elements like title tags, meta descriptions and alt text influence Google’s ability to understand the meaning of your page’s content.

I typically refer to this as a page being “untargeted or retargeted.” When new word choices within headers, body or metadata on the new site inadvertently remove on-page SEO elements, keyword relevance changes and rankings fluctuate.
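One way to catch unintentional untargeting before launch is to compare the old and new versions of a page against the keyword it is meant to rank for. The sketch below uses Python’s standard-library `html.parser` to pull the title, meta description and H1 from two HTML strings and report which of them lost the keyword. It assumes you supply the target keyword yourself, and its case-insensitive substring match is far cruder than how Google actually evaluates relevance; the class and function names are illustrative.

```python
from html.parser import HTMLParser


class OnPageExtractor(HTMLParser):
    """Collect the <title>, meta description and <h1> text from a page."""

    def __init__(self):
        super().__init__()
        self.fields = {"title": "", "description": "", "h1": ""}
        self._current = None  # tag whose text is currently being captured

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "h1"):
            self._current = tag
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.fields["description"] = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current:
            self.fields[self._current] += data


def extract(html: str) -> dict:
    parser = OnPageExtractor()
    parser.feed(html)
    return {field: text.lower() for field, text in parser.fields.items()}


def lost_targeting(old_html: str, new_html: str, keyword: str) -> list:
    """List on-page elements that contained the keyword before but not after."""
    old, new = extract(old_html), extract(new_html)
    kw = keyword.lower()
    return [field for field in old if kw in old[field] and kw not in new[field]]
```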

Web performance and Core Web Vitals

Many factors play into website performance, including your CMS or builder of choice and even design elements like image carousels and video embeds.

Today’s website builders offer a massive amount of flexibility and features, giving the average marketer the ability to produce an acceptable website. However, as the number of features available in your chosen platform increases, website performance typically decreases.

Finding the right platform to suit your needs while meeting Google’s performance standards can be a challenge.

I have had success with Duda, a cloud-hosted drag-and-drop builder, as well as Oxygen Builder, a lightweight WordPress builder.
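Whatever platform you choose, record Core Web Vitals for key templates before the redesign and compare them with the new build so a regression doesn’t go unnoticed. Google rates a page “good” when, at the 75th percentile of page loads, Largest Contentful Paint is 2.5 seconds or less, Interaction to Next Paint is 200 milliseconds or less and Cumulative Layout Shift is 0.1 or less. This minimal Python sketch assumes you have already collected those values (for example, from PageSpeed Insights) into dictionaries; `cwv_report` is a hypothetical helper.

```python
# Google's "good" thresholds, assessed at the 75th percentile of page loads.
GOOD_THRESHOLDS = {
    "LCP": 2.5,  # Largest Contentful Paint, seconds
    "INP": 200,  # Interaction to Next Paint, milliseconds
    "CLS": 0.1,  # Cumulative Layout Shift, unitless
}


def cwv_report(before: dict, after: dict) -> dict:
    """Flag metrics that got worse or fall outside the "good" range after a redesign."""
    report = {}
    for metric, limit in GOOD_THRESHOLDS.items():
        old, new = before[metric], after[metric]
        report[metric] = {
            "regressed": new > old,  # higher is worse for all three metrics
            "good": new <= limit,
        }
    return report
```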

Unintentionally blocking Google’s crawlers

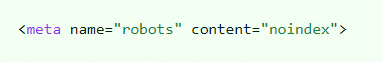

A common practice among web designers today is to create a staging environment that allows them to design, build and test your new website in a “live environment.”

To keep Googlebot from crawling and indexing the testing environment, you can block crawlers with a Disallow directive in the robots.txt file. Alternatively, you can add a noindex meta tag that instructs Googlebot not to index the page.

As silly as it may seem, websites are launched all the time without these directives being removed. Webmasters then wonder why their site immediately disappears from Google’s results.

This task is a must-check before your new site launches. If Google encounters these directives on the live site, your pages will drop out of organic search.
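A quick scripted check can back up the manual one. The Python sketch below uses the standard library’s `urllib.robotparser` to test whether robots.txt blocks Googlebot and `html.parser` to look for a noindex robots meta tag; it also checks the `X-Robots-Tag` response header, which can carry the same noindex instruction. It takes the robots.txt text, page HTML and header value as strings, so fetching them from the production domain on launch day is left to you, and `launch_blockers` is a hypothetical name.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaFinder(HTMLParser):
    """Collect the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            self.directives.append((attrs.get("content") or "").lower())


def launch_blockers(robots_txt, page_url, page_html, x_robots_tag=""):
    """Return the reasons Googlebot would be kept away from page_url (empty = clear)."""
    problems = []

    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch("Googlebot", page_url):
        problems.append("robots.txt disallows Googlebot")

    finder = RobotsMetaFinder()
    finder.feed(page_html)
    if any("noindex" in directive for directive in finder.directives):
        problems.append("meta robots noindex")

    if "noindex" in x_robots_tag.lower():
        problems.append("X-Robots-Tag noindex header")

    return problems
```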


In my mind, there are three major factors for determining what pages of your website constitute an “SEO asset” – links, traffic and top keyword rankings.

Any page receiving backlinks, regular organic traffic or ranking well for many phrases should be recreated on the new website as close to the original as possible. In certain instances, there will be pages that meet all three criteria.

Treat these like gold bars. Most often, you will have to decide how much traffic you’re OK with losing by removing certain pages. If those pages never contributed traffic to the site, your decision is much easier.

Here’s the short list of tools I use to audit large numbers of pages quickly. (Note that Google Search Console gathers data over time, so if possible, it should be set up and tracked months ahead of your project.)

Links (internal and external)

- Semrush (or another alternative with backlink audit capabilities)

- Google Search Console

- Screaming Frog (great for managing and tracking internal links to key pages)

Website traffic

- Google Analytics 4

Keyword rankings

- Semrush (or another alternative with keyword rank tracking)

- Google Search Console

Information architecture

- Octopus.do (lo-fi wireframing and sitemap planning)

How to identify SEO assets on your website

As mentioned above, I consider any webpage that currently receives backlinks, drives organic traffic or ranks well for many keywords an SEO asset – especially pages meeting all three criteria.

These are pages where your SEO equity is concentrated and should be transitioned to the new website with extreme care.

If you’re familiar with VLOOKUP in Excel or Google Sheets, this process should be relatively easy.

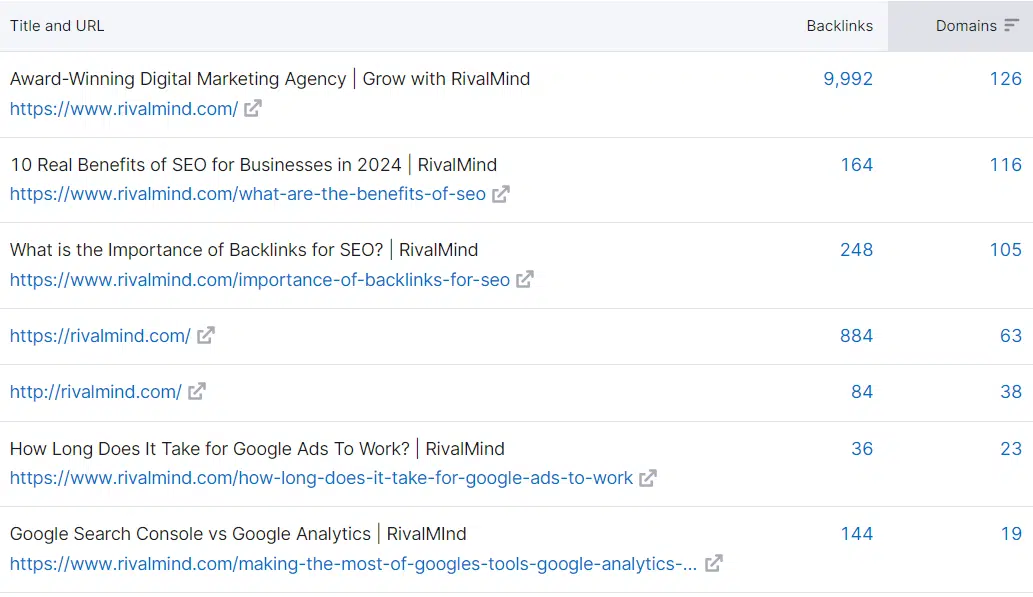

1. Find and catalog backlinked pages

Begin by downloading a complete list of URLs and their backlink counts from your SEO tool of choice. In Semrush you can use the Backlink Analytics tool to export a list of your top backlinked pages.

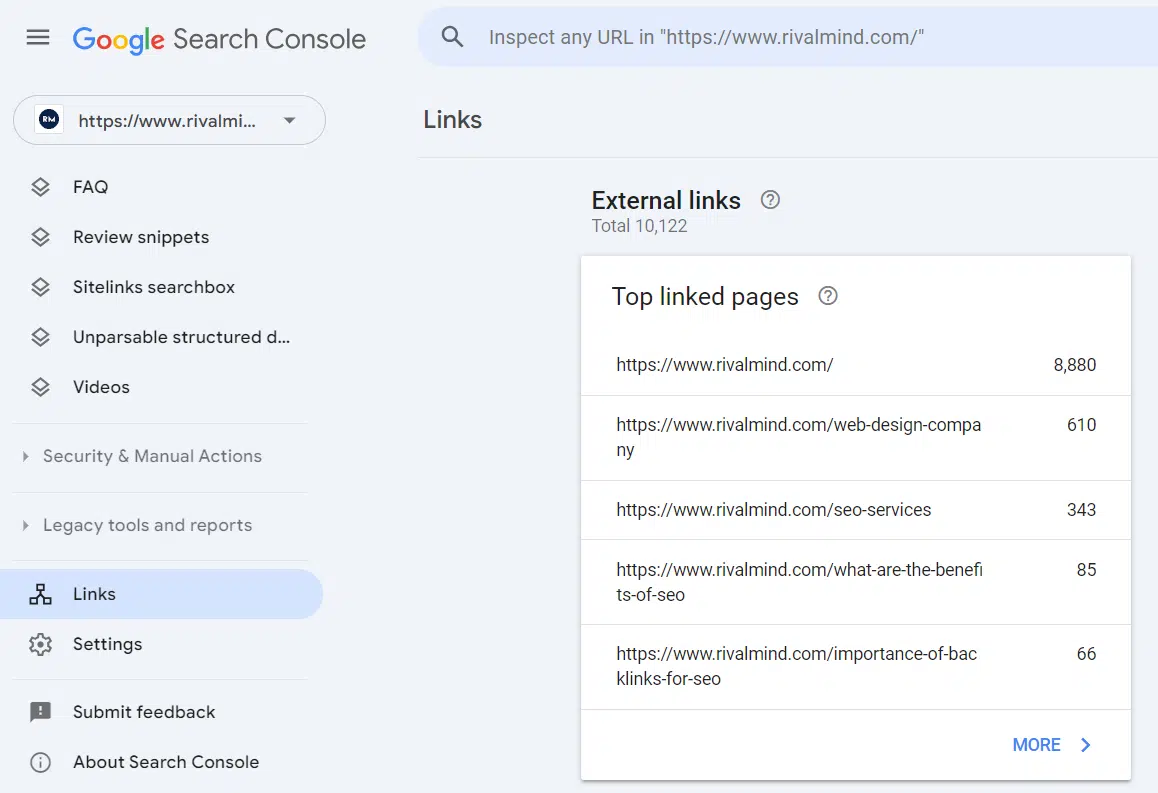

Because any single SEO tool has a finite dataset, it’s always smart to gather the same data from a second source. This is why I set up Google Search Console in advance: we can pull the same type of data from it, giving us more to review.

Now cross-reference your data, looking for additional pages missed by either tool, and remove any duplicates.

You can also sum up the number of links between the two datasets to see which pages have the most backlinks overall. This will help you prioritize which URLs have the most link equity across your site.
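If you’d rather script the cross-reference than use VLOOKUP, a Python dictionary does the same job. This minimal sketch assumes each tool’s export has already been loaded into a `{url: link_count}` dictionary (column names differ between tools) and treats `http`/`https`, a leading `www.` and a trailing slash as the same page, which may not hold for every site. Summing counts from two tools can double-count a link both of them found, so treat the total as a prioritization signal rather than an exact figure.

```python
from urllib.parse import urlsplit


def normalize(url: str) -> str:
    """Key a URL by host and path, ignoring scheme, a leading "www." and a trailing slash."""
    parts = urlsplit(url.strip())
    host = parts.netloc.lower().removeprefix("www.")  # str.removeprefix needs Python 3.9+
    path = parts.path.rstrip("/") or "/"
    return host + path


def merge_backlinks(*exports):
    """Combine {url: link_count} dicts from several tools.

    Duplicate URLs collapse into one entry and their counts are summed;
    the result is sorted from most to fewest links.
    """
    totals = {}
    for export in exports:
        for url, count in export.items():
            key = normalize(url)
            totals[key] = totals.get(key, 0) + count
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)
```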

Internal link value

Now that you know which pages are receiving the most links from external sources, consider cataloging which pages on your website have the highest concentration of internal links from other pages within your site.

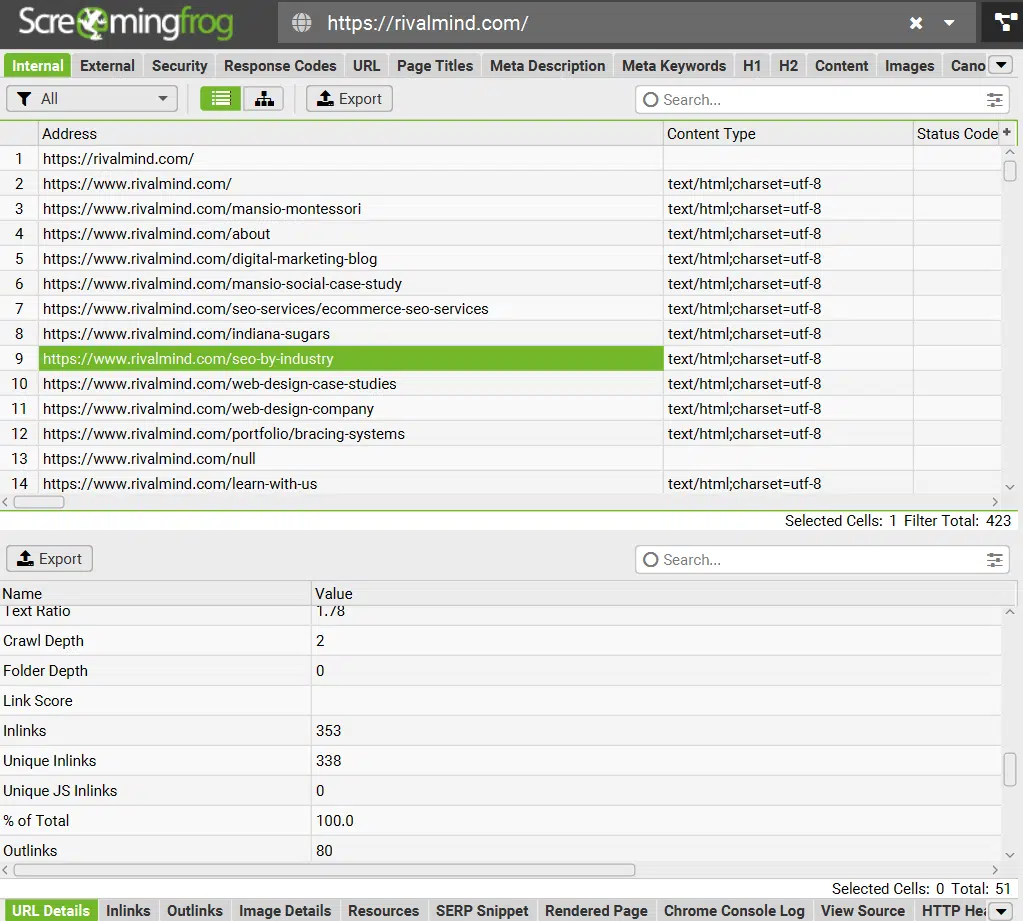

Pages with higher internal link counts also carry more equity, which contributes to their ability to rank. This information can be gathered from a Screaming Frog crawl in the URL Details or Inlinks report.

Consider what internal links you plan to use. Internal links are Google’s primary way of crawling through your website and carry link equity from page to page.

Removing internal links and changing your site’s crawlability can affect its ability to be indexed as a whole.
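To see which pages stand to lose internal link support, you can compare inlink counts from a crawl of the current site with a crawl of the staging site. The sketch below assumes each crawl has been reduced to `(source, destination)` URL pairs, as in a crawler’s inlinks export, and that the new site uses the same URLs (or that you’ve already translated old URLs through your redirect map). The function names are illustrative.

```python
from collections import defaultdict


def unique_inlinks(edges):
    """Count distinct linking pages per destination from (source, destination) pairs.

    An inlinks export typically lists one row per link, so a page that links
    to the same destination twice is counted once here.
    """
    sources = defaultdict(set)
    for source, destination in edges:
        if source != destination:  # ignore self-links
            sources[destination].add(source)
    return {dest: len(srcs) for dest, srcs in sources.items()}


def lost_internal_links(old_edges, new_edges, min_drop=1):
    """Pages whose number of distinct internal linking pages fell by at least min_drop."""
    old, new = unique_inlinks(old_edges), unique_inlinks(new_edges)
    drops = {page: old[page] - new.get(page, 0) for page in old}
    return {page: drop for page, drop in drops.items() if drop >= min_drop}
```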

2. Catalog top organic traffic contributors

For this portion of the project, I deviate slightly from an “organic only” focus.

It’s important to remember that webpages draw traffic from many different channels. Just because a page doesn’t drive oodles of organic visitors doesn’t mean it isn’t a valuable destination for referral, social or even email visitors.

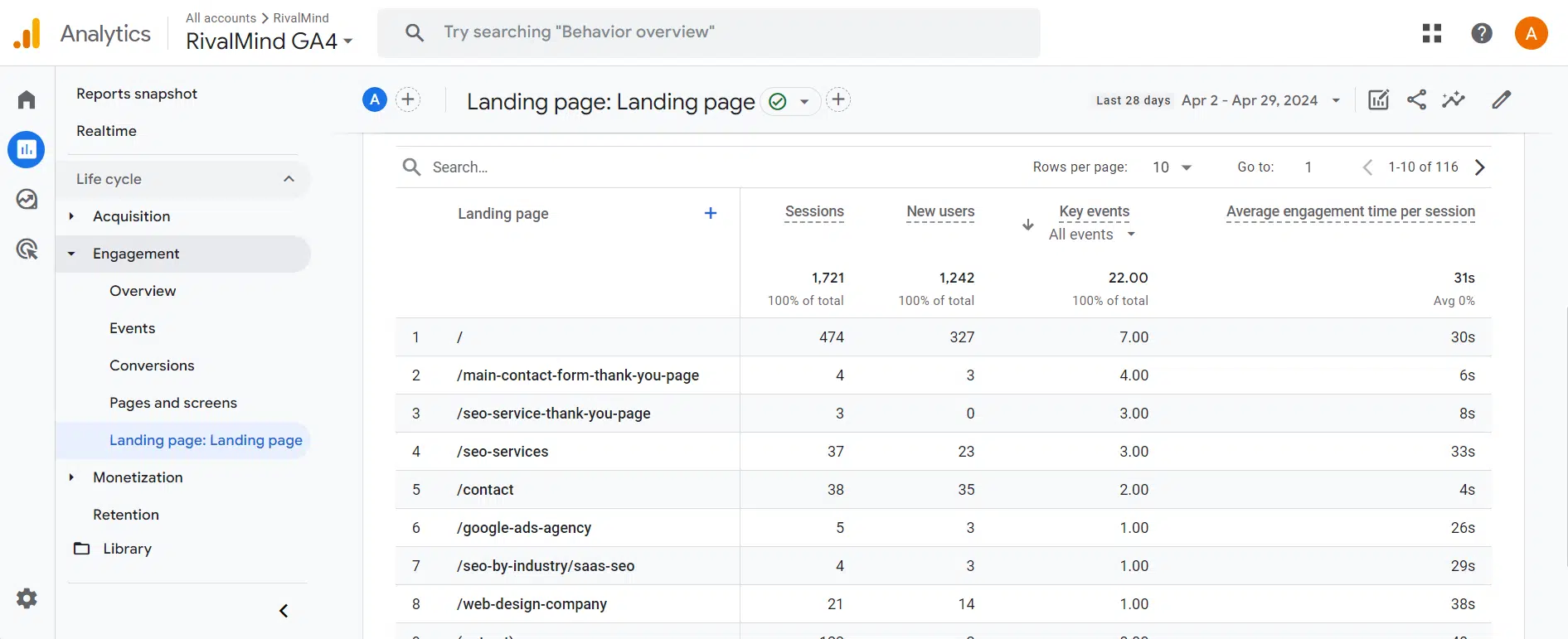

The Landing Pages report in Google Analytics 4 is a great way to see how many sessions began on a specific page. Access this by selecting Reports > Engagement > Landing Page.

These pages are responsible for drawing people to your website, whether it be organically or through another channel.

Depending on how many monthly visitors your website attracts, consider increasing your date range to have a larger dataset to examine.

I typically review all landing page data from the prior 12 months and exclude any new pages implemented as a result of an ongoing SEO strategy. These should be carried over to your new website regardless.

To narrow your data further, add a filter on Session default channel group set to Organic Search so you see only organic sessions from search engines.
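Once the Landing Pages report is exported, a short script can total and rank the pages. This is a minimal Python sketch; the row keys `landing_page`, `channel` and `sessions` are hypothetical stand-ins for whatever column headers your export uses, and the `exclude` set is where you would list new pages from an ongoing SEO strategy that carry over regardless.

```python
def top_landing_pages(rows, exclude=(), organic_only=False, min_sessions=1):
    """Total sessions per landing page and rank them from most to fewest.

    rows:    dicts with hypothetical keys "landing_page", "channel" and "sessions".
    exclude: pages to skip entirely.
    """
    totals = {}
    for row in rows:
        page = row["landing_page"]
        if page in exclude:
            continue
        if organic_only and row["channel"] != "Organic Search":
            continue
        totals[page] = totals.get(page, 0) + int(row["sessions"])
    ranked = [(page, sessions) for page, sessions in totals.items() if sessions >= min_sessions]
    return sorted(ranked, key=lambda item: item[1], reverse=True)
```

Running it once with `organic_only=False` and once with `organic_only=True` shows which pages matter for all channels versus search alone.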

3. Catalog pages with top rankings

This final step is somewhat superfluous, but I am a stickler for seeing the complete picture when it comes to understanding what pages hold SEO value.

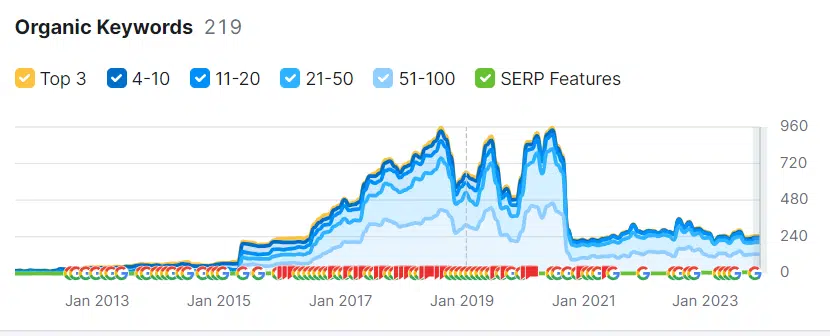

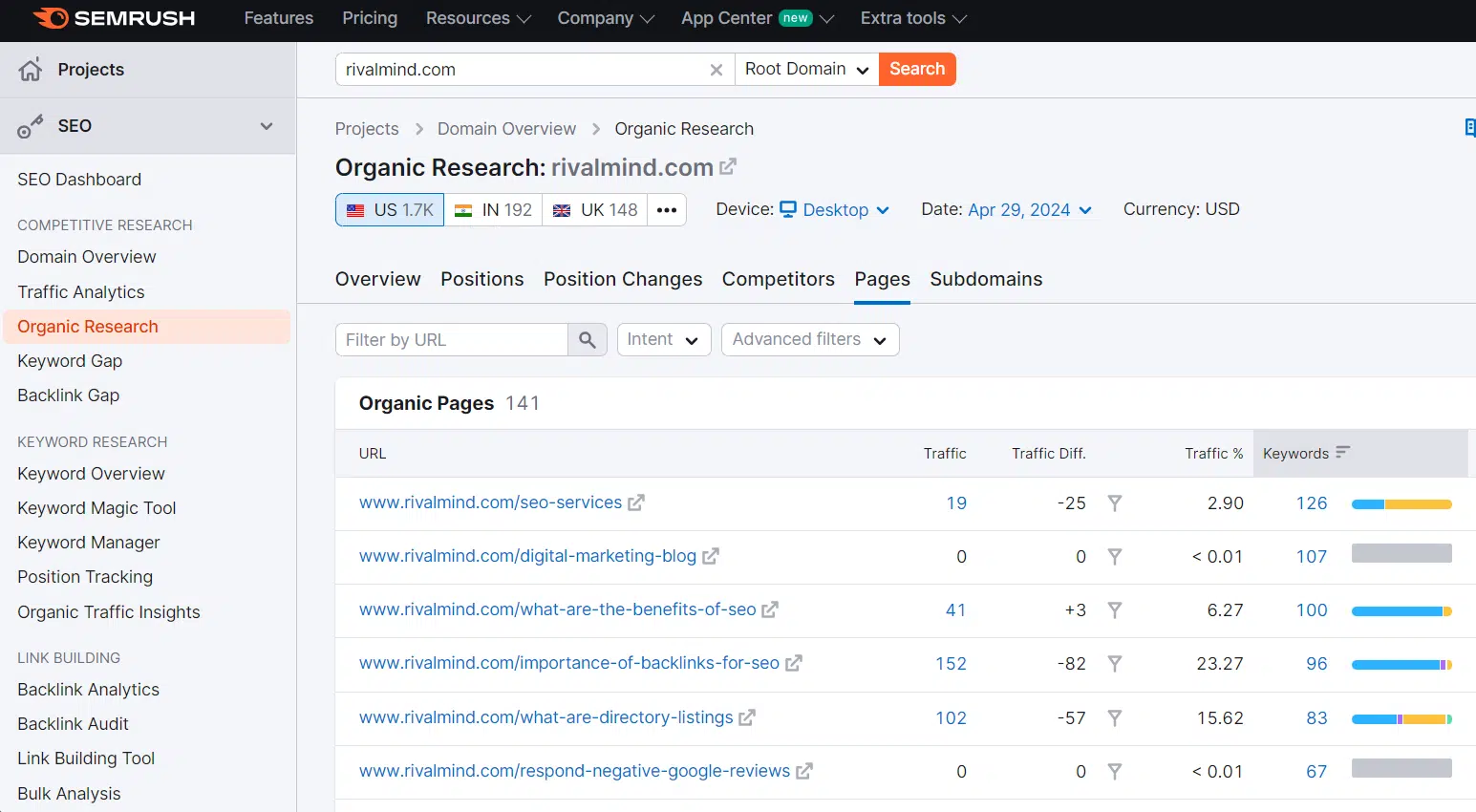

Semrush allows you to easily gather a spreadsheet of your webpages that have keyword rankings in the top 20 positions on Google. I consider rankings in position 20 or better very valuable because they usually require less effort to improve than keyword rankings in a worse position.

Use the Organic Research tool and select Pages. From here you can export a list of your URLs with keyword rankings in the top 20.
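Filtering a ranking export down to top-20 positions and counting keywords per URL takes only a few lines. In this sketch, the `url`, `keyword` and `position` keys are assumptions about how you’ve loaded the export, and `max_position` lets you tighten or loosen the cutoff.

```python
from collections import Counter


def top_ranking_pages(rows, max_position=20):
    """Count keywords ranking at max_position or better for each URL.

    rows: dicts with hypothetical keys "url", "keyword" and "position".
    Returns (url, keyword_count) pairs, most keywords first.
    """
    counts = Counter()
    for row in rows:
        if int(row["position"]) <= max_position:
            counts[row["url"]] += 1
    return counts.most_common()
```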

By combining this data with your top backlinks and top traffic drivers, you have a complete list of URLs that meet one or more criteria to be considered an SEO asset.

I then prioritize URLs that meet all three criteria first, followed by URLs that meet two and finally, URLs that meet just one of the criteria.

By adjusting thresholds for the number of backlinks, minimum monthly traffic and keyword rank position, you can change how strict the criteria are for which pages you truly consider to be an SEO asset.
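The tiering described above can be expressed in a few lines of Python. In this minimal sketch, `PageStats` and `prioritize` are hypothetical names, the default thresholds are placeholders rather than recommendations, and each page’s numbers are assumed to come from the backlink, traffic and ranking steps.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    backlinks: int = 0
    monthly_sessions: int = 0
    top_keywords: int = 0  # keywords ranking within your chosen position cutoff


def prioritize(pages, min_backlinks=1, min_sessions=50, min_keywords=1):
    """Group URLs by how many of the three SEO-asset criteria they meet (3, 2 or 1).

    Pages meeting none of the criteria are left out.
    """
    tiers = {3: [], 2: [], 1: []}
    for page in pages:
        met = sum([
            page.backlinks >= min_backlinks,
            page.monthly_sessions >= min_sessions,
            page.top_keywords >= min_keywords,
        ])
        if met:
            tiers[met].append(page.url)
    return tiers
```

Raising `min_backlinks` or `min_sessions` shrinks the top tiers; lowering them pulls more pages in.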

A rule of thumb to follow: modify the highest-priority pages as little as possible to preserve as much of their original SEO value as you can.

Seamlessly transition your SEO assets during a website redesign

SEO success in a website redesign project boils down to planning. Strategize your new website around the assets you already have; don’t try to shoehorn assets into a new design.

Even with all the boxes checked, there’s no guarantee you’ll avoid losing rankings and traffic.

Don’t inherently trust your web designer when they say it will all be fine. Create the plan yourself or find someone who can do this for you. The opportunity cost of poor planning is simply too great.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.

Source link : Searchengineland.com
