Google’s most recent earnings call contained a number of interesting statements about where Search is headed. Sundar Pichai spoke repeatedly about how Google’s use of AI is driving the evolution of Search.

Throughout this article I’ve included quotes from those on the call. I have added bolding to parts that I thought were most interesting.

Key takeaways

- Google is committed to evolving search with AI models like PaLM and the upcoming Gemini. Sundar Pichai indicated this will lead to major innovations in search.

- The Search Generative Experience (SGE) powered by AI is not just an experiment – Pichai made it clear Google plans to integrate it deeply into search.

- Google sees big potential in multimodal search combining text and images, especially with Google Lens integrated into tools like Bard.

- YouTube Shorts engagement continues to grow rapidly, which I see as an opportunity for SEOs to focus on in the future.

Re the Search Generative Experience (SGE), Pichai said, “While it’s exciting new technology, we’ve constantly been bringing in AI innovations into search for the past few years, and this is the next step in the journey.”

Gemini is a multimodal model that will change search

AI is not new to Google. Sundar Pichai said, “This is our seventh year as an AI first company, and we intuitively know how to incorporate AI into our products. Large Language Models make them even more helpful – models like PaLM2 and soon Gemini, which we’re building to be multimodal. These advances provide an opportunity to reimagine many of our products, including our most important product, search.

We are in a period of incredible innovation for search, which has continuously evolved over the years.”

I have written more about what we know so far about Google’s Gemini model in Episode 293 of Search News You Can Use.

Some more hints about Gemini:

“An anonymous source who said that Google’s researchers have been using YouTube (which Google owns) to develop Gemini — which AI practitioners say could be an edge for GoogleDeepMind, since it can get “more complete access to the video data than…

— Dr. Marie Haynes🌱 (@Marie_Haynes) June 28, 2023

The SGE is the future of search

Pichai: “This quarter saw our next evolution with the launch of the Search Generative Experience, or SGE, which uses the power of generative AI to make search even more natural and intuitive.

User feedback has been very positive so far.”

Let’s pause here, as many, if not most, of the SEOs I know will disagree with this statement. Feedback from the SEO industry on the SGE has decidedly not been positive.

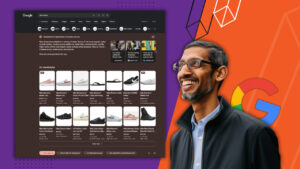

We have seen that often the SGE answer has simply stitched together information from multiple websites, without linking to those sources from the answer.

It has sometimes made up information:

😲 Google SGE is making me look like an #SEO boss LOL

I think it’s time to update my resume 🤣 pic.twitter.com/vB3RtzXzpc

— Paul Andre de Vera (@paulandre) July 5, 2023

The information it gives is not always the most helpful.

It’s strange to me that SGE seems to often just ignore user intent, which Google’s algorithms focus so much on…

In all 3 examples, I typed “tonight,” “today” or “this evening,” and SGE showed generic results with no evidence that these clubs are even open today (not all are) pic.twitter.com/GMKUAnYA1i

— Lily Ray 😏 (@lilyraynyc) July 12, 2023

I mean, it’s all just so bad.

Can you imagine the average non-SEO understanding that all of this advice – despite literally being *from Google* – should be taken with a grain of salt, and is all basically half wrong?

What happened to E-E-A-T? Does it not matter for SGE? pic.twitter.com/cbhanymJ1g

— Lily Ray 😏 (@lilyraynyc) July 12, 2023

Clearly this is an experimental product and it’s not perfect.

Why did Sundar Pichai say that user feedback has been positive so far?

Some people have found the SGE useful.

Google’s search generative experience ftw. Trying to find which floor the Apple Store was on while in the mall and the regular results were too vague even though I specified “floor” in various queries. pic.twitter.com/DvMuFS923q

— Mark Alves (@markalves) June 19, 2023

SGE is fast!

This really was handled by the SCOTUS today and SGE already has a summary pic.twitter.com/i4TX2bLf89

— Eli Schwartz (@5le) June 30, 2023

We also don’t know whether some of that positive feedback comes from internal testers who are working on Google’s new AI-powered search engine, codenamed “Magi”. I suspect this is powered by the multimodal Gemini model. The NYT says,

“Plans for the new search engine, which demonstrate Google’s ambitions to reimagine the search experience, are still in the early stages, with no clear timetable on when it will release the new search technology.

But long before the search engine can be rebuilt, the Magi project will add features to the existing search engine, according to internal documents. Google has more than 160 people working full time on it, a person with knowledge of the work said.

The system would learn what users want to know based on what they’re searching when they begin using it. And it would offer lists of preselected options for objects to buy, information to research and other information. It would also be more conversational — a bit like chatting with a helpful person.”

Later in the call, Pichai made it clear that Google is not backing down here. SGE is not just an experiment – it’s very likely to be an integral part of Search.

“We’ve obviously been focused on bringing this experience and making sure it works well for users and it’s very clear to me first of all, as a user myself, there are certain queries for which the answers are so significantly better, it’s a clear quality win, so I think we are definitely headed in the right direction. And we can see it in our metrics and the feedback from our users as well.”

Was Pichai hyping up the usefulness of the SGE for the investors listening to the call? Or perhaps their metrics really do show that people find it useful despite it not being perfect.

If you are an SEO who has access to the SGE, I would encourage you to use it as often as you can. You can apply for beta access here, although I have found that when I do have access to SGE results, it is fleeting. Mine pops up perhaps a couple of times a day. It is continually changing. And yes, it has its faults.

I do not want to speak against my colleagues who criticize the SGE. There is a lot to be improved and our critical feedback will help Google here. But once the SGE becomes the new way people search on Google, businesses will be clamoring for those who understand how it works and what it rewards, rather than those who can criticize it.

We have not yet seen SGE powered by Gemini, the new multimodal model trained not just on words from the internet, but also on images and video. I expect that what we are seeing in testing right now will change significantly by the time it is fully integrated into search.

If you find some good uses for the SGE, and especially if you have thoughts on what it is rewarding, please do share them with me so I can include them in my newsletter.

Pichai says the SGE will continue to be brought to more and more users and “over time this will just be how search works, and so, while we are taking deliberate steps, we are building the next major evolution of search and I’m pleased with how it’s going so far.”

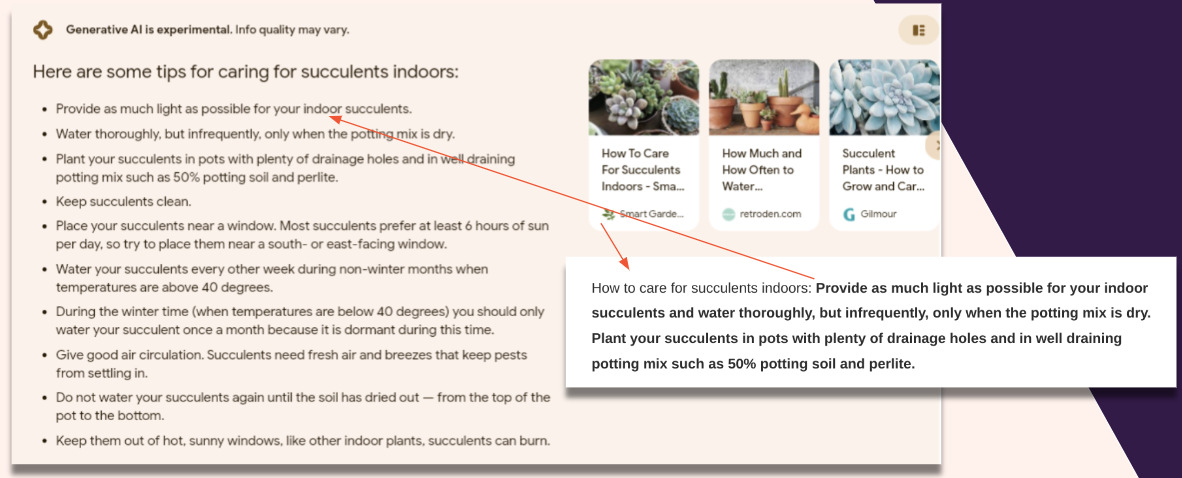

SGE helps people search in different ways

Pichai: “It can better answer the queries that people come to us with today, while also unlocking entirely new types of questions that search can answer. For example, we found that generative AI can connect the dots for people as they explore a topic or project….We see this new experience as another jumping off point for exploring the web and enabling users to go deeper to learn about a topic.”

I have not seen much talk about the SGE’s conversational mode. This may be what Pichai is talking about here.

Conversational mode as it exists in SGE right now is quite helpful. I think it will take us some time to learn to search conversationally. It does not come intuitively to those of us who have spent years doing single searches of keywords rather than conversing. The more I use it, the more I am finding it helpful.

SGE is getting faster

“Since the May launch, we’ve boosted serving efficiency, reducing the time it takes to generate AI snapshots by half. We’ll deliver even faster responses over time.”

Google will continue to send traffic to the web

“We’re engaging with the broader ecosystem and will continue to prioritize approaches that send valuable traffic and support a healthy open web.”

Ads will continue to play an important role

“Ads will continue to play an important role in this new search experience. Many of these new queries are inherently commercial in nature. We have more than 20 years of experience serving ads relevant to users’ commercial queries and SGE enhances our ability to do this even better.”

Several times Pichai commented on how the SGE provides helpful answers to commercial queries.

“The thing that doesn’t change with these experiences is that a lot of user journeys are commercial in nature, there are inherent commercial user needs. And what’s exciting to me is that SGE gives us an opportunity to serve those needs, again, better.

So it’s clearly an exciting area and as part of that, the fundamentals don’t change. The users have commercial needs and they are looking for choices, and there are merchants and advertisers looking to provide those choices, so those fundamentals are true in SGE as well.”

We have seen many examples of SGE commercial results. There is often much to find fault with: sometimes the results do not link to websites, or even contain wrong information. But once again, know that Google is committed to improving here. We need to be paying attention to how commercial results evolve in the SGE.

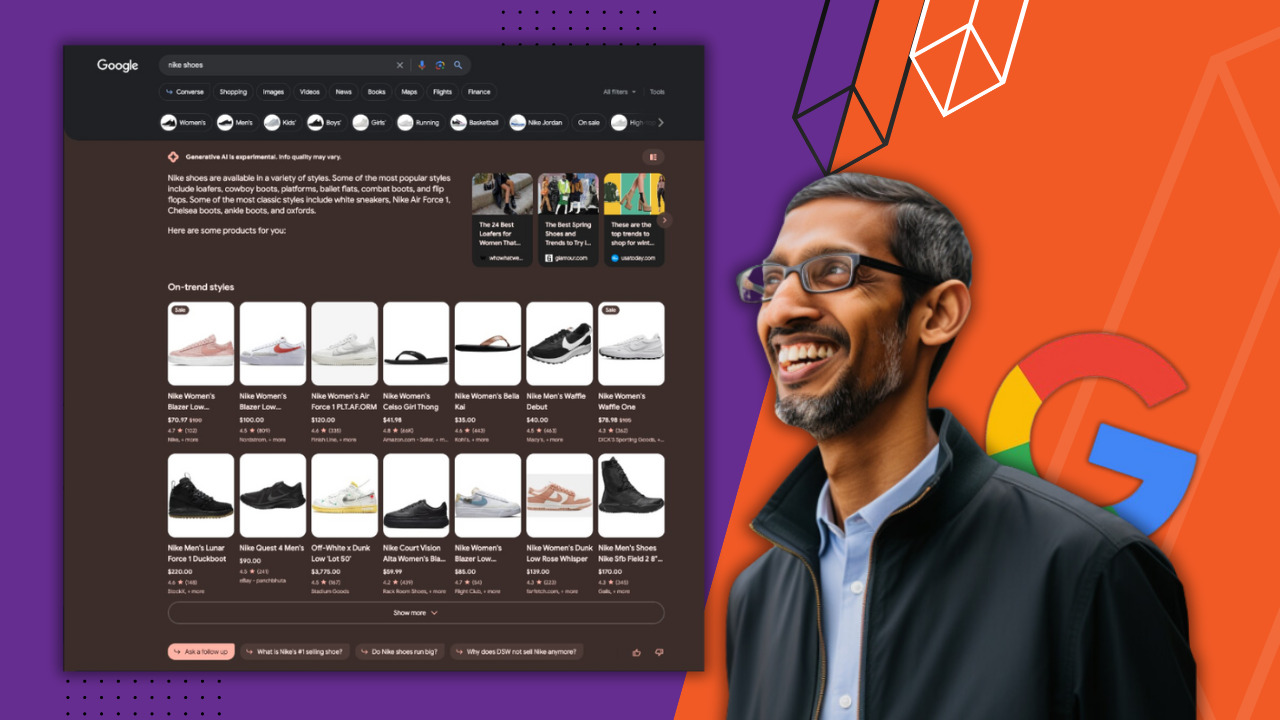

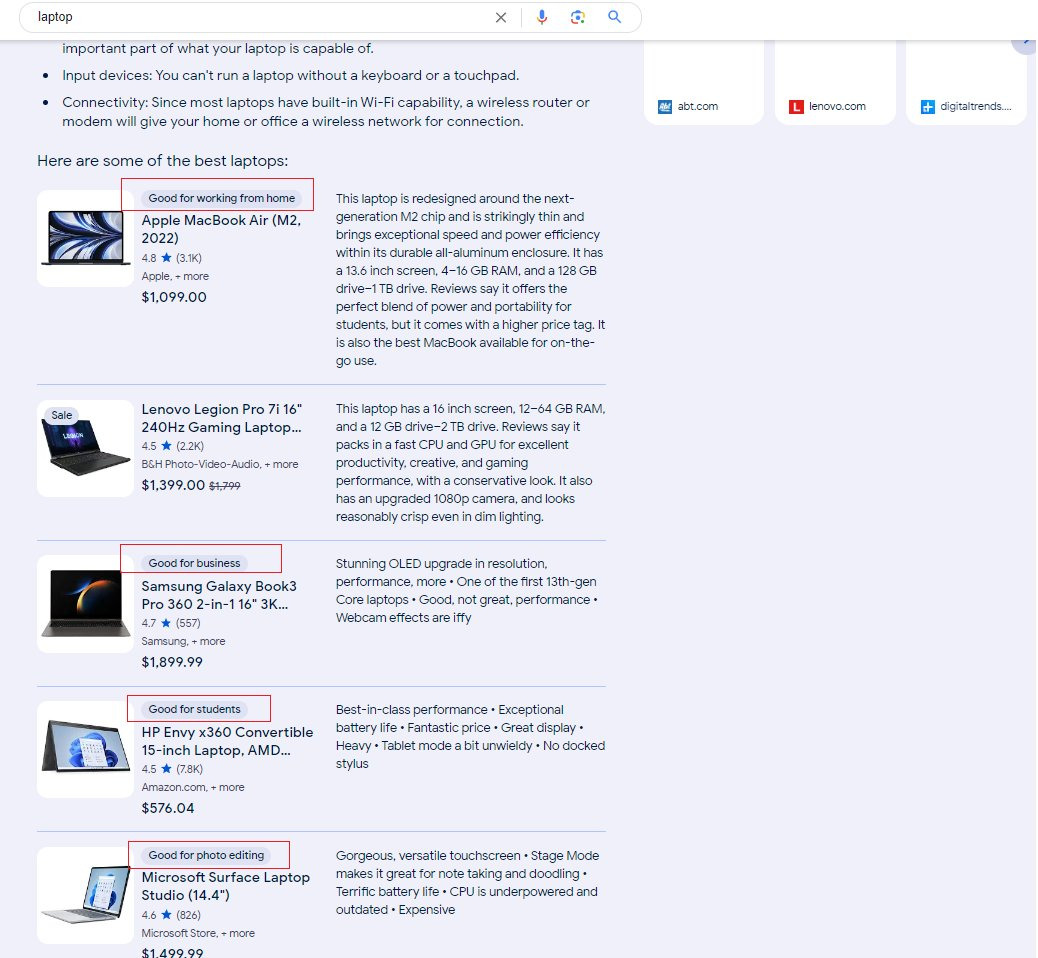

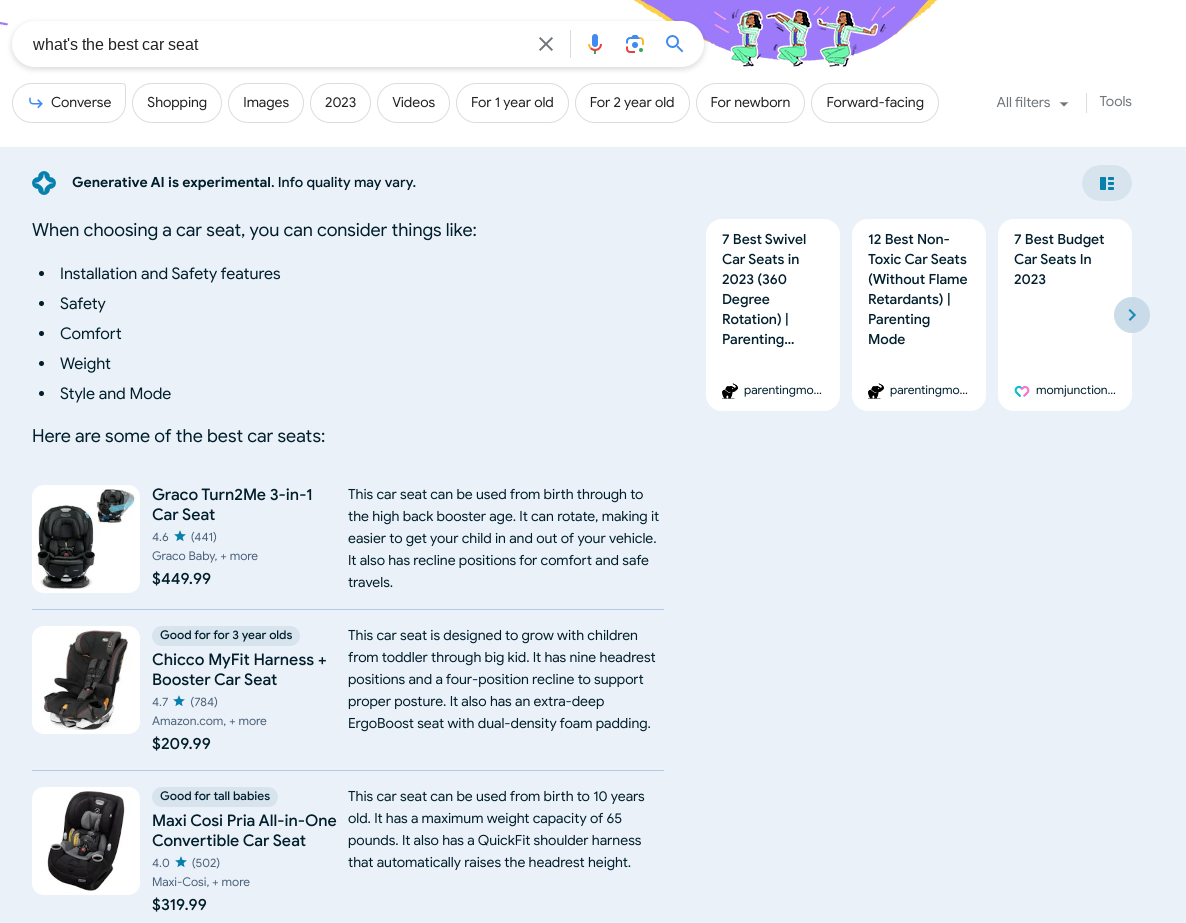

Here are a few screenshots I’ve shared in my newsletter over the last few weeks showing commercial queries.

↗️ Keep eye on SGE, at normal serp we saw popular product or as grid format or browse style idea fashion accessories.

🆕but in #sge its seen as Popular style, On trend styles. Look like using clickbait. yes its changing too fast. pic.twitter.com/AE2XnX4Nk0

— Khushal Bherwani (@b4k_khushal) June 16, 2023

SGE for shopping queries normally shows items in a list, but this one is tiled 🟥🟨🟩🟪

The SALE labels make me wonder if they are being pulled from the Merchant Center feed 👟 pic.twitter.com/QyP49Vx83X

— Kenichi Suzuki💫鈴木謙一 (@suzukik) June 25, 2023

My teammate @GennaCarbone spotted links to Stores in SGE pic.twitter.com/E0dX4GV0Ib

— Lily Ray 😏 (@lilyraynyc) June 28, 2023

Jk, found it. That’s cool, I like that it leads directly to those sites instead of opening the additional options like in my example. pic.twitter.com/u4RuTWyTWJ

— Brian Freiesleben (@type_SEO) June 28, 2023

Google will continue to send traffic to businesses who need customers.

My main concern is something I discussed in my most recent podcast episode about how Google’s ranking systems have changed dramatically because of AI systems like the helpful content system. SEO will not die. Businesses need to be found online and they will need experts to guide them through the AI related changes we are about to see in search.

But what concerns me are the many websites that exist to deliver information, especially information that already exists online. So much of the content that has performed well on Google for years falls short when evaluated by Google’s helpful content criteria. These are some of the questions Google says their AI systems are built to reward:

- Does the content provide original information, reporting, research, or analysis?

- Does the content provide insightful analysis or interesting information that is beyond the obvious?

- If the content draws on other sources, does it avoid simply copying or rewriting those sources, and instead provide substantial additional value and originality?

- Does the content provide substantial value when compared to other pages in search results?

- Do you have an existing or intended audience for your business or site that would find the content useful if they came directly to you?

- Are you mainly summarizing what others have to say without adding much value?

For many years, you could make money online by writing about what everyone else has already covered and then using a knowledge of SEO (technical improvements, links, internal linking, etc.) to make that content look valuable to Google. Many businesses were formed because of this opportunity. As the SGE continues to improve, Google will still send people to websites, but only those that users find helpful enough to visit beyond the AI generated answer.

I fear that many sites that exist today to publish information will not perform well at all in Google’s new AI driven search engine.

Growing YouTube is important to Google

“Earlier this year, we shared that revenues across Google’s YouTube products were nearly $40 billion for the 12 months ending in March. I’m really pleased with how YouTube is growing audiences and driving increased engagement. YouTube Shorts is now watched by over 2 billion logged-in users every month, up from 1.5 billion just one year ago.

The living room remained our fastest growing screen in 2022.”

From Google’s CBO, Philipp Schindler: “Moving to YouTube…YouTube starts with our creators and it’s their success and our multiformat strategy that will drive YouTube’s long term growth…enabling our creators to make a living on our platform with more format and awesome tools…

As we think about growth, we’re focused on Shorts, YouTube TV and our subscription offerings.”

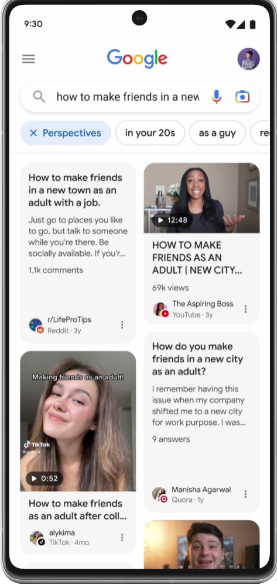

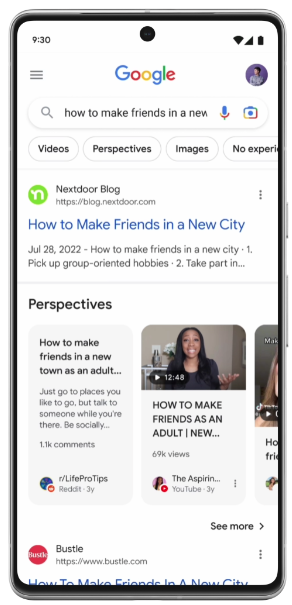

Pay attention to YouTube Shorts. You may have seen a new perspectives filter, which shows results from YouTube (both Shorts and long form videos) amongst other user generated content.

Google says their helpful content system will soon show this type of perspective in the search results. Perhaps that is what is pictured in the first image above?

YouTube Shorts will be more and more important, and may give the “small guy” who has topic experience, but not as much authority, more of a voice in search.

Pay attention to Google Lens and Bard integration

Pichai: “There’s a lot of excitement around…we integrated Google Lens into Bard. We’ve known how big Google Lens can be. We see that in the visual searches. We get how much that has grown over the last two years, but definitely that in Bard has been super well received.”

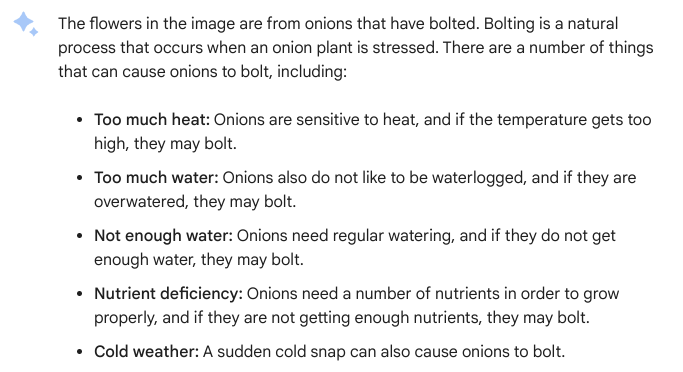

Lens in Bard is fascinating. It gets a lot of things wrong for now, but will only improve over time. Just now I gave Bard a picture of these bizarre onions that keep coming up year after year in our yard:

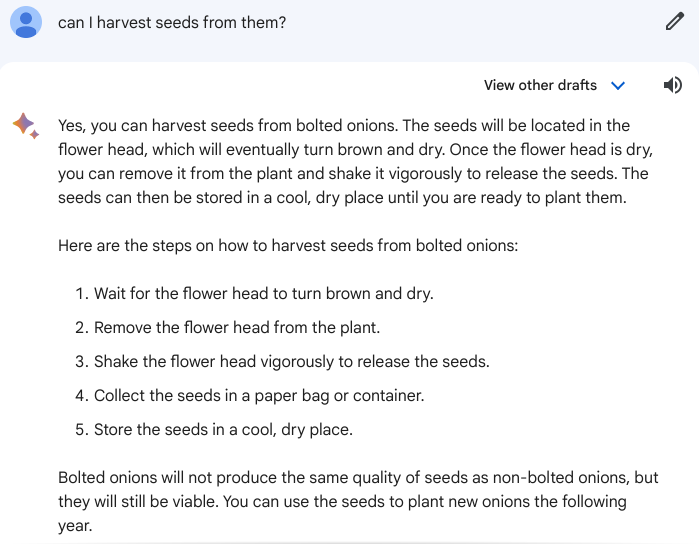

I can then ask followup questions…

A natural reaction to Bard-produced content like this is to point out the places where it is wrong. Bard is still experimental, and because Google uses reinforcement learning from human feedback to improve it, it will get more and more accurate and useful.

I used Lens on my Pixel phone to search with this picture of my bizarre onions. I didn’t ask what it was. Instead, I asked, “should I harvest the seeds” and Lens gave me articles and videos that are extremely helpful.

Again, if you are an SEO, I would encourage you to be continually testing Bard and Lens. I believe they will fundamentally change how people search.

This may be a controversial opinion, but I believe it is a great asset to an SEO to own and regularly use a Google phone. I am not certain whether all of the capabilities of Lens are available on iPhones. Pichai said that Android 14, their latest OS (which is due to launch in August), will “incorporate the advances in generative AI to personalize Android phones.”

Pichai goes on to say re Bard and Lens, “Given Gemini is being built from the ground up to be multimodal, I think that’s an area that’s going to excite users. And I go back many years ago when we did universal search. Whenever for users we can abstract different content types and put them in a seamless way, they tend to receive it well, and so I’m definitely excited about what’s ahead.”

I predict that Lens will not just be a cool fad that lets you play around with image search. It has the potential to radically change the way we use search engines.

Google will continue to evolve their AI experience

From Sundar Pichai: “We will continue to evolve the experience, but I’m comfortable with what we’ve seen, and we have a lot of experience working through these transitions and we’ll bring all those learnings here as well.”

If you are frustrated with what you’ve seen from SGE so far, you are not alone.

I think these closing words from Pichai are important:

“And I think all of this is before we have our multimodal capabilities really in the mix, and so, looking at the early innovations there, I think this is going to be an exciting couple of years ahead.”