Including tips and recommendations for helping site owners navigate the AI UGC triple (detection, volume, and pace) when dealing with lower-quality AI user-generated content.

The term “low-quality AI user-generated content” is a mouthful, but it’s an important topic for site owners that allow UGC to be published on their sites. I have come across several tough situations recently while heavily analyzing the September helpful content update (HCU). I don’t think anyone has covered how UGC can factor into the helpful content update yet, so I wanted to quickly cover it in this post.

Since the launch of the first HCU in August of 2022, I have seen many examples of low-quality AI content get hit hard. I mentioned that several times when sharing drops from the August 2022 HCU, the December 2022 HCU, and now the more aggressive September HCU(X). But those cases were about site owners using AI heavily to publish lower-quality (and unhelpful) content on their own sites. Those sites ended up with a lot of lower-quality AI content over time and got hit by the HCU.

What I’m referring to today is different: other people adding lower-quality AI content to your site via UGC. In other words, what if people submitting user-generated content are using AI to quickly craft that content? And what if that content is lower quality, not edited to add value, not refined to be truly insightful, etc.? Yep, that can be problematic, and site owners that allow UGC are starting to see this issue creep up. Some of the sites that contacted me after the September HCU got hit very hard and dropped heavily in search visibility.

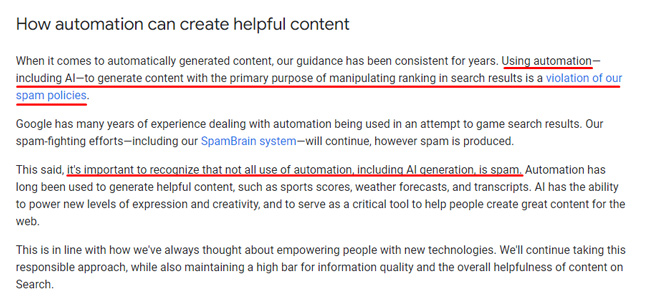

A reminder about Google’s stance on AI content: It’s about quality.

It’s important to remember that Google isn’t against all AI content. It’s against low-quality AI content. So if someone is using AI to help them create content, and they edit and refine that content to make sure it’s accurate, valuable, etc., then that could be totally fine. But if someone is simply exporting AI-generated content, and publishing that at scale, then they are teeing their site up to get obliterated by either the helpful content update or even a broad core update.

Here is a paragraph from Google’s post about AI content where Danny Sullivan explains that AI content with the primary purpose of manipulating rankings in search results is what Google has a problem with:

The insidious creep of low-quality user-generated AI content:

In my opinion, and based on what I have seen while analyzing many sites impacted by the HCU, low-quality AI content at scale can be incredibly dangerous. And if UGC is a core part of your site’s content, then you have to keep a close eye on quality. That’s been the case with UGC for a very long time, but now it’s much easier for users to leverage AI creation tools to craft responses on forums, Q&A sites, and more.

And even though someone else posted the content on your site, you are still responsible for it. Google has explained that many times over the years. Below I’ve included just one of several tweets I have shared about Google explaining that UGC will be counted when evaluating quality. If it’s on your site, and it’s indexed, it’s counted when evaluating quality.

Moderate UGC Heavily:

Since medieval Panda days (circa 2011), I have always explained that site owners need to moderate user-generated content heavily. If not, lower-quality content can creep in and build over time. And when that happens, you are setting your site up to get hit hard by a major algorithm update. So my advice is the same now, but with a slight AI twist. I would now also be on the lookout for lower-quality AI content and not just your typical UGC spam. The top AI content detection tools continue to improve and can help flag content with a high probability that it was created via AI.

Below, I’ll cover some tips for site owners that might be dealing with lower-quality AI user-generated content. Again, I’ve had several sites reach out where that issue has been creeping up over the past year or so…

Detection, Volume, and Pace: The AI UGC Triple

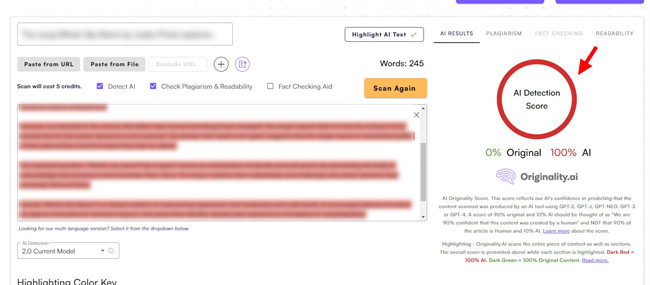

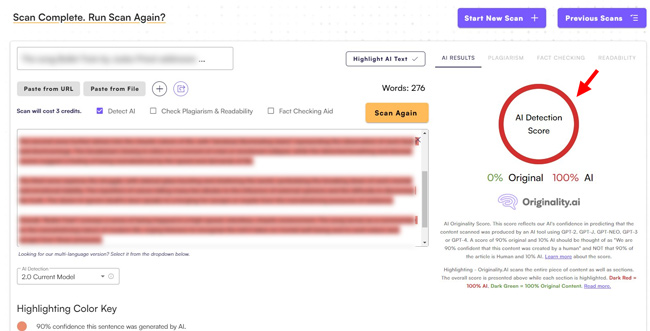

Beyond just identifying AI-generated content, you can check the volume of responses from users and the pace at which those responses are being submitted. One site owner recently reached out to me about a user who had submitted many responses in a short period of time. When those responses were run through an AI content detection tool, they all came back with a 100% probability that the content was created via AI. For example, you can see two of those submissions below.

So the combination of volume, pace, and fairly obvious AI content yielded a good example of a user leveraging AI to pump out a ton of UGC on the site in question.

Note that it’s a large-scale site, so this one user would probably have little impact. But over time, if other users did the same, it could absolutely cause problems from a quality perspective. The site owner was smart to reach out to me about this. They dealt with that user and might develop a process for identifying patterns that could help surface people trying to game the system with lower-quality AI user-generated content.
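To make the detection, volume, and pace idea a bit more concrete, here is a minimal Python sketch of how a site might surface users like that automatically. It assumes you already store each submission with a user ID, a timestamp, and an AI probability score from whatever detection tool you use. The `Submission` class, the `flag_users` function, and all three thresholds are hypothetical and would need tuning against your own data.

```python
from __future__ import annotations

from collections import defaultdict, deque
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Submission:
    user_id: str
    submitted_at: datetime
    ai_probability: float  # 0.0-1.0 score from your detection tool (assumed field)


def flag_users(
    submissions: list[Submission],
    window: timedelta = timedelta(minutes=30),  # hypothetical pace window
    max_in_window: int = 10,                    # hypothetical volume limit
    min_ai_probability: float = 0.9,            # hypothetical detection cutoff
) -> set[str]:
    """Flag users whose bursts of submissions also score high for AI.

    A user is flagged when more than `max_in_window` of their submissions
    land inside one sliding window of length `window` AND the mean AI
    probability of that burst is at least `min_ai_probability`.
    """
    by_user: dict[str, list[Submission]] = defaultdict(list)
    for sub in submissions:
        by_user[sub.user_id].append(sub)

    flagged: set[str] = set()
    for user_id, subs in by_user.items():
        subs.sort(key=lambda s: s.submitted_at)
        burst: deque[Submission] = deque()
        for sub in subs:
            burst.append(sub)
            # Drop older submissions that fall outside the sliding window.
            while sub.submitted_at - burst[0].submitted_at > window:
                burst.popleft()
            if len(burst) > max_in_window:
                mean_score = sum(b.ai_probability for b in burst) / len(burst)
                if mean_score >= min_ai_probability:
                    flagged.add(user_id)
                    break
    return flagged


if __name__ == "__main__":
    start = datetime(2023, 10, 2, 9, 0)
    # 12 AI-looking posts in 12 minutes vs. 12 ordinary posts spread over 12 hours.
    subs = [Submission("fast_poster", start + timedelta(minutes=i), 0.99) for i in range(12)]
    subs += [Submission("regular", start + timedelta(hours=i), 0.20) for i in range(12)]
    print(flag_users(subs))  # {'fast_poster'}
```

A user is only flagged when a burst of submissions and high AI scores show up together. A fast poster writing original content, or a slow poster with one AI-looking reply, would not be flagged on their own. Flagged users are best routed to a human for review rather than banned automatically, since detection scores are probabilities, not proof.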

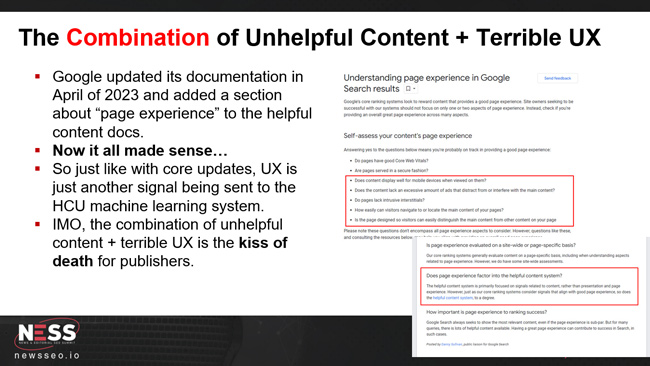

And remember, the September HCU seemed to incorporate UX into the equation (which I have seen heavily while analyzing many impacted sites). So user experience barriers like aggressive ads, popups, interstitials, and more can contribute to “unhelpful content”. If you combine low-quality AI user-generated content with a terrible UX, you could have a serious problem on your hands with the HCU roaming the web. Beware.

Wrapping up: A warning for site owners that accept UGC.

Again, I wanted to cover this topic for any site that publishes user-generated content. Even if you have a larger-scale site with a ton of content, I would be very careful about letting any type of low-quality content on the site. And now, with the ease of AI content generation tools, you should be especially careful about letting low-quality AI user-generated content on the site. Again, I’ve had several companies reach out after getting hit hard, and that was clearly part of the problem.

I’ll end this post with some tips and recommendations for site owners:

- Moderate heavily, and with an AI twist: If you accept user-generated content on your site, you should already be moderating heavily from a quality perspective. But now you should also be on the lookout for lower-quality AI content that’s being submitted.

- Pace of submission: One red flag could be the pace of submission by certain users. For example, as I explained earlier, a company reached out to me after noticing submissions that seemed a bit off. When they dug into that user, there were a bunch of submissions in a very short period of time. And after running those submissions through AI content detection tools, there was a 100% probability that those submissions were created via AI.

- Testing Lab: I recommend creating a testing lab that leverages several AI content detection tools. The tools are not perfect, but they are good at detecting lower-quality AI-generated content. Have a process in place for reviewing content that’s been flagged. And I’ll cover APIs next, which can help check content in bulk.

- APIs are your friend: Some AI content detection tools have APIs that enable you to check content in bulk. If you have a large-scale site, with a lot of user-generated content, then leveraging an API could be a smart way to go. For example, GPTZero, Originality.ai, and others have APIs that you can leverage for checking content at scale.

- AI content policy for user-generated content: Have a policy in place about AI content that users can access and easily understand. Make sure users understand what is allowed, and what is not allowed, including what you will do if low-quality AI content is detected.

- Indexing-wise, be quick, be decisive: And finally, deal with the AI submissions quickly and make sure they don’t get indexed (or don’t stay indexed). As I have covered many times before in posts and presentations about major algorithm updates, all indexed pages are taken into account when Google evaluates quality. So focus on what I call “quality indexing” and make sure lower-quality AI user-generated content does not get indexed.
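Pulling the testing lab, API, and indexing tips together, here is a rough Python sketch of a review step. Each detector is modeled as a plain function that returns an AI probability; in practice each would wrap a call to a tool like GPTZero or Originality.ai, but their actual request and response formats are not modeled here. The `review_decision`, `robots_meta`, and `check_in_bulk` names and the thresholds are hypothetical. Content is held out of the index only when at least two detectors agree, since any single tool can be wrong.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# A detector takes text and returns an AI probability between 0 and 1.
# Hypothetical: in production each would wrap a vendor API call
# (GPTZero, Originality.ai, etc.); those APIs are NOT modeled here.
Detector = Callable[[str], float]


def review_decision(
    text: str,
    detectors: list[Detector],
    threshold: float = 0.9,  # hypothetical per-detector cutoff
    min_votes: int = 2,      # hypothetical: detectors that must agree
) -> str:
    """Return 'noindex-and-review' if enough detectors flag the text, else 'index'."""
    votes = sum(1 for detect in detectors if detect(text) >= threshold)
    return "noindex-and-review" if votes >= min_votes else "index"


def robots_meta(decision: str) -> str:
    """Robots meta tag for the page <head>; pages are indexable by default."""
    if decision == "noindex-and-review":
        return '<meta name="robots" content="noindex">'
    return ""


def check_in_bulk(
    posts: dict[str, str], detectors: list[Detector], max_workers: int = 8
) -> dict[str, str]:
    """Run review_decision over many posts concurrently (post_id -> decision)."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {
            post_id: pool.submit(review_decision, text, detectors)
            for post_id, text in posts.items()
        }
        return {post_id: fut.result() for post_id, fut in futures.items()}


if __name__ == "__main__":
    # Toy stand-in detectors, for illustration only.
    def strict_tool(text: str) -> float:
        return 0.95

    def phrase_tool(text: str) -> float:
        return 0.99 if "as an ai language model" in text.lower() else 0.05

    def lenient_tool(text: str) -> float:
        return 0.10

    posts = {
        "post-1": "As an AI language model, I can explain this in detail...",
        "post-2": "I fixed this by reseating the RAM and updating the BIOS.",
    }
    decisions = check_in_bulk(posts, [strict_tool, phrase_tool, lenient_tool])
    for post_id, decision in decisions.items():
        print(post_id, decision, robots_meta(decision))
```

The noindex here is applied with a robots meta tag; an `X-Robots-Tag: noindex` HTTP header works too. Google has to be able to crawl the page to see either one, so those URLs should not also be blocked in robots.txt.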

Summary: Watch for UGC with an AI twist.

For sites that accept user-generated content, it has always been important to moderate that content heavily from a quality perspective. With AI content generation tools, UGC is now trickier to deal with, and moderation has gotten a bit harder. I recommend reviewing the tips and recommendations I provided in this post to create a process for flagging potential problems, and then handling those issues quickly. That’s the best way to maintain strong “quality indexing” levels, which is important for avoiding problems based on major algorithm updates like the helpful content update and broad core updates.

GG