Artificial Intelligence (AI) is revolutionizing the way we search and find information online. Google, a pioneer in this field, is constantly testing and integrating AI technologies to enhance its search results. From the Search Generative Experience (SGE) that provides AI-generated answers to the integration of Google’s Bard AI chat technology across various Google products, AI is reshaping our search experiences.

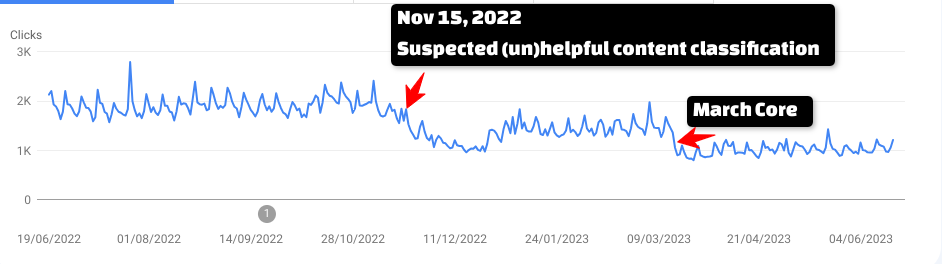

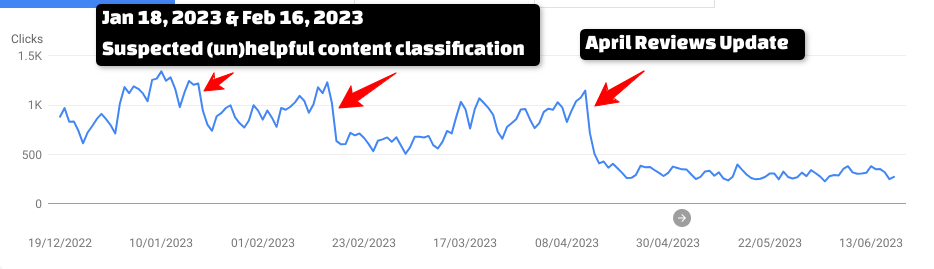

But what does this mean for your website’s visibility on Google? If you’ve noticed an inexplicable decline in your organic search traffic, there’s a good chance that Google’s AI-driven ‘helpful content system’ is influencing your rankings. This system uses machine learning to predict whether content is likely to be the most helpful for a searcher. If your content consistently falls short, your site may be classified as having ‘unhelpful content’.

In this article, I’ll delve into how AI has already dramatically changed Google’s ranking system and what you can do to ensure your website doesn’t get left behind.

I’ll also share insights on some sites that I suspect are feeling the effects of an ‘unhelpful content’ classification and explain why this might be the case.

I’ll share with you what I’ve learned about how the helpful content system works.

Podcast episode where I share more about my thoughts on how the helpful content system has changed search.

Transcript

I have long maintained that the key to ranking is to align with Google’s descriptions of quality in the Quality Raters’ Guidelines. I wrote a book on it! Until recently, it has been hard to explain why, other than to say that Google told us they build algorithms to assess the quality of a page, so it makes sense to align with their recommendations on quality.

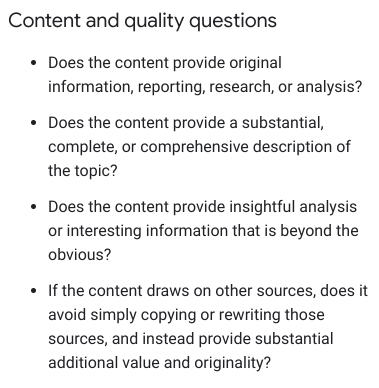

They tell us they do this by asking questions like, “Does this article contain insightful analysis or interesting information that is beyond obvious?” or, “Does the page provide substantial value when compared to other pages in search results?”

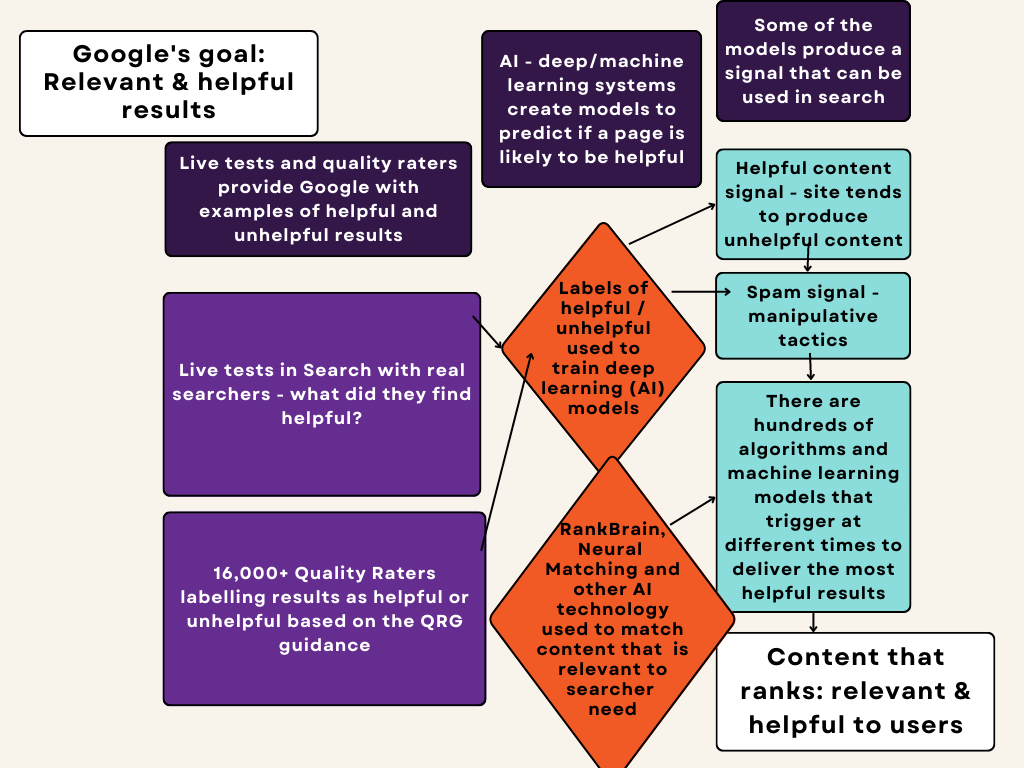

Google’s goal is to present the searcher with pages that are likely to be helpful to them and satisfy the need that caused them to search. They accomplish this by using a complex system of machine learning models that create signals Google can use in their ranking algorithms.

I believe that when it comes to ranking, nothing is more important than aligning with Google’s criteria for quality they have laid out in their documentation on creating helpful content, essentially a summary of the rater guidelines.

I have tried to capture these thoughts, which I’ll explain more fully in this article, in a diagram.

A Brief Introduction About the Author

Dr. Marie Haynes

There is much I want to write about as it has been so long since I have published an article outside of my newsletter. In case you’re new to my work, here’s a bit of history. I was a veterinarian. I created a veterinary website and learned how to get it found on Google search. By the time Google’s Penguin algorithm launched, I was an active participant in many online SEO forums. I found myself in all sorts of fascinating conversations and brainstorming sessions, helping solve many SEO related problems.

In 2012, SEO drew me away from my veterinary career. I started by helping businesses remove Google penalties and then spent the next decade helping as many people as I could who were struggling with getting found online. The whole time I was learning more and more about what Google’s algorithms reward.

I’ve been honoured to be asked to share my thoughts on search at many conferences, podcasts, and in online search publications. As my work became more well known, I grew a really fun and exciting agency that helped many businesses. The pandemic brought about change once again, with remote work crushing our thriving office culture.

2022 was a year of change for me. I made the difficult decision to close down my agency. In February of 2022 I started to learn more and more about Google’s use of AI in their algorithms. Since then, I’ve been in an intensive learning season trying to understand more about Google’s use of AI in search. When OpenAI playground (the predecessor to ChatGPT) came out, I spent hours and hours testing it. I still do!

And now, with new AI advancements happening every day, there’s no shortage of interesting things to learn and write about. I’d highly recommend this article on how ChatGPT works. It set off so many lightbulbs for me in understanding neural networks. It turns out…AI is just a bunch of math. Math that’s really good at making predictions. Consider the basic linear equation, y=mx+b, which can model how well a set of points fits a straight line. Pretty much anything can be modeled with the right mathematical equation. This includes predicting whether certain content is likely to align with what internet users typically find useful. This process involves determining the correct variables to assess and determining their relative importance or ‘weight.’
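To make the idea of variables and weights a little more concrete, here is a minimal sketch in Python. It only shows how y=mx+b extends to several variables that each carry their own weight. The feature names and weight values are invented for illustration and are not signals Google has confirmed.

```python
# Illustrative only: the feature names and weights below are made up.
def linear_score(features, weights, bias=0.0):
    """Weighted sum of features plus a bias: the multi-variable form of y = mx + b."""
    return sum(weights[name] * value for name, value in features.items()) + bias

# One variable: y = mx + b with m = 2, x = 3, b = 1
y = linear_score({"x": 3.0}, {"x": 2.0}, bias=1.0)

# Several variables, each with its own weight (hypothetical names)
page = {"answers_question_early": 1.0, "original_insight": 0.5, "intrusive_ads": 1.0}
weights = {"answers_question_early": 2.0, "original_insight": 3.0, "intrusive_ads": -1.5}
page_score = linear_score(page, weights)

print(y, page_score)
```

A real model has far more variables and learns its weights from data rather than having them typed in by hand, but the arithmetic underneath is of this kind.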

The Evolution of Google’s Algorithms and AI’s Role

Becoming an AI driven answer engine has always been Google’s goal. Google’s mission has been to make the world’s information accessible and useful. In the year 2000, Google co-founder Larry Page said,

“Artificial intelligence would be the ultimate version of Google. So we have the ultimate search engine that would understand everything on the web. It would understand exactly what you wanted, and it would give you the right thing. That’s obviously artificial intelligence.”

In 2013, Google’s head of search, Amit Singhal, said that “computers will know what people want and users won’t have to type their queries into a small box on a clean white page.” He said, “The destiny of search is to become that ‘Star Trek’ computer and that’s what we are building”

According to Google, they have been using AI to improve their algorithms for some time now, calling themselves an AI-first company since 2016.

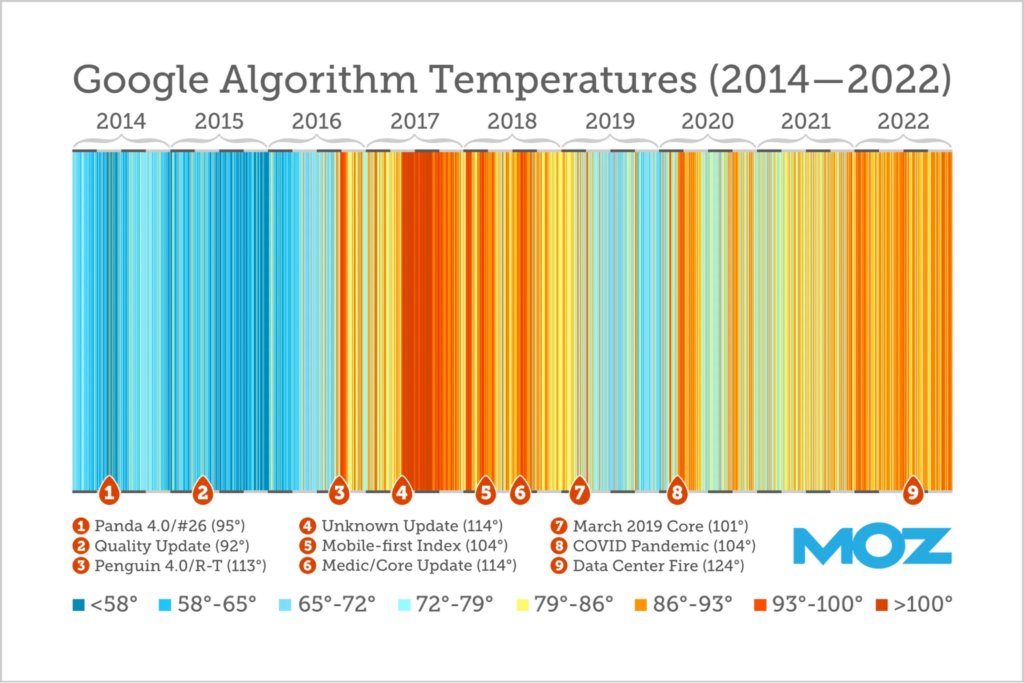

I am fascinated by this heatmap image created by Dr. Pete of Moz. The “hotter” or closer to red a bar is, the more turbulence there was in Google’s search results at that time. It would be a fair guess to say that Google started using AI in their algorithms in late 2016. Can you see what significant event happened around that time?

#3 on Dr. Pete’s heatmap represents Google’s 2016 Penguin 4.0 update, the update in which Google told us they could ignore unnatural links instead of algorithmically suppressing sites that had built them.

It’s also interesting to note that the original rollout of Penguin happened just a couple of weeks before Google launched the knowledge graph. The knowledge graph is important to AI. Google’s announcement about Bard tells us Bard combines the power, intelligence and creativity of their language models with “the breadth of the world’s knowledge”. That is likely the knowledge graph.

The more Google uses AI systems, the more important it becomes for us to align with the content those systems are designed to reward.

How Google’s Ranking Systems Utilize AI to Generate “Signals”

Several of Google’s ranking systems employ machine learning, a subset of AI, to generate signals. These signals play a crucial role in determining which sites Google ranks in its search results.

I’m going to mention this word signal a lot. A signal is a piece of information that Google’s algorithms can use when deciding which content to rank.

Here’s what Google says about how they use signals as clues or characteristics of a page that might align with what a human would consider high quality:

An example of a signal is the number of quality links pointing to a particular page. This is just one of the many types of signals Google can use in their ranking process.

One notable system that generates such signals is Google’s ‘Helpful Content System’.

The Role of the ‘Helpful Content System’ in Signal Generation

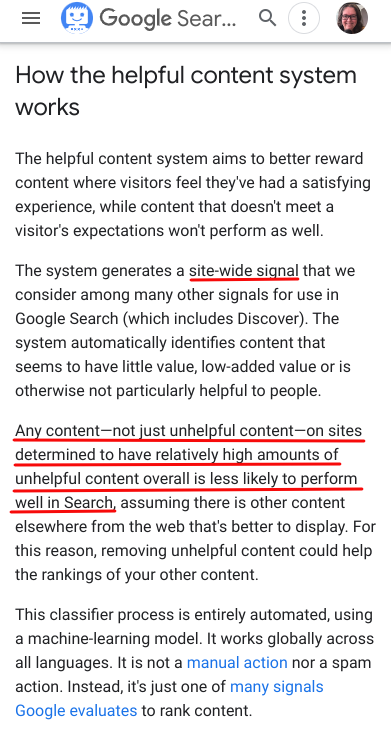

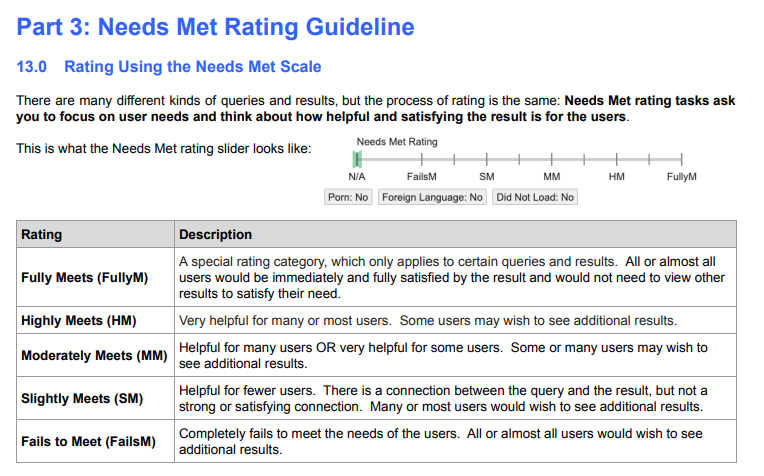

The helpful content system uses AI to generate a signal Google can use to identify content that has “little value, low-added value or is otherwise not particularly helpful to people.” This signal is considered along with many others in Google’s ranking process.

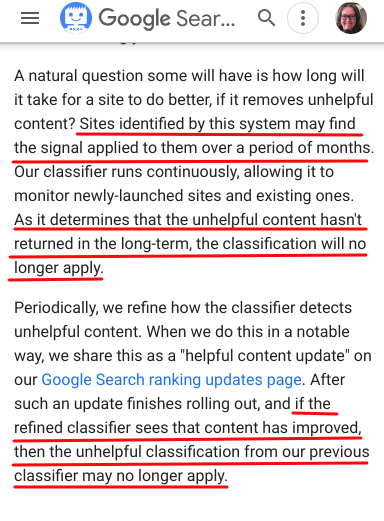

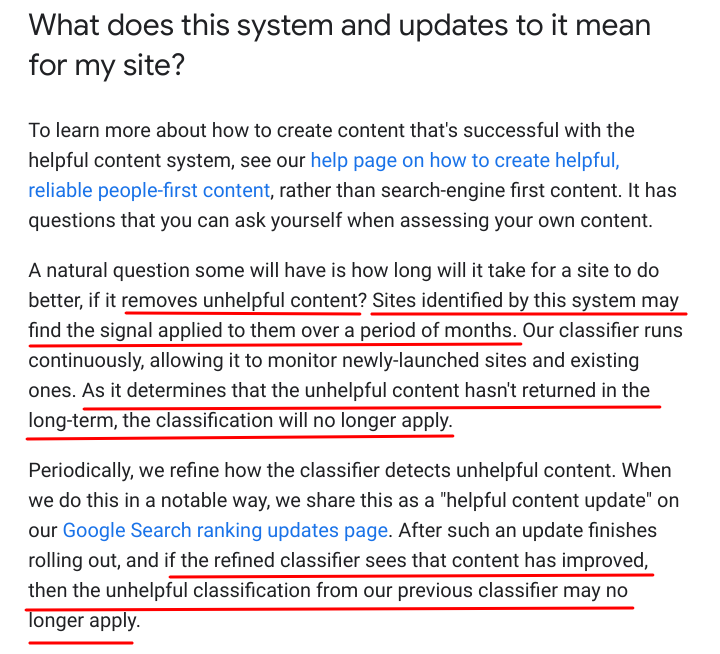

If your traffic has been declining, it might be the result of the Helpful Content System assessing a large proportion of your content as generally not the most helpful result when it comes to meeting the searcher’s primary need. This evaluation process runs continuously and impacts your site’s ranking over a period of months, meaning changes can occur at any time, not just in conjunction with announced Helpful Content System updates.

This classifier is essentially saying, “In general, content from this site is not the most helpful option for searchers when compared to others.” The presence of a high amount of unhelpful content on your site, as indicated by this classifier, could be a significant impediment that prevents your site from reaching its full ranking potential.
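Google has not published how page-level assessments roll up into a site-wide classification, so the following is purely a thought experiment in Python. It assumes each page has a predicted helpfulness score between 0 and 1, and that a site is flagged when a large enough share of its pages falls below a cutoff. The function name, the scores and both cutoffs are hypothetical.

```python
# Thought experiment only: Google has not disclosed how its classifier works.
# page_cutoff and site_cutoff are hypothetical values chosen for illustration.
def site_wide_unhelpful(page_scores, page_cutoff=0.5, site_cutoff=0.6):
    """Return True when the share of low-scoring pages reaches site_cutoff."""
    if not page_scores:
        return False
    unhelpful = sum(1 for score in page_scores.values() if score < page_cutoff)
    return unhelpful / len(page_scores) >= site_cutoff

# Hypothetical sites with made-up helpfulness predictions per URL
mostly_thin = {"/a": 0.9, "/b": 0.3, "/c": 0.2, "/d": 0.4, "/e": 0.1}
mostly_good = {"/a": 0.9, "/b": 0.8, "/c": 0.2, "/d": 0.7, "/e": 0.6}

flag_thin = site_wide_unhelpful(mostly_thin)   # 4 of 5 pages below the cutoff
flag_good = site_wide_unhelpful(mostly_good)   # 1 of 5 pages below the cutoff
print(flag_thin, flag_good)
```

The point of the sketch is the shape of the problem: under an assumption like this, a handful of strong pages does not offset a large body of weaker ones.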

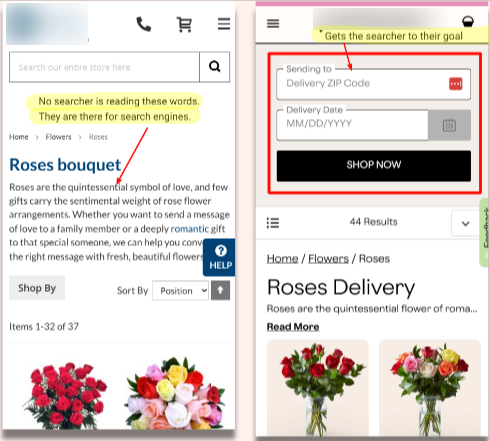

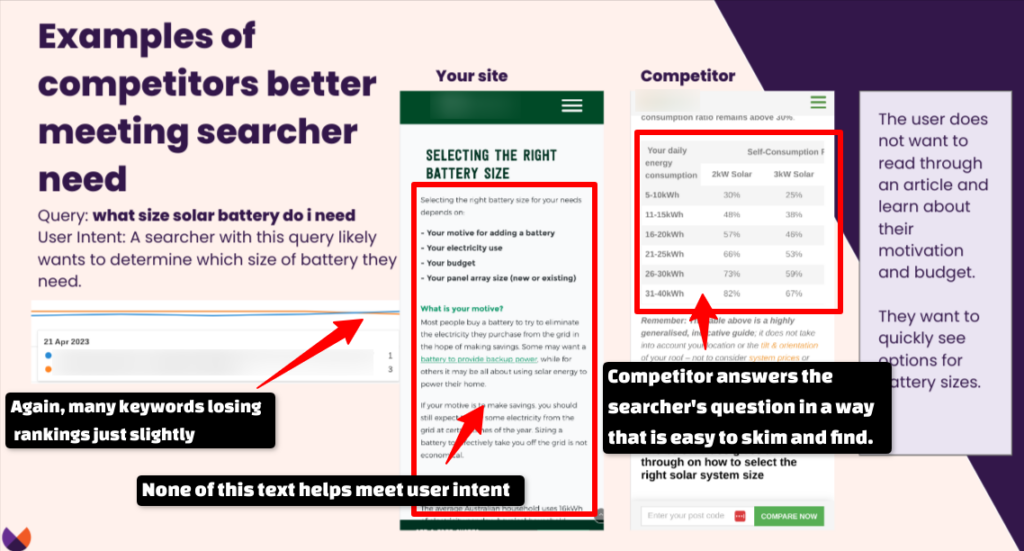

Each time I look at content Google’s algorithms started to prefer and compare it against the site that started to struggle, it is clearly more helpful at getting the searcher to their answer and meeting their need.

Perhaps they answer the searcher’s question faster, or get them to the product they want to buy more quickly. Maybe they have more helpful images and graphics or a table. Or, in many cases Google has started to surface a site from a business with real world experience which is offering a take on a topic that other sites did not. Often, the content that Google is preferring to rank has original insight and value beyond what else is available online.

Sometimes it’s hard to say why a page does well, other than it just seems to be better for the query somehow.

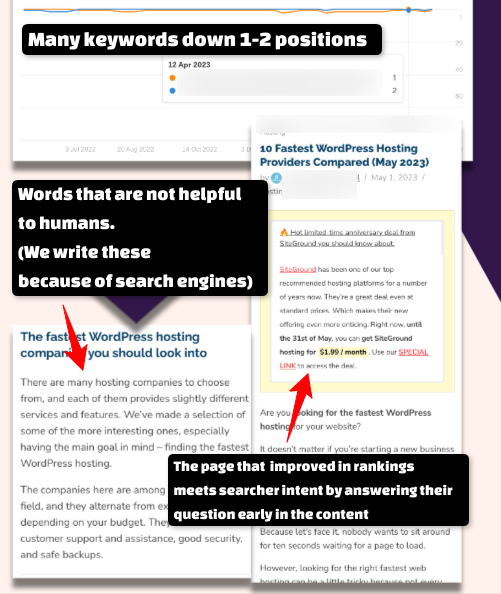

Here are some examples taken from recent site reviews I have done.

How Spam Detection Systems Contribute to Signal Generation

SpamBrain is an AI system that can not only identify attempts at manipulating search, but can now also generate, once again, a signal that identifies a site as one that tends to engage in schemes for ranking purposes.

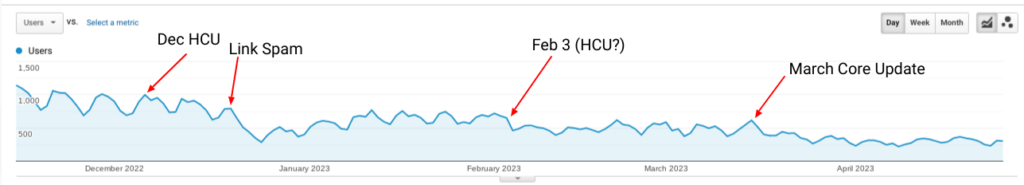

If your site has been in decline since December 14, it’s possible the December link spam update is to blame. With this update, SpamBrain got better at figuring out which sites have signals that are predictive of the site participating in manipulative ranking schemes.

This brings up many questions on which I have thoughts, but for which there are few published studies or concrete examples. Should you use Google’s disavow tool? In some cases, I would recommend yes, especially if you have been building links for SEO purposes. I can’t imagine a situation where it would be acceptable to have a spam classifier on your site.

For many sites, perhaps most that are impacted by a spam update, disavowing is unlikely to help. In many cases, the losses don’t mean the site has been classified as spam, but rather, sites linking to it have, causing their links to lose value.

However, it can be difficult to determine if a site’s losses started specifically during the link spam update or were related to changes to the helpful content system as both were running at the same time.

For example, this site below was possibly impacted by the December 14 link spam update. But losses tend to start in conjunction with the December 5 helpful content update. This is a common pattern.

This site had plenty of links pointing to it that were clearly made with the intent of manipulating rankings. In this case, I would recommend disavowing these links just in case they are sending signals to Google that the site participates in link schemes. But, my bet is that this site is unlikely to see improvement as the disavow won’t cause neutralized links to start counting again.

Some general thoughts on disavowing if you have possibly been impacted by Google’s spam systems:

- If you have built links for SEO purposes and you are seeing obvious declines in rankings and traffic starting December 14, 2022, it is worth considering using Google’s disavow tool to ask them to not count those links in their algorithms. You may have a spam classifier on your site. Good use of the disavow tool can possibly remove this classification.

- If you have done some link building in the past and are seeing decline mostly for the pages that were the target of those links, it’s possible that there is no spam classification on your site. Rather, the sites you got links from were detected as having patterns consistent with sites that exist for ranking purposes, and those links were neutralized. I do not recommend disavow work in these cases.

- If you are not sure whether to disavow, it is likely a much better use of time to focus on improving your content in the eyes of the helpful content system. Or you can consult with me, or have me refer you to a disavow specialist.
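If you do decide to disavow, the file Google’s disavow tool accepts is a plain text file with one entry per line: a full URL, or a whole domain prefixed with `domain:`, with lines starting with `#` treated as comments. Here is a small Python sketch that assembles such a file from a reviewed list. The function name and the example domains and URL are placeholders, not recommendations about what to disavow.

```python
# Minimal sketch: builds the text of a disavow file from links you have
# already reviewed by hand. All domains and URLs below are placeholders.
def build_disavow_file(domains, urls, note=""):
    """Return disavow file text: optional comment, domain: lines, then URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")
    for domain in sorted(set(domains)):
        lines.append(f"domain:{domain}")
    for url in sorted(set(urls)):
        lines.append(url)
    return "\n".join(lines) + "\n"

disavow_text = build_disavow_file(
    domains=["spammy-directory.example", "paid-links.example"],
    urls=["https://blog.example/guest-post-with-paid-link"],
    note="Links built for SEO purposes, reviewed manually",
)
print(disavow_text)
```

Disavowing a whole domain is usually simpler than listing individual URLs when every link from that site was built for the same purpose.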

How the Reviews System Rewards High-Quality Content

The reviews system is an AI machine learning system that assesses any content that is providing a recommendation by “giving an opinion, or providing analysis.” Any content…not just content reviewing products. It is a model that “aims to better reward high quality reviews, which is content that provides insightful analysis and original research and is written by experts or enthusiasts who know the topic well.”

I have seen several sites clearly impacted during the April 12 rollout of the reviews system that are not typical product review sites.

The reviews system evaluates content on a page level basis, but if you have a substantial amount of content evaluated by this system and found lacking, you can end up with a site-wide signal. If you were significantly impacted by a reviews update, such as the one Google ran on April 12, 2023, it is possible that Google’s machine learning algorithms have found your content to be less in line with their model of helpful review content than others.

The Intricacies of Google’s Core Updates

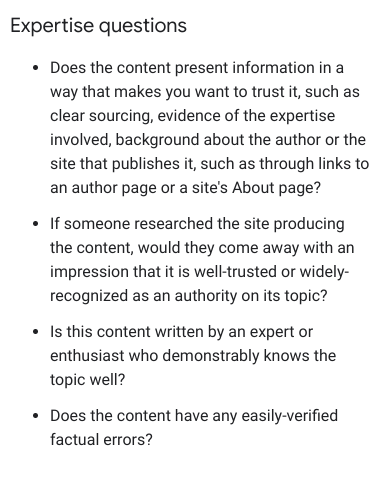

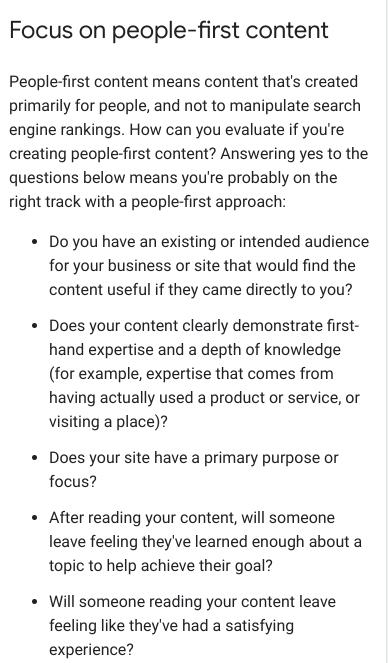

Google’s core updates aim to reward reliable and helpful content. They provide us with guidance on creating helpful, reliable people-first content, giving us a list of criteria to consider.

Here are a few of these criteria:

There are more as well. Seriously…I recommend thoroughly studying Google’s documentation on helpful content. These are the criteria they build their algorithms to recognize and reward.

Panda: The Genesis of Google’s Quality Models

We first saw a version of these criteria in Google’s Panda algorithm announcement in 2011. In that document Google says,

“These are the kind of questions we ask ourselves as we write algorithms that attempt to assess site quality.”

When Panda first rolled out, the SEO community recognized that sites with obviously thin content were most likely to be affected. But I don’t think many could predict that Google could one day use AI to create a model to determine the quality and helpfulness of a website’s content.

This was published by Google in 2011 re Panda:

“Our site quality algorithms are aimed at helping people find “high-quality” sites by reducing the rankings of low-quality content. The recent “Panda” change tackles the difficult task of algorithmically assessing website quality. Taking a step back, we wanted to explain some of the ideas and research that drive the development of our algorithms.

Below are some questions that one could use to assess the “quality” of a page or an article. These are the kinds of questions we ask ourselves as we write algorithms that attempt to assess site quality. Think of it as our take at encoding what we think our users want.

Of course, we aren’t disclosing the actual ranking signals used in our algorithms because we don’t want folks to game our search results; but if you want to step into Google’s mindset, the questions below provide some guidance on how we’ve been looking at the issue:

- Would you trust the information presented in this article?

- Is this article written by an expert or enthusiast who knows the topic well, or is it more shallow in nature?

- Does the site have duplicate, overlapping, or redundant articles on the same or similar topics with slightly different keyword variations?

- Would you be comfortable giving your credit card information to this site?

- Does this article have spelling, stylistic, or factual errors?

- Are the topics driven by genuine interests of readers of the site, or does the site generate content by attempting to guess what might rank well in search engines?

- Does the article provide original content or information, original reporting, original research, or original analysis?

- Does the page provide substantial value when compared to other pages in search results?

- How much quality control is done on content?

- Does the article describe both sides of a story?

- Is the site a recognized authority on its topic?

- Is the content mass-produced by or outsourced to a large number of creators, or spread across a large network of sites, so that individual pages or sites don’t get as much attention or care?

- Was the article edited well, or does it appear sloppy or hastily produced?

- For a health related query, would you trust information from this site?

- Would you recognize this site as an authoritative source when mentioned by name?

- Does this article provide a complete or comprehensive description of the topic?

- Does this article contain insightful analysis or interesting information that is beyond obvious?

- Is this the sort of page you’d want to bookmark, share with a friend, or recommend?

- Does this article have an excessive amount of ads that distract from or interfere with the main content?

- Would you expect to see this article in a printed magazine, encyclopedia or book?

- Are the articles short, unsubstantial, or otherwise lacking in helpful specifics?

- Are the pages produced with great care and attention to detail vs. less attention to detail?

- Would users complain when they see pages from this site?

Writing an algorithm to assess page or site quality is a much harder task, but we hope the questions above give some insight into how we try to write algorithms that distinguish higher-quality sites from lower-quality sites.”

Google’s Panda filter was catastrophic for many sites. We developed vague theories on recovery as an industry that essentially boiled down to “remove, consolidate or improve thin content.”

But few considered Google’s advice on improving quality as things that could legitimately be algorithmically measured. At the time, the SEO community could not have foreseen that AI could be used to fundamentally transform the way Google evaluates content quality. By incorporating machine learning models, Google’s algorithms can now interpret a myriad of data signals, learning to discern the helpfulness, relevance, and depth of the content, and adjusting rankings accordingly.

While we can theorize on how they do that, ultimately we know what the algorithms are designed to reward – content that aligns with Google’s helpful content guidance.

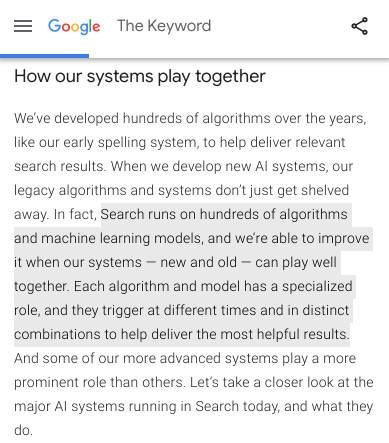

Search runs on hundreds of algorithms and machine learning models

From Google’s article on how AI powers their search results:

If you’ve got time, that article is worth a read. It goes into more depth on how a deep learning system called RankBrain is used to help rank websites. They call it, “one of the major AI systems powering Search Today”. It also discusses neural matching which uses AI to figure out what the concept is that the searcher is looking for and which content matches that concept. They called this a “major leap forward from understanding words to concepts.”

If you’re getting overwhelmed, it all boils down to this:

Search runs on hundreds of algorithms and machine learning models that generate signals to help determine whether pages are likely to be high quality and helpful.

The key to ranking is to consistently be the most helpful result. The key to being the most helpful result is to consider each of Google’s criteria on helpful content. These criteria are a summarized version of Google’s Quality Raters’ Guidelines.

Understanding the role of the Quality Raters in machine learning algorithms

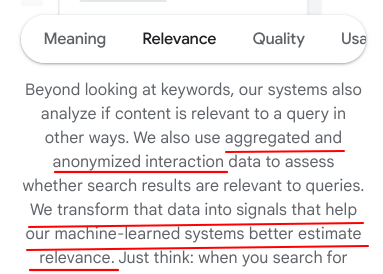

On Google’s “How Search Works” page they talk about employing machine learning systems to help them better estimate relevance.

Google says they use “interaction data”, data from people interacting with a search, to assess whether a search result is relevant. Then, they transform that data into signals. We mentioned above a few of the systems that generate signals including the helpful content and review systems.

What is this interaction data?

Google’s documentation on how the quality raters are used mentions two sources.

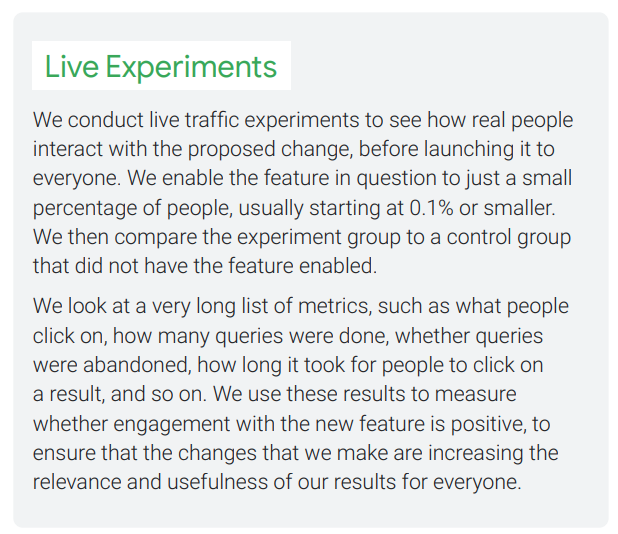

Interaction data: The Role of Live Searches

They get some data from live tests in search.

These live experiments can help the machine learning models determine whether the models are doing their job at better rewarding helpful content. If not, the systems can learn which characteristics can be given more weight in the algorithms to perform even better.

Google wants their models to be really good at recognizing when content is helpful to a searcher. To accomplish this, they need to show the model many examples of helpful and unhelpful results. The more examples they have, the better the model gets at predicting the right combination of factors to weigh to best measure quality.
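Learning weights from labeled examples can be shown at toy scale. The Python sketch below trains a tiny logistic regression with gradient descent on four pages labeled helpful (1) or unhelpful (0). It is not Google’s system. The two features, the labels and the training settings are all invented for illustration.

```python
import math

def sigmoid(z):
    """Squash any number into a 0..1 range so it reads as a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, weights, bias):
    """Predicted probability that a page with features x is helpful."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train(examples, labels, epochs=2000, lr=0.5):
    """Fit weights and bias by nudging them after every labeled example."""
    weights = [0.0] * len(examples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(examples, labels):
            error = predict(x, weights, bias) - y
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

# Hypothetical features per page: [answers the question early, share of padding text]
examples = [[1.0, 0.1], [1.0, 0.2], [0.0, 0.8], [0.0, 0.9]]
labels = [1, 1, 0, 0]  # 1 = labeled helpful, 0 = labeled unhelpful

weights, bias = train(examples, labels)
new_page_helpful = predict([1.0, 0.15], weights, bias)
new_page_padded = predict([0.0, 0.85], weights, bias)
print(new_page_helpful, new_page_padded)
```

Nobody tells the model which feature matters more. The labels do that work, which is why a large supply of well-labeled examples is so valuable.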

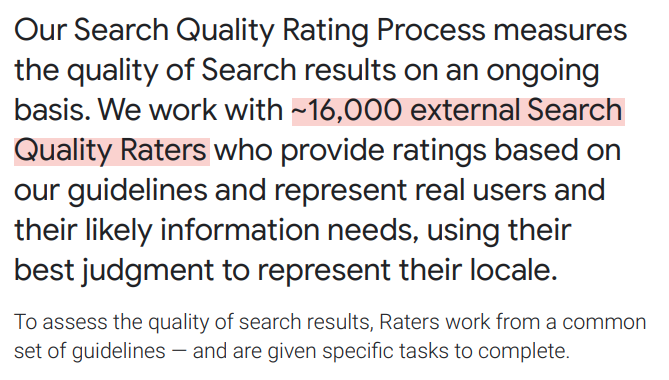

How does Google get loads of examples? They have an army of people who do this for them.

Who are these people?

Introducing the 16,000 Quality Raters.

Quality Raters: Labeling Pages as Helpful or Unhelpful

Again from Google’s documentation on the role of the QRG and the quality raters:

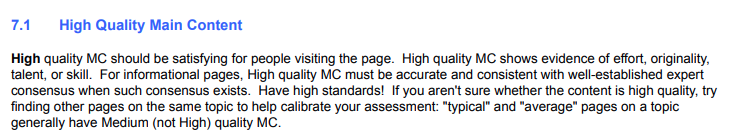

Google has a document that I believe every SEO should read a few times over, called the Quality Raters’ Guidelines (QRG), or Search Quality Evaluator Guidelines. It is essentially a textbook to help the raters understand what it is that makes content likely to be considered high quality and helpful by a searcher. These guidelines describe the type of content their AI models are designed to reward.

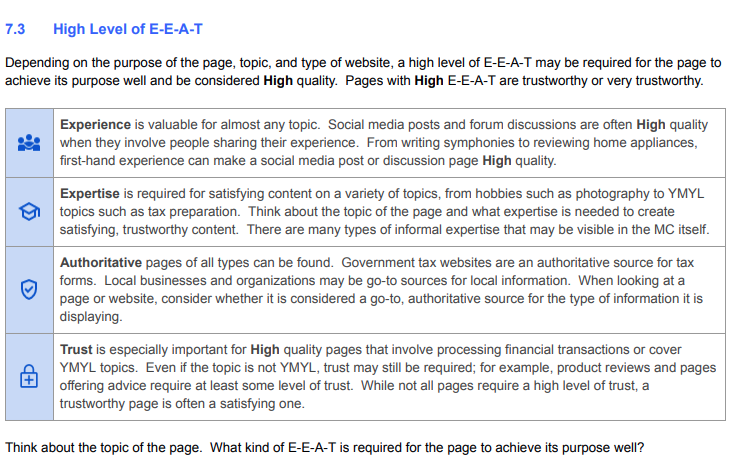

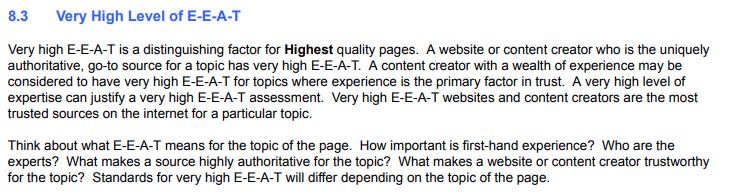

The helpful content criteria are a summarized version of the guidance suggested in the QRG. There are pages and pages of descriptions of high quality content in the QRG. Here are a few:

“Ratings are also used to improve search engines by providing examples of helpful and unhelpful results for different searches.”

Again, from Google’s documentation on the use of the quality raters:

Those labels of helpful and unhelpful are invaluable to Google’s machine learning algorithms. By categorizing search results as either ‘helpful’ or ‘unhelpful’, Quality Raters provide real-world, human feedback that the algorithms can learn from.

How is Page Helpfulness Determined?

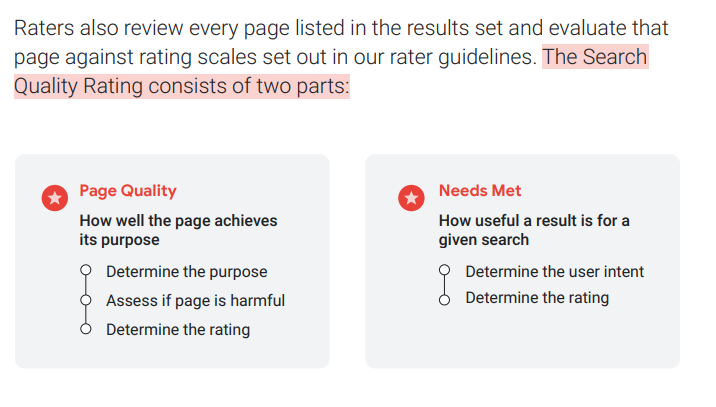

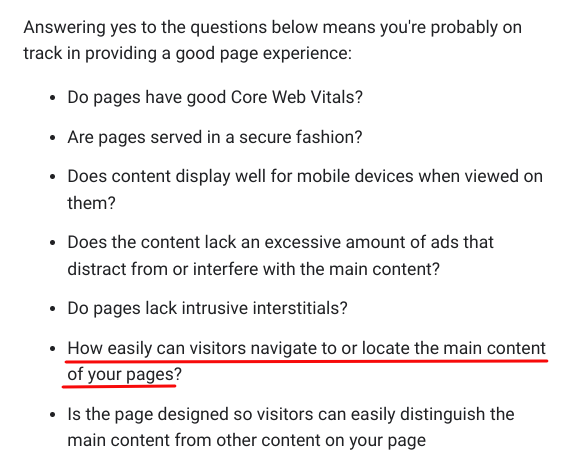

The raters use a rating scale that considers how well a page achieves its purpose at meeting searcher needs. For each page, they are to consider the quality of the page and also how useful it is in meeting the searcher’s needs.

Here is my definition of a high quality page:

A high quality page is one that understands the purpose of the user, and achieves that purpose without causing harm, offering a high-quality, user-friendly experience. It satisfies user intent by delivering useful, accurate content. It is a page that provides considerable value in response to a specific search query.

It’s not enough to just have a few good pages. Google says that having enough pages classified as unhelpful can cause your entire site to be suppressed – even your good pages.

Here’s more from Google on how pages are to be rated by quality raters:

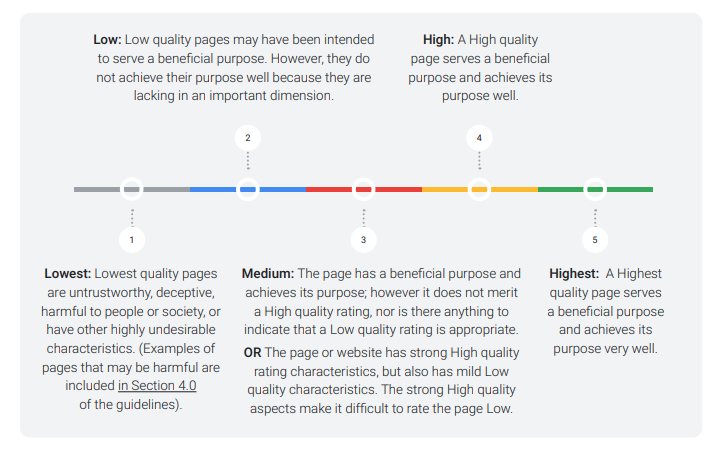

If you have seen declining search performance, it’s quite possible your content is sending signals to Google that it should be considered more in line with “medium” quality on the chart above than high. It’s beneficial. It achieves its purpose. But more often than not, it’s not likely to be the absolute best option to show searchers.

If most of your content fits this bill, you can find yourself dealing with a helpful content classifier. This classifier affects your entire site – not just the lower quality content.

For quite some time, a skilled SEO could accomplish a lot with on page SEO and link building to improve the ability to rank with medium quality content.

Today, machine learning models help Google decide which content is most aligned with the criteria people consider helpful. This makes it much harder to rank medium level content.

The Dual Pillars of Quality: E-E-A-T and Meeting User Needs

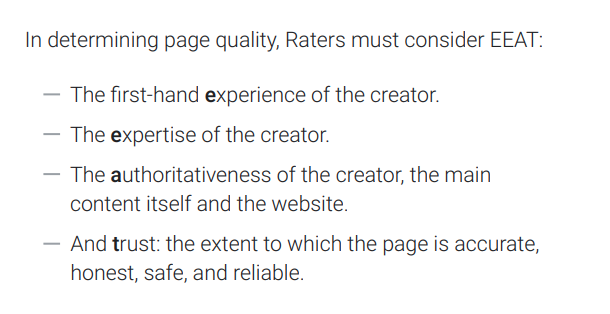

While the helpful content questions and the QRG give us loads of information to help us model quality content, it truly comes down to two things. E-E-A-T, and possibly even more important, how well your content meets the needs of searchers.

E-E-A-T: The Cornerstone of Quality

Quality is synonymous with E-E-A-T (Experience, Expertise, Authoritativeness and Trustworthiness). In the QRG, E-E-A-T and Page Quality are essentially the same thing.

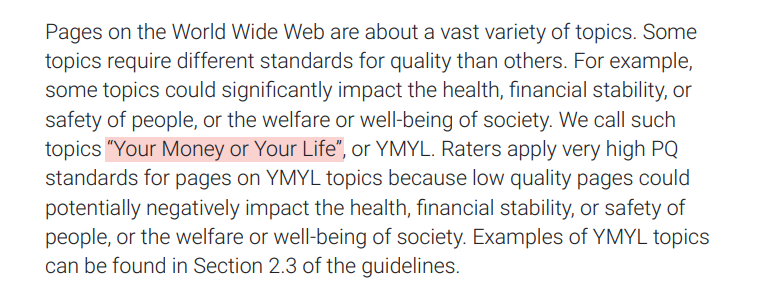

These criteria are even more important for pages that discuss topics such as health, finance, or other YMYL topics:

Google has told us that E-A-T, now with an added E as Google’s models are learning to better reward experience, is considered for every query.

But E-E-A-T is just part of the equation. Whether the searcher’s needs are likely to be met is also incredibly important. For years, many sites got an advantage in search by better demonstrating E-E-A-T. In most reviews I do now, while E-E-A-T can be improved upon, one of the most obvious shortcomings of pages that are struggling is that they don’t do as good a job at meeting the needs of searchers as competitors.

A huge component of Needs Met is Page Experience.

Page Experience: A Key Factor in Meeting User Needs

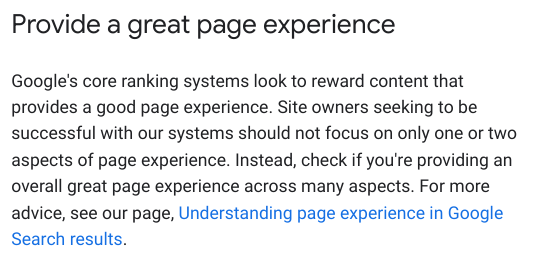

Here’s what Google says in their helpful content documentation:

They link to this documentation on understanding page experience, saying that if we want to be successful with their systems we should not focus only on one or two aspects of page experience, but instead, “check if you’re providing an overall great page experience across many aspects.”

While all of these factors can be considered when deciding on whether a page offers a good experience, I have underlined the line I believe is the most important:

I will leave you with some homework! Study Part 3 of the QRG: Needs Met Rating Guideline, especially the parts where it talks about fully meeting the needs of searchers.

One of the most important tips I can give when it comes to improving the helpfulness of your content is this:

- Determine what the searcher’s immediate need is

- Instead of padding the top of your article with words that humans are unlikely to read, meet that need as a top priority

So many of the pages I have analyzed that were impacted by the helpful content system have loads and loads of words that truly are only there to show search engines their content is relevant and not actually helpful to humans.

If you would like to go deeper in learning to understand user intent and improve your content to best meet their needs, you may find my helpful content workbook useful.

I created this workbook based on years of intensive study of Google’s algorithms. This book should help you create the kind of content users and Google consider the most helpful of its kind.

You’ll get a Google document you can copy and fill in. There are instructions to help you use the information in the rater guidelines to determine what user intent is and how best to meet that intent.

- Understanding Google’s helpful content system

- How to know if you are impacted

- What it takes to recover

- Thoroughly understanding user intent as described in great detail in the QRG

- Removing content

- Improving Page Experience

- Demonstrating E-E-A-T, especially experience

- The importance of popularity

- What to expect in terms of recovery

Checklists:

- Needs Met – these exercises will help you understand searcher need

- E-E-A-T / Content Quality improvement – We’ll walk through Google’s criteria on creating helpful content and discuss how to improve Google’s perception of your business, the people associated with it and its popularity and reputation.

- Page Experience – It’s about more than just core web vitals! A good page experience is about making it incredibly easy for users to find the answers they’re looking for.

- Ensuring you have people first content

- Additional ideas to consider for improving quality

Conclusion: Navigating the New Era of AI-Driven Search Rankings

While there are many AI related changes to the search industry on the horizon, we need to pay close attention to the ways in which Google already uses AI, in particular machine learning, to improve search result relevance and helpfulness.

If your traffic is declining and you can’t understand why, there is a good chance that Google’s AI driven systems are learning that your content is not usually the most helpful result to meet searcher need. You may have a classification placed on your site that is hindering your ability to rank.

By aligning with the ideals described in the rater guidelines, summarized in Google’s helpful content criteria, we can improve our chances of being considered a helpful and relevant result by Google’s ranking algorithms.

For sites dealing with an unhelpful content classification, in order to recover, you will need to remove unhelpful content, do more to align with Google’s helpful content criteria, and then wait for several months to hopefully get the classification lifted.

I do believe recovery is possible. I have worked with some sites that have indeed recovered organic traffic after working hard to improve quality. Several others are currently working towards that goal. I hope to produce some case studies soon, although with the number of changes made, such as removing pages created more for SEO purposes than for meeting searcher need and improving E-E-A-T on those that remain, it will be difficult to prove which led to removal of the helpful content classifier.

Key Questions for the SEO Industry in the Age of AI

There are many questions that I have thoughts on, but I would love to hear your opinion on these as well.

- Given Google is using AI to create models that predict whether content is likely to be helpful, are you adapting your SEO strategy to focus more on creating helpful, relevant content? How? For many this type of shift represents a significant increase in expense. It is hard to justify this, especially for sites with large amounts of medium quality content that may be struggling right now.

- Is there value in link building? I believe links are still an important component of E-E-A-T, but so many of the links SEOs are working hard to obtain are likely ignored by Google’s algorithms. Links are one of the many things considered when assessing quality. Google has years of experience when it comes to understanding which links should be used as ranking signals. If you can get good, authoritative links that truly are recommendations, these give Google a signal of quality. Links alone are not enough to make something helpful though.

- Is it worthwhile learning more on understanding how Google’s neural networks determine which content is relevant? I believe there is value to be gained by understanding more about sending signals of being topically relevant and authoritative. I think there is a lot that can be gained for some sites here…not by blackhat methods, but rather, by creating a body of truly helpful content relevant to your topics. I’d like to spend more time on understanding how we can improve in this area.

- How do we communicate these shifts in Google’s algorithms to our clients? Many of the things we do regularly for SEO clients may not be as important as truly producing helpful content. Having a fast, technically sound site is important, but often will not make content significantly more helpful. Some type of link acquisition work can help improve E-E-A-T. But if your content is medium quality and rarely the best option to show searchers, no amount of link building or technical fixes will improve that.

Thoughts?

This article has taken me over six months to write. Hopefully it has helped you understand more about Google’s helpful content system. I would love to hear your thoughts in the comments below.

If you need help assessing whether your site is impacted by Google’s helpful content system, after many months of working to understand the helpful content system I have once again opened up my options for site reviews. You can learn more here about working with me to brainstorm on improving site quality and recovering lost Google organic search traffic.

If you enjoyed this, you’ll love my newsletter

Since 2012 I’ve been learning all I can about how Google’s algorithms work and sharing that with my subscribers: