The past two months in Google Land have been one of the most volatile times I can remember in the search industry. Google released the August broad core update, then a powerful helpful content update in September, then the October broad core update, and it also sandwiched in a spam update that overlapped the October core update. Needless to say, it’s been a crazy and wild time for SEOs and site owners. As I’ve said many times before, Welcome To Google Land.

As the updates have been rolling out, I have spoken with countless site owners who have been impacted. During those conversations, I find myself explaining the same points and answering the same questions over and over. So, I finally decided to write a post covering some of the most important points that site owners need to understand about broad core updates, which are major algorithm updates that roll out several times per year. It’s based on my experience helping many companies work to recover from them. My hope is that the following information can help site owners who might not be familiar with broad core updates, including how they work, how sites can recover, and more.

This is a living document, and I’m sure I’ll add more key points as broad core updates evolve over time. Let’s begin (in no particular order).

Site-level quality algorithms:

I’ll start with a super-important topic for site owners to understand. Google has site-level quality algorithms that can have a big impact on how sites fare during broad core updates. This is why you can see massive surges or drops when broad core updates roll out. With broad core updates, Google is evaluating sites overall and not just on a URL-by-URL basis. So, don’t miss the forest for the trees… Focus on the big picture when improving your site. That’s how Google is looking at it…

The difference between relevancy adjustments, intent shifts, and quality problems:

If a site has been impacted negatively by a broad core update, then it’s important to understand the reason. I have covered this topic many times in my blog posts and presentations about broad core updates, and I find myself explaining it over and over again. Actually, I have an entire post covering the difference between relevancy adjustments, intent shifts, and quality problems that site owners should definitely read. To quickly summarize the post, a drop from a broad core update is NOT always due to quality problems. It could be relevancy adjustments or intent shifts as well. If that’s the case, then quality isn’t the problem on your end, and it’s not something you can really fix… Your content simply isn’t as relevant anymore for the query at hand, or Google is now ranking a different type of site for that query.

For relevancy adjustments, a good example is an article about a celebrity from two or three years ago that was ranking well and then drops during a broad core update because it isn’t as relevant anymore. For intent shifts, maybe a review site drops because Google is now ranking ecommerce sites instead. That’s on Google’s end, not yours. Don’t jump the gun and start nuking content or revamping your entire site until you know the cause of the drop.

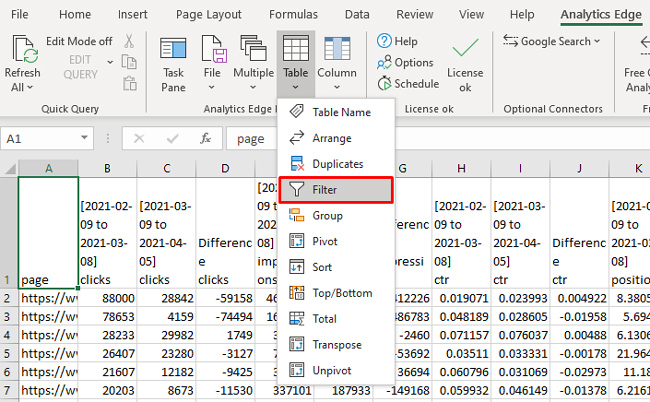

To start down that path, I highly recommend running a delta report to understand the queries and landing pages that dropped the most during a broad core update. Dig in and determine whether the drop was caused by a relevancy adjustment, an intent shift, or quality problems. Then take action (or not).
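A delta report can be as simple as comparing two exports of the same metric. The Python sketch below assumes you have exported clicks per query or per landing page (for example, from Search Console’s Performance report) for two equal-length periods, one before the update started rolling out and one after it completed, and loaded them into plain dictionaries. The function name `delta_report`, the input format, and the sample numbers are all hypothetical, made up for illustration.

```python
def delta_report(before, after, top_n=10):
    """Rank keys (queries or landing pages) by the largest drop in clicks.

    `before` and `after` map each key to its clicks for two equal-length
    periods (hypothetical input format, e.g. built from an export).
    Returns tuples of (key, before_clicks, after_clicks, delta, pct_change).
    """
    rows = []
    for key in set(before) | set(after):
        b = before.get(key, 0)
        a = after.get(key, 0)
        # Percent change is undefined for keys with no clicks before the update.
        pct = round((a - b) / b * 100, 1) if b else None
        rows.append((key, b, a, a - b, pct))
    # Biggest absolute loss first; tie-break on the key for stable output.
    rows.sort(key=lambda r: (r[3], r[0]))
    return rows[:top_n]


# Hypothetical example data for three landing pages.
before = {"/reviews/widget-a": 1200, "/news/celebrity-2021": 900, "/guides/setup": 400}
after = {"/reviews/widget-a": 300, "/news/celebrity-2021": 850, "/guides/setup": 450}

for row in delta_report(before, after, top_n=2):
    print(row)
```

Sorting by absolute loss surfaces the pages or queries that matter most for traffic, while the percentage column helps separate a small page that collapsed from a large page that dipped slightly. The report only tells you where to look; reviewing those top rows by hand is what tells you whether each drop looks like a relevancy adjustment, an intent shift, or a quality problem.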

“Content is king”, but “quality” is more than just content.

We have all heard the expression “Content is king” over and over again in SEO. Content quality is obviously critically important, but it’s more than just content when it comes to broad core updates. This is something I have been saying since the medieval Panda days, when I noticed UX was part of the equation: UX barriers; aggressive, disruptive, or deceptive advertising; deceptive affiliate setups; annoying popups and interstitials; and more.

And if you don’t believe me, then listen to Google’s John Mueller. In a webmaster hangout video from 2021, John explained that Google is evaluating a site overall with broad core updates. It’s more than just content. It covers UX issues, the advertising situation, how things are presented, sources, and more. This is just another reason I highly recommend using the “kitchen sink” approach to remediation. If you focus solely on the content and don’t take the entire user experience into account, then you could still end up in the gray area of Google’s algorithms (and see negative impact during broad core updates).

Thin doesn’t mean short. It’s about value to the user.

If quality is the problem, and you’re digging into content quality, then understand that thin doesn’t mean short. There are plenty of examples of pages with shorter content that can meet or exceed user expectations. It’s more about value to the user. Don’t just nuke a bunch of content because it’s short. Objectively evaluate if the content can meet or exceed user expectations.

Old does not mean low-quality:

Repeat after me. Older content does not mean low-quality content. There are many sites out there nuking older content thinking it’s thin. That’s not the case, and Google has even come out recently to reiterate that point. If older content is high quality, then it can be helping your site on multiple levels. It could have earned links and mentions over time, it could be helping with an overall quality evaluation, and more. This is an especially important topic for news publishers that might have very old content that isn’t receiving much traffic anymore. Don’t nuke that content if it’s high quality. Only remove it if it’s lower-quality or thin (if it can’t meet or exceed user expectations).

Broad core updates can impact other Google surfaces (beyond Web Search):

I find that some site owners focus only on web search when evaluating a drop from broad core updates, but other Google surfaces can drive a lot of traffic too. For example, image search, video search, the News tab in Search, Discover, Google News proper, etc. Google is on record that these other surfaces can be impacted by broad core updates, and that’s exactly what I have seen over the years while helping companies that have been heavily impacted. If other surfaces are important for your business, then make sure to check the trends for those surfaces when broad core updates roll out. This is yet another reason you don’t want to be on the wrong side of a broad core update.

Recovery From Broad Core Updates:

For sites that are heavily impacted by a broad core update, you can typically only see recovery with another broad core update. Google has explained this a number of times, and it’s what I have seen many times while helping companies in the field. In addition, Google is on record explaining that its algorithms want to see significant improvement over the long term in order for a site to recover. So, a site might need to wait for the next core update, or even another (or another). I’ve seen severe situations where it took over a year for a site to recover after implementing significant improvements across content, UX, the ad situation, affiliate setup, technical problems causing quality problems, and more.

I said “typically” earlier because there are times when sites have recovered from a broad core update hit outside of a broad core update. I’ll cover how that can happen next.

Google could decouple algorithms from broad core updates and run them separately.

At the webmaster conference in Mountain View in 2019, I asked Google’s Paul Haahr if Google could roll out broad core updates more frequently (since many site owners were working hard to recover and they only roll out a few times per year). He explained that Google does so much evaluation in between core updates that it can be a bottleneck to rolling them out more frequently. But, Paul did explain that they could always decouple algorithms from broad core updates and run them separately. And we have seen that several times over the years.

For example, the September 2022 broad core update hammered several prominent news publishers (like CNN, The Wall Street Journal, The Daily Mail, etc.). When the core update completed, the news publishers were still down. But four weeks later, an unconfirmed update rolled out and those news publishers came roaring back. It was a great example of Google decoupling an algorithm (or several) from broad core updates and running them separately.

Remediation and the “Kitchen Sink” Approach:

Now that I’ve covered how “quality” is more than just content, I can say more about remediation if quality is the problem. It’s incredibly important to understand that Google wants to see significant improvement over the long term, and that Google is evaluating a site overall with broad core updates. That means you should NOT cherry-pick changes… Instead, you should surface all potential quality problems and address as many as you can.

And since you can’t really recover until another broad core update (or more) rolls out, being too selective with the changes you implement could leave you stuck in the gray area (and never recovering). But you won’t know how close you are to recovering… I’ve always said that’s a maddening place for site owners to live. This is why it’s important to objectively analyze your site through the lens of broad core updates and surface as many issues as you can across content, UX, ads, affiliate setup, technical SEO problems causing quality issues, and more. Then fix as many as you can. I’ve often said there’s never one smoking gun with broad core update drops… there’s typically a battery of them. I’ve seen that across many large-scale and complex sites I have helped over the years.

“Quality indexing”: An incredibly important topic for site owners.

I have covered this topic many times in my blog posts and presentations about broad core updates. Google is on record explaining that every page indexed is taken into account when evaluating quality. This is why it’s super-important to understand quality across the entire site and focus on what I call “quality indexing”. That’s making sure only your highest-quality content is indexable, while ensuring low-quality or thin content is not indexed.

Don’t just look at content recently posted, look at all of your content. It’s not uncommon for me to find large pockets of low-quality or thin content throughout a large-scale and complex site. The ratio matters. Don’t look past this point.
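One way to keep an eye on that ratio is to tag every URL during a content audit and then compute what share of indexable pages you judged to be low quality, both overall and per site section (which helps surface those large pockets). The Python sketch below is a minimal illustration under stated assumptions: the `Page` record, the `low_quality` verdict (which comes from your own audit, not from Google), grouping by first path segment, and the sample URLs are all hypothetical. Google has not published a threshold for this ratio, so treat the number only as a way to track your own progress over time.

```python
from collections import Counter
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class Page:
    url: str
    indexable: bool    # from your own crawl data (e.g. not noindexed)
    low_quality: bool  # verdict from your own content audit (assumption)


def section_of(url):
    """Group URLs by first path segment: '/tag/widgets' -> 'tag', '/' -> '/'."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return segments[0] if segments else "/"


def quality_indexing_report(pages):
    """Return (overall ratio, per-section ratio) of low-quality indexable pages."""
    indexable = [p for p in pages if p.indexable]
    if not indexable:
        return 0.0, {}
    total = Counter(section_of(p.url) for p in indexable)
    low = Counter(section_of(p.url) for p in indexable if p.low_quality)
    overall = sum(low.values()) / len(indexable)
    # Counter returns 0 for sections with no flagged pages.
    by_section = {section: low[section] / count for section, count in total.items()}
    return overall, by_section


# Hypothetical audit results for a tiny site.
pages = [
    Page("https://example.com/guides/setup", True, False),
    Page("https://example.com/guides/install", True, False),
    Page("https://example.com/tag/widgets", True, True),
    Page("https://example.com/tag/gadgets", True, True),
    Page("https://example.com/search?q=widgets", False, True),  # already noindexed
]
overall, by_section = quality_indexing_report(pages)
print(overall, by_section)
```

In this made-up example, every indexable page in the `tag` section is flagged, which is the kind of pocket worth improving, consolidating, or keeping out of the index, while the guides section looks fine. Only indexable pages count toward the ratio, since content that is already noindexed is not part of what gets indexed.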

Machine learning systems at play (and what that means):

Google is using machine learning with major algorithm updates like broad core updates, helpful content updates, and reviews updates, and it’s very important to understand what that means. First, you are never going to reverse engineer a broad core update… ever. With machine learning systems, Google is potentially sending thousands of signals to the machine learning system, which determines the weighting of those signals and, ultimately, rankings. This is why improving a site overall is the way to go.

Bing’s Fabrice Canel explained this in 2020 when describing how Bing’s core ranking algorithm works. He explained that even the engineers don’t understand the specific weighting of signals, since the machine learning system calculates the weighting. So, work to improve overall, surface all potential quality problems, and try to fix as many as you can. Use the “kitchen sink” approach to remediation. It’s a smart way to go.

It’s nearly impossible to test for broad core updates.

When speaking with site owners who have been heavily impacted by broad core updates, I often hear something like, “We’ve run many tests, but nothing has worked…” When I ask more about those tests, they are often short-term tests of very specific things. In my opinion, the site owners didn’t implement enough changes, address enough problems, or keep the right changes in place long enough. Then they rolled back the changes they did implement after just a few weeks (even if some were the right changes to implement!)

This is NOT how it works.

Again, Google is evaluating a site overall and over the long term. You cannot perform short-term testing for broad core updates. My recommendation is to always implement the right changes for users and keep those changes in place over the long term. That’s how you can recover from a broad core update hit. Otherwise, you are essentially playing an SEO game of whack-a-mole with little chance of succeeding.

Rich snippets can be impacted:

This is a quick, but important point. Rich snippets can be lost during broad core updates based on Google’s reevaluation of quality. For example, it’s not uncommon for sites to lose review snippets if heavily impacted by a broad core update. It’s basically Google saying your site isn’t high quality enough to receive that treatment in the SERPs. The good news is that you can get them back if you do enough to increase quality over the long-term.

Recent changes aren’t reflected in broad core updates.

When I speak with companies that reach out after being impacted by a broad core update, they sometimes believe that a change they implemented right before the update caused the drop. That’s probably not the case. Google has explained in the past that with larger algorithm updates like broad core updates, it evaluates a site over an extended period of time, so recent changes wouldn’t be reflected in those updates. If you implemented a change a week or two before the update, it’s highly unlikely that change caused a problem from a broad core update standpoint.

You’re probably too close to your own site. Run a user study.

I wanted to end with an incredibly important point that finds its way into almost all of my presentations and blog posts about broad core updates. I highly recommend running a user study through the lens of broad core updates. I wrote an entire post covering the power of user studies, and Google even linked to that post from its own post about core updates. It’s super-powerful to gain feedback from objective users trying to accomplish tasks on your site.

And I’m not referring to your spouse, children, or friends running through your site. I’m referring to selecting objective people from a user panel who fit your core demographics, then having them try to accomplish tasks on your site and provide feedback. The questions you ask could be based on the original Panda questions Google provided, questions from Google’s post about broad core updates, questions based on the Quality Rater Guidelines, the helpful content documentation, and more.

And although running a user study sounds like an obvious step to take, I find most sites do not run one for one reason or another. I don’t understand why this happens… It seems everyone is excited about running a study, but few actually execute it. Again, you can read my post about running a user study through the lens of broad core updates to learn more about how that works, what you can learn, and more. I highly recommend setting up a study (or several).

Summary: Playing the long game with broad core updates.

I hope this post helped you learn more about how Google’s broad core updates work. Based on my experience, it’s important to play the long game with core updates, since Google is evaluating a site’s quality over the long term. If you have been negatively impacted by a broad core update due to quality problems, then understand that you need to significantly improve the site over time. And if you were impacted heavily, you can’t really recover until another broad core update rolls out (and only if you have done enough to recover). So, work to surface any problem that could be causing quality issues, fix as many as you can, and continue to improve the site over time. That’s your best path forward for recovering from a broad core update hit.

Again, I’ll update this post with more information over time. Until the next broad core update, good luck.

GG
