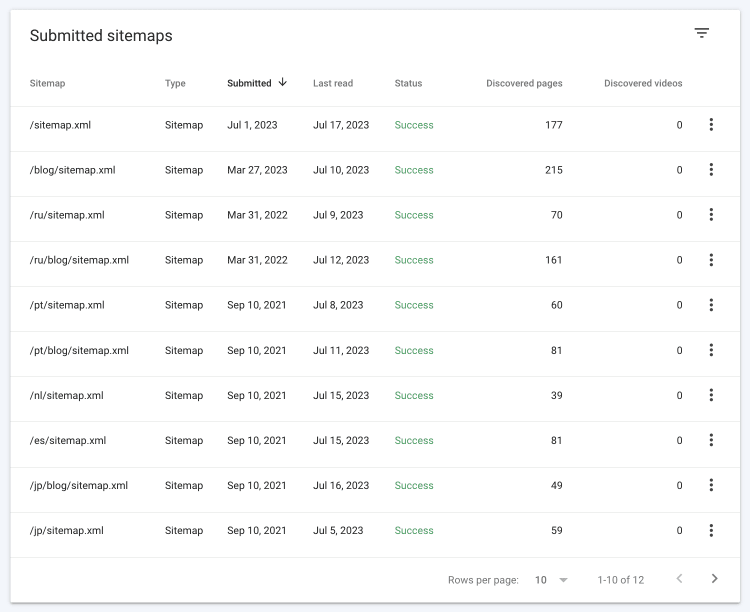

So you just created a sitemap and submitted it to Google. Congratulations! But wait, your sitemap status isn’t Success. Or maybe your Sitemaps report looks fine, but the Page Indexing report shows that Google has ignored your polite request and won’t index a good chunk of the pages from your sitemap. Now you’re wondering if there’s anything you can do to improve your indexing stats.

Look no further because you’ll find all your answers in this post.

If you don’t have a sitemap yet and want to learn how to make one that shines, take a look at our introductory sitemapping crash course. You’ll learn about the benefits of having a sitemap and discover several sitemap best practices. The guide is worth reading either way, especially if you don’t yet know what the <loc> and <lastmod> tags are used for, or if you’re unfamiliar with video sitemaps or sitemap index files.

The first part of this post lists all the potential errors you may encounter in your Google Search Console’s Sitemaps report. If you’re troubleshooting specific issues, use the table of contents to jump to the errors you’re focused on.

The second chapter of this post features insights to help you make the most of your sitemap. These insights include:

- How to find trash pages in your sitemap.

- Where to find missing pages that you may have failed to include in your sitemap file.

- How to encourage Google to index more of your sitemap pages.

It’s highly recommended that you carefully study the second chapter of this post. Following these best practices can lead to a more efficient crawling and indexing process, which can ultimately raise your site’s visibility on Google.

Fixing Sitemaps report errors

Once you submit your sitemap to Google, the Status column shows whether Google processed the file successfully. If your file follows all the rules, its status should be Success. In this chapter, we’ll discuss the two other statuses: Couldn’t fetch and Has errors.

Google has issues crawling your sitemap file

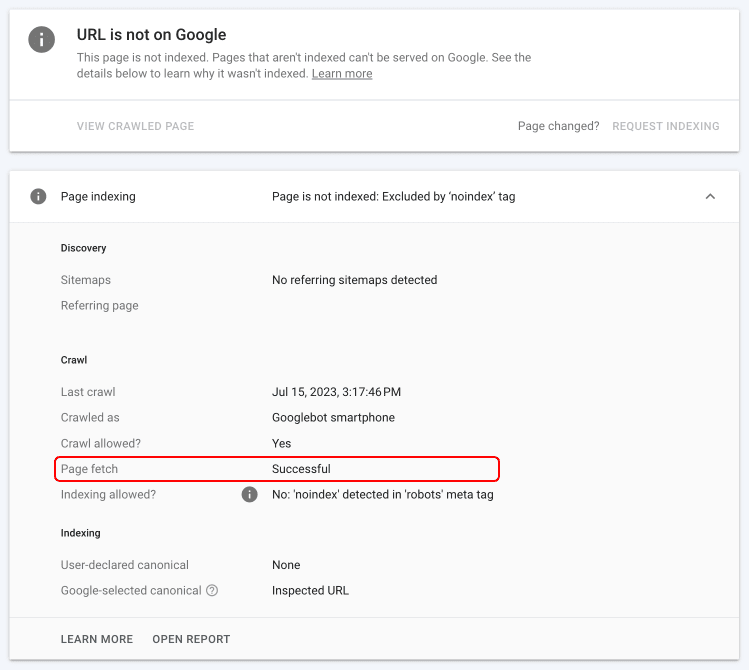

Let’s start with the most difficult scenario: Google can’t fetch your sitemap file. When this happens, you need to use the URL Inspection tool to find the source of the problem.

In the URL Inspection tool, enter your sitemap URL, click the Test live URL button, and check the Page fetch status. If it says Successful, the problem is most likely on Google’s side, and you should contact Google Support.

When reporting the issue to Google’s support team, provide relevant details, including the sitemap URL and any error messages or observations you’ve made. This will help them assist you further and guide you toward a fix.

If there’s no bug on Google’s end and your sitemap still can’t be fetched, make sure nothing is blocking Google from accessing it. Robots.txt directives and even CMS plugins are common culprits. Also, double-check that you’ve entered the correct sitemap URL, paying attention to the protocol (http vs https) and the www prefix.

These techniques apply to both individual sitemaps and sitemap index files. Now, let’s look at how to address some of the most common XML sitemap issues.

Sitemap index file errors

Sometimes, Google may fetch your submitted file and detect errors.

When you use a sitemap index file, Google must process every sitemap listed in it to reach your website’s URLs. If Google fails to process the URLs listed in the sitemap index file, you may get an Invalid URL in sitemap index file error. This usually means that Google can’t find one or more of your sitemaps because of incomplete URLs or typos. Every URL pointing to an individual sitemap in your index file should be fully qualified (for example, https://www.example.com/sitemap-1.xml rather than /sitemap-1.xml); otherwise, Google won’t be able to find it.

Also, your sitemap index file should list only sitemaps, not other sitemap index files. If you list them anyway, you’ll get an Incorrect sitemap index format: Nested sitemap indexes error.

The last error we’ll look at is Too many sitemaps in the sitemap index file. It can occur on huge websites that list more than 50,000 sitemaps in a single index file.
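If you’d like to catch these index-file problems before Google does, a short Python script can parse the index with the standard library’s xml.etree.ElementTree. This is a minimal sketch that assumes the file uses the standard sitemaps.org namespace. The function names are hypothetical. It flags relative <loc> values and indexes with more than 50,000 entries. Nested indexes can only be spotted by fetching each listed file, so the sketch includes a helper you could run on every child file you download.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
INDEX_TAG = "{" + NS["sm"] + "}sitemapindex"
MAX_SITEMAPS = 50_000  # per-index limit mentioned above


def is_fully_qualified(url):
    """True for absolute http(s) URLs such as https://example.com/sitemap-1.xml."""
    parts = urlparse(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


def check_sitemap_index(xml_text):
    """Return a list of problems found in a sitemap index file."""
    root = ET.fromstring(xml_text)
    if root.tag != INDEX_TAG:
        return [f"root element is {root.tag!r}, not sitemapindex"]

    locs = [(el.text or "").strip() for el in root.findall("sm:sitemap/sm:loc", NS)]
    problems = [f"not a fully qualified URL: {loc!r}"
                for loc in locs if not is_fully_qualified(loc)]
    if len(locs) > MAX_SITEMAPS:
        problems.append(f"too many sitemaps: {len(locs)} > {MAX_SITEMAPS}")
    return problems


def is_sitemap_index(xml_text):
    """Run on each downloaded child file: True means it is a nested index."""
    return ET.fromstring(xml_text).tag == INDEX_TAG
```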

Sitemap size and compression errors

Size limits apply to both sitemap index files and individual sitemaps. A sitemap file shouldn’t exceed 50 MB uncompressed, and it shouldn’t list more than 50,000 URLs in <loc> tags (alternate-language URLs in xhtml:link elements don’t count). If you exceed these limits, you’ll get a Sitemap file size error.

When including localized versions of pages in your sitemap, it’s important to understand how Google counts URLs. According to Google’s John Mueller, Google counts only <loc> entries as individual URLs in a sitemap. This means that even if a page has multiple xhtml:link elements for different language versions, it still counts as one URL toward the sitemap size limit.

You should also be aware that Google counts duplicate <loc> URLs in a sitemap as one. Google may not treat duplicates as an error, but you should still keep your sitemap free of them: duplicates won’t get your website indexed any faster, but they do add clutter and needlessly increase the sitemap file’s size.

Your sitemap shouldn’t be huge, but it shouldn’t be empty either. If you submit an empty sitemap, you’ll get an Empty sitemap error.
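You can check the counting rules above locally before submitting. The sketch below is an illustration, not Google’s own logic. It assumes a standard <urlset> file already loaded as bytes and counts only <url><loc> entries, so xhtml:link alternates are ignored. It reports empty files, duplicate <loc> URLs, and files over 50,000 URLs or over 50 MB. That limit is taken here as 52,428,800 bytes, the figure sitemaps.org gives. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET
from collections import Counter

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 52,428,800 bytes, uncompressed


def check_sitemap_limits(xml_bytes):
    """Return size, emptiness, and duplicate problems in a <urlset> file."""
    problems = []
    if len(xml_bytes) > MAX_BYTES:
        problems.append(f"{len(xml_bytes)} bytes uncompressed exceeds {MAX_BYTES}")

    root = ET.fromstring(xml_bytes)
    # Only <url><loc> entries count; xhtml:link alternates are not counted.
    locs = [(el.text or "").strip() for el in root.findall("sm:url/sm:loc", NS)]

    if not locs:
        problems.append("sitemap is empty")
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs exceeds the {MAX_URLS} limit")

    duplicates = sorted(url for url, count in Counter(locs).items() if count > 1)
    if duplicates:
        problems.append(f"duplicate <loc> URLs: {duplicates}")
    return problems
```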

Learn how to split sitemaps into several files by reading our ultimate sitemap guide.

Earlier in this article, we mentioned that a sitemap should be under 50 MB uncompressed. Compressing sitemaps to save bandwidth is common practice, though. The usual tool for this is gzip, which adds the .gz extension (for example, sitemap.xml.gz). Keep in mind that the 50 MB limit still applies to the uncompressed file. If you get a compression error in the Google Search Console report, something went wrong during compression, so your best bet is to compress the file again.
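Here is a minimal way to compress a sitemap with Python’s built-in gzip module. It also confirms that the archive decompresses back to the original bytes before you upload it, which guards against a broken archive. The function name is an assumption made for this example, as is the choice to reject files over 50 MB up front.

```python
import gzip

MAX_UNCOMPRESSED_BYTES = 50 * 1024 * 1024  # the 50 MB limit applies before compression


def compress_sitemap(xml_bytes):
    """Gzip a sitemap and verify the archive round-trips before you upload it."""
    if len(xml_bytes) > MAX_UNCOMPRESSED_BYTES:
        raise ValueError("sitemap exceeds 50 MB uncompressed; split it first")
    compressed = gzip.compress(xml_bytes)
    # Decompress again to catch a broken archive before Google does.
    if gzip.decompress(compressed) != xml_bytes:
        raise RuntimeError("gzip round-trip check failed")
    return compressed
```

You would then save the returned bytes as, for example, sitemap.xml.gz and submit that URL.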

Google has issues crawling your sitemap URLs

Google may not be able to crawl some of the URLs you listed on your sitemap for a few different reasons. Let’s take a look at some of the most common ones.

- Sitemap contains URLs that are being blocked by robots.txt. This error is fairly self-explanatory, especially since GSC points you to each blocked URL. Depending on whether you want these URLs indexed, either lift the block or remove them from your sitemap.
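You can pre-check your sitemap’s URLs against robots.txt with Python’s urllib.robotparser, which helps you decide whether to lift a block or drop a URL. The same check works for the sitemap URL itself. Treat this as a rough sketch: the standard-library parser applies the first matching rule in file order and doesn’t support Google’s * and $ path wildcards. The Search Console report remains the authoritative list. The function name is hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocked_sitemap_urls(robots_lines, urls, user_agent="Googlebot"):
    """Return the URLs that robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_lines)  # lines of an already-downloaded robots.txt
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

To get robots_lines, you could read your robots.txt file and split it into lines.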

Other errors, such as URLs not accessible, URLs not followed, and URLs not allowed, are less obvious. Let’s briefly go through each of them.

- The URLs not accessible error means that Google has found your sitemap at the designated location but couldn’t fetch some of the URLs on your list. When this happens, use the URL Inspection tool. The procedure is the same as when Google can’t fetch your sitemap at all.

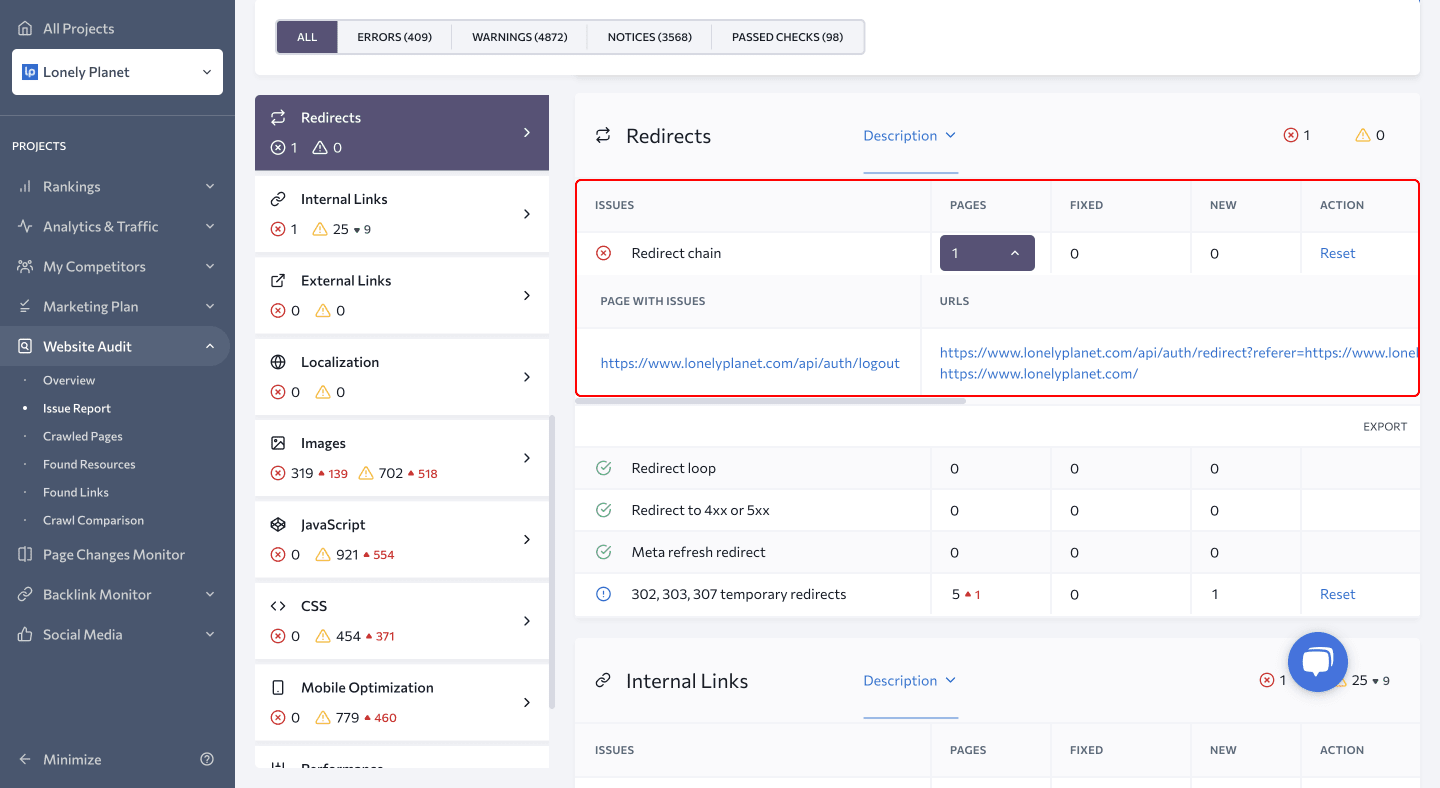

- The URLs not followed error occurs either because your sitemap uses relative URLs instead of fully qualified ones, or because of redirect issues. Common causes include redirect chains and loops, temporary redirects used instead of permanent ones, and HTML or JavaScript redirects.
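Here is a sketch of how a redirect audit works under the hood. It follows one sitemap URL’s redirect chain and flags relative URLs, temporary redirects, chains, and loops. To keep it self-contained, it makes no real HTTP requests. Instead, it reads responses from a hypothetical responses dictionary that maps each URL to its status code and Location header. It can’t detect HTML or JavaScript redirects, which only show up in the page body.

```python
from urllib.parse import urljoin, urlparse

PERMANENT = {301, 308}
TEMPORARY = {302, 303, 307}


def audit_redirects(start_url, responses, max_hops=10):
    """Report redirect problems for one sitemap URL.

    responses is a hypothetical stand-in for real HTTP fetches: it maps each
    URL to a (status_code, location_header_or_None) tuple.
    """
    if not urlparse(start_url).scheme:
        return ["relative URL: use a fully qualified URL"]

    issues = []
    chain = [start_url]
    while len(chain) <= max_hops:
        status, location = responses[chain[-1]]
        if status not in PERMANENT | TEMPORARY:
            break  # reached a non-redirect response
        if status in TEMPORARY:
            issues.append(f"temporary {status} redirect at {chain[-1]}")
        target = urljoin(chain[-1], location)  # Location headers may be relative
        if target in chain:
            issues.append("redirect loop")
            return issues
        chain.append(target)
    else:
        issues.append(f"more than {max_hops} redirects")

    hops = len(chain) - 1
    if hops > 1:
        issues.append(f"redirect chain of {hops} hops")
    elif hops == 1:
        issues.append(f"redirects to {chain[1]}; list that URL instead")
    return issues
```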

Try not to keep redirected URLs in your XML sitemaps longer than necessary. Google’s John Mueller has stressed this point more than once, saying that old URLs should be included temporarily rather than long term. At one point, he suggested removing them within six months, but more recently he has recommended keeping them in the sitemap for no more than three months.

Including redirected URLs in the sitemap is a useful strategy, but its overall impact is limited. That’s why you should periodically review and update your XML sitemap so it contains only relevant, current URLs. Remove redirected URLs once the recommended window of one to three months has passed.
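If you record when each redirect went live, you can script this cleanup step. The sketch assumes a hypothetical mapping from each old URL to its redirect date. It uses a 90-day cutoff, the upper end of the window above. Adjust the cutoff to whatever schedule you follow.

```python
from datetime import date, timedelta

MAX_AGE = timedelta(days=90)  # assumed cutoff: roughly three months


def redirects_to_drop(redirect_dates, today=None):
    """Return old URLs whose redirect has been live longer than MAX_AGE.

    redirect_dates is a hypothetical record mapping each redirected URL
    in the sitemap to the date its redirect went live.
    """
    today = today or date.today()
    return sorted(url for url, went_live in redirect_dates.items()
                  if today - went_live > MAX_AGE)
```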

Google Search Console will not specify the exact cause of the problem, so you’ll have to use other tools to figure out which issues need to be fixed. For example, Site Audit by SE Ranking has a dedicated Redirects section that can help you check your website for any redirect problems.

If the tool finds any issues, click the number of affected pages to see the details of each error. The report shows which pages have the error and how they’re linked to other pages on the website.

- The URLs not allowed error indicates that your sitemap lists URLs at a higher level than the sitemap file itself or on a different domain. For example, if your sitemap is located at yoursite.com/category1/sitemap.xml and you’ve added a page located at yoursite.com/page1, Google won’t accept that URL from this sitemap.

Speaking of different domains, be cautious as Google treats HTTP and HTTPS, as well as www and non-www versions of your site, as distinct entities. If you recently switched to HTTPS, make sure to generate a new sitemap with HTTPS URLs.
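You can check the location and domain rules with a few lines of Python. This sketch follows the sitemaps.org rule that a sitemap may only list URLs in its own directory or below, using the same protocol and host as the sitemap. The function name is hypothetical. The sketch ignores cross-site setups, such as sitemaps referenced from another host’s robots.txt.

```python
from urllib.parse import urlparse


def sitemap_scope_problem(sitemap_url, page_url):
    """Return why page_url can't be listed in sitemap_url, or None if it can."""
    sitemap, page = urlparse(sitemap_url), urlparse(page_url)
    if sitemap.scheme != page.scheme:
        return "protocol differs"  # e.g. an http:// page in an https:// sitemap
    if sitemap.netloc.lower() != page.netloc.lower():
        return "host differs"  # e.g. example.com vs www.example.com
    # Directory that holds the sitemap, e.g. /category1/ for /category1/sitemap.xml.
    base = sitemap.path.rsplit("/", 1)[0] + "/"
    if not (page.path or "/").startswith(base):
        return f"outside {base}"
    return None
```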

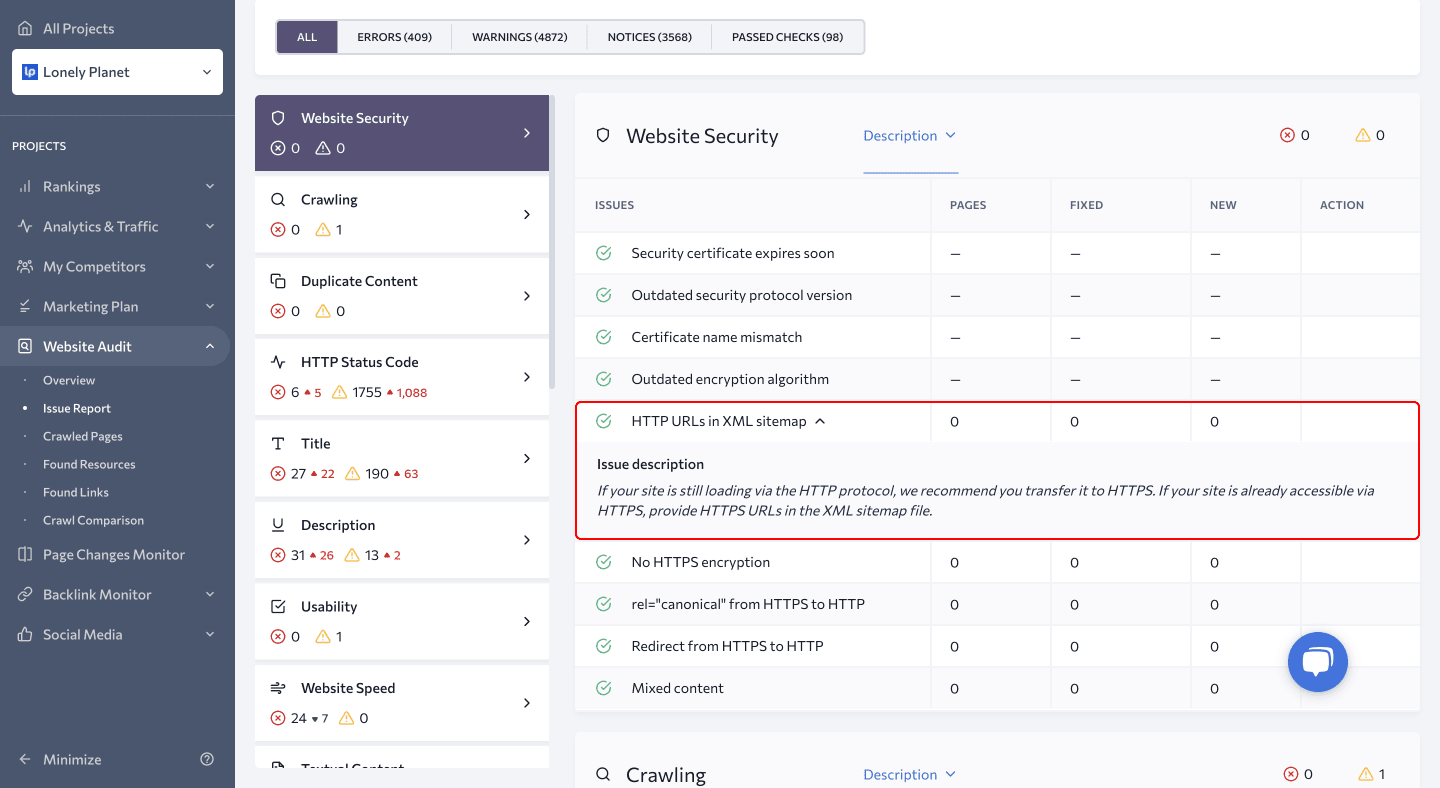

SE Ranking’s Website Audit tool will also warn you when these instances happen.

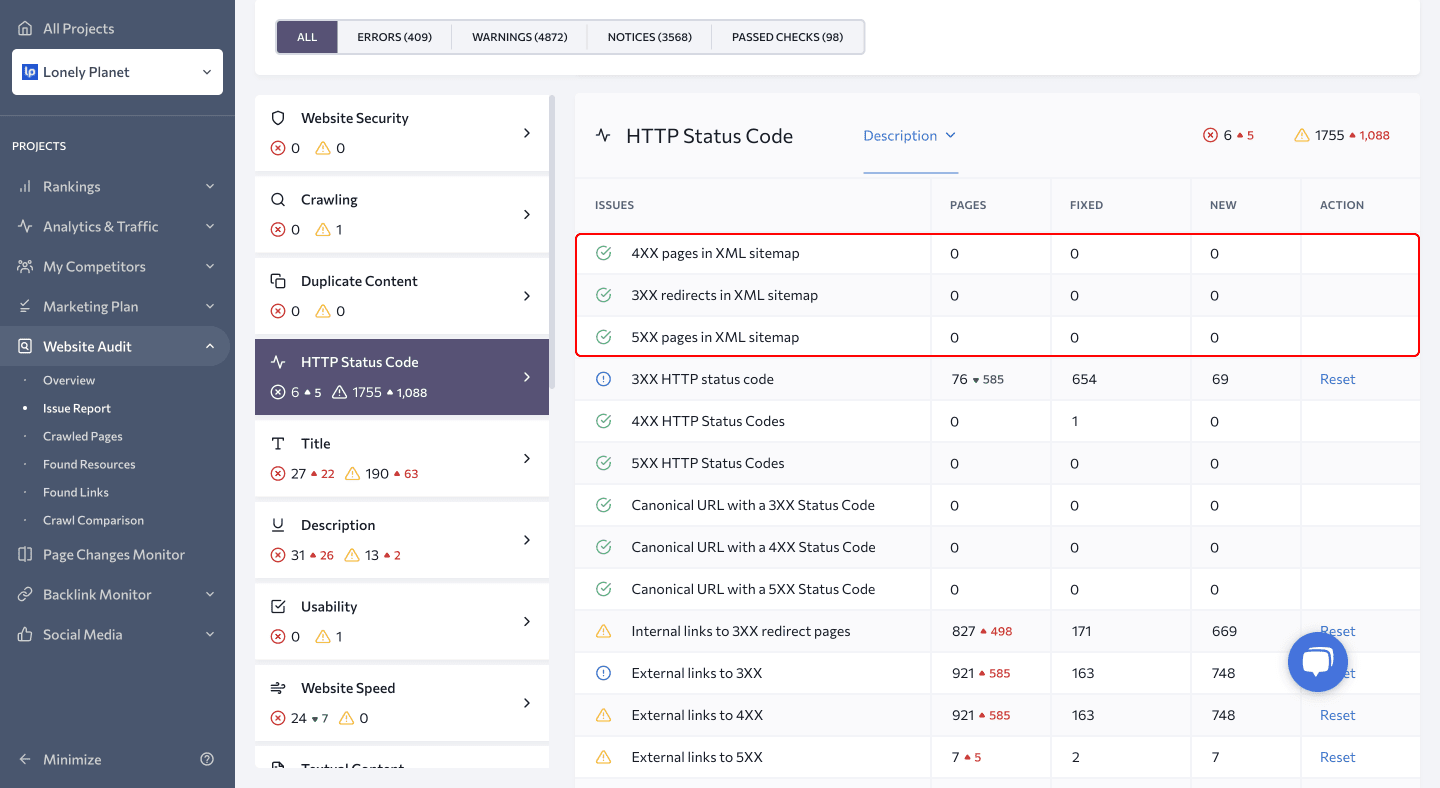

- Finally, one more thing can prevent Google from crawling a page: a non-200 HTTP status code. This error is labeled HTTP error in the Google Search Console report, and the exact status code is specified for each instance. You can find all the necessary information in the HTTP section of SE Ranking’s Website Audit.
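For both the URLs not accessible and HTTP error cases, a quick script can tell you which status code each sitemap URL returns. This minimal sketch uses Python’s urllib. It deliberately doesn’t follow redirects, so a 301 is reported as 301 rather than as the status of its target. It sends a plain GET without Googlebot’s user agent, so servers that treat crawlers differently may answer differently. The function names are hypothetical.

```python
import urllib.error
import urllib.request


class NoRedirect(urllib.request.HTTPRedirectHandler):
    """Surface 3xx responses as HTTPError instead of following them."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


OPENER = urllib.request.build_opener(NoRedirect)


def fetch_status(url, timeout=10):
    """Return the status code for url, or None if the host can't be reached."""
    try:
        with OPENER.open(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 3xx (not followed), 4xx and 5xx end up here
    except OSError:
        return None  # DNS failure, refused connection, timeout, etc.


def non_200_urls(urls, fetch=fetch_status):
    """Map every URL that doesn't answer 200 to the status it returned."""
    results = {}
    for url in urls:
        status = fetch(url)
        if status != 200:
            results[url] = status
    return results
```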

Google suspects you’ve listed the wrong URLs

Keep thin content and soft 404 pages out of your sitemap, as including them can hurt your website’s SEO:

- Thin content refers to pages that offer limited or duplicate content and provide little value to users. To find such pages, combine manual reviews with data analysis. For example, you can use Google Analytics to spot pages with low engagement rates and minimal traffic, as they may be thin-content candidates. Once you’ve identified them, you have three options: noindex these pages, improve their content, or remove them from your website entirely.

- Soft 404 pages tell visitors that the page doesn’t exist but return a “200 OK” status code instead of “404 Not Found”, misleading both search engines and users. To identify these pages, go to Google Search Console’s Page Indexing report, where soft 404 pages are listed among the pages not indexed by Google. Review these pages closely and take appropriate action. If a page truly doesn’t exist, make it return a proper 404 or 410 status code. If the page does exist and you want Google to index it, improve its content and then resubmit it for indexing.
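For a first pass before opening the Page Indexing report, a simple heuristic can flag pages that return 200 but look like error pages or carry almost no text. The phrase list and the 50-word threshold below are arbitrary assumptions for illustration. Google’s own soft 404 detection is more sophisticated, so treat any hits as candidates for manual review.

```python
import re
from html.parser import HTMLParser

# Illustrative phrases and threshold; tune them for your site's templates.
NOT_FOUND_PHRASES = ("page not found", "no longer available", "does not exist")
MIN_WORDS = 50


class PageText(HTMLParser):
    """Collect text that sits outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._hidden_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._hidden_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._hidden_depth:
            self._hidden_depth -= 1

    def handle_data(self, data):
        if not self._hidden_depth:
            self.chunks.append(data)


def soft_404_signals(status, html):
    """Return reasons a 200 page might be a soft 404 (empty list = no signals)."""
    if status != 200:
        return []  # real error codes are not soft 404s
    parser = PageText()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.chunks).lower()

    signals = [f"mentions {phrase!r}" for phrase in NOT_FOUND_PHRASES if phrase in text]
    word_count = len(re.findall(r"\w+", text))
    if word_count < MIN_WORDS:
        signals.append(f"only {word_count} words of text")
    return signals
```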

Syntax-based sitemap errors

You typically won’t have to worry about syntax-based sitemap errors if you generate your sitemap with a dedicated tool, since such tools handle tags and attributes properly. Still, if you created your sitemap manually, you may encounter one of the following issues:

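- Incorrect namespace. The namespaces declared in your <urlset> tag should be among the supported ones. Currently, the following namespaces are used:

| Sitemap type | Namespace declaration |
| --- | --- |
| News sitemaps | xmlns:news="http://www.google.com/schemas/sitemap-news/0.9" |
| Video sitemaps | xmlns:video="http://www.google.com/schemas/sitemap-video/1.1" |
| Image sitemaps | xmlns:image="http://www.google.com/schemas/sitemap-image/1.1" |
| hreflang sitemaps | xmlns:xhtml="http://www.w3.org/1999/xhtml" |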

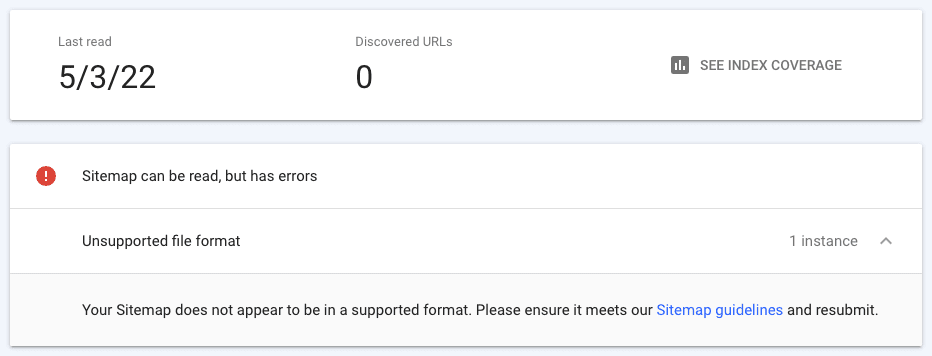

- If you used the wrong namespace for your sitemap, you’ll get the Unsupported format error. Other syntax errors can also cause it. Examples include using curly quotation marks (only straight single or double quotes are accepted) and omitting the encoding declaration, such as <?xml version="1.0" encoding="UTF-8"?>.

There are also several video-sitemap-specific errors: Thumbnail too large/small, Video location and play page location are the same, Video location URL appears to be a play page URL. Find more details on these errors here.
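Two of these video errors come down to comparing URLs in the file. The sketch below reflects our reading of them, not Google’s exact rules. It flags <video:content_loc> or <video:player_loc> values identical to the page’s <loc> (the play page). It also flags content_loc values that don’t end in a common video file extension. The extension list is an assumption and deliberately incomplete. Thumbnail size checks are left out because they require downloading the images.

```python
import posixpath
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = {
    "sm": "http://www.sitemaps.org/schemas/sitemap/0.9",
    "video": "http://www.google.com/schemas/sitemap-video/1.1",
}
# Assumed, non-exhaustive list of video file extensions.
MEDIA_EXTENSIONS = {".mp4", ".m4v", ".mov", ".webm", ".mpg", ".mpeg", ".wmv", ".avi", ".flv"}


def check_video_locations(xml_bytes):
    """Flag video entries whose media or player URL looks like the play page."""
    problems = []
    root = ET.fromstring(xml_bytes)
    for url in root.findall("sm:url", NS):
        page = url.findtext("sm:loc", default="", namespaces=NS).strip()
        for video in url.findall("video:video", NS):
            content = video.findtext("video:content_loc", default="", namespaces=NS).strip()
            player = video.findtext("video:player_loc", default="", namespaces=NS).strip()
            for name, value in (("content_loc", content), ("player_loc", player)):
                if value and value == page:
                    problems.append(f"{page}: {name} is the same as the play page")
            extension = posixpath.splitext(urlparse(content).path)[1].lower()
            if content and extension not in MEDIA_EXTENSIONS:
                problems.append(f"{page}: content_loc does not look like a media file")
    return problems
```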

To make sure your XML sitemaps are accurate and properly structured, check them for syntax errors and common sitemap mistakes. One of the most convenient ways to do this is with an XML sitemap validator like this one. Such tools generate a comprehensive report, highlight problematic sections or lines of code, and suggest how to fix common sitemap errors.
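For a quick check of the basics yourself, a small script can cover the syntax problems described above. It checks XML well-formedness; curly quotes around attribute values, for instance, make the file unparseable. It also checks the root element’s namespace, flags unknown namespaces, and looks for the encoding declaration. This is a minimal sketch, not a full validator. It assumes the base namespace http://www.sitemaps.org/schemas/sitemap/0.9 plus the four extension namespaces from the table, and it doesn’t validate against the official XSD schemas. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
EXTENSION_NS = {
    "news": "http://www.google.com/schemas/sitemap-news/0.9",
    "video": "http://www.google.com/schemas/sitemap-video/1.1",
    "image": "http://www.google.com/schemas/sitemap-image/1.1",
    "xhtml": "http://www.w3.org/1999/xhtml",
}
KNOWN_NS = {SITEMAP_NS, *EXTENSION_NS.values()}
ROOT_TAGS = {"{" + SITEMAP_NS + "}urlset", "{" + SITEMAP_NS + "}sitemapindex"}


def validate_sitemap_syntax(xml_bytes):
    """Return basic syntax problems; an empty list means these checks passed."""
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError as err:  # e.g. curly quotes around attribute values
        return [f"not well-formed XML: {err}"]

    problems = []
    if root.tag not in ROOT_TAGS:
        problems.append(f"unexpected root element {root.tag}")

    # Collect every namespace used by an element, e.g. "{uri}loc" -> "uri".
    used = {el.tag[1:].split("}", 1)[0] for el in root.iter() if el.tag.startswith("{")}
    for ns in sorted(used - KNOWN_NS):
        problems.append(f"unknown namespace {ns}")

    head = xml_bytes[3:] if xml_bytes.startswith(b"\xef\xbb\xbf") else xml_bytes  # skip BOM
    declaration = head.split(b"?>", 1)[0] if head.startswith(b"<?xml") else b""
    if b"encoding=" not in declaration:
        problems.append('missing encoding declaration, e.g. <?xml version="1.0" encoding="UTF-8"?>')
    return problems
```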

Once you’ve fixed all the sitemap errors listed in your GSC report, resubmit your updated sitemap in the Sitemaps report. You may come across advice to “ping” Google by sending a GET request with your sitemap URL. However, Google deprecated its sitemap ping endpoint in 2023, so pinging no longer works.

Resubmitting the sitemap and keeping your <lastmod> values accurate are the ways to flag that the file has changed, although neither guarantees faster crawling or indexing.

To learn the ins and outs of website indexing, read this complete guide.

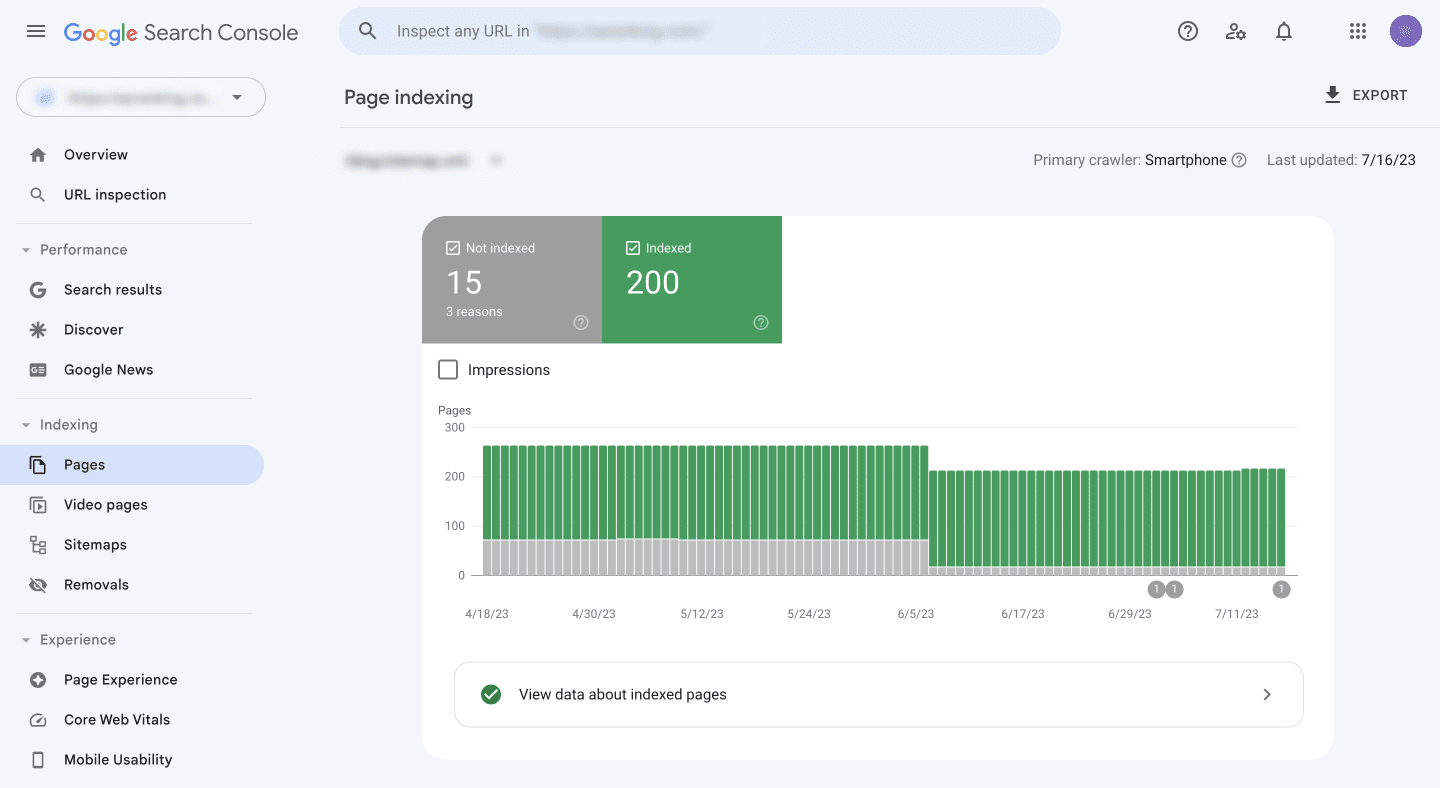

Balancing the submitted URLs vs indexed URLs ratio

Your sitemap or sitemap index file status may say Success, but that doesn’t mean your work is done. Click the See Page Indexing button next to the number of discovered URLs to open the corresponding report. Once you start investigating, you may find that not all of the pages you submitted were indexed.

When monitoring the indexing status of your website’s pages in Google Search Console, you can use the sitemap filter feature to easily switch between sitemaps and page categories.

To access this feature, navigate to the Page Indexing report in Google Search Console, select the Sitemap filter, and then choose the desired category or sitemap you want to examine. This is where you can view the following reports:

- All known pages: includes all pages discovered by Google.

- Submitted pages: lists pages submitted via your sitemap.

- Unsubmitted pages: highlights pages that Google has found but were not submitted via your sitemap.

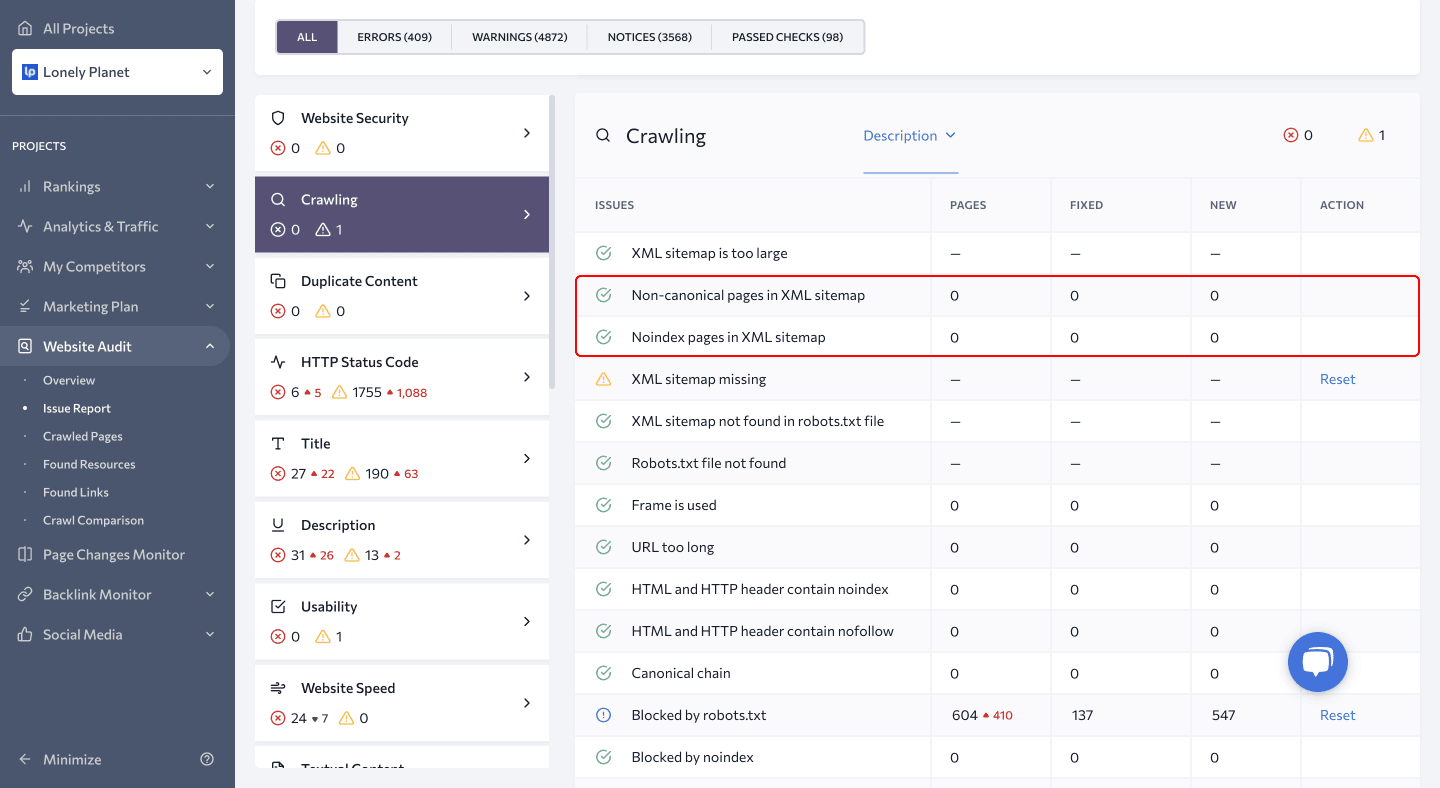

Now, it’s not only okay but common to have pages excluded from indexing, because Google doesn’t index every page it discovers. Many websites have pages that webmasters don’t want indexed, such as admin areas, utility pages, duplicates, and alternate versions of pages. If Google isn’t indexing your sitemap pages, you’ve likely added pages that shouldn’t be in your sitemap. For example, Google won’t index a page that carries a noindex directive. It may also be unsure whether you want a page indexed at all when you add non-canonical pages to your sitemap.

Each of these cases appears in a different section of the Google Search Console’s Page Indexing report. However, it’s more convenient to check them with SE Ranking’s Website Audit tool, which shows crawling issues in the Crawling section of the Issue report.

To resolve the non-indexed pages issue, remove noindex and non-canonical pages from your sitemap. Alternatively, if pages were marked as noindex or non-canonical by mistake, correct those tags so the pages can be indexed.
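To find these pages in bulk, you can scan each sitemap URL’s HTML for a robots meta tag and a rel="canonical" link. This sketch uses Python’s html.parser. It assumes you’ve already downloaded the HTML and, optionally, the X-Robots-Tag response header. The function name is hypothetical. It compares URLs as exact strings, which is a simplification, so trailing slashes or parameter order can produce false positives.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class IndexingSignals(HTMLParser):
    """Collect robots meta directives and the first rel=canonical href."""

    def __init__(self):
        super().__init__()
        self.robots = []
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() in ("robots", "googlebot"):
            self.robots.append(attrs.get("content", "").lower())
        elif (tag == "link" and self.canonical is None
              and "canonical" in attrs.get("rel", "").lower().split()):
            self.canonical = attrs.get("href", "")


def sitemap_conflicts(page_url, html, x_robots_tag=""):
    """List reasons a sitemap URL sends mixed signals (noindex or non-canonical)."""
    parser = IndexingSignals()
    parser.feed(html)
    parser.close()

    issues = []
    if "noindex" in " ".join(parser.robots + [x_robots_tag.lower()]):
        issues.append("noindex")
    if parser.canonical:
        canonical = urljoin(page_url, parser.canonical)  # resolve relative hrefs
        if canonical != page_url:  # exact string comparison (a simplification)
            issues.append(f"canonicalized to {canonical}")
    return issues
```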

Once you’re sure your sitemap is not sending confusing signals to Google, go through the Page Indexing report to find instances where you and Google disagree on the value of a page.

- In the Indexed tab, you can find pages that Google has successfully crawled and indexed. To access this list, click View data about indexed pages below the chart on the report’s summary page. Keep in mind that this report lists only 1,000 URLs, so not all pages may be included. For more detailed data on a specific URL, select it from the list, or paste it into the search bar at the top of the page and click the Inspect URL button. This will give you additional insight into how Google perceives and treats that URL.

At the bottom of the page, you’ll find the Improve page appearance section, which lists indexed pages that could benefit from improvements. Pay close attention to pages that were indexed despite having a noindex directive. In such cases, Google’s judgment is likely accurate, and you should consider removing the noindex tag from these pages or reviewing your X-Robots-Tag HTTP header settings. You may also want to add these pages to your sitemap, since Google considers them valuable. Also watch out for duplicate pages that were indexed but aren’t in your sitemap; these often result from poor pagination and parameter handling.

- In the Not Indexed tab, you’ll find pages that Google couldn’t index due to various reasons. These could include indexing errors or intentional exclusions, such as pages blocked by robots.txt, old 404 pages, or pages with noindex or canonical tags.

The reasons for URLs not being indexed are listed in the Why pages aren’t indexed table, which displays the status, source, and number of affected pages. Take the time to thoroughly review each case. Pay particular attention to canonical pages that Google chose not to index, as the search engine may believe there are better alternatives on your website. If Google’s assessment is correct, consider fixing your canonical tags. If you still believe the page should be indexed, focus on improving its content, backlink profile, and internal linking to convince Google that it is more valuable than other options.

After resolving an issue, you can ask Google to validate the fix by clicking the Validate fix button in the issue report.

It’s recommended to take a closer look at all of these pages and then see what you can do to increase their value—work on content, user experience, internal linking, and more.

Conclusion

Creating a sitemap is easy, thanks to the wide variety of sitemap generators available. Still, if you pick a random tool and ignore sitemapping best practices, you may end up submitting loads of low-quality pages to Google via your sitemap.

We hope this guide has helped you fix every error in your Google Search Console Sitemaps report. We recommend keeping only juicy, high-quality pages in your sitemap and removing any pages that may give search engines a bad impression. If you have any remaining questions, don’t hesitate to reach out to us via our live chat or get in touch with us on Facebook.