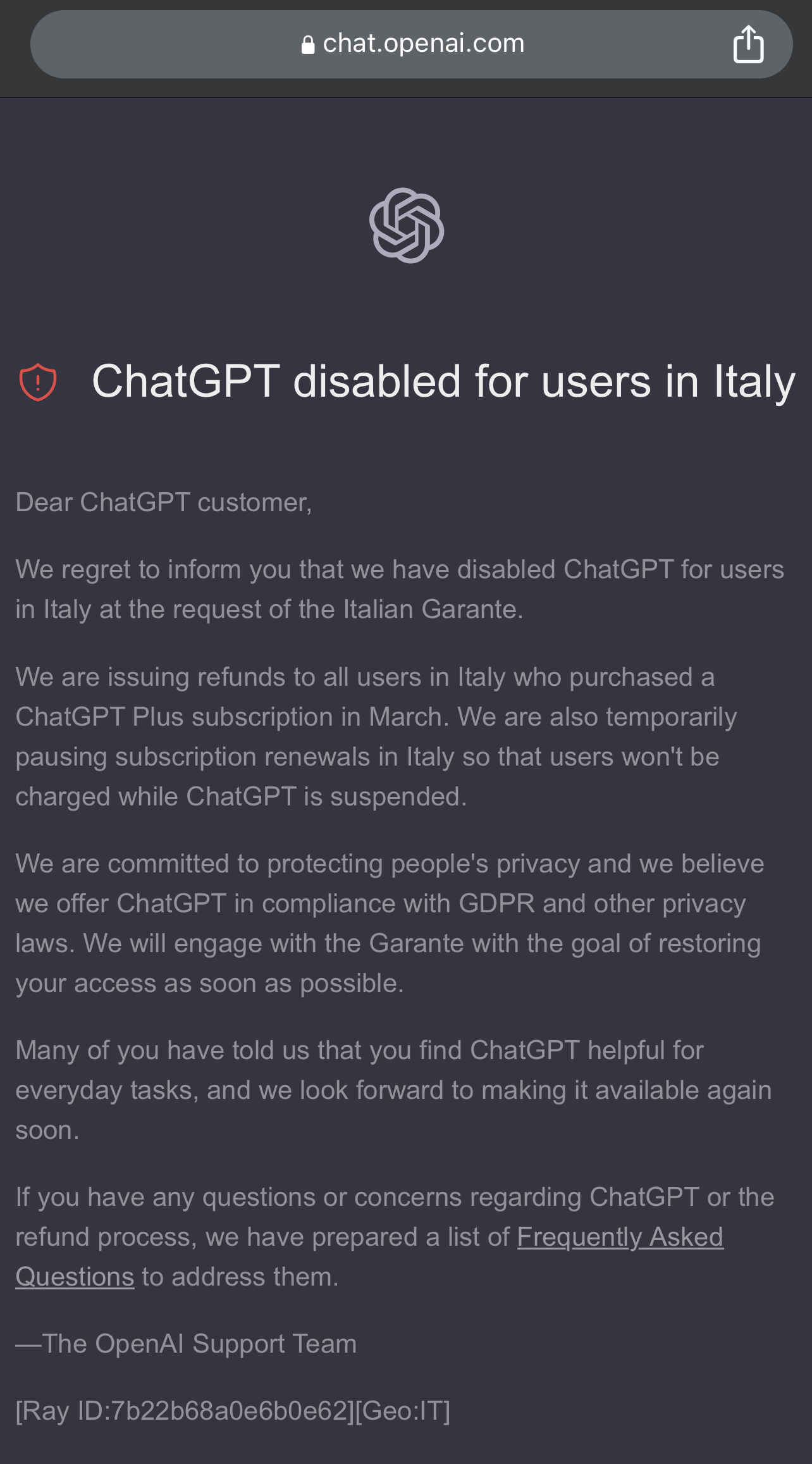

Imagine logging in to your most valuable business tool when you arrive at work, only to be greeted by this:

“ChatGPT disabled for users in Italy

Dear ChatGPT customer,

We regret to inform you that we have disabled ChatGPT for users in Italy at the request of the Italian Garante.”

OpenAI gave Italian users this message as a result of an investigation by the Garante per la protezione dei dati personali (Guarantor for the protection of personal data). The Garante cites specific violations as follows:

- OpenAI did not properly inform users that it collected personal data.

- OpenAI did not provide a legal reason for collecting personal information to train its algorithm.

- ChatGPT processes personal information inaccurately, because the information it provides does not always match the facts.

- OpenAI did not require users to verify their age, even though the content ChatGPT generates is intended for users over 13 years of age and requires parental consent for those under 18.

Effectively, an entire country lost access to a widely used technology because its data protection authority is concerned that a company based in another country is handling personal data improperly, and that the technology is unsafe for younger audiences.

Diletta De Cicco, Milan-based Counsel on Data Privacy, Cybersecurity, and Digital Assets with Squire Patton Boggs, noted:

“Unsurprisingly, the Garante’s decision came out right after a data breach affected users’ conversations and data provided to OpenAI.

It also comes at a time where generative AIs are making their ways into the general public at a fast pace (and are not only adopted by tech-savvy users).

Somewhat more surprisingly, while the Italian press release refers to the recent breach incident, there is no reference to that in the Italian decision to justify the temporary ban, which is based on: inaccuracy of the data, lack of information to users and individuals in general, missing age verification for children, and lack of legal basis for training data.”

Although OpenAI LLC operates in the United States, it has to comply with the Italian Personal Data Protection Code because it handles and stores the personal information of users in Italy.

The Personal Data Protection Code was Italy’s main law concerning private data protection until the European Union’s General Data Protection Regulation (GDPR) took effect in 2018. Italy’s law was updated to match the GDPR.

What Is The GDPR?

The GDPR was introduced in an effort to protect the privacy of personal information in the EU. Organizations and businesses operating in the EU must comply with GDPR regulations on personal data handling, storage, and usage.

If an organization or business needs to handle an Italian user’s personal information, it must comply with both the Italian Personal Data Protection Code and the GDPR.

How Could ChatGPT Break GDPR Rules?

If OpenAI cannot prove its case against the Italian Garante, it may face additional scrutiny for potential GDPR violations related to the following:

- ChatGPT stores user input, which may contain personal information from EU users, as part of its training process.

- OpenAI allows trainers to view ChatGPT conversations.

- OpenAI allows users to delete their accounts but says that they cannot delete specific prompts. It notes that users should not share sensitive personal information in ChatGPT conversations.

OpenAI offers legal reasons for processing personal information from European Economic Area (which includes EU countries), UK, and Swiss users in section nine of the Privacy Policy.

The Terms of Use page defines content as the input (your prompt) and output (the generative AI response). Each user of ChatGPT has the right to use content generated using OpenAI tools personally and commercially.

OpenAI informs users of the OpenAI API that services using the personal data of EU residents must adhere to the GDPR, the CCPA, and the local privacy laws that apply to their users.

As each AI evolves, generative AI content may contain user inputs as a part of its training data, which may include personally sensitive information from users worldwide.

Rafi Azim-Khan, Global Head of Data Privacy and Marketing Law for Pillsbury Winthrop Shaw Pittman LLP, commented:

“Recent laws being proposed in Europe (AI Act) have attracted attention, but it can often be a mistake to overlook other laws that are already in force that can apply, such as GDPR.

The Italian regulator’s enforcement action against OpenAI and ChatGPT this week reminded everyone that laws such as GDPR do impact the use of AI.”

Azim-Khan also pointed to potential issues with sources of information and data used to generate ChatGPT responses.

“Some of the AI results show errors, so there are concerns over the quality of the data scraped from the internet and/or used to train the tech,” he noted. “GDPR gives individuals rights to rectify errors (as does CCPA/CPRA in California).”

What About The CCPA, Anyway?

OpenAI addresses privacy issues for California users in section five of its privacy policy.

It discloses the information shared with third parties, including affiliates, vendors, service providers, law enforcement, and parties involved in transactions with OpenAI products.

This information includes user contact and login details, network activity, content, and geolocation data.

How Could This Affect Microsoft Usage In Italy And The EU?

To address concerns with data privacy and the GDPR, Microsoft created the Trust Center.

Microsoft users can learn more about how their data is used on Microsoft services, including Bing and Microsoft Copilot, which run on OpenAI technology.

Should Generative AI Users Worry?

“The bottom line is this [the Italian Garante case] could be the tip of the iceberg as other enforcers take a closer look at AI ******,” says Azim-Khan.

“It will be interesting to see what the other European data protection authorities will do, whether they will immediately follow the Garante or rather take a wait-and-see approach,” De Cicco adds. “One would have hoped to see a common EU response to such a socially sensitive matter.”

If the Italian Garante wins its case, other governments may begin to investigate more technologies – including ChatGPT’s peers and competitors, like Google Bard – to see if they violate similar guidelines for the safety of personal data and younger audiences.

“More bans could follow the Italian one,” Azim-Khan says. “At a minimum, we may see AI developers having to delete huge data sets and retrain their bots.”

OpenAI recently updated its blog with a commitment to safe AI systems.

Featured image: pcruciatti/Shutterstock
