![Documenting Google’s site-level evaluation and impact on search rankings using the ‘Gabeback Machine’ [Googler Quotes, Videos, Documentation and more]](https://www.gsqi.com/marketing-blog/wp-content/uploads/sites/3/2024/11/google-site-level-gabeback-machine.jpg)

It’s not like all urls on a site suddenly become low quality overnight… It’s a site-level adjustment that can impact rankings across an entire site.

After the recent creator summit, some attendees explained that Google’s Pandu Nayak joined the group to answer questions about the helpful content update, Google’s evaluation of quality, the current state of the search results, and more. One comment coming from that meeting was triggering for the site owners, and for the broader SEO community. When asked about the HCU site-level classifier, and site-level impact rankings-wise, Pandu said that there was none and that Google handles rankings at the page-level.

My head almost exploded after reading those comments for several reasons. First, that’s definitely not true, and Google itself has explained site-level rankings impact many, many times over the years. Don’t worry, I’ll come back to that soon. Second, the update that most of the group was discussing (the helpful content update) was literally crafted to apply a site-level classifier when a website was deemed to have a substantial amount of content written for Search versus humans. It was literally in the announcement and documentation until Google baked the update into its core ranking system. I’ll also cover more about the HCU soon.

Google has not been shy about explaining it has site-level quality algorithms that can have a big impact on rankings, and especially during major algorithm updates like broad core updates. Also, Google has crafted several algorithm updates over the years to specifically have site-level impact. For example, medieval Panda, Penguin, Pirate, and of course the helpful content update that I just explained. That update impacted sites broadly (negatively dragging down sites when a classifier was applied).

So, hearing a senior-level executive at Google (VP of Search) explain that everything is page level did not go over well (with both the meeting attendees and the overall SEO community). It came across as gaslighting, and again, it’s not true based on everything I’ve heard from Googlers over the years, what Google has explained about major algorithm updates, and what I have seen while helping many companies deal with major algorithm update drops over the years. At the end of this post, I will also revisit Pandu’s statement, how site-level scoring can impact page-level scoring, and what I think Pandu might have meant.

Documenting site-level impact over the years: This is where the “Gabeback Machine” comes in handy.

I would like to officially welcome you to the G-Squared Archives. Over the years, I have documented many Googler statements about major algorithm updates, how those updates work, how rankings can be impacted by various systems, algorithms, manual actions, and more. That information has been extremely helpful since Googler statements matter. And Google’s documentation matters. I like to call my archive “The Gabeback Machine” since it’s like the Wayback Machine, but laser focused on SEO.

Below, I’ll cover a number of important points regarding site-level evaluation and site-level impact on rankings. After going through the information, you can decide for yourself whether there is site-level impact. I think you’ll get it quickly, and maybe you’ll even learn a thing or two based on what I’ve documented over the years.

Let’s begin.

Major Algorithm Updates With Broad Impact (Site-level):

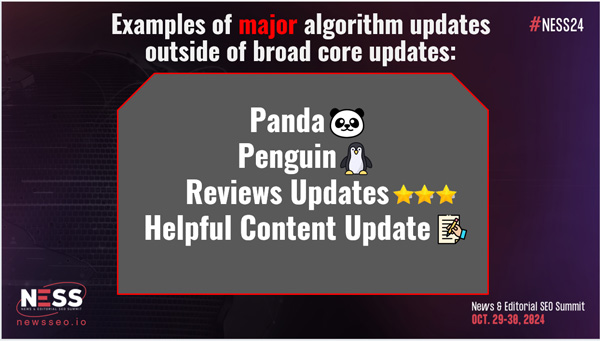

Google has crafted some powerful algorithm updates over the years outside of its core ranking system. I actually just covered this topic in my NESS presentation. When Google identifies a loophole or problem that its core ranking system isn’t handling, it can craft additional algorithm updates and systems in a “lab”. After heavy testing, Google can release those updates to tackle those specific problems.

Sometimes those additional algorithm updates work well, and then sometimes they go off the rails. Regardless, they have historically had site-level impact. For example, medieval Panda, the original Penguin, Pirate (which still roams the high SEO seas), and then the latest algorithm update to hit the scene – the infamous helpful content update (HCU).

When those updates rolled out, sites that were impacted often dropped off a cliff. They were punitive algorithm updates (meant to punish and not to boost). That’s because a classifier was applied to sites that were doing whatever those algorithms were targeting. You can think of that as a site-wide ranking signal that can drag down rankings across an entire site. Not all rankings drop the same way for each query, but it’s like walking in heavy mud and every step forward is tough… There’s basically a filter applied to your site rankings-wise. And “site” is often at the hostname level (so each subdomain can be treated as its own site). I’ll cover more about that soon.

It’s not like every url became low quality on one day…

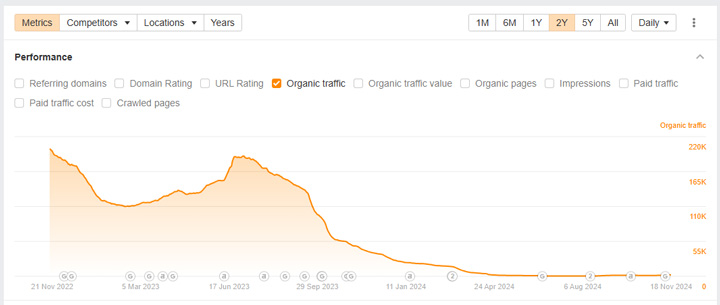

When impacted by one of the updates I mentioned, you can easily see the huge drop in the site’s visibility trending over time. Those sites often plummeted all on one day. Regarding site-level versus page-level impact, it’s not like every url on the site suddenly became low quality… That’s ridiculous. It’s just that the classifier had been applied, and boom, rankings tanked across the entire site.

For example, here are some sites impacted by the helpful content update:

Does that look like page-level impact to you?

Exhibit 1: HCU

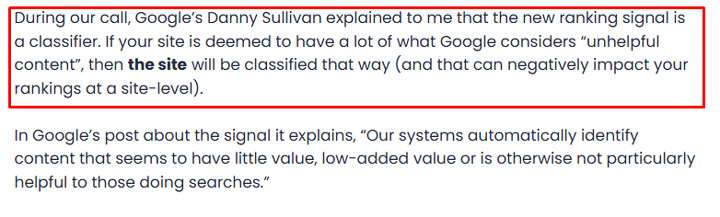

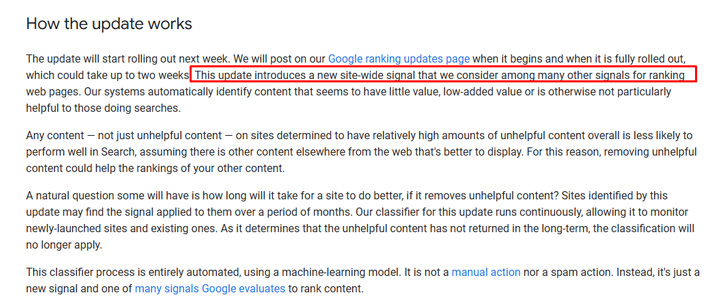

And here is exhibit one for my case that Google has site-level classifiers. First, I was one of a handful of SEOs contacted by Google in the summer of 2022 before the original helpful content update was released. Google wanted to explain what they had crafted and give some of us a heads-up, since we focused heavily on the topic of algorithm updates. During that call, which was documented in my post about the first helpful content update, Google explained it was a site-level classifier which could impact rankings across the entire site.

And of course, the site-level part was also documented in their own posts and documentation about the helpful content update.

So just to clarify, GOOGLE ITSELF DOCUMENTED THAT THE HCU WOULD HAVE SITE-LEVEL IMPACT.

Beyond the HCU, Panda, Penguin, and Pirate also had site-level impact. By the way, Pirate is still running and refreshed often from what we know. When a site was impacted by Panda, Penguin, or Pirate, rankings would plummet across the entire site. Again, it was like having a filter applied. It was not a page-level demotion. It was site level based on a classifier being applied to the site.

Exhibit 2: Google’s Penguin algorithm.

Google launched Penguin in 2012 to target sites creating many spammy links to game PageRank. That algorithm update also was a site-level adjustment. Many sites impacted plummeted in rankings as soon as the update was launched. It was an extremely punitive algorithm, just like the HCU and Panda.

Here is a quote from the launch announcement. Notice the wording about decreasing rankings for sites (and not pages):

“In the next few days, we’re launching an important algorithm change targeted at webspam. The change will decrease rankings for sites that we believe are violating Google’s quality guidelines. This algorithm represents another step in our efforts to reduce webspam and promote high-quality content.”

“Sites affected by this change might not be easily recognizable as spamming without deep analysis or expertise, but the common thread is that these sites are doing much more than ethical SEO; we believe they are engaging in webspam tactics to manipulate search engine rankings.”

Exhibit 3: Medieval Panda algorithm:

Panda was launched in 2011 and was targeting sites with a substantial amount of low-quality and/or thin content. Sites impacted would often drop heavily in rankings as soon as the update rolled out. There was a Panda score calculated and sites on the wrong side of the algorithm would pay a heavy price. It was like a huge negative filter applied to the site. The good news was that Panda ran often (usually every 6-8 weeks). And sites did have a chance to recover… many did.

Here is a quote from the launch announcement. Again, notice the language about sites and not pages:

“This update is designed to reduce rankings for low-quality sites—sites which are low-value add for users, copy content from other websites or sites that are just not very useful. At the same time, it will provide better rankings for high-quality sites—sites with original content and information such as research, in-depth reports, thoughtful analysis and so on.”

“Therefore, it is important for high-quality sites to be rewarded, and that’s exactly what this change does.”

“Based on our testing, we’ve found the algorithm is very accurate at detecting site quality. If you believe your site is high-quality and has been impacted by this change, we encourage you to evaluate the different aspects of your site extensively.”

Exhibit 4: Google’s Pirate algorithm.

Aye, matey! Many don’t think about Google’s Pirate algorithm, but it can also have a big impact on rankings across a site (for those receiving many DMCA takedowns). I wrote a post covering my analysis of the algorithm update in 2013, and like Panda, Penguin, and the HCU, sites often dropped heavily when impacted by Pirate.

Here is a quote from the announcement about Pirate. Yep, sites and not pages:

“Sites with high numbers of removal notices may appear lower in our results.”

A quick note about site versus hostname for major algorithm updates:

And by the way, Google’s site-level quality algorithms are typically applied at the hostname-level. That’s why you can often see a site with multiple subdomains see varying impact during major algorithm updates. One subdomain might drop heavily, while the others surge, or remain stable.
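If you want to analyze impact the same way, it helps to bucket your urls by hostname before looking at trending. Here is a minimal Python sketch of that grouping. To be clear, this is my own illustration and not anything from Google: the function name `group_by_hostname` and the example.com urls are hypothetical, and the only assumption is that each hostname (subdomain) is treated as its own “site”.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_by_hostname(urls):
    """Bucket urls by hostname so each subdomain is treated as its own "site"."""
    groups = defaultdict(list)
    for url in urls:
        host = urlsplit(url).hostname  # lowercased hostname, or None if missing
        if host:
            groups[host].append(url)
    return dict(groups)


# Hypothetical urls, for illustration only.
urls = [
    "https://www.example.com/guide",
    "https://www.example.com/reviews/widget",
    "https://blog.example.com/post-1",
    "https://shop.example.com/item/42",
]

sites = group_by_hostname(urls)
for host, pages in sorted(sites.items()):
    print(host, len(pages))
```

With the urls grouped like this, you can review visibility per hostname instead of per domain, which is often where the varying impact across subdomains shows up.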

And this all dovetails nicely into a whole bunch of quotes, links, and videos about site-level evaluation and impact (based on quotes from Googlers and information contained in Google’s documentation). Again, I tapped into the “Gabeback Machine” to find those important pieces of information.

So strap your SEO helmets on. There’s a lot to cover.

Exhibit X: Information from Google about site-level impact: Documentation, Links, Tweets, and Videos.

Again, I document everything. It’s a thing of mine. From timestamped webmaster hangout videos to important tweets and social shares to quotes from conference Q&As, I think it’s important to document Googler statements about algorithm updates, systems, rankings, and more.

In my opinion, it’s incredibly important for site owners to understand site-level impact, so I’ve been very careful with documenting all of this over the years. I have also shared a good amount of this in my posts and presentations about major algorithm updates, while also sharing across social media. And it ends up that Barry has covered some of my tweets about site-level impact too. With all that being said, I wanted to gather more of those quotes, tweets, and videos in one place (especially based on Pandu Nayak’s recent comments). Hence, this post!

I’ll present everything below about site-level evaluation and impact in no particular order:

Google’s John Mueller explains that quality is a site-level signal.

“We do index things page by page, we rank things page by page, but there’s some signals that we can’t reliably collect on a per page basis where we do need to have a bit of a better understanding of the overall site. And quality kind of falls into that category.”

A Google patent explaining that Google is ranking search results in pages using page-level scores and applying site-level scores based on things such as quality.

Deal with ALL quality problems -> Via John Mueller:

Have many older low-quality pages? Yes, that can hurt your site in Search. Google looks at the website overall, so if it sees a lot of low-quality content, then Google can take that into account for rankings:

More from John Mueller about site-level impact:

“Of course we rank pages, that’s where the content is. We also have other signals that are on a broader level – there’s no conspiracy. Panda, Penguin, even basic things like geotargeting, safe-search, Search Console settings, etc.”

Quotes from Google’s Paul Haahr, a lead ranking engineer: Article written by Danny Sullivan (not at Google at the time) covering quotes from Paul:

“When all things are equal with two different pages, sitewide signals can help individual pages.”

“Consider two articles on the same topic, one on the Wall Street Journal and another on some fly-by-night domain. Given absolutely no other information, given the information we have now, the Wall Street Journal article looks better. That would be us propagating information from the domain to the page level,” Haahr said.

And another quote from the interview:

“That’s not to say that Google doesn’t have sitewide signals that, in turn, can influence individual pages. How fast a site is or whether a site has been impacted by malware are two things that can have an impact on pages within those sites. Or in the past, Google’s “Penguin Update” that was aimed at spam operated on a site-wide basis.”

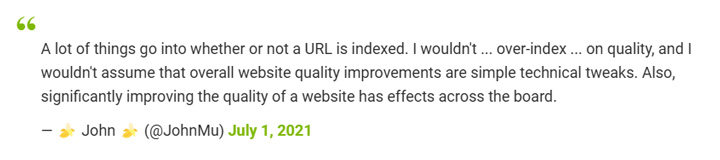

March 2024 core update FAQ from Google:

In Google’s own documentation about the March 2024 broad core update, there is an FAQ titled, “What ranking signals does a site have?” In that answer, Google explains that they do have some site-wide signals that are considered. So again, it’s right in Google’s docs about broad core updates.

Via John Mueller: “When evaluating relevance, Google tries to look at the bigger picture of the site.” And “Some things Google evaluates are focused on the domain level.”

“Yes, we do sometimes look at the website overall to see how it fits in with the rest of the web, and to see when it would be relevant to show in the search results…”

“We try to evaluate the quality of a website overall as a first understanding how good the site is. Does it have relevant, unique, and compelling content that we can show in the search results?”

With core updates, there are things focused more on the site overall, the bigger picture.

“For some things, we look at the quality of the site overall. If you have significant portions that are lower quality, then we might think the site overall is not great. And that can have effects in different places across the website. Lower-quality content can pull down the higher-quality content on the site.”

Regarding the Product Reviews Update, low-quality content can drag down the high-quality content. Here was my tweet: Impacted by the Product Reviews Update & have a mix of high quality and low-quality content? Via John Mueller: “Having a mix like that can throw things off. Google looks at a site overall from a quality perspective, so the low can be dragging down the high.”:

John Mueller about core updates. Not about individual issues, more about site quality overall.

“Google doesn’t focus on individual issues, but rather the relevance of the site overall (including content quality, UX issues, ads on the page, how things are presented, sources, & more):

When working on improvements, don’t just focus on improving everything around the content… you should work on improving the content too. i.e. Where is there low-quality content, where are users confused, etc.? Then address that.”

After removing low-quality content, how does a quality evaluation work? -> Via John Mueller: “It can take months (6+ months) for Google to reevaluate a site after improving quality overall. It’s partially due to reindexing & partially due to collecting quality signals. Also, testing for broad core updates is very hard since a small subset of pages isn’t enough for Google to see a site as higher quality.”

“A lot of things go into whether or not a URL is indexed. I wouldn’t … over-index … on quality, and I wouldn’t assume that overall website quality improvements are simple technical tweaks. Also, significantly improving the quality of a website has effects across the board.”

From an article on Search Engine Roundtable. Here’s the tweet from John:

Now the great Bill Slawski covering patents about Google calculating site-quality scores.

Here is information about losing or gaining rich snippets based on an overall site quality evaluation:

This is covered at 3:45 in the podcast (and it’s something I have documented several times in my blog posts). If you lose rich snippets site-wide, then there could be larger quality issues. There are several site-wide signals Google uses that can end up impacting things like rich snippets. Beware. And there was a Panda score which could be lowering your overall site quality.

More from Search Engine Roundtable about losing rich snippets due to overall quality:

And from Barry at Search Engine Roundtable about the loss of rich snippets based on site-level quality evaluation:

And I covered more on Twitter about this:

Site-level quality signal -> If rich results aren’t shown in the SERPs, and it’s set up correctly from a technical POV, then that’s usually a sign that Google’s quality algorithms are not 100% happy with the website. i.e. Can Google trust the site enough to show rich results?…

John about improving one page and how that can impact rankings for the one page.

But, there are still signals where Google needs to look at the site-level.

Quality is one of those signals. Improve the site overall and over time.

Google about low-quality UGC on some parts of a site impacting the entire site:

Google’s documentation explaining this: https://developers.google.com/search/blog/2021/05/prevent-portions-of-site-from-spam

Google researchers on authority and trust:

In the proposal, the Google researchers address authority & trust. Yep, domain-level: “There are many known techniques for estimating the authority or veracity of a webpage, from fact-checking claims within a single page to aggregating quality signals at the logical domain level.”

Also for search engines, if Google can recognize that a site is good for the broader topic, then it can surface that site for broader queries on the topic as well. Google doesn’t have to focus on individual pages to surface the site for that broader topic.

Google’s quality algorithms impacting Discover visibility (broadly):

Via John Mueller:

Yes, low quality pages on a site can impact the overall “authority” of that site. A few won’t kill you:

How does Google pick up quality changes & how long to see impact? Via John Mueller:

There’s no specific timeframe for a lot of our quality algorithms. It can take months as Google needs to see changes across the entire site (as it reprocesses the entire site).

John about an overall view of the site expertise-wise:

Via John Mueller: Re: the March core update, these are quality updates we roll out. On the one hand, technical SEO issues are always smart to fix, but if the overall view of the site makes it hard to tell you’re an expert, then that can play a role as well.

For a new page, if it’s on a high-quality site we trust, we might rank it higher from the start.

Revisiting Pandu’s comments about page-level versus site-level impact.

Before ending this post, I wanted to quickly revisit Pandu’s comments about page-level versus site-level impact on rankings. Maybe the confusion just comes down to semantics. For example, maybe Google’s page-level ranking takes site-level scoring into account. There were several mentions of that in the examples I provided above (like the quotes from Google’s Paul Haahr). If that’s the case, then Pandu might be technically correct that ranking happens at the page level, but there could still be filters applied based on site-level scoring.

For example, you can think of a Panda score, HCU classifier, Pirate classifier, site-level quality scoring for broad core updates, etc., impacting page-level scoring. I’m just thinking out loud, but the idea of just page-level scoring without taking site-level scoring into account is crazy to me. And everything I’ve documented over the years, and what I’ve seen with my own eyes while helping many companies deal with major algorithm updates, tells me that site-level evaluation and impact is absolutely happening.
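To make that thinking out loud a bit more concrete, here is a toy Python sketch of page-level scoring that takes a site-level factor into account. Everything in it is hypothetical and invented for illustration: the function names, the simple multiplication, the page scores, and the factor values. It is not how Google calculates rankings. It only shows how ranking can technically happen “at the page level” while one site-level value still moves every page on the site at once.

```python
def adjusted_page_score(page_score, site_factor):
    """Scale a page-level score by a site-level factor (hypothetical toy model)."""
    return page_score * site_factor


def score_site(pages, site_factor):
    """Apply the same site-level factor to every page-level score on a site."""
    return {
        path: round(adjusted_page_score(score, site_factor), 2)
        for path, score in pages.items()
    }


# Made-up page-level scores for one hostname.
pages = {"/strong-guide": 0.9, "/average-post": 0.6, "/thin-page": 0.2}

before = score_site(pages, site_factor=1.0)  # no site-level adjustment
after = score_site(pages, site_factor=0.5)   # site-level classifier applied

for path in pages:
    print(path, before[path], "->", after[path])
```

In this toy model, no page-level score changed at all. Only the site-level factor did, and every url on the site dropped on the same day, including the strongest page.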

Again, it’s not like all urls on a site suddenly became low quality overnight. It’s a site-level evaluation that can drag down, or raise up, rankings across an entire site. Yep, site-level impact is real.

GG