As the predominant web crawler, accounting for nearly 29% of bot hits, Googlebot plays a crucial role in determining how users access information online.

By continuously crawling millions of domains, Googlebot helps Google’s algorithms understand the vast landscape of the internet and helps Google maintain its dominance in search, where it holds over 92% of the global market share.

Despite being the most active crawler, many site owners remain unaware of how Googlebot functions and what aspects of their site it assesses.

Read on as we demystify Googlebot’s inner workings in 2024 by examining its various types, its core purpose, and how you can optimize your site for this influential bot.

What Is Googlebot and How Does It Work?

Googlebot is the web crawler Google uses to discover and fetch web pages for Google Search. It works by crawling: systematically finding and reading pages across the internet. It discovers new pages by following links on previously crawled pages and through other methods that we’ll outline later.

It then passes the crawled pages to Google’s systems that index and rank search results. In this process, Googlebot considers both how many pages it crawls (called crawl volume) and how effectively it gets important pages indexed (called crawl efficacy). A few other concepts are also important to understand:

Crawl Budget

This is the daily maximum number of URLs that Googlebot will crawl for a specific domain. For most websites and clients, this is not a concern, as Google will allocate enough resources to find all of your content. Of the hundreds of sites I’ve worked on, I’ve encountered only a few instances where crawl budget was an issue. For one site with over 100 million pages, Googlebot’s crawls were maxed out at 4 million per day, which wasn’t enough to get all of the content indexed. I was able to contact Google engineers and have them increase the crawl budget to 8 million daily crawls. This is an extremely rare scenario; most sites will only see a few thousand daily crawls, so crawl budget is rarely a concern.
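To put those numbers in perspective, here’s a back-of-the-envelope calculation. It assumes, unrealistically, that every crawl fetches a distinct URL that hasn’t been crawled yet; in practice, part of the budget goes to recrawling known pages, so full coverage takes longer. The `daysToCrawl` helper and the 10,000-page example site are hypothetical.

```javascript
// Back-of-the-envelope estimate: days needed to crawl every URL once,
// assuming each crawl fetches a distinct, not-yet-crawled URL
// (an idealized assumption; real crawl budget is also spent on recrawls).
function daysToCrawl(totalUrls, crawlsPerDay) {
  if (crawlsPerDay <= 0) throw new RangeError("crawlsPerDay must be positive");
  return Math.ceil(totalUrls / crawlsPerDay);
}

const largeSite = 100_000_000; // the "over 100 million pages" site from the example
console.log(daysToCrawl(largeSite, 4_000_000)); // 25
console.log(daysToCrawl(largeSite, 8_000_000)); // 13

// A hypothetical 10,000-page site at a few thousand crawls per day.
console.log(daysToCrawl(10_000, 3_000)); // 4
```

Doubling the budget roughly halves the time to cover the large site, while a typical small site is covered within days.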

Crawl Rate

This is the number of requests that Googlebot makes in a given timeframe. It can be measured in requests per second, but it is most often expressed as daily crawl requests, which you can find in the Crawl Stats report in Google Search Console. Googlebot measures server response time in real time, and if your site starts slowing down, it will lower the crawl rate so it doesn’t degrade the experience for your users. If your site lacks resources and is slow to respond, Googlebot will discover new or updated content more slowly.

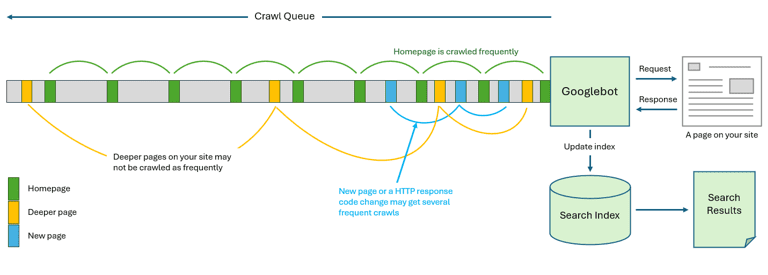
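Google doesn’t publish exactly how Googlebot adjusts its crawl rate, so the sketch below is only a toy model of the behavior described above: an additive-increase/multiplicative-decrease (AIMD) controller that slowly ramps up requests while the server responds quickly and halves them when responses slow down. The function name and the `targetMs`, `minRate`, and `maxRate` defaults are assumptions for illustration.

```javascript
// Toy AIMD (additive-increase/multiplicative-decrease) crawl-rate controller.
// Hypothetical model only; Googlebot's real algorithm is not public.
function nextCrawlRate(currentRate, responseMs, opts = {}) {
  const { targetMs = 500, minRate = 1, maxRate = 100, step = 1, backoff = 0.5 } = opts;
  let rate;
  if (responseMs > targetMs) {
    // Server is slowing down: cut the request rate multiplicatively.
    rate = currentRate * backoff;
  } else {
    // Server is healthy: probe for more capacity additively.
    rate = currentRate + step;
  }
  // Keep the rate within the configured bounds.
  return Math.min(maxRate, Math.max(minRate, rate));
}

// Simulate a server that slows down partway through.
let rate = 10; // requests per second (hypothetical starting point)
for (const ms of [120, 150, 900, 1200, 200]) {
  rate = nextCrawlRate(rate, ms);
  console.log(`response ${ms} ms -> ${rate} req/s`);
}
// Prints rates 11, 12, 6, 3, 4
```

The asymmetry is the design choice: backing off quickly protects a struggling server, while ramping up slowly avoids overwhelming it again.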

Crawl Queue

This is the list of URLs that Googlebot will crawl. It’s a continuous stream of URLs, and as Google discovers new URLs, it has to add them to the queue to be crawled. The process of updating and maintaining the queue is quite complex, and Google has to manage several scenarios, for example:

- When a new page is discovered

- When a page changes HTTP status code (e.g.: deleted or redirected)

- When a page is blocked from crawling

- When a site is down for maintenance (usually when an HTTP 503 response is received)

- When the server is unavailable due to a DNS, network issue, or other infrastructure issue

- When a site’s internal linking changes, altering link signals for an individual page

- When a page’s content changes regularly or has been updated since the last crawl

Each of these scenarios will cause Googlebot to reevaluate a URL and change the crawl rate and/or the crawl frequency.
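Google’s real crawl scheduling is far more sophisticated and isn’t documented in detail, but a hypothetical sketch can make the idea concrete: each URL carries a recrawl interval, and outcomes like those listed above shorten or lengthen it. The `CrawlQueue` class, its outcome names, the 24-hour starting interval, and the interval rules are illustrative assumptions, not Google’s values.

```javascript
// Hypothetical crawl-queue sketch: each URL has a recrawl interval (hours)
// and a next-due time. Crawl outcomes adjust the interval.
const HOUR = 60 * 60 * 1000;

class CrawlQueue {
  constructor() {
    this.entries = new Map(); // url -> { intervalH, dueAt }
  }

  // A newly discovered URL is due immediately, with an arbitrary 24h starting interval.
  discover(url, now) {
    if (!this.entries.has(url)) this.entries.set(url, { intervalH: 24, dueAt: now });
  }

  // Reevaluate a URL after a crawl outcome (illustrative rules only).
  record(url, outcome, now) {
    const e = this.entries.get(url);
    if (!e) return;
    switch (outcome) {
      case "changed": // content updated: check back sooner
        e.intervalH = Math.max(1, e.intervalH / 2);
        break;
      case "unchanged": // stable content: back off, capped at 90 days
        e.intervalH = Math.min(24 * 90, e.intervalH * 2);
        break;
      case "status-change": // e.g. 301/404: recheck soon to confirm it's permanent
      case "unavailable": // e.g. 503 or DNS failure: retry soon
        e.intervalH = 1;
        break;
    }
    e.dueAt = now + e.intervalH * HOUR;
  }

  // URLs whose due time has passed, earliest first.
  due(now) {
    return [...this.entries]
      .filter(([, e]) => e.dueAt <= now)
      .sort((a, b) => a[1].dueAt - b[1].dueAt)
      .map(([url]) => url);
  }
}

const queue = new CrawlQueue();
queue.discover("/", 0);
queue.discover("/products/widget", 0);
queue.record("/", "changed", 0); // interval 24h -> 12h, due at hour 12
queue.record("/products/widget", "unchanged", 0); // interval 24h -> 48h, due at hour 48
console.log(queue.due(24 * HOUR)); // only "/" is due after 24 hours
```

Halving and doubling the interval is just one simple policy; the point is that each event feeds back into when the URL is fetched next.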

Crawl Frequency

This describes how often Googlebot crawls an individual URL. Typically, your homepage is your most important page and has the strongest link signals, so it will be crawled most often. Conversely, pages deeper in your site, which take more clicks to reach from the homepage, will be crawled less frequently. This means that any updates to your homepage will be found by Google quickly, but an update to a product page nested under three subcategories and listed on page 50 may not be crawled for several months.

When Google discovers a new URL, or when a URL’s HTTP status changes, Googlebot will often crawl that page several more times to make sure the change is permanent before updating the search index. Sites often become temporarily unavailable for a variety of reasons, so confirming that changes are stable helps Google conserve resources when updating its index and helps searchers.
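You can measure click depth on your own site with a breadth-first search over your internal links, which finds the minimum number of clicks from the homepage to each page. In this sketch the `links` graph is made up for illustration; in practice you would build it from a crawl of your site. Pages with the largest depth are the ones likely to be crawled least often.

```javascript
// Breadth-first search from the homepage gives each page's minimum click depth.
function clickDepths(links, start = "/") {
  const depth = new Map([[start, 0]]);
  const queue = [start];
  while (queue.length > 0) {
    const page = queue.shift();
    for (const next of links[page] ?? []) {
      if (!depth.has(next)) {
        depth.set(next, depth.get(page) + 1);
        queue.push(next);
      }
    }
  }
  return depth; // pages missing from the map are unreachable via internal links
}

// Hypothetical internal-link graph: page -> pages it links to.
const links = {
  "/": ["/tools", "/about"],
  "/tools": ["/tools/hand", "/"],
  "/tools/hand": ["/tools/hand/page-50"],
  "/tools/hand/page-50": ["/tools/hand/hammer-x"],
};

for (const [page, d] of clickDepths(links)) console.log(d, page);
```

Here the product page `/tools/hand/hammer-x` sits four clicks deep, so it is a candidate for better internal linking.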

Below is an illustration showing a simplified view of the crawl queue, how different pages may have different crawl frequencies in the queue and how a page is requested and makes its way into the search index and then the search results:

How Google Discovers Pages on Your Site

Google uses a variety of methods to discover URLs on your site to add to the crawl queue; it does not rely only on the links that Googlebot finds. Below are a few other methods of URL discovery:

- Links from other sites

- Internal links

- XML sitemap files

- Web forms that use a GET action

- URLs from Atom, RSS, or other feeds

- URLs from Google Ads

- URLs from social media feeds

- URL submissions from Google Search Console

- URLs in emails

- URLs included in JavaScript files

- URLs found in the robots.txt file

- URLs discovered by its other crawlers

All of these and other methods supply URLs that can be sent to the crawl queue. This means developers need to be careful about how web applications, navigation, pagination, and other site features are coded, as they can potentially generate thousands or millions of additional low-value URLs that Google may want to crawl.
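To see how quickly site features multiply URLs, consider faceted navigation on a single category page. This sketch assumes each facet is either unset or set to exactly one value, that facets combine freely, and that every combination can be paired with any sort order; the facet counts are hypothetical. Multi-select filters and pagination would push the total much higher.

```javascript
// Count URL variants for one category page with single-select facets.
// Each facet contributes (values + 1) options: unset, or one of its values.
function countFacetUrls(facetValueCounts, sortOrders = 1) {
  const combos = facetValueCounts.reduce((total, n) => total * (n + 1), 1);
  return combos * sortOrders;
}

// Hypothetical: 5 facets (brand, color, size, price band, rating) with 10 values each,
// plus 4 sort orders.
console.log(countFacetUrls([10, 10, 10, 10, 10], 4)); // 644204
```

That is 11^5 × 4, or over 600,000 crawlable variants of one category page.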

Developer Pro Tip: When populating a variable in JavaScript, avoid using the forward slash as a delimiter, as the value looks like a URL to Google and it will start crawling those URLs. For example:

var myCategories = "home/hardware/tools";

If Google finds code like this, it will think it’s a relative URL and start to crawl it.
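If you need to keep a category path in a variable, an array or a non-slash delimiter avoids the problem. The `looksLikeRelativePath` check is a crude, hypothetical heuristic you could use when reviewing your own code; Google hasn’t documented how it extracts URLs from JavaScript, so treat it as an illustration rather than a description of Googlebot’s behavior.

```javascript
// Safer alternatives to a slash-delimited category string.
const myCategoryList = ["home", "hardware", "tools"]; // an array needs no delimiter
const myCategoryString = "home|hardware|tools"; // or use a non-slash delimiter

// Crude, hypothetical heuristic for code review: flags values made of
// word-like segments joined by "/", which resemble relative URL paths.
// Google hasn't documented how it extracts URLs from JavaScript.
function looksLikeRelativePath(value) {
  return /^[\w-]+(?:\/[\w-]+)+\/?$/.test(value);
}

console.log(looksLikeRelativePath("home/hardware/tools")); // true
console.log(looksLikeRelativePath(myCategoryString)); // false
console.log(myCategoryList.join(" > ")); // home > hardware > tools
```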

Types of Google Web Crawlers

Google uses different web crawlers to crawl content across the internet for its search engine and ad services. Each type of crawler is designed for specific content types, devices, or services. Here are some examples:

Googlebot Smartphone

Googlebot Smartphone crawlers are optimized to index mobile-friendly web pages.

They can render and understand the layout and content designed for small screens. This ensures that smartphone users searching on Google receive the most relevant mobile versions of websites in their search results.

Googlebot Desktop

Googlebot Desktop crawlers index traditional desktop and laptop web pages.

They are focused on optimized content and navigation designed for larger screens. Desktop crawlers help surface the standard computer views of websites to those performing searches from a non-mobile device.

Googlebot Image

Googlebot Image crawlers index image files on the Internet.

They allow Google to understand images and integrate them into image searches. To do this, they scan image files and extract metadata, file names, and visual characteristics.

Pro Tip: By using optimized image names and metadata, you can help people discover your visual content more easily through Google Image searches.

Googlebot News

Googlebot News crawlers specialize in finding and understanding news articles and content.

They aim to index breaking news stories and updates from publishers comprehensively. News content must be processed and indexed much more quickly than evergreen content due to its time-sensitive nature.

This supports Google News and ensures timely coverage of current events through its news search features.

Googlebot Video

Googlebot Video crawlers can discover, parse, and verify embedded video files on the web.

It helps integrate video content into Google’s search results for relevant video queries and feeds video content into Google’s video search and recommendation features.

Googlebot Adsbot

Googlebot Adsbot crawls and analyzes landing page content and compares it to the ad copy; this assessment is factored into the ad’s Quality Score. Because advertising makes up a large portion of content online, Adsbot plays an important supporting role. It is designed specifically for ads and promotional material.

Googlebot Storebot

Google also uses Storebot as a specialized web crawler. Storebot focuses its crawling on e-commerce sites, retailers, and other commercial pages. It parses product listings, pricing information, reviews, and other details that are crucial for shopping queries.

Storebot provides a deep analysis of pricing, availability, and other commerce-related data across different stores. This information helps Googlebot comprehensively understand these commercial corners of the web. With Storebot’s assistance, Googlebot can better serve users searching for products online through Search and other Google services.

These are the crawlers that most sites will encounter; Google publishes the full list of its crawlers in its Search Central documentation.
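If you analyze server logs, you can roughly sort Google’s crawlers by the tokens in their user-agent strings. This is a simplified sketch: it covers only some of the crawlers described above, Googlebot News requests use the regular Googlebot user-agent strings so they can’t be told apart this way, and user agents are easy to spoof, so Google recommends verifying genuine Googlebot traffic with a reverse DNS lookup. The sample user-agent strings (including the Chrome version numbers) are illustrative.

```javascript
// Rough classification of Google crawler user-agent strings from access logs.
// Order matters: check the more specific tokens before the generic "Googlebot/".
// Note: UA strings can be spoofed; verify real Googlebot traffic separately.
const RULES = [
  [/Googlebot-Image\//, "Googlebot Image"],
  [/Googlebot-Video\//, "Googlebot Video"],
  [/Storebot-Google\//, "Storebot"],
  [/AdsBot-Google/, "AdsBot"],
];

function classifyGoogleCrawler(userAgent) {
  for (const [pattern, name] of RULES) {
    if (pattern.test(userAgent)) return name;
  }
  if (/Googlebot\//.test(userAgent)) {
    // Googlebot Smartphone identifies itself as a mobile (Android) browser.
    return /Mobile/.test(userAgent) ? "Googlebot Smartphone" : "Googlebot Desktop";
  }
  return null; // not a Google crawler this sketch recognizes
}

const samples = [
  "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Mobile Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
  "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; Googlebot/2.1; +http://www.google.com/bot.html) Chrome/120.0.0.0 Safari/537.36",
  "Googlebot-Image/1.0",
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
];
for (const ua of samples) console.log(classifyGoogleCrawler(ua));
```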

Why You Should Make Your Site Available for Crawling

To ensure your website is correctly represented in search results and keeps pace with changes to search algorithms, it’s crucial to make your site available for crawling. Here are a few key reasons why:

Get More Organic Traffic

When Google’s crawler visits your site, it helps Google understand the structure, content, and linking relationships of your website. This allows Google to accurately index your pages so searchers can find them in the SERPs.

As Google’s John Mueller says, “Indexing is the process where Google discovers, renders, and understands your pages so they can be included in Google search results.”

In other words, the more crawlable your site is, the better job search engines can do at indexing all of your important pages and serving them in search results.

Improve Your Site Performance and UX

As crawlers render and analyze your pages, they can provide feedback on site speed and content format that impacts user experience.

This information is typically available within your website analytics or in Google Search Console.

Issues found during crawling, such as slow pages or pages that load improperly, can also hurt users’ interactions with your site, so you can use this feedback to improve the overall user experience across your website.

Pro Tip: Keeping your site well-organized and crawl-optimized can ensure a better experience for search engine crawlers and real users. According to Search Engine Watch, a better user interface leads to increased time on site and a lower bounce rate.

When You Should Not Make Your Site Crawlable

We recommend blocking development and staging sites from being crawled so they don’t inadvertently end up in the search index. It’s not enough to hope that Google won’t find them; we need to be explicit because, as noted above, Google uses a variety of methods to discover URLs.

Just remember: when you push your new site live, check that you didn’t overwrite the current robots.txt file with the one that’s blocking access. I can’t tell you how many times I’ve seen this happen.
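One way to catch that mistake is a pre-deployment check that fails the release if the production robots.txt disallows the entire site. This is a simplified, hypothetical sketch: it only inspects groups that apply to `User-agent: *` and ignores `Allow` rules, wildcards, and crawler-specific groups, all of which a complete robots.txt parser would need to handle.

```javascript
// Simplified pre-deploy check: does robots.txt contain "Disallow: /" for User-agent: *?
// Ignores Allow rules, wildcards, and crawler-specific groups (assumptions of this sketch).
function blocksWholeSite(robotsTxt) {
  let groupAgents = [];
  let readingAgents = false;
  for (const raw of robotsTxt.split(/\r?\n/)) {
    const line = raw.replace(/#.*/, "").trim(); // drop comments
    const colon = line.indexOf(":");
    if (colon === -1) continue; // skip blank or malformed lines
    const field = line.slice(0, colon).trim().toLowerCase();
    const value = line.slice(colon + 1).trim();
    if (field === "user-agent") {
      // Consecutive User-agent lines share one group; a new run starts a new group.
      if (!readingAgents) groupAgents = [];
      groupAgents.push(value);
      readingAgents = true;
    } else {
      readingAgents = false;
      if (field === "disallow" && value === "/" && groupAgents.includes("*")) return true;
    }
  }
  return false;
}

const stagingRobots = "User-agent: *\nDisallow: /";
const productionRobots =
  "User-agent: *\nDisallow: /search\n\nSitemap: https://www.example.com/sitemap.xml";

console.log(blocksWholeSite(stagingRobots)); // true
console.log(blocksWholeSite(productionRobots)); // false
```

In a deployment script, a `true` result for the production file would be the signal to stop the release.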

If you have sensitive, private, or personal information, or other content that you do not want in the search results, then that should also be blocked. If some of that content has already appeared in the search results, you can block it using robots.txt, a meta robots noindex tag, the X-Robots-Tag HTTP header, or password protection, then use the URL removal tool in Google Search Console. Keep in mind that Googlebot can only see a noindex directive on a page it is allowed to crawl, so don’t also disallow that URL in robots.txt.

How to Optimize Your Site for Crawling

Providing a sitemap can help Google discover pages on your site more rapidly, which affects how quickly they can be added or updated in the search results. But there are many other considerations, including your internal link structure, how your Content Management System (CMS) functions, site search, and other site features.
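If your CMS doesn’t generate a sitemap for you, you can build one from your own list of URLs. Here’s a minimal sketch following the sitemaps.org XML format; the `pages` array is hypothetical. A single sitemap file is limited to 50,000 URLs and 50 MB uncompressed, so larger sites need several files listed in a sitemap index.

```javascript
// Minimal XML sitemap generator following the sitemaps.org protocol.
function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

function buildSitemap(pages) {
  if (pages.length > 50000) {
    throw new RangeError("Split into multiple sitemaps (max 50,000 URLs each)");
  }
  const urls = pages.map(({ loc, lastmod }) => {
    const lastmodTag = lastmod ? `<lastmod>${lastmod}</lastmod>` : "";
    return `  <url><loc>${escapeXml(loc)}</loc>${lastmodTag}</url>`;
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...urls,
    "</urlset>",
  ].join("\n");
}

// Hypothetical pages; lastmod uses the W3C Datetime (YYYY-MM-DD) format.
const pages = [
  { loc: "https://www.example.com/", lastmod: "2024-01-15" },
  { loc: "https://www.example.com/tools?brand=acme&type=drill" },
];
console.log(buildSitemap(pages));
```

Escaping matters: an unescaped `&` in a URL makes the whole file invalid XML.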

Here are some best practices that can help Google find and understand your most valuable pages.

- Ensure easy accessibility and good UX: Make sure your site structure is logically organized and internal links allow smooth navigation between pages. Google and users will more readily crawl and engage with a site that loads quickly and is easy to navigate.

- Use meta tags: Meta descriptions and titles inform Google about your page content. Ensure each page has unique, keyword-rich meta tags to clarify what the page is about. Web crawlers will visit your pages to read the meta tags and understand what content is on each page.

- Create high-quality, helpful content: Original, regularly updated content encourages repeat visits from users and re-crawls from search engines. Google favors sites with fresh, in-depth material that covers topic areas completely.

- Increase content distribution and update old pages: Leverage social sharing and internal linking to distribute valuable content. Keep signature pages like the homepage updated monthly. Outdated pages may be excluded from search results.

- Use organic keywords: Include your target keywords and related synonyms in a natural, readable way within headings, page text, and alt tags. This helps search engines understand the topic of your content. Overly repetitive or unnatural use of keywords can be counterproductive.

- If you have a very large site, ensure you have adequate server resources so Googlebot doesn’t slow down or pause crawling. Googlebot tries to be polite and monitors server response times in real time so it doesn’t slow down your site for users.

- Ensure all of your important pages have a good internal linking structure so Googlebot can find them. Ideally, your important pages should be no more than three clicks from the homepage.

- Ensure your XML sitemaps are up to date and formatted correctly.

- Be mindful of crawl traps and block them from being crawled. These are sections of a site that can produce an almost infinite number of URLs; the classic example is a web-based calendar with links to pages for multiple years, months, and days.

- Block pages that offer little or no value. Many ecommerce sites have filtering (faceted search) and sorting functions that can add hundreds of thousands, or even millions, of additional URLs to your site.

- Block internal site search results, as these can also generate thousands or millions of additional URLs.

- For very large sites, try to earn external deep links as part of your link building strategy, as they also signal to Google that those pages are important. Those deep inbound links also help the other deeper pages on the site.

- Link to the canonical version of your pages and avoid tracking parameters or click trackers like bit.ly. This also helps avoid redirect chains, which add overhead for your server, for crawlers, and for users.

- Ensure that your Content Delivery Network (CDN), which hosts resources such as images, JavaScript, and CSS files needed to render the page, is not blocking Googlebot.

- Avoid cloaking, i.e. detecting the user-agent string and serving different content to Googlebot than to users. This is not only more difficult to set up, maintain, and troubleshoot, but it also violates Google’s spam policies (part of Google Search Essentials, formerly the Webmaster Guidelines).
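Stripping tracking parameters before you output internal links is straightforward with the standard `URL` API. The list of parameters treated as tracking-only here (`utm_*`, `gclid`, `fbclid`) is an assumption; adjust it to your own analytics setup, and keep any parameter that changes what the page shows.

```javascript
// Remove tracking parameters and fragments so internal links point at one canonical URL.
// The parameter list is an assumption for illustration; adapt it to your own site.
const TRACKING_PARAMS = new Set(["gclid", "fbclid"]);

function stripTracking(href) {
  const url = new URL(href);
  // Copy the keys first so deleting doesn't disturb iteration.
  for (const key of [...url.searchParams.keys()]) {
    if (key.startsWith("utm_") || TRACKING_PARAMS.has(key)) {
      url.searchParams.delete(key);
    }
  }
  url.hash = ""; // fragments aren't sent to the server anyway
  return url.href;
}

console.log(
  stripTracking("https://www.example.com/tools/drill?id=42&utm_source=newsletter&gclid=abc#reviews")
); // https://www.example.com/tools/drill?id=42
```

When every parameter is removed, the `URL` API drops the `?` entirely, so the result is a clean path.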

How Can You Resolve Crawling Issues?

There are several steps you can take to resolve crawling issues and ensure Googlebot is interacting with your site appropriately:

- Register your site with Google Search Console: This is Google’s dashboard showing how Google sees your website. It provides many reports to identify problem areas on your site and gives Google a way to contact you. The indexing reports will show you areas of your site that have problems and provide a sample list of affected URLs.

- Audit Robots.txt: Carefully audit your robots.txt file and ensure it doesn’t contain any disallow rules blocking important site sections from being crawled. A single typing mistake could prevent proper discovery.

- Crawl your own site: There are many crawling tools available that can run on your own PC, as well as SaaS-based services at varying price points. They provide a summary and a series of reports on what they find, which is often more comprehensive than Google Search Console, as its reports usually show only a sample of URLs with issues.

- Check for redirects: Double-check your .htaccess file and server configurations for any redirect rules that may unintentionally prevent Googlebot from reaching the intended pages. Test all the redirects to ensure they resolve properly, ideally in a single hop.

- Identify low-quality links: Audit all backlinks to find and disavow those coming from link schemes or unrelated sites that could be seen as manipulative. Disavowing low-quality links may help unblock crawling to valuable pages.

- Assess the XML sitemap file: Validate that the sitemap.xml file points to all intended internal pages and is generated regularly. Outdated sitemaps will be missing your new content, which may keep it from being discovered and crawled. If your XML sitemaps contain links to URLs that redirect or return 4XX errors, search engines may trust them less and rely on them less for future crawling, diminishing their entire purpose.

- Analyze server response codes: In Google Search Console, check the Crawl Stats report, troubleshoot issues with the URL Inspection tool, and carefully monitor page response codes for timeouts and blocked resources.

- Analyze your log files: There are tools and services that will allow you to upload your server logs and analyze them. This can be useful for large sites that are having problems getting content discovered and indexed.
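Redirect chains and loops are easy to spot once you have a list of redirect rules, for example exported from your server configuration or from a crawl of your site. In this sketch, `redirects` is a hypothetical map from source path to target path; any source that takes more than one hop, or loops back on itself, is flagged.

```javascript
// Follow redirect rules from a starting path; report the full path and whether it loops.
// Terminates because each step either visits a new path or detects a repeat.
function traceRedirect(redirects, start) {
  const path = [start];
  const seen = new Set([start]);
  let current = start;
  while (redirects.has(current)) {
    current = redirects.get(current);
    path.push(current);
    if (seen.has(current)) return { path, loop: true };
    seen.add(current);
  }
  return { path, loop: false };
}

// Hypothetical redirect rules: source path -> target path.
const redirects = new Map([
  ["/old-tools", "/tools-2019"],
  ["/tools-2019", "/tools"],
  ["/a", "/b"],
  ["/b", "/a"],
  ["/promo", "/sale"],
]);

for (const source of redirects.keys()) {
  const { path, loop } = traceRedirect(redirects, source);
  const hops = path.length - 1;
  if (loop) console.log(`LOOP:  ${path.join(" -> ")}`);
  else if (hops > 1) console.log(`CHAIN: ${path.join(" -> ")} (${hops} hops)`);
}
```

Fixing a chain usually means pointing every old source straight at the final destination, e.g. `/old-tools` directly to `/tools`.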

A Hat Tip to Bingbot

While this article is focused on Googlebot, we don’t want to forget about Bingbot, the crawler used by Bing. Many of the same principles that work for Googlebot also work for Bingbot, so you usually don’t need to do anything different.

However, Bingbot can at times be quite aggressive in its crawl rate, so if you need to reclaim server resources, you can adjust the crawl rate with the Crawl Control settings in Bing Webmaster Tools. I’ve done this in the past on huge sites to shift most of the crawling to off-peak hours; your server admins will thank you for that.

Conclusion

Google’s advice is not to optimize your site for Google but to optimize it for users, and that’s true when it comes to content and page experience. However, as we’ve laid out in this article, there are many intricacies in the way a website is developed and maintained that can affect how Googlebot discovers and crawls your site. For a small to medium-sized site that might be powered by WordPress, many of these considerations are not a factor. They become increasingly important, though, for e-commerce sites and other large sites that may have a custom Content Management System (CMS) and site features that can potentially generate thousands or millions of unnecessary URLs.

Googlebot will continue to play a vital role in how Google understands and indexes the internet in 2024 and beyond. While new technologies may emerge to analyze online content in different ways, Googlebot’s tried and true web crawling methodology remains highly effective at discovering fresh and relevant information at a massive scale.

The post Demystifying Google’s Web Crawling: The Role of Googlebot in 2024 appeared first on Vizion Interactive.

https://www.vizion.com/blog/demystifying-googles-web-crawling-the-role-of-googlebot-in-2024/
