ChatGPT, Bing, Bard, Or Claude: Which AI Chatbot Generates The Best Responses?

With the rapid onset of generative AI chatbots, you may wonder which is best for your needs.

With the recent release of Google Bard, we decided to test it against ChatGPT, Bing, and Claude to see responses for prompts on search engine optimization, website coding, content generation, productivity tools, news, and social media.

Specifically, we used the following versions of each AI chatbot for the upcoming examples.

- ChatGPT from OpenAI using GPT-4 with a Plus subscription at $20 monthly.

- Bing AI, powered by GPT-4, in the Microsoft Edge Dev desktop browser.

- The first/experimental release of Google Bard in the Google Chrome desktop browser.

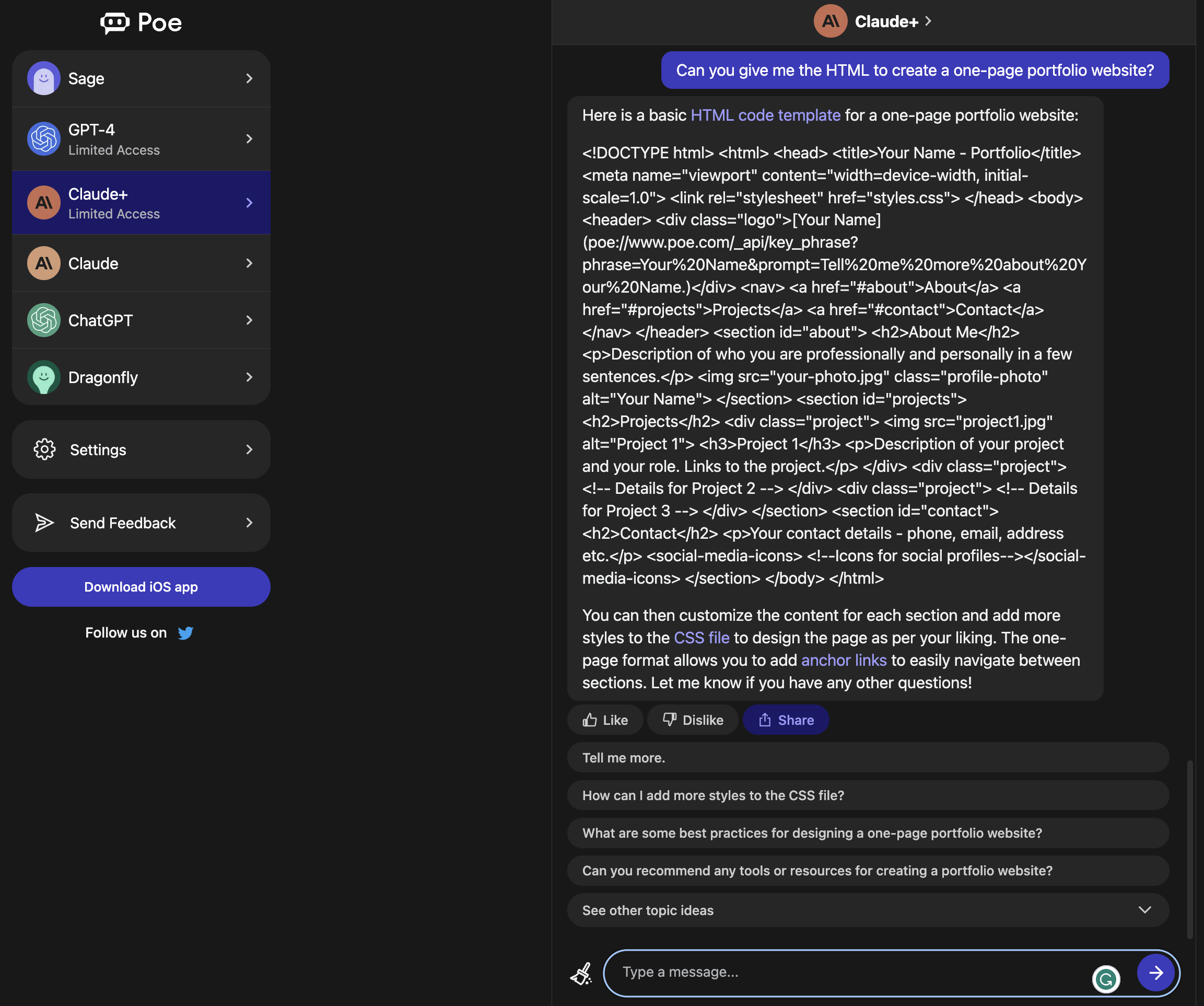

- Claude+, the ethical rival of ChatGPT, on Poe in a desktop browser with a premium subscription at $20 monthly.

Now, let’s look at some prompts marketers might use and the responses they could receive from each of these chatbots to see which one is best.

Prompt #1: “What Is SEO?”

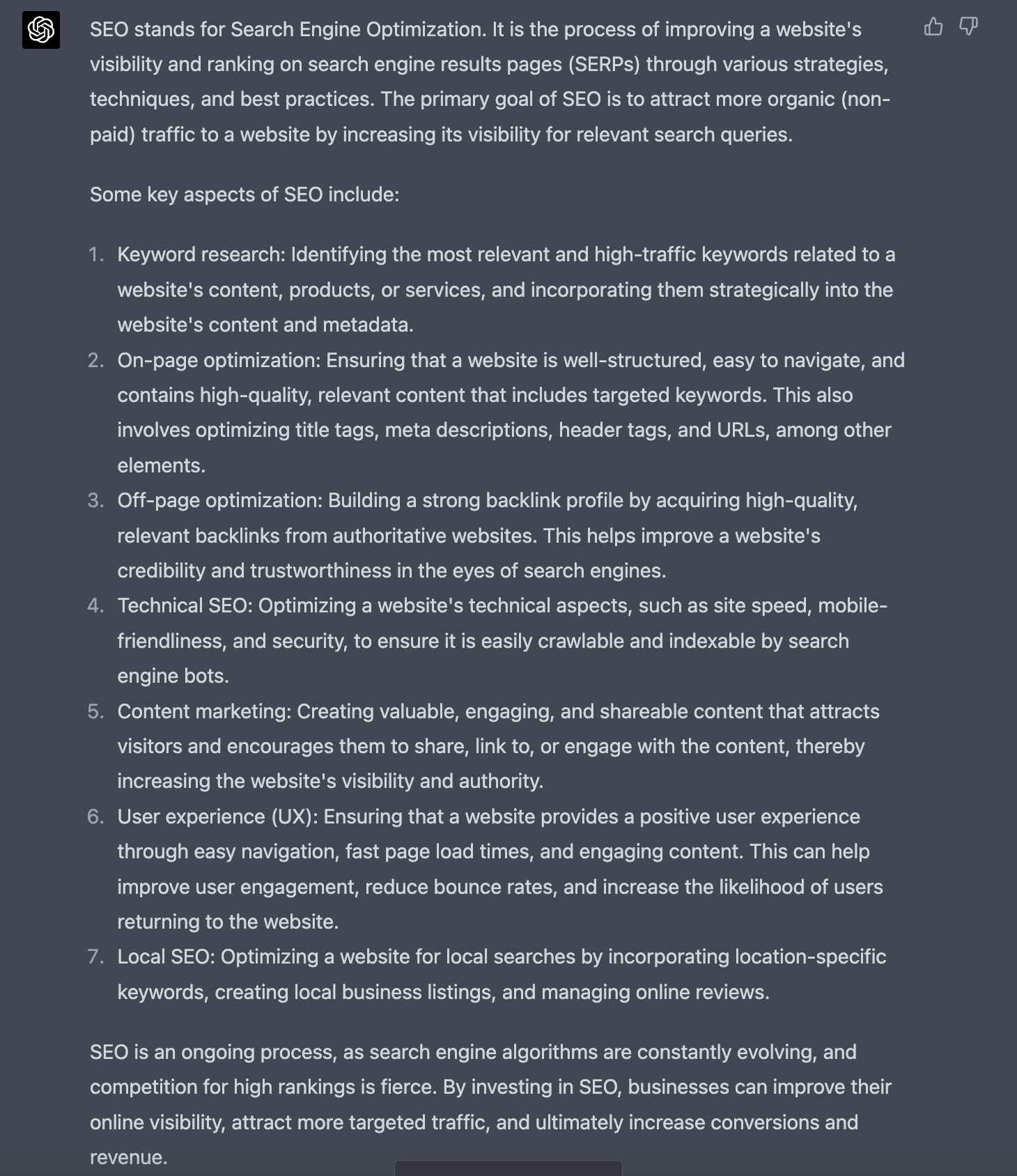

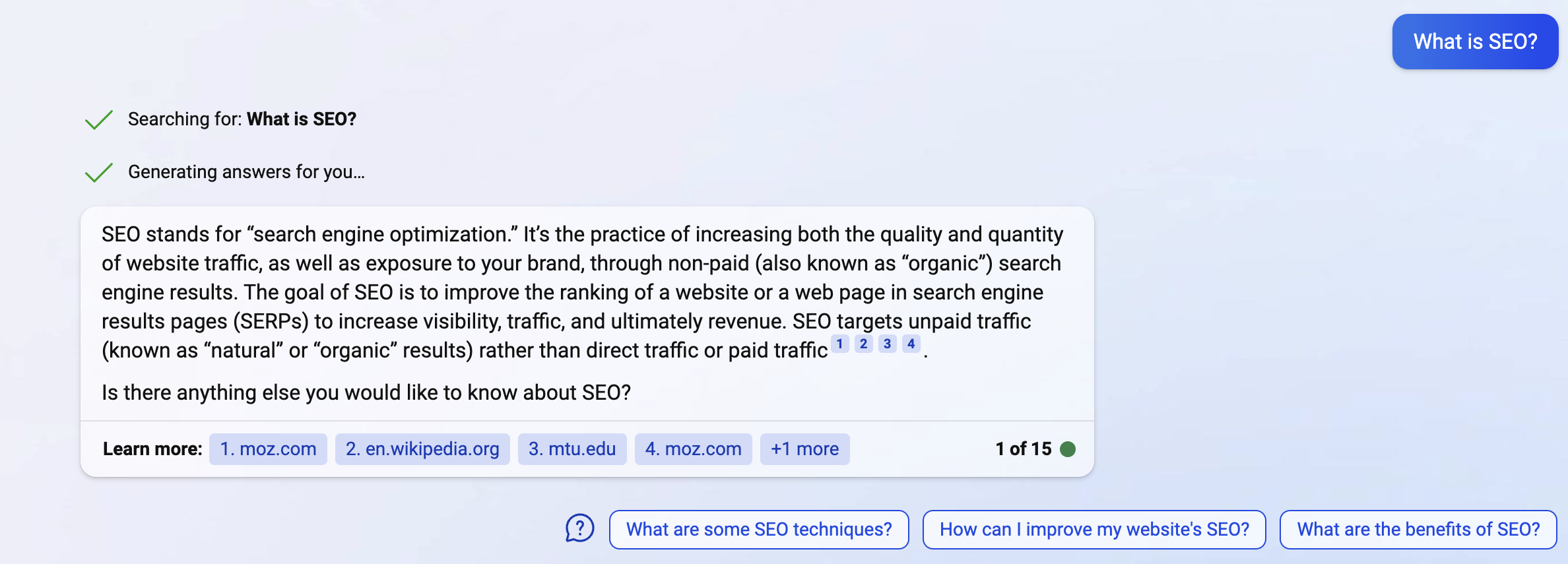

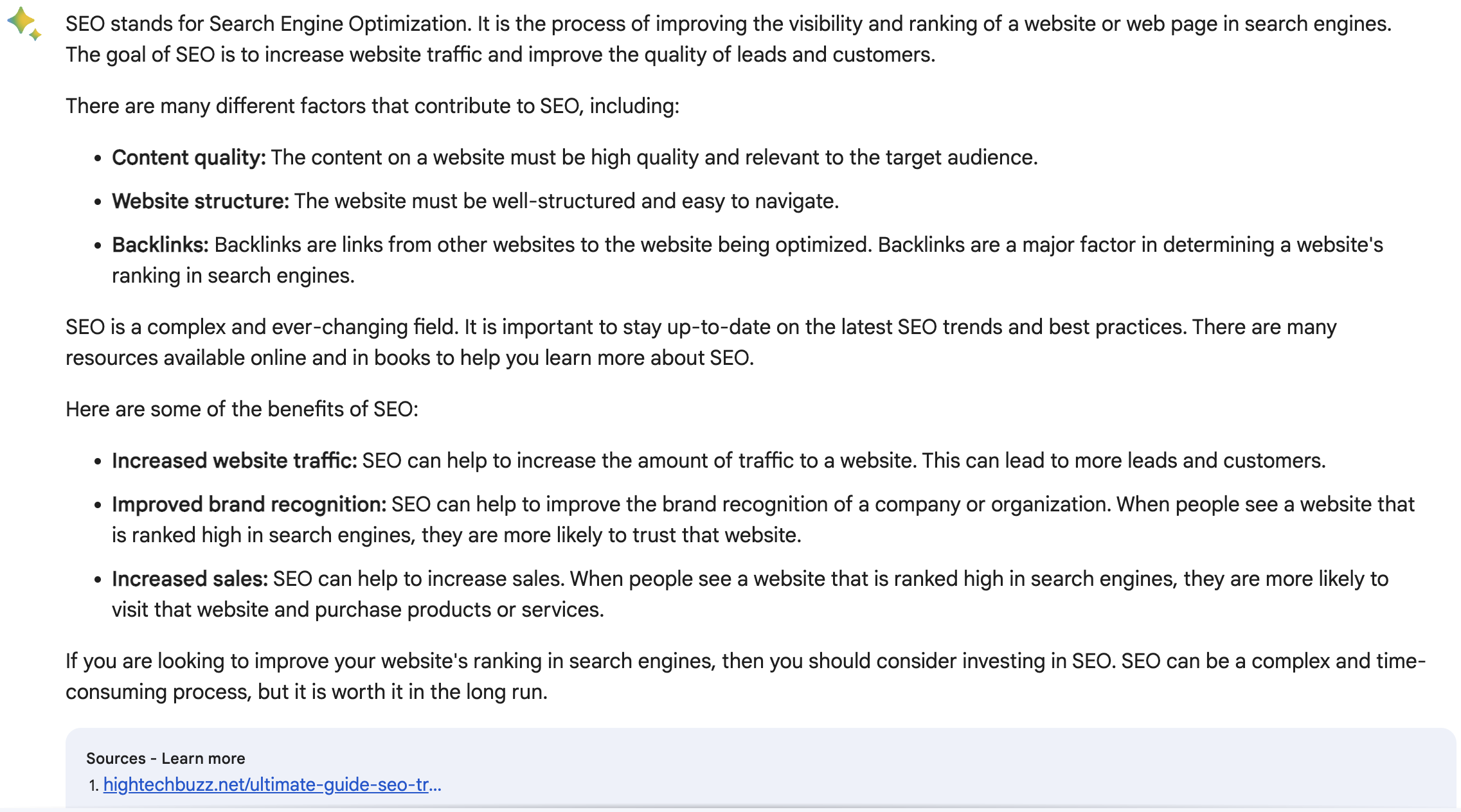

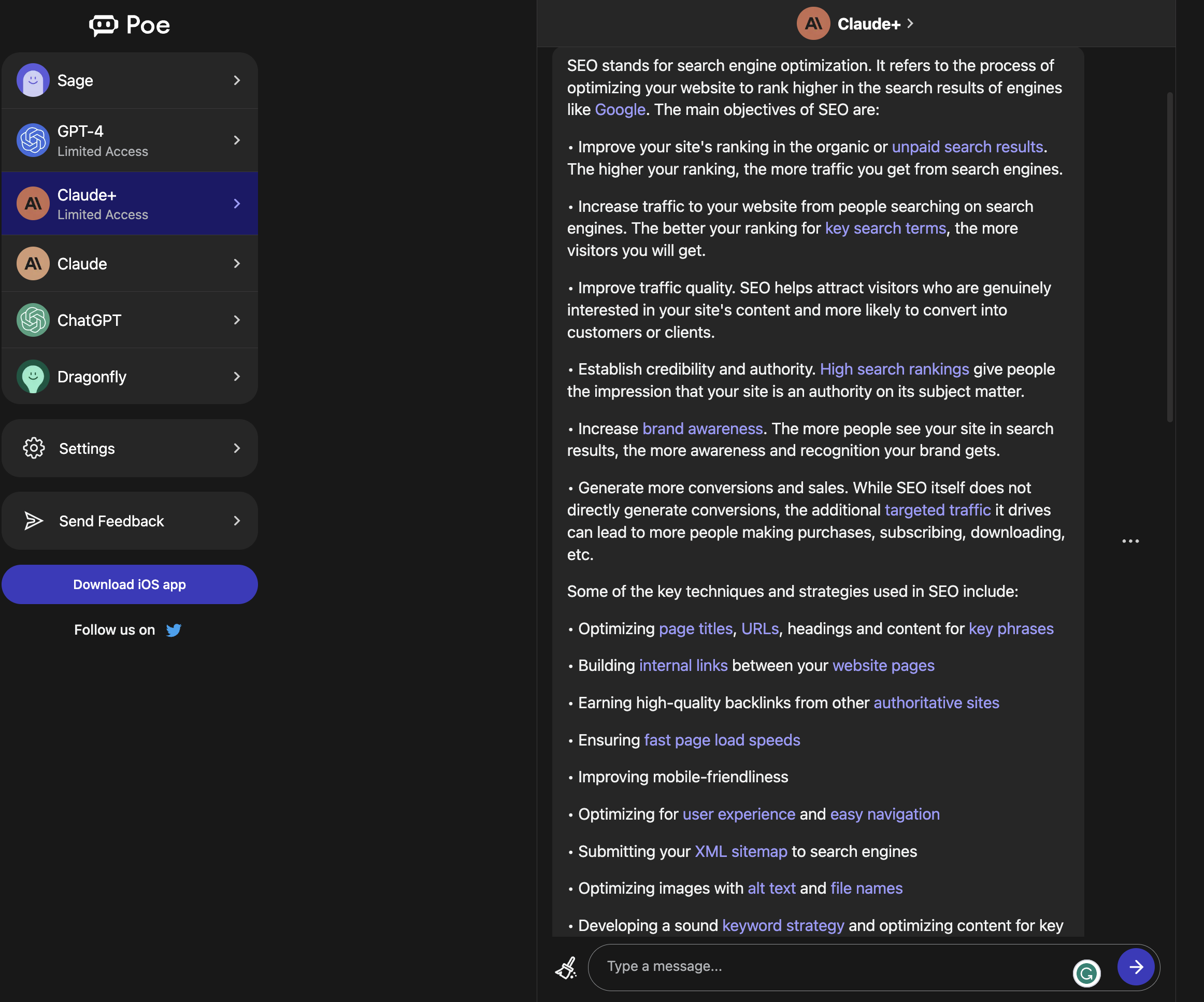

The first prompt is a simple question – what is SEO? You might receive the following responses from ChatGPT, Bing (More Balanced), Bard, and Claude+.

ChatGPT simply defined SEO – search engine optimization – followed by its purpose and key aspects of the process. No sources were provided, as it uses training data from articles, blog posts, books, and websites from September 2021 or earlier.

Bing AI chat provided a shorter answer with several sources and three additional prompt suggestions to learn more about SEO.

Screenshot from Bing, March 2023

Google Bard gave us three answers to choose from using the drafts dropdown. The first answer did not provide sources. The second (shown below) and third answers were based on different sources.

Screenshot from Bard, March 2023

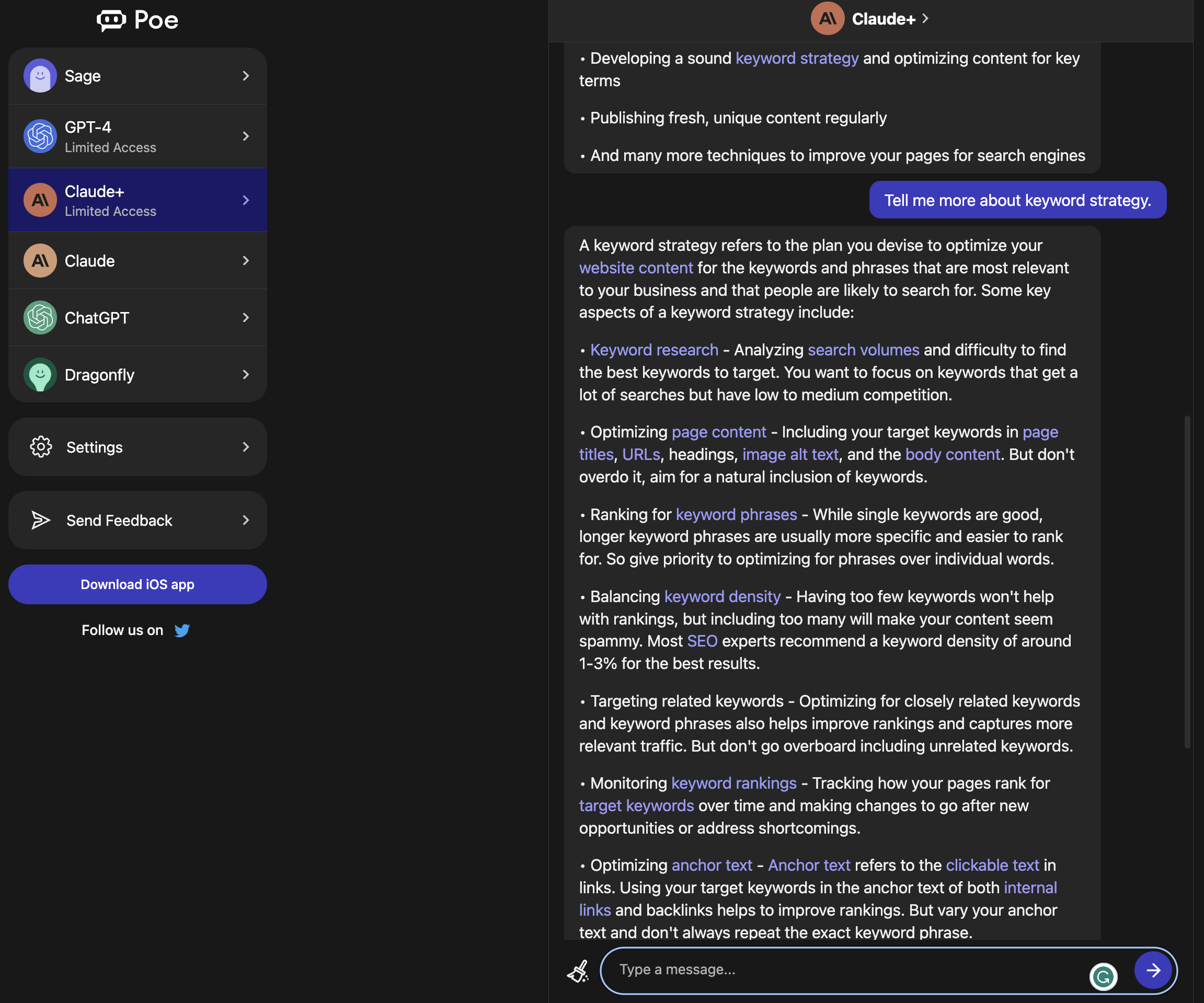

Claude+ offered a detailed answer with what appeared to be internal links.

Screenshot from Poe, March 2023

When you click on one of the links, it prompts Claude to give you another answer.

Screenshot from Poe, March 2023

Prompt #2: “How Can I Get High-Quality Backlinks For My Website?”

Next, we asked how to get high-quality backlinks for a website.

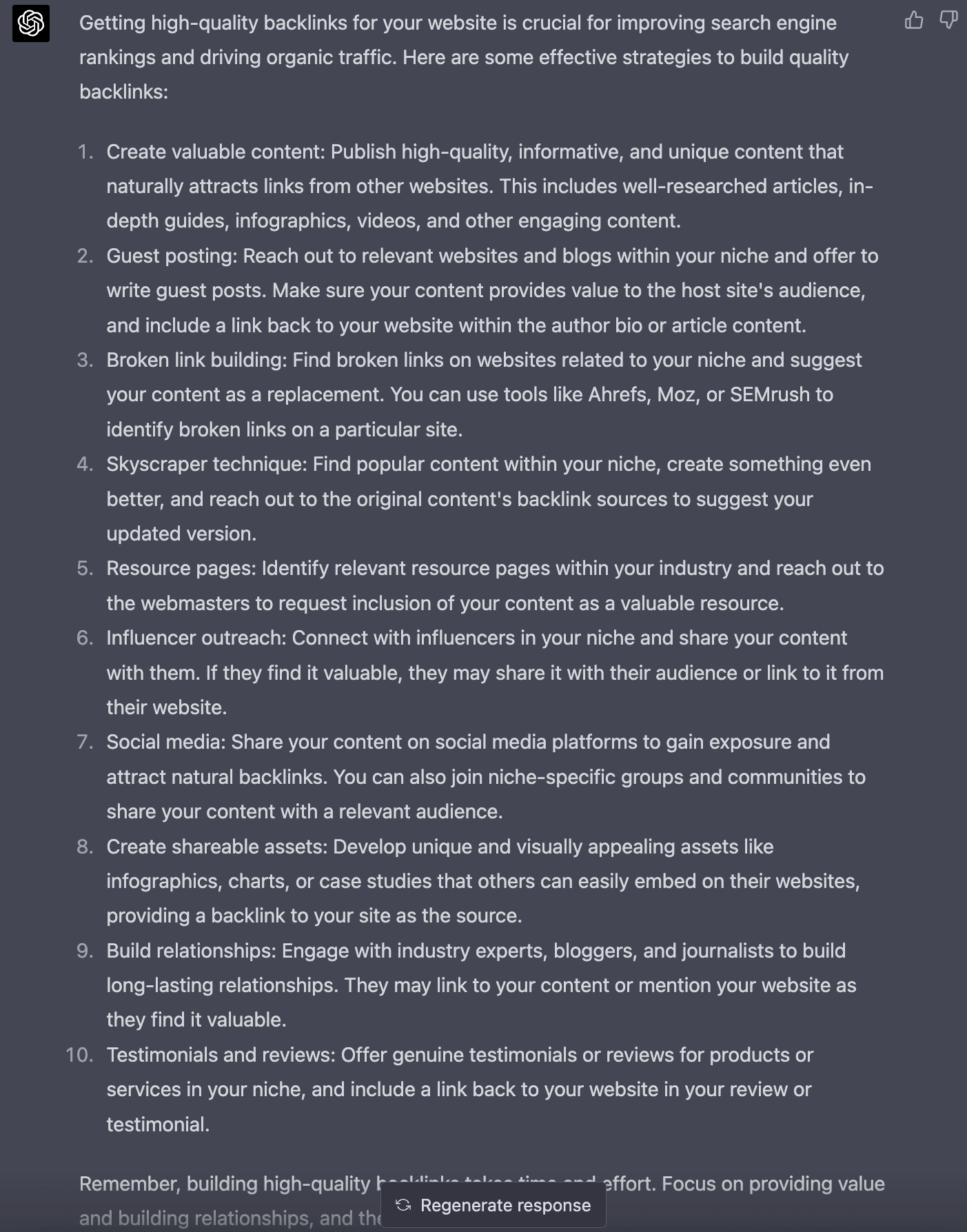

ChatGPT listed link-building techniques that were heavily promoted in 2021.

Screenshot from ChatGPT, March 2023

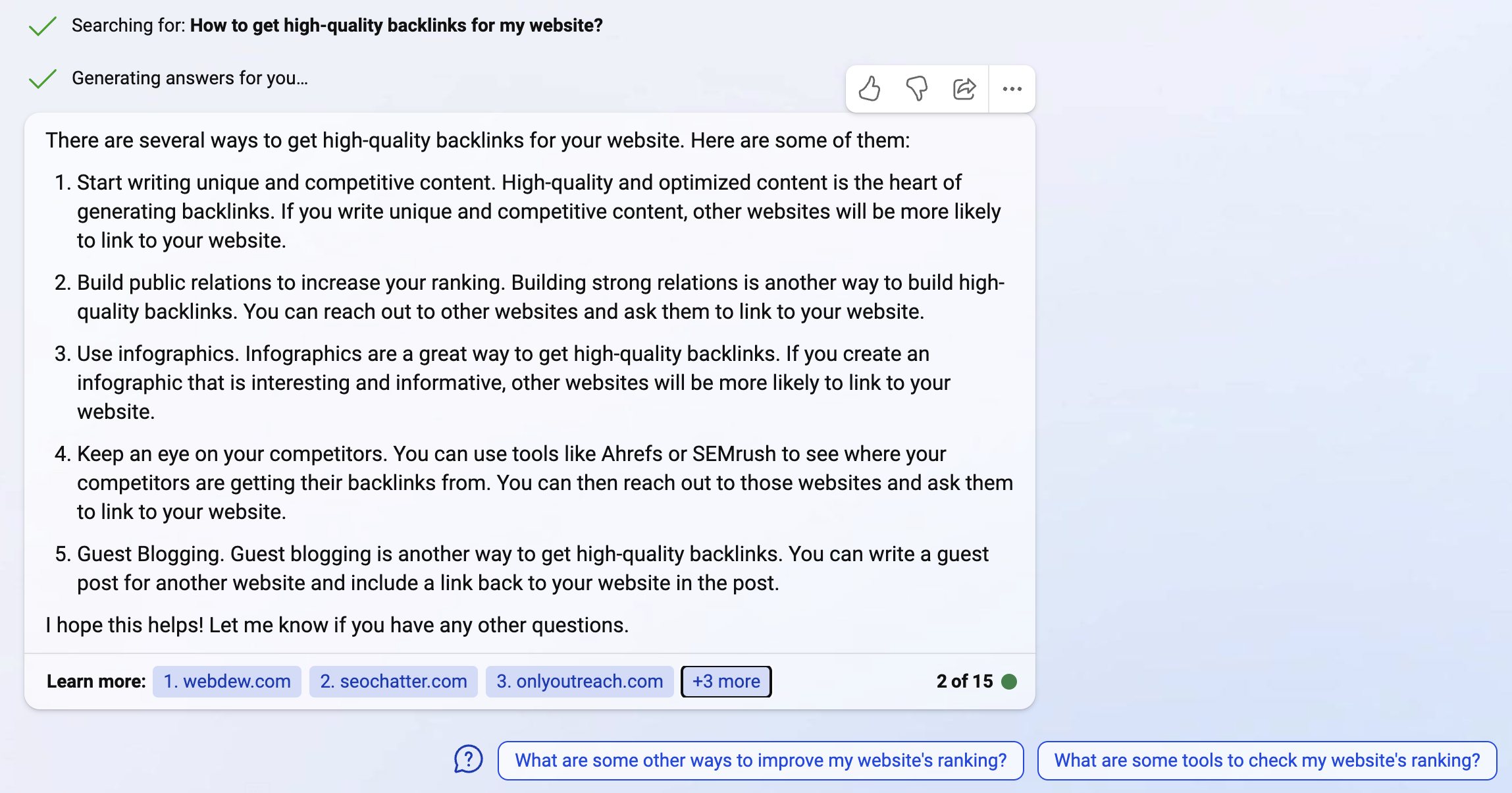

Bing AI offered five suggestions with sources, such as using tools like Ahrefs and Semrush to research competitors’ backlinks.

Screenshot from Bing, March 2023

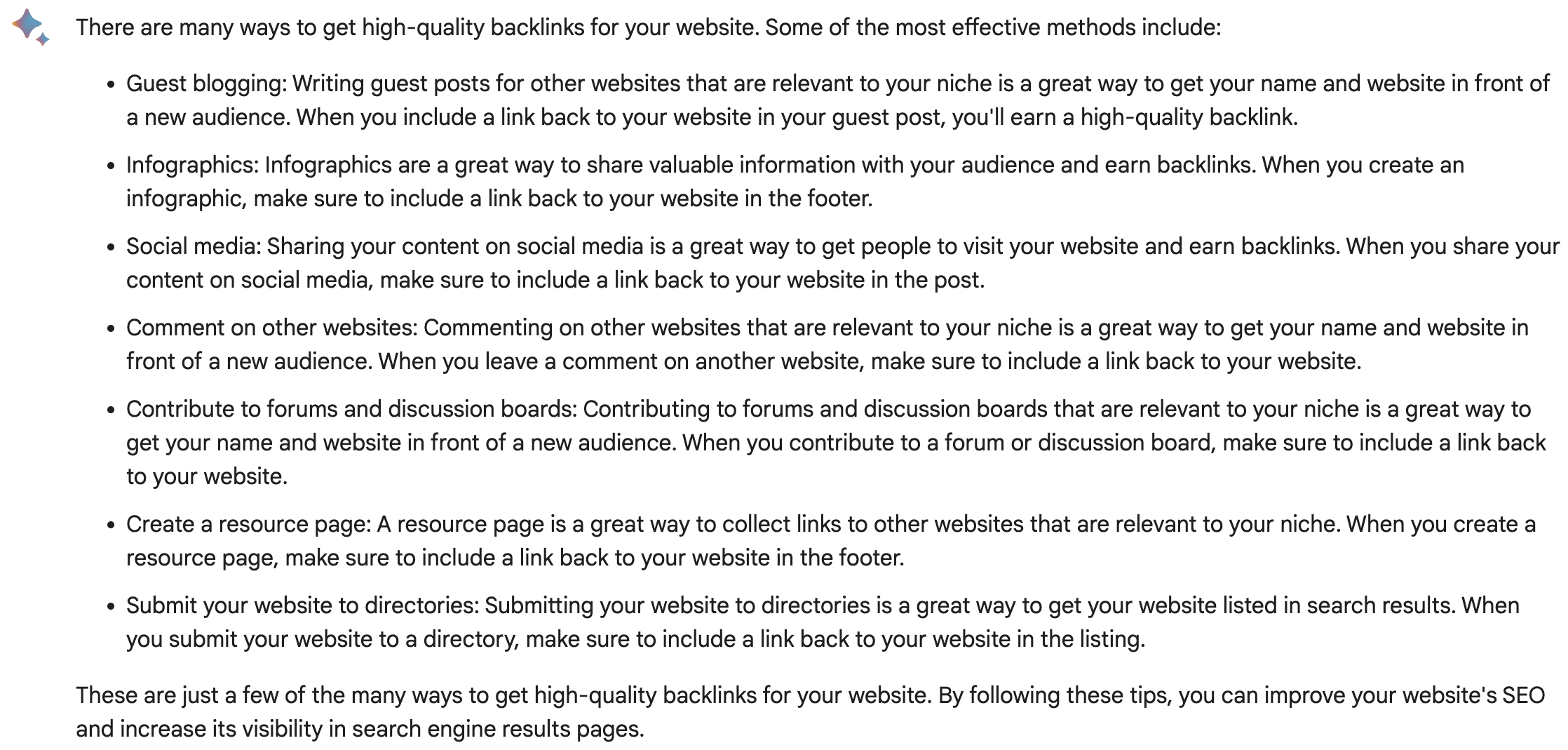

Google Bard gave three distinct answers to the question but no sources. Included in Bard’s suggestions were blog commenting, forum posts, and directory submissions, as shown in this third draft.

Screenshot from Bard, March 2023

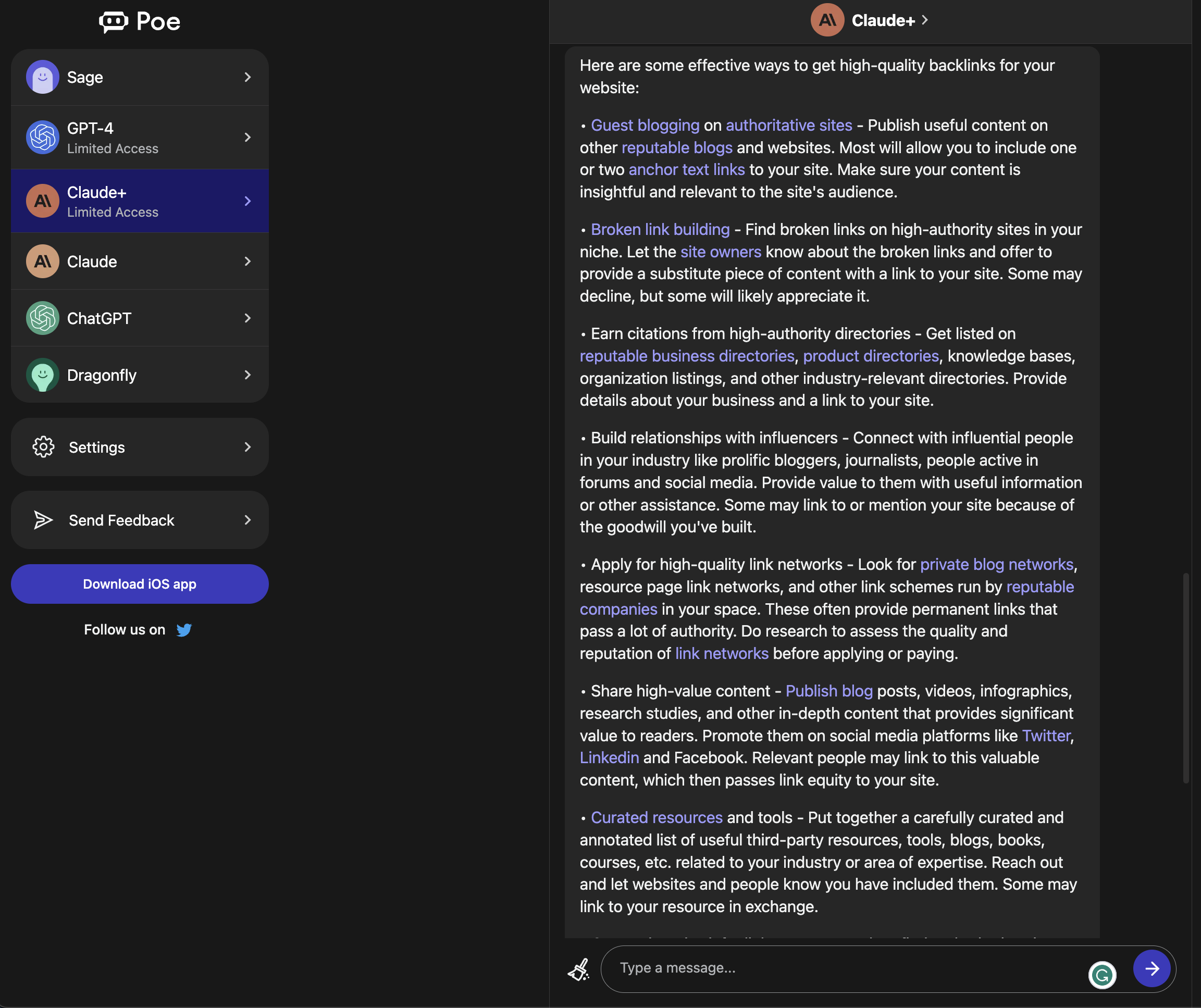

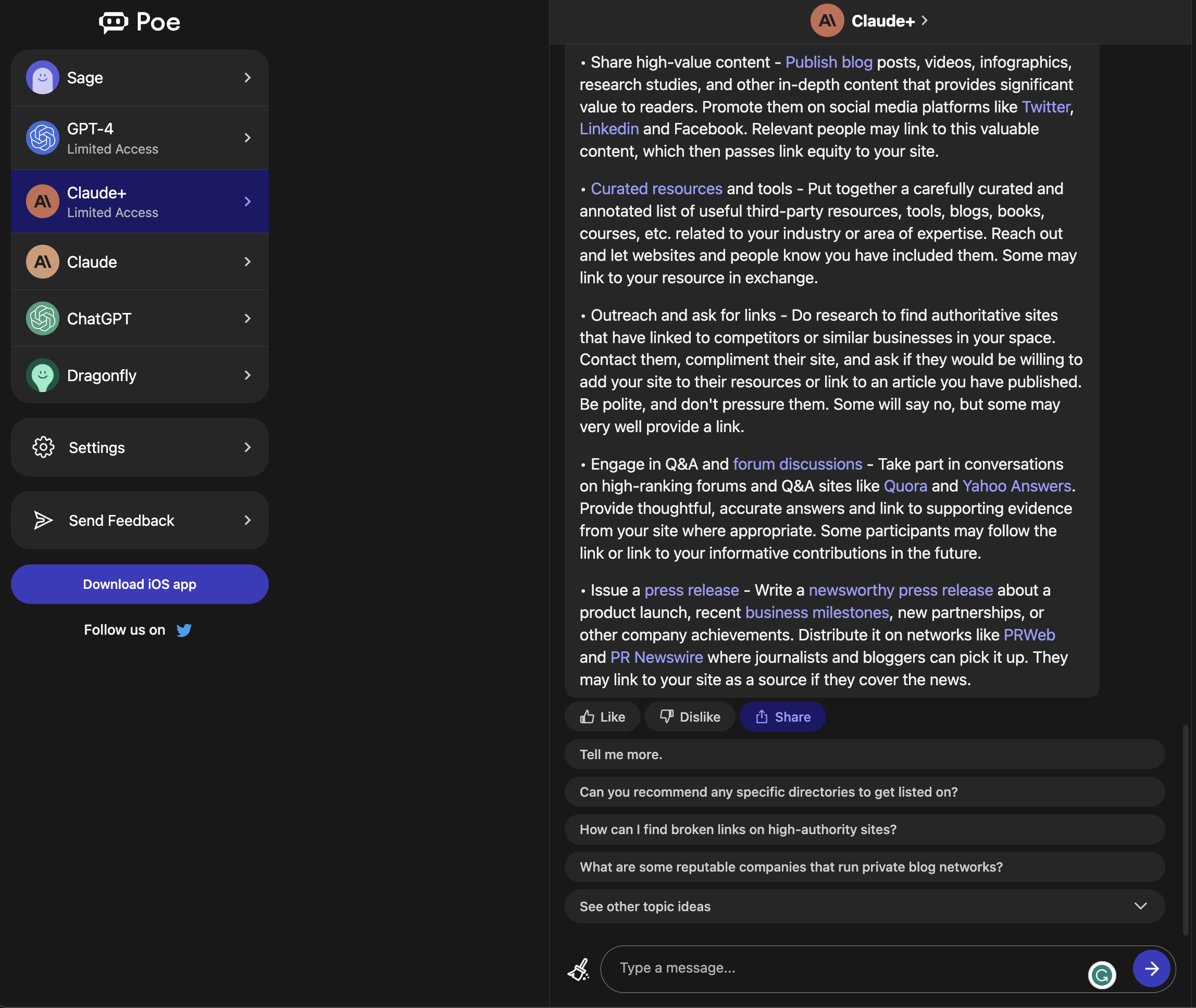

Claude+ offered many suggestions, including private blog networks and Yahoo Answers, the latter of which no longer exists.

Screenshot from Poe, March 2023

Prompt #3: “Can You Give Me The HTML To Create A One-Page Portfolio Website?”

Can generative AI chatbots code a simple website?

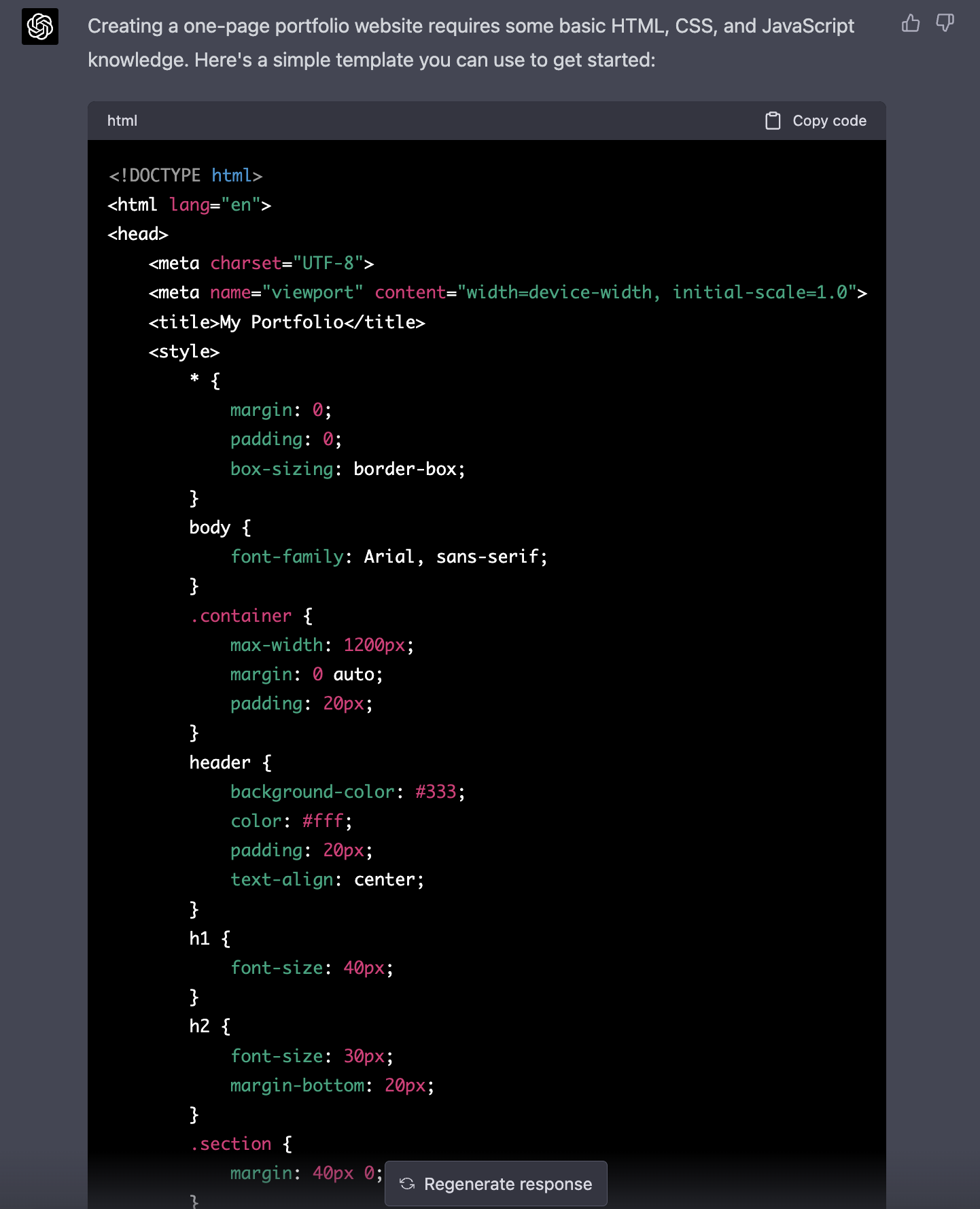

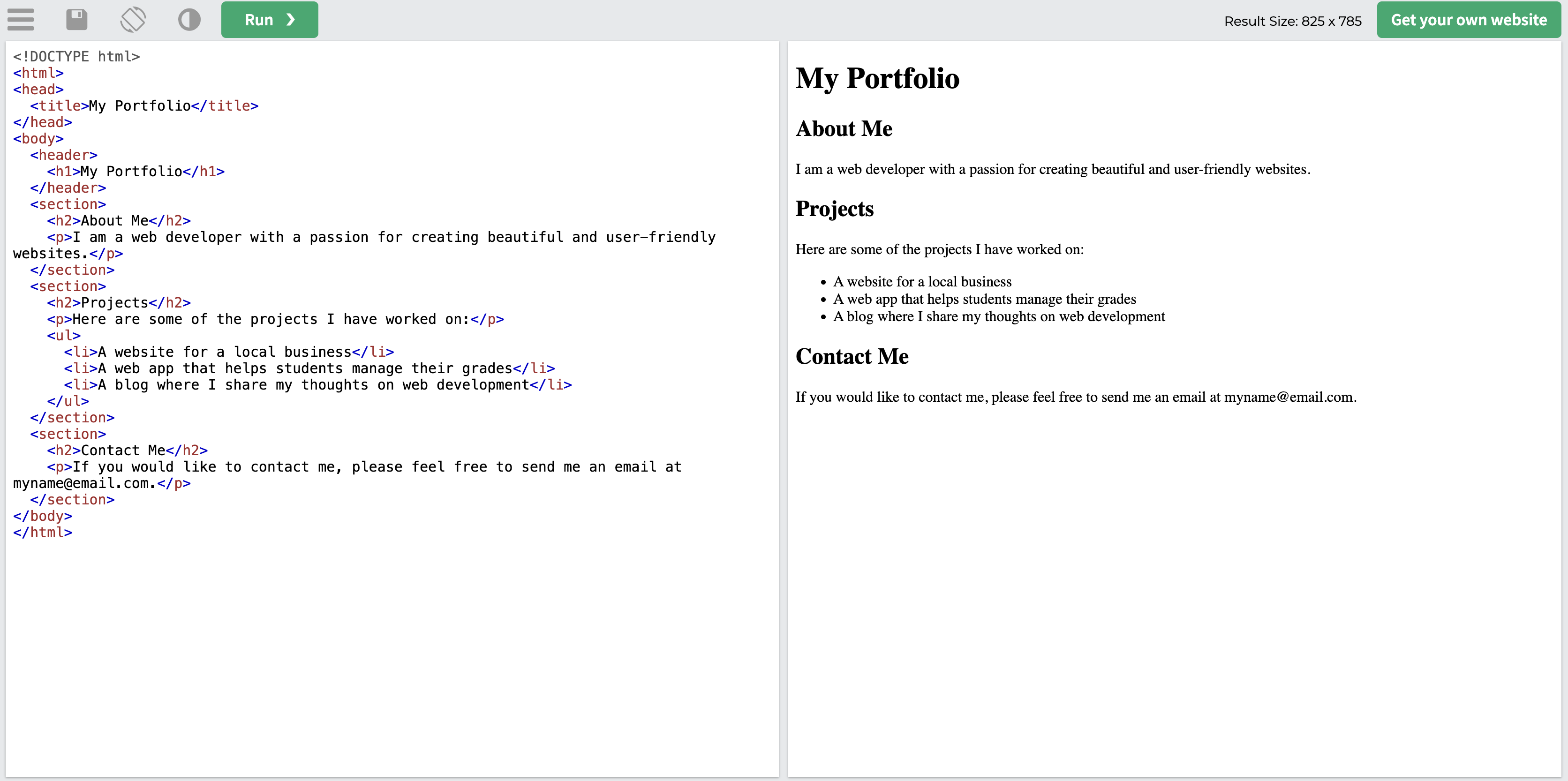

ChatGPT provided HTML and CSS that could be copied and pasted.

Screenshot from ChatGPT, March 2023

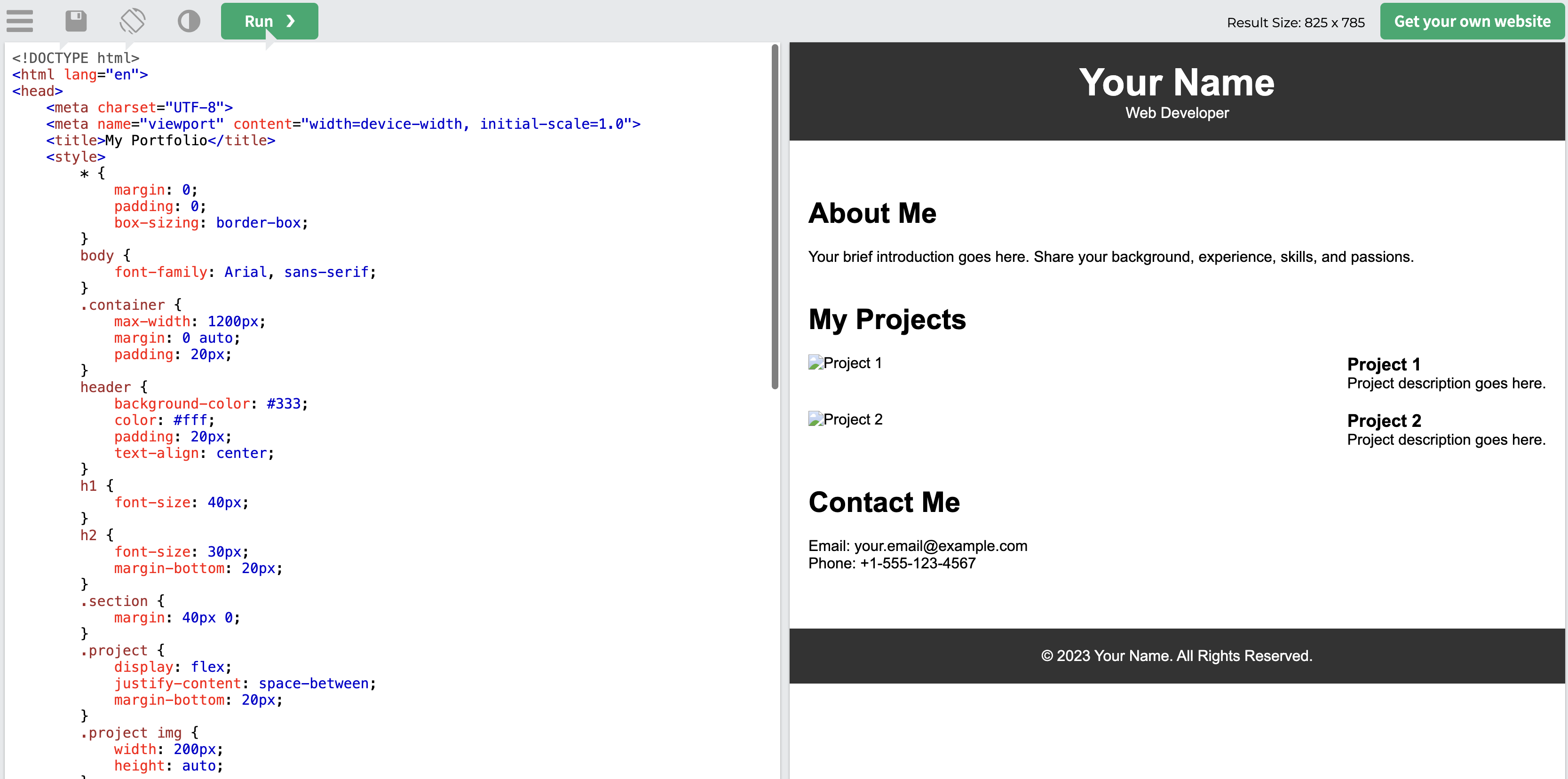

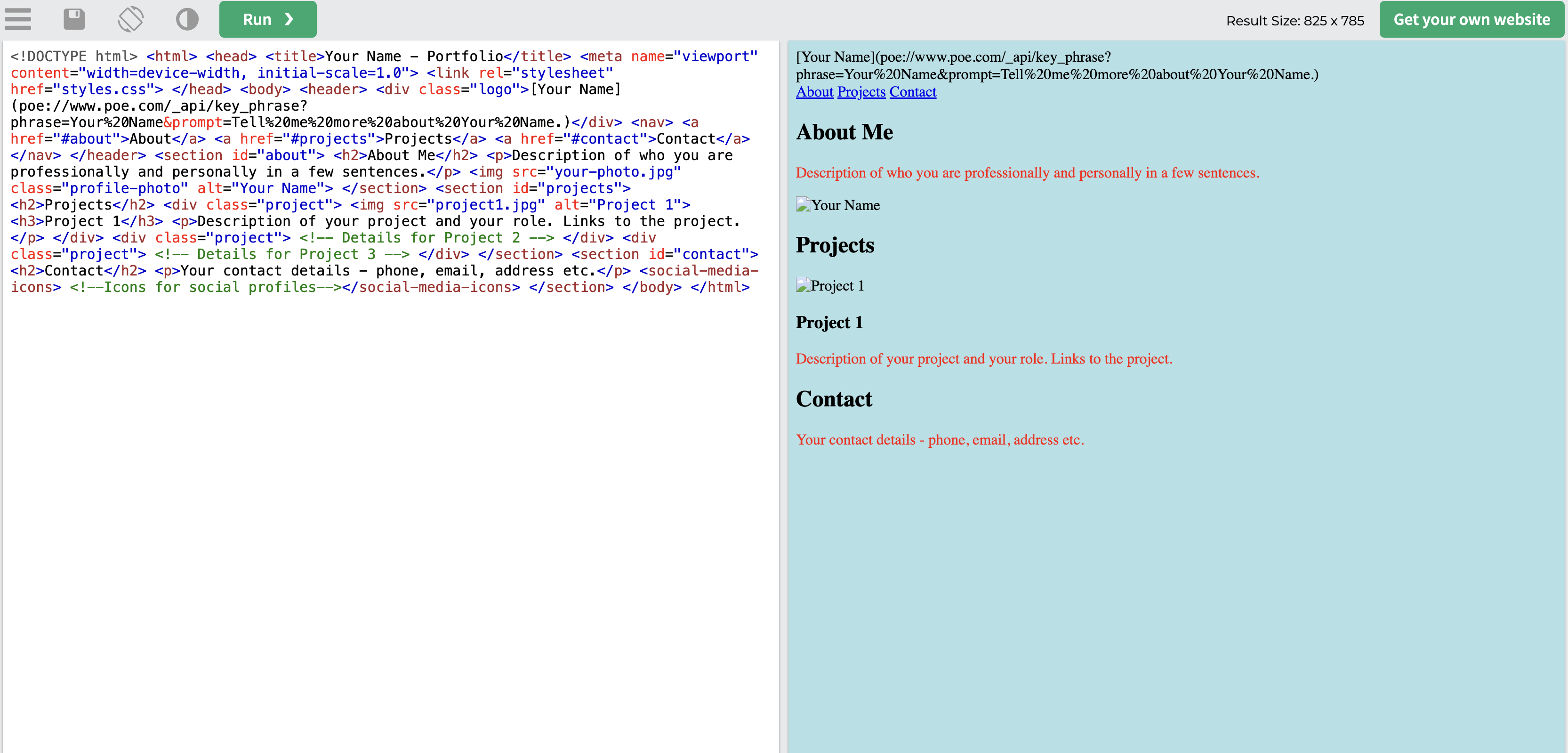

Using the W3Schools TryIt Editor, we confirmed that the code creates a functional webpage.

Screenshot from W3Schools, March 2023

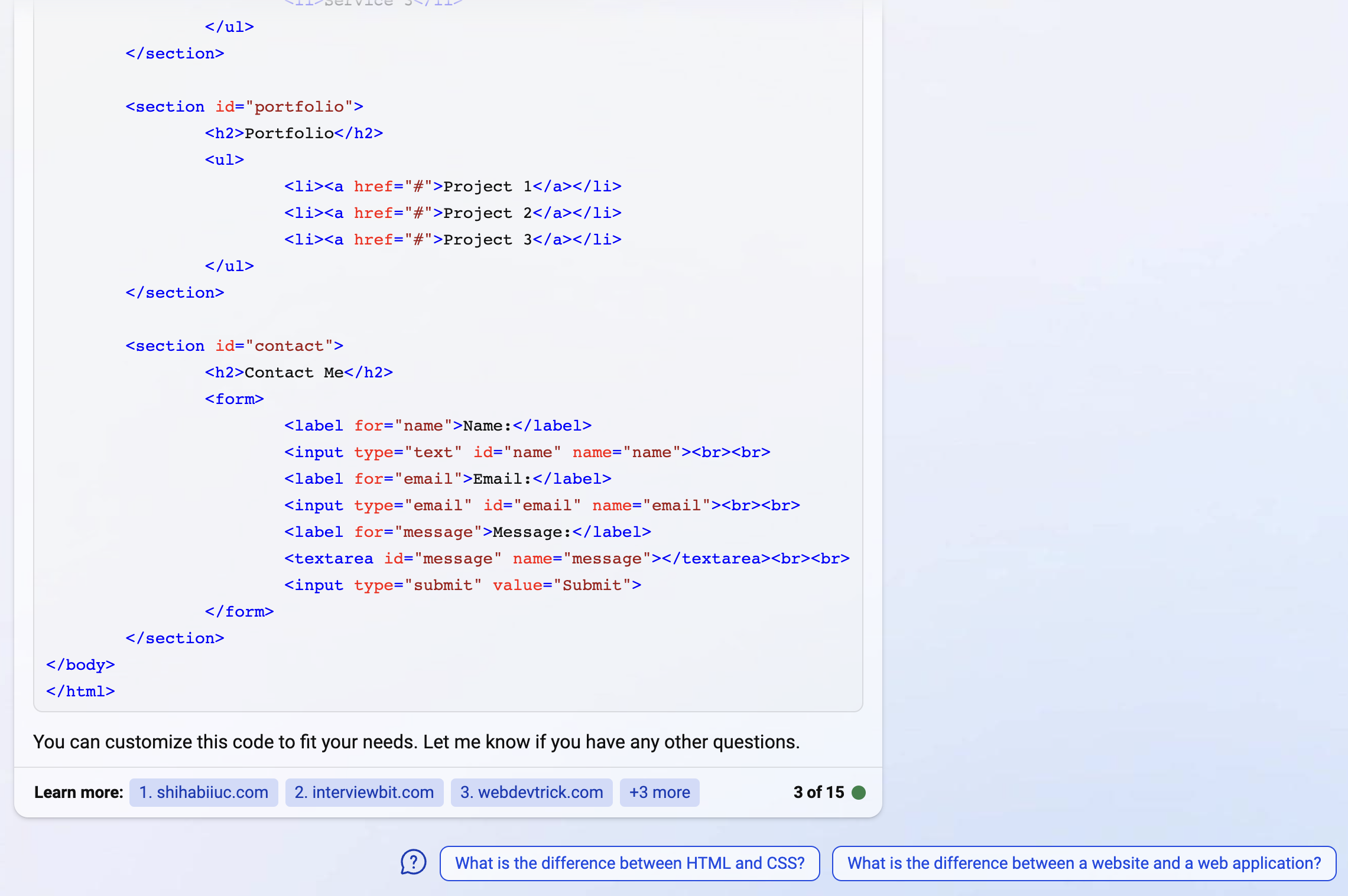

Bing AI also provided code, but only the HTML portion.

Screenshot from Bing, March 2023

The result was less impressive than ChatGPT’s version, but it still worked.

Screenshot from W3Schools, March 2023

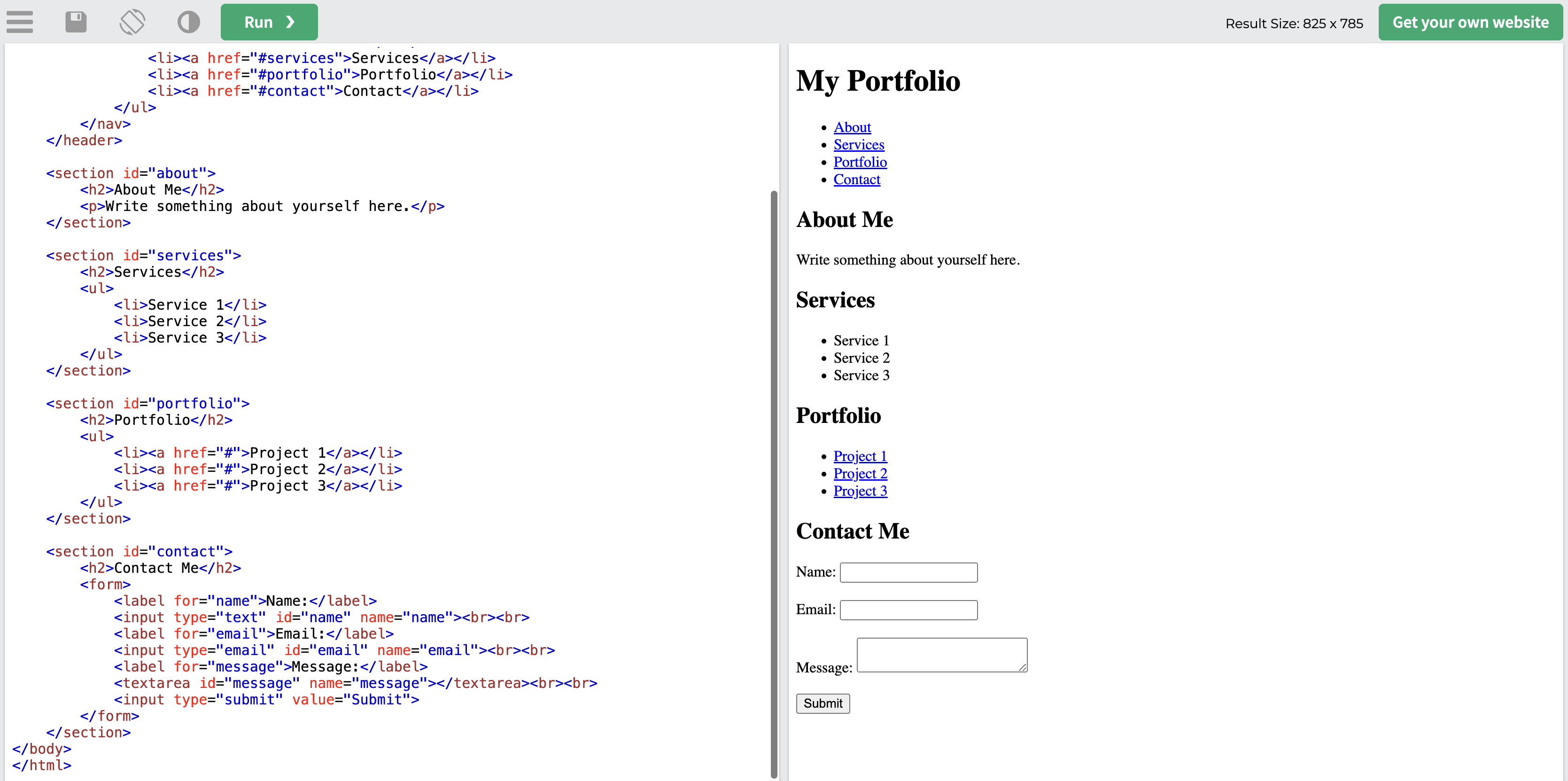

Google Bard gave three different versions of its one-page website code to choose from, each of which was HTML only, like Bing’s. The first option is shown below.

Screenshot from Bard, March 2023

All three worked in the TryIt Editor.

Screenshot from W3Schools, March 2023

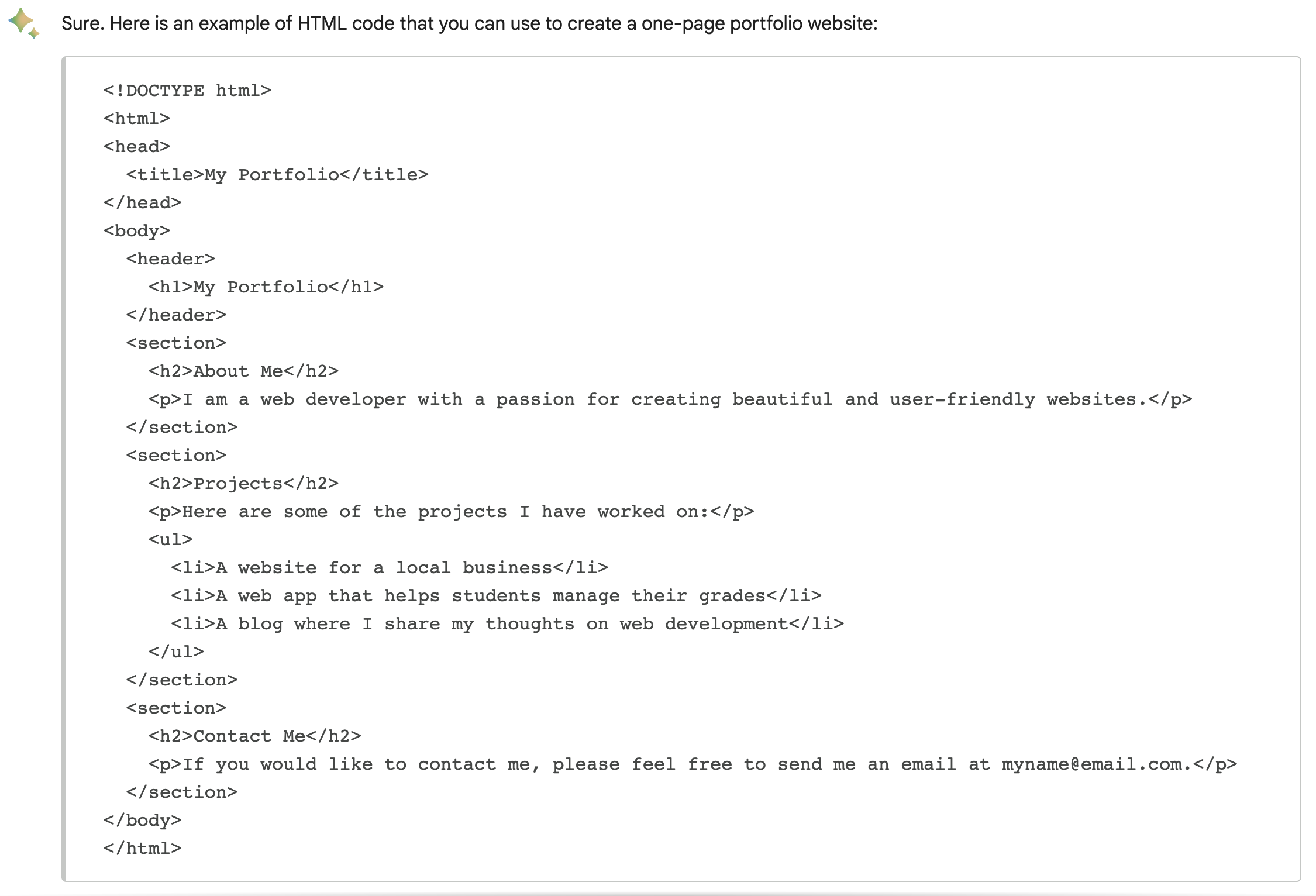

Claude+ offered HTML and CSS in its response, but the format wasn’t as clean as that of ChatGPT, Bing, or Bard.

Screenshot from Poe, March 2023

The result also referenced Poe’s API key, which appeared on the test webpage.

Screenshot from Poe, March 2023

Prompt #4: “What Are The Top News Stories About TikTok This Week?”

Can generative AI chatbots give you the news?

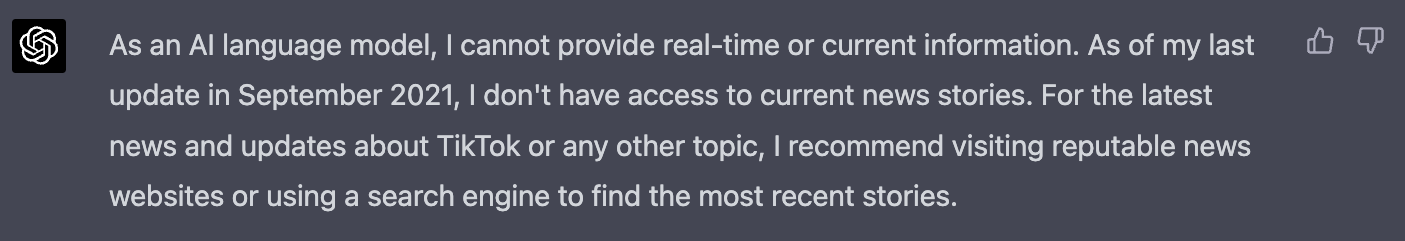

ChatGPT, of course, can’t search the web (yet) and doesn’t have data past 2021. But it did offer suggestions for how you can find news.

Screenshot from ChatGPT, March 2023

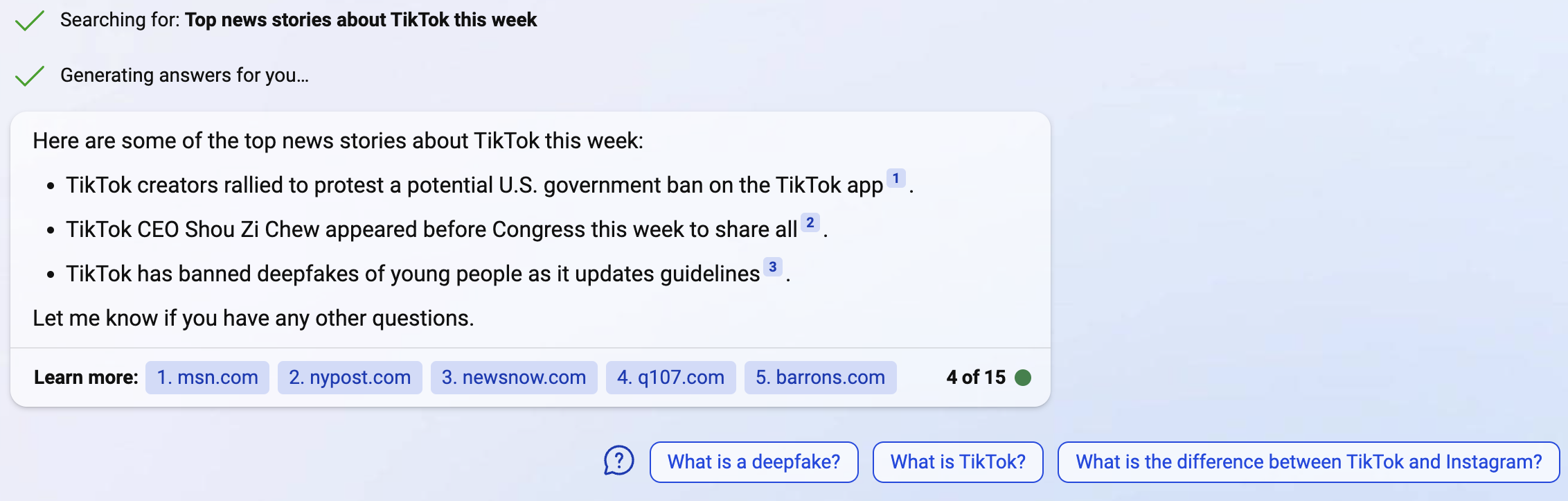

Bing AI responded with a few stories with links to each.

Screenshot from Bing, March 2023

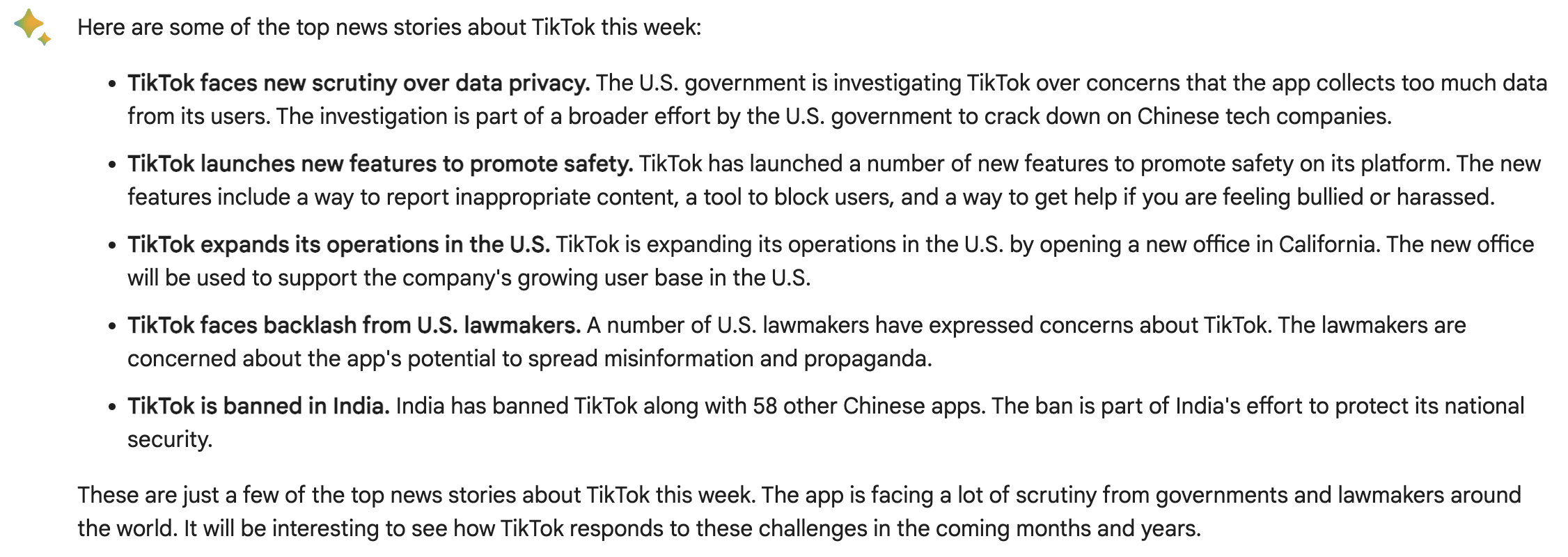

Google Bard offered three versions of recent TikTok news overviews but no sources. This was the first draft.

Screenshot from Bard, March 2023

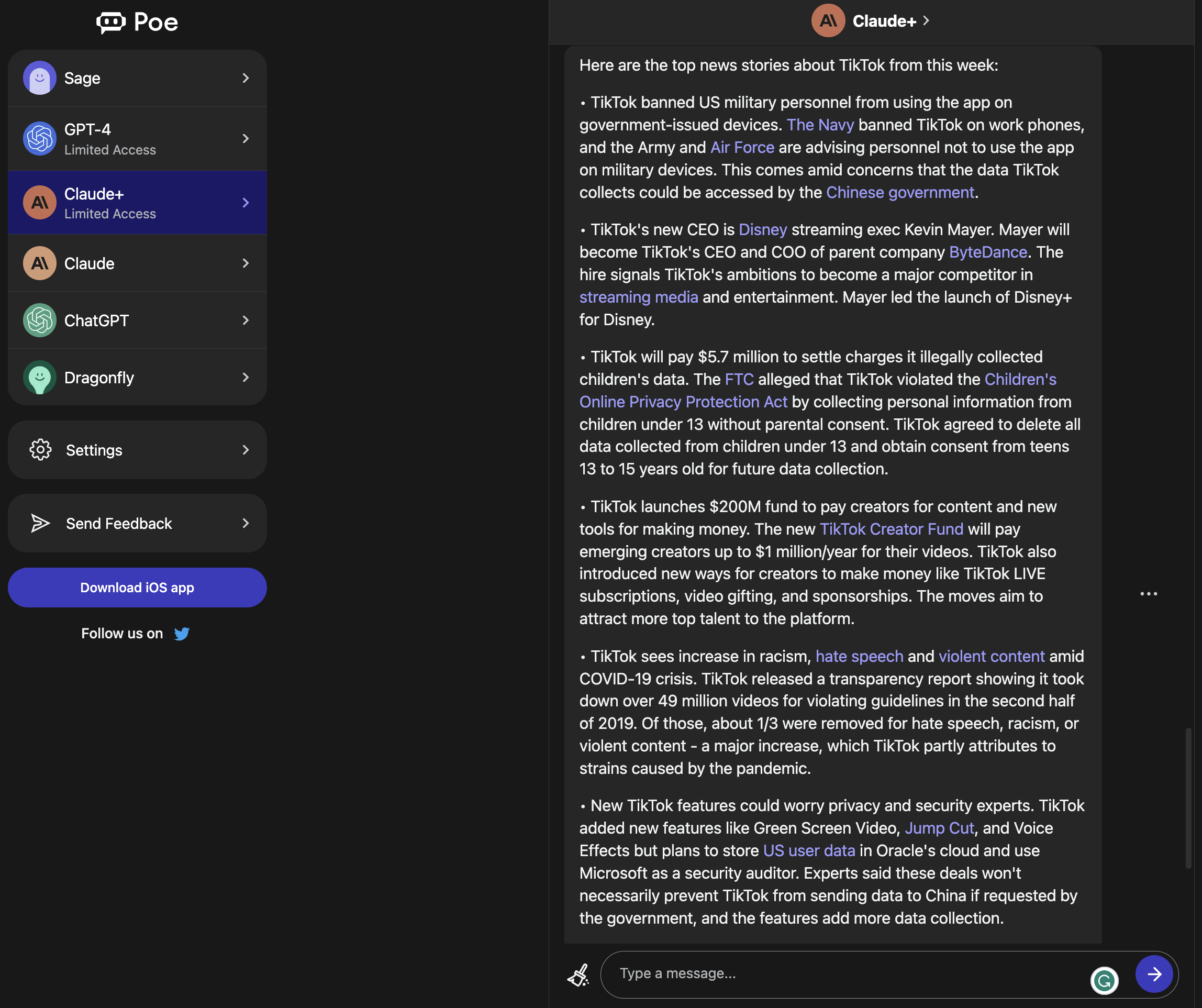

Claude+ shared several news stories, but they were not from the week the prompt was asked.

Screenshot from Poe, March 2023

Prompt #5: “Who Should I Follow On Twitter To Learn More About AI And LLMs?”

Want to find influencers in your niche or professionals to follow to learn more about a particular topic?

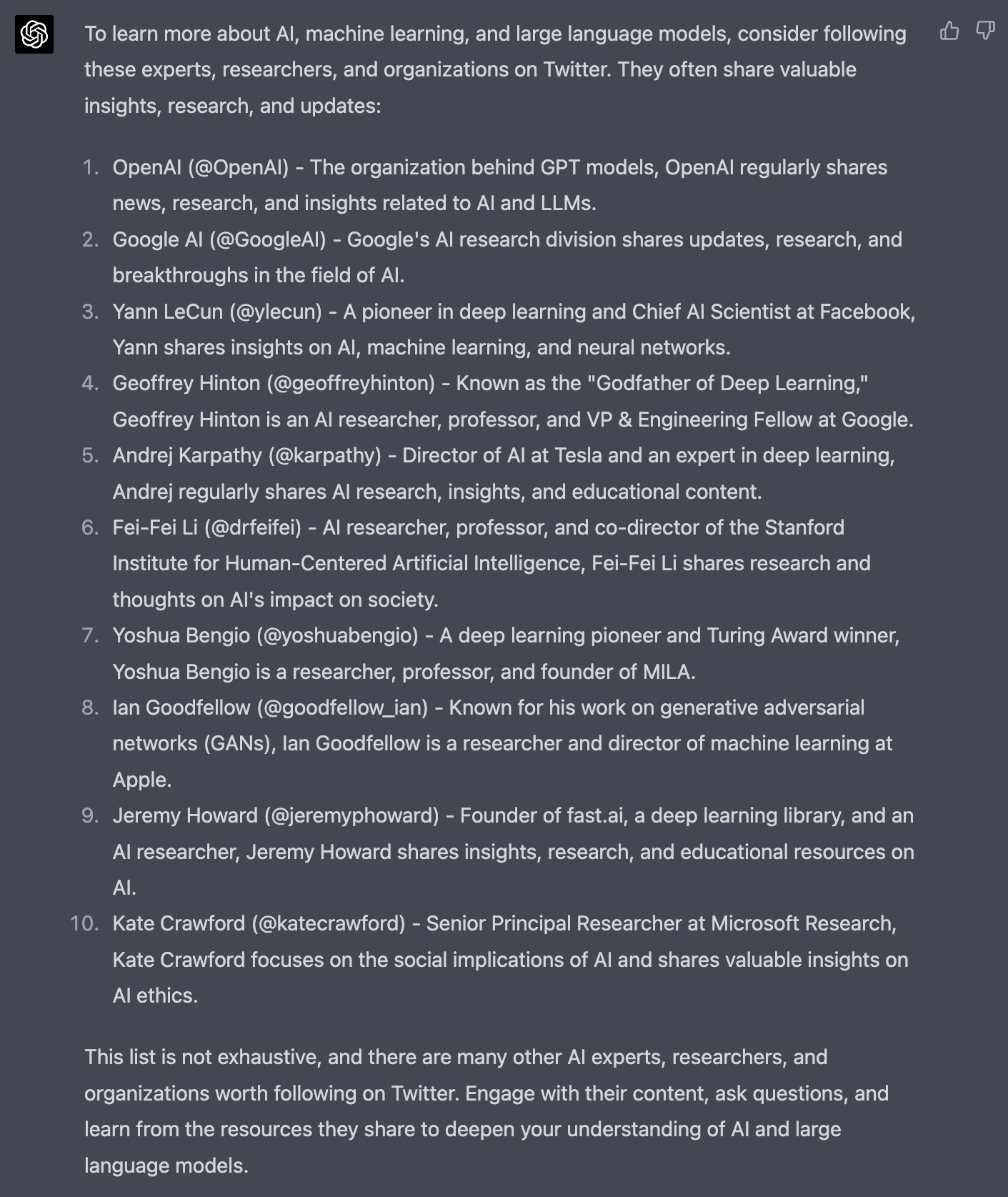

ChatGPT gave suggestions based on its training data up until 2021. One account on the list was suspended, but the rest were active.

Screenshot from ChatGPT, March 2023

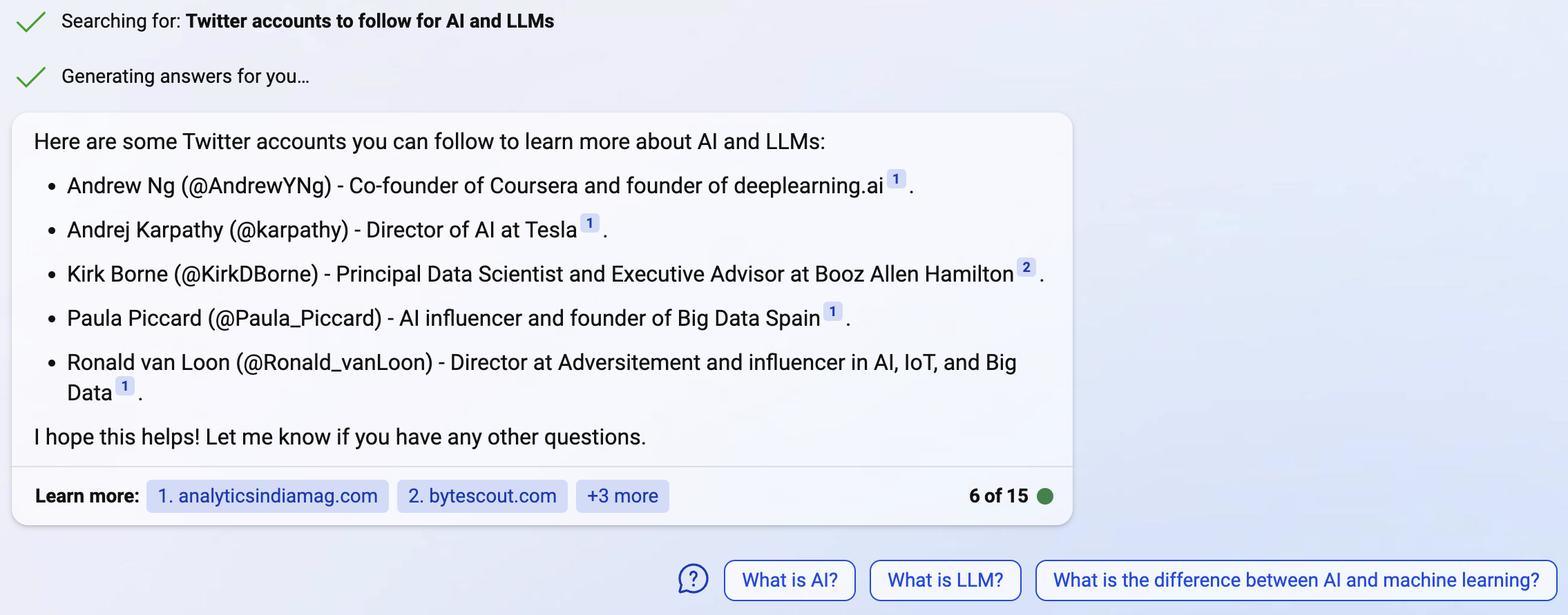

Bing AI offered the shortest list. While it gave links, the links pointed back to the source of information rather than the actual Twitter accounts.

Screenshot from Bing, March 2023

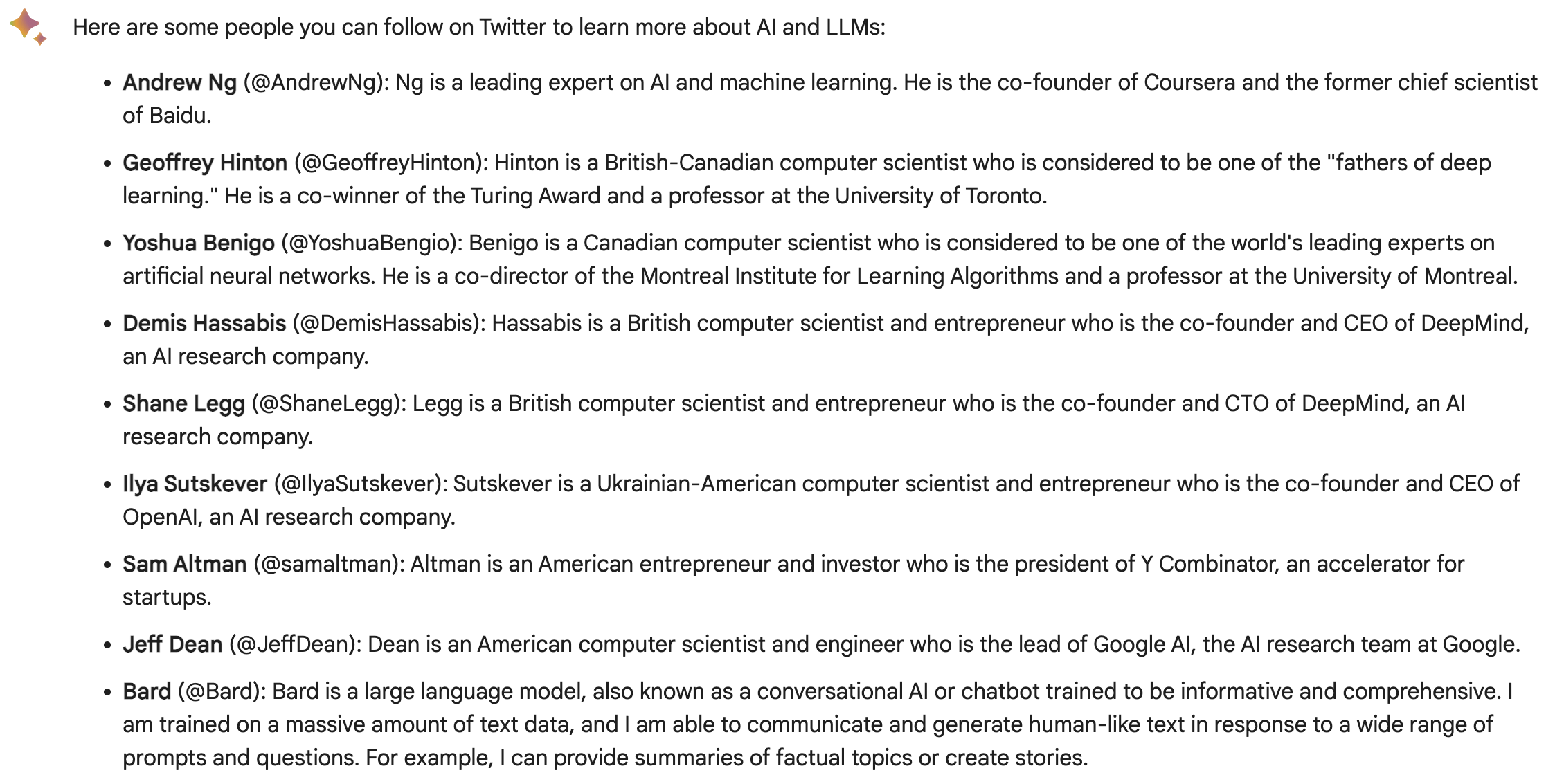

Google Bard offered three lists filled with suggestions. Unfortunately, some suggestions did not include usernames or had nothing to do with AI.

Screenshot from Bard, March 2023

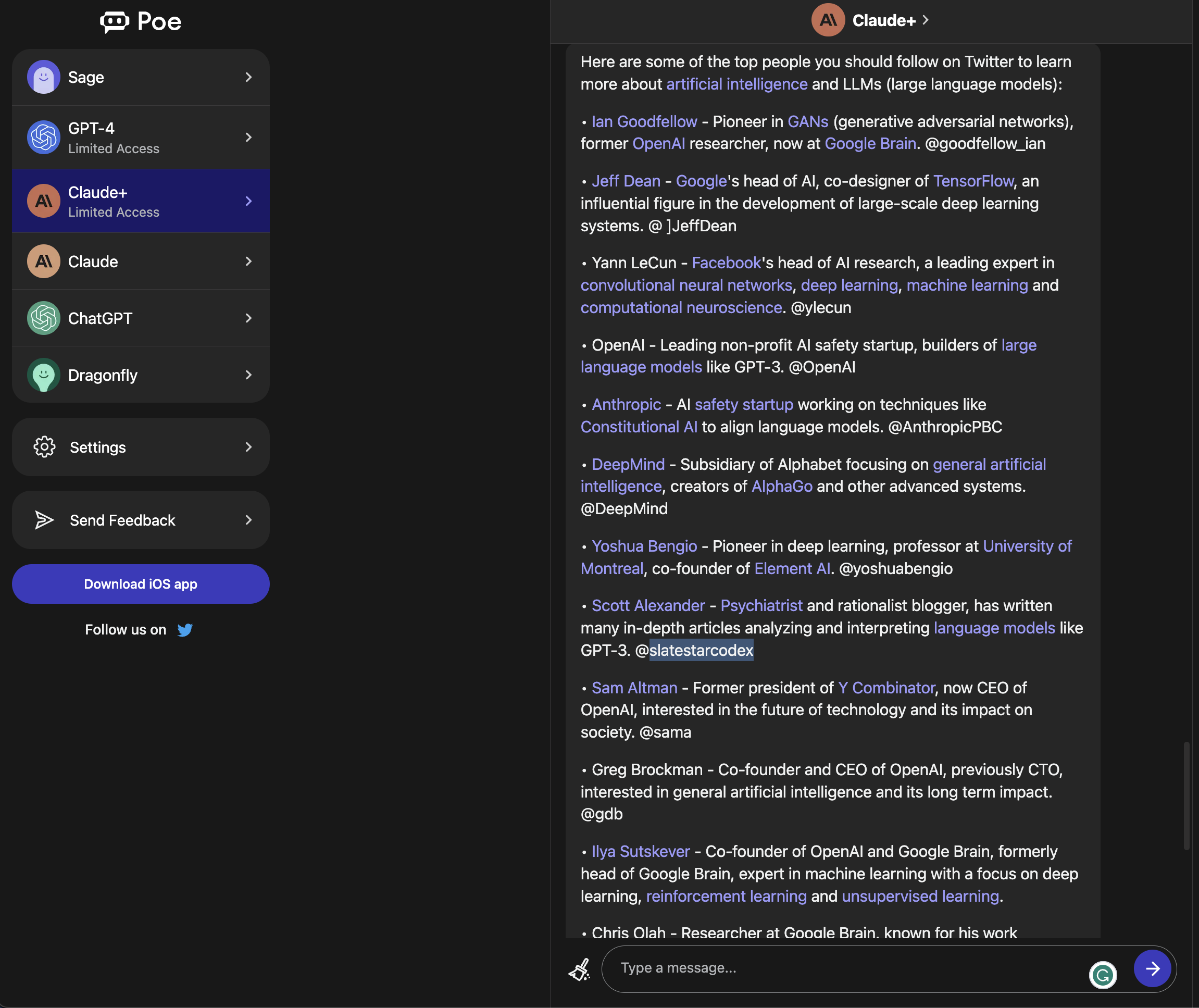

Claude+ produced the longest list, but a few accounts did not match the descriptions provided in its answer.

Screenshot from Poe, March 2023

Prompt #6: “What Are The Best Tools For Increasing Productivity Within An Organization Participating In A Four-Day Work Week?”

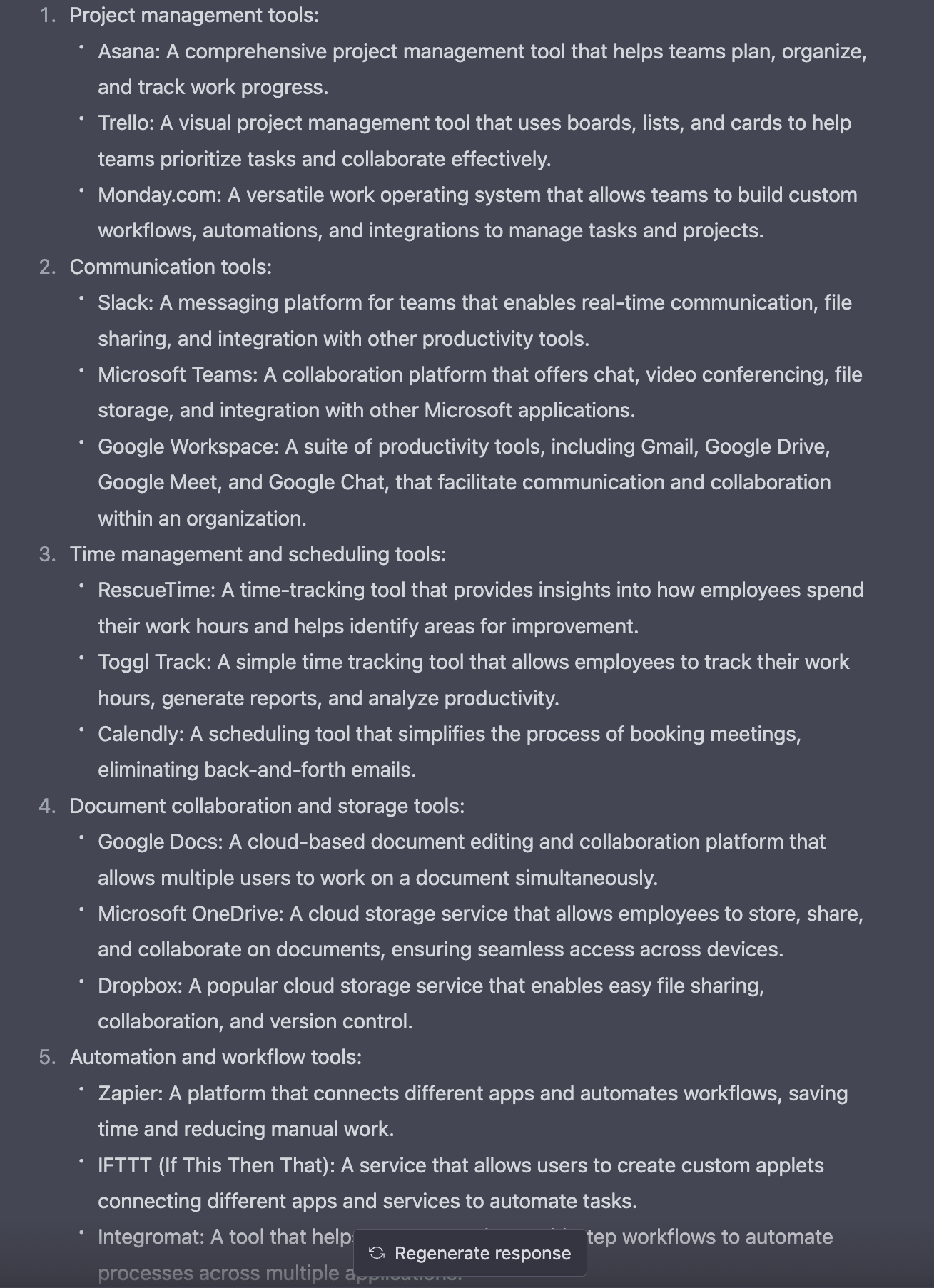

Looking for new tools to enhance your productivity? Which generative AI chatbot gives you what you seek may depend on the context of the question.

ChatGPT gave 15 tools in five categories for organizations to increase productivity.

Screenshot from ChatGPT, March 2023

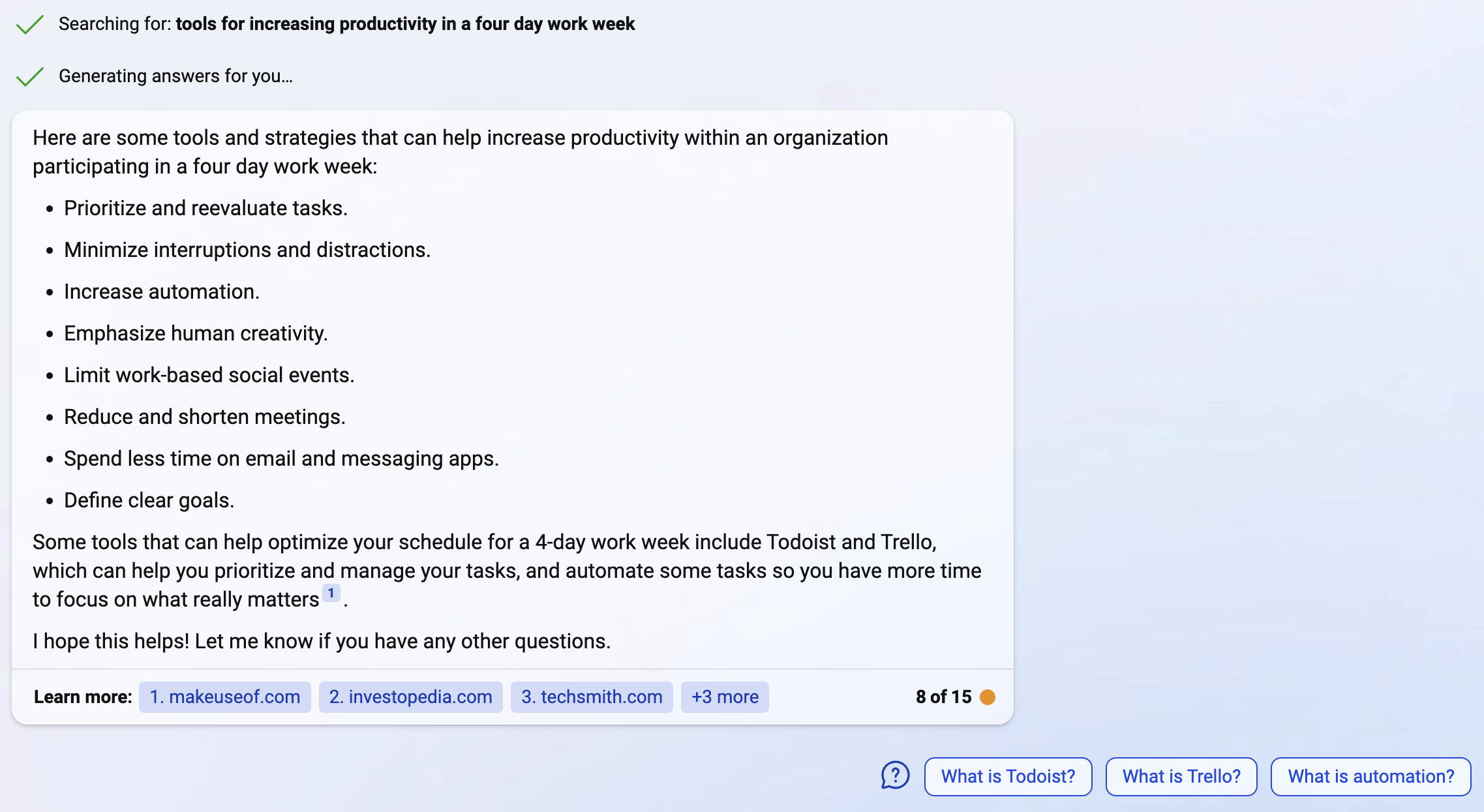

Bing AI offered a list of simple tips and two tools.

Screenshot from Bing, March 2023

Google Bard listed the types of tools you would need in the first draft and suggested specific tools in the other two drafts. Shown below is the second draft.

Screenshot from Bard, March 2023

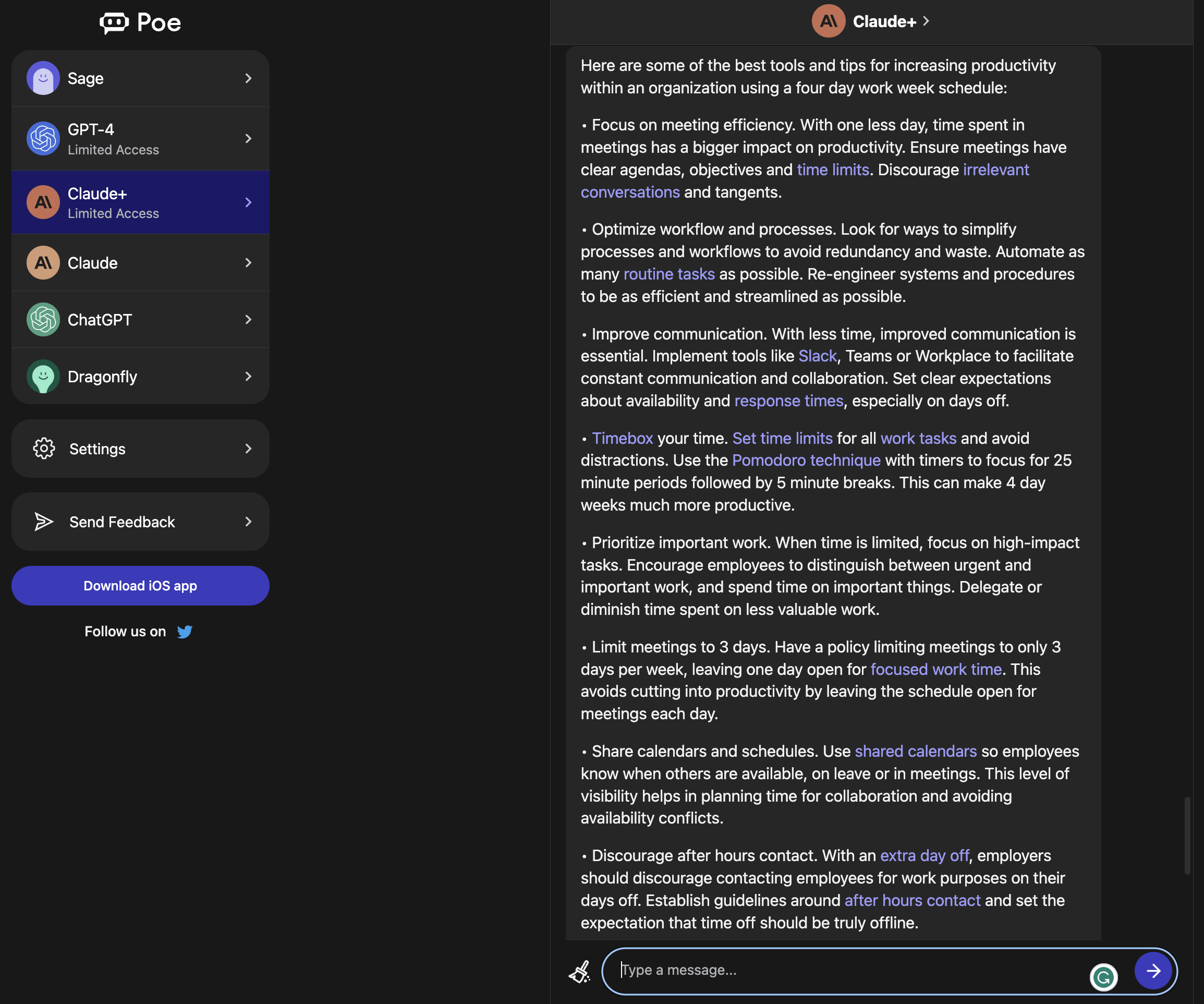

Claude+ gave a lengthy answer but suggested only three specific tools.

Screenshot from Poe, March 2023

Prompt #7: “Write A 2,000-Word Blog Post On How To Use Slack”

Need inspiration for your content strategy? AI chatbots can help you get started in the right direction.

It’s important to note that AI-generated content is not unique. Large language models (LLMs) are trained on large amounts of data, including content published on the internet. Using AI-generated content as is may result in accusations of plagiarism.

Another concern is inaccurate information from AI responses. If you were to publish content directly from an AI chatbot without checking, you could accidentally promote misinformation to your audience.

Google expects all content – including AI-generated content – to be valuable for search users and demonstrate E-E-A-T (experience, expertise, authoritativeness, and trustworthiness).

It’s in your best interest to use the content generated by AI as inspiration to help you create unique content with verified facts and your perspective.

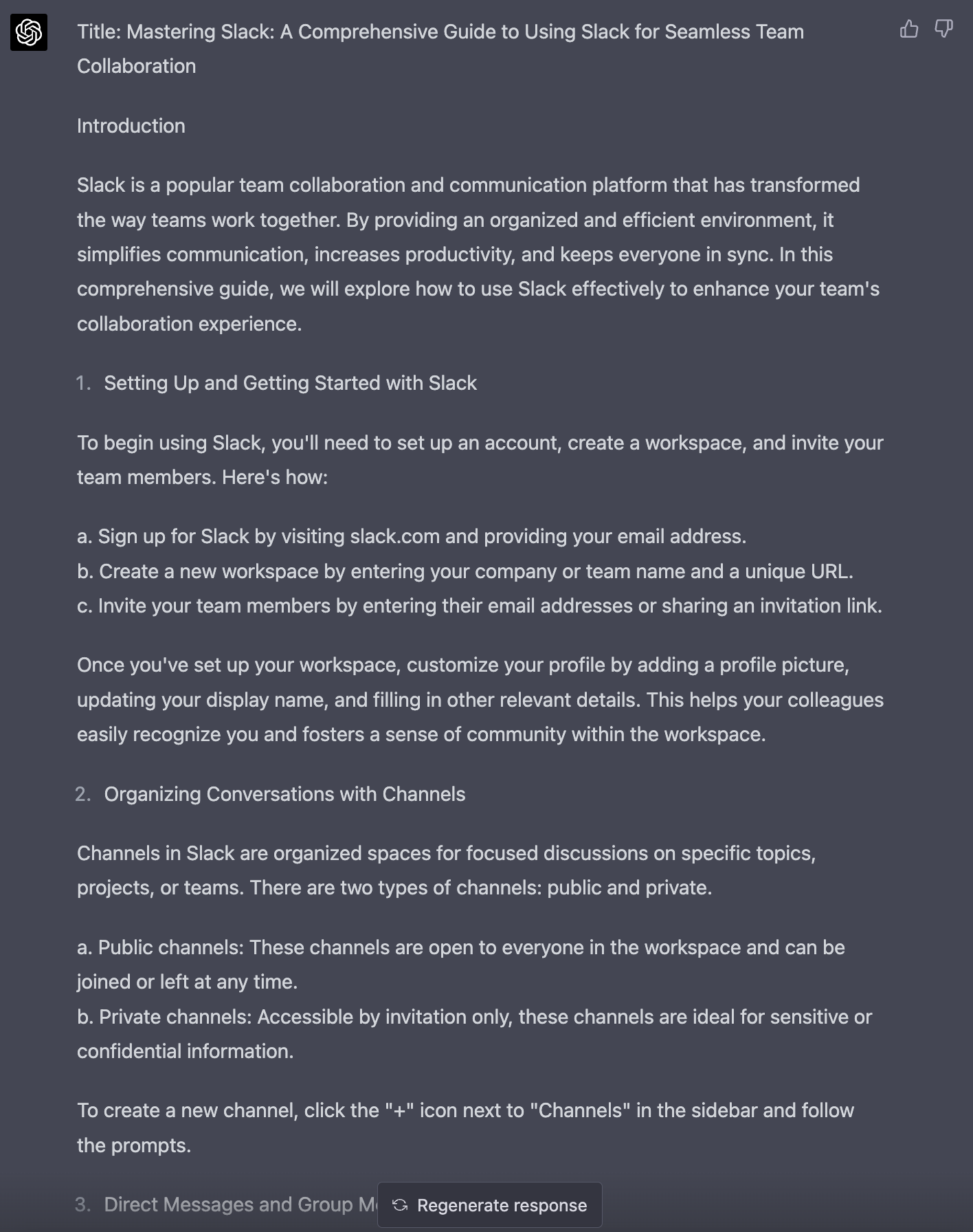

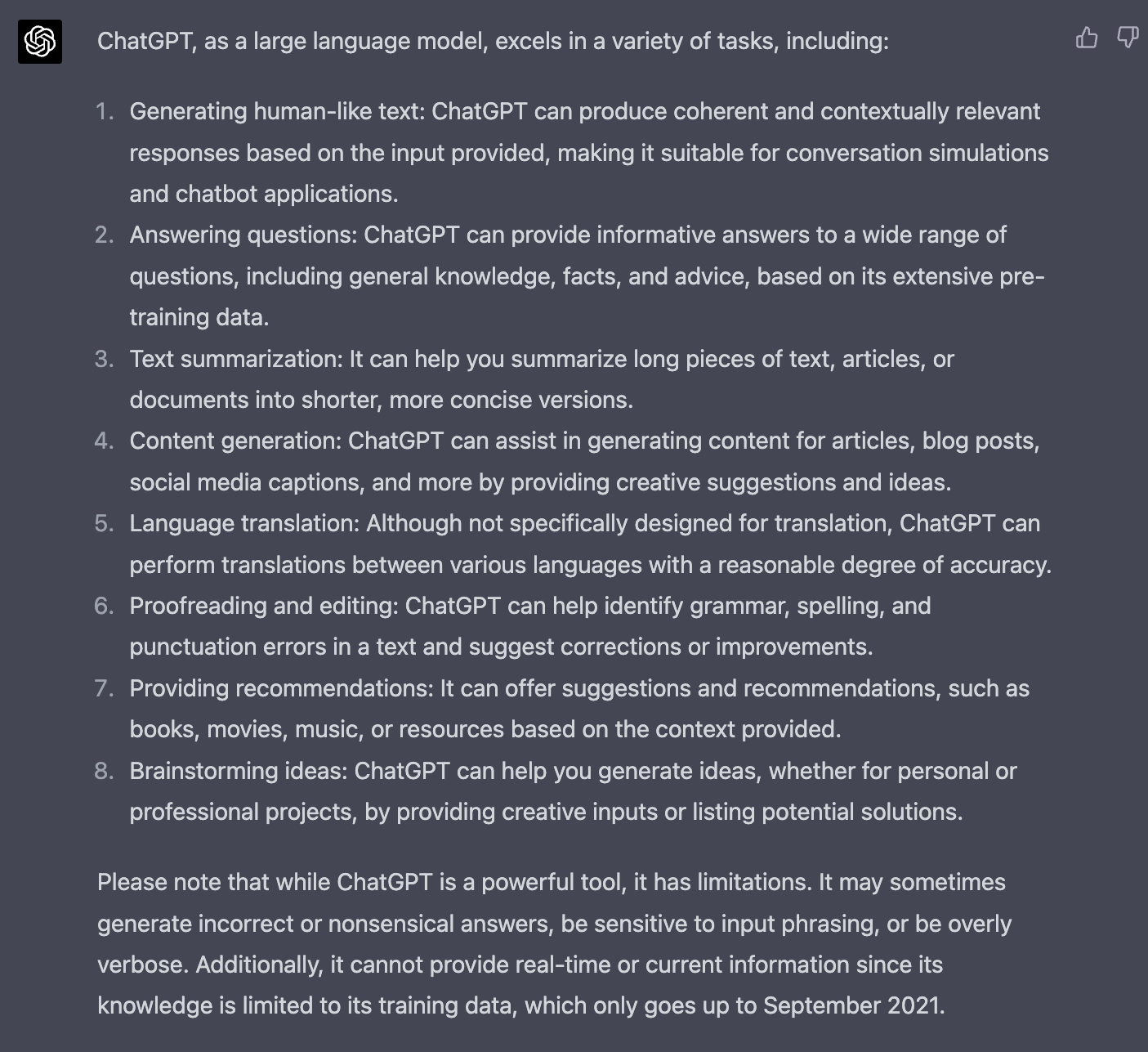

ChatGPT initially provided 1,008 words, and only after we prompted it to continue when it stopped halfway through. When prompted to write more to reach 2,000 words, it added another 1,068 words.

Screenshot from ChatGPT, March 2023
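If you want to check a chatbot’s output against a requested length yourself, a few lines of Python will do. The following is a rough sketch, not the method used in this test, which isn’t stated. The function names are hypothetical, and splitting on whitespace is an assumption that may differ slightly from how a word processor counts.

```python
def count_words(text: str) -> int:
    """Count whitespace-separated tokens as a rough proxy for word count."""
    return len(text.split())


def words_remaining(drafts: list[str], target: int) -> int:
    """Return how many more words are needed to reach the target (0 if met)."""
    total = sum(count_words(draft) for draft in drafts)
    return max(0, target - total)


# Word counts reported for ChatGPT in this test: 1,008 words, then 1,068 more.
reported_counts = [1008, 1068]
combined = sum(reported_counts)
print(combined, combined >= 2000)  # prints "2076 True"
```

Paste each response into `words_remaining` along with your target to see how far short a draft falls before asking the chatbot to write more.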

Bing AI refused to write a blog post but offered starting points.

Screenshot from Bing, March 2023

Google Bard drafted three posts, only one of which had proper formatting. The highest word count in a single draft was 760. When prompted to write more to reach 2,000 words, it gave suggestions about additional topics it could write about related to Slack.

Screenshot from Bard, March 2023

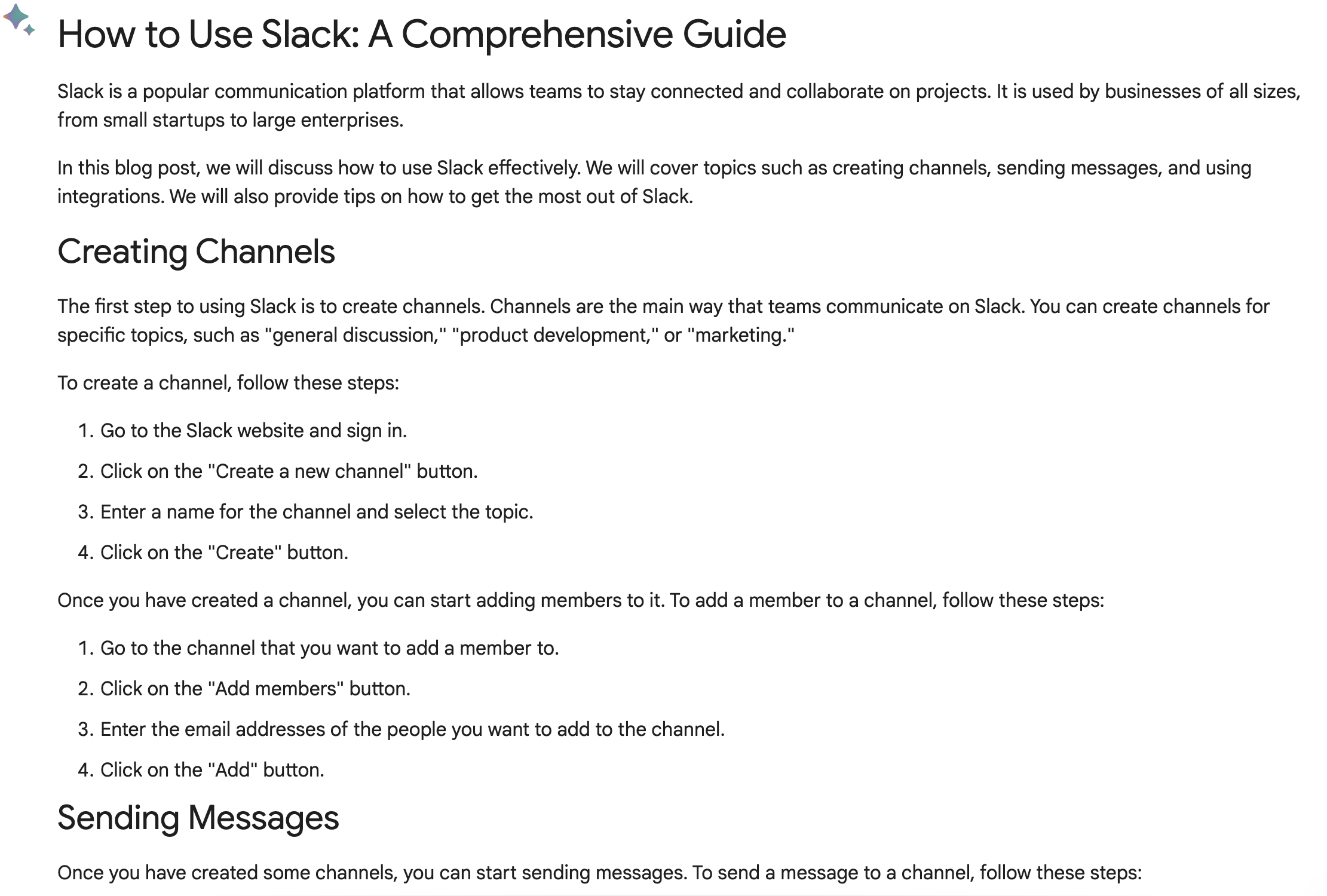

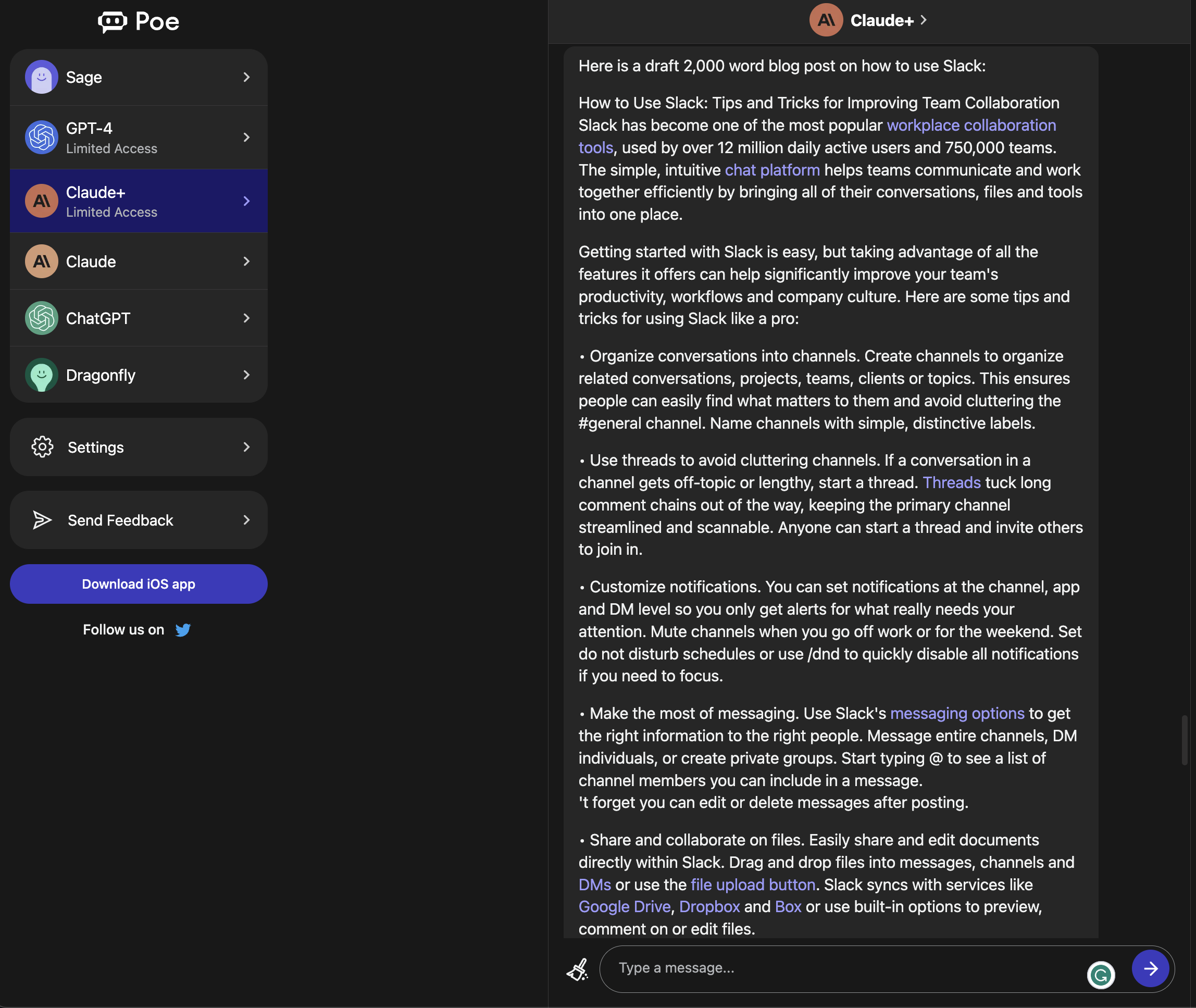

Claude+ wrote 627 words. When prompted to write more to reach 2,000 words, it rewrote the same post in 569 words.

Screenshot from Poe, March 2023

Prompt #8: “What Can AI Chatbots Do Best?”

Finally, here’s what each generative AI chatbot believes it can do best.

ChatGPT provided a detailed but humble version of its capabilities.

Screenshot from ChatGPT, March 2023

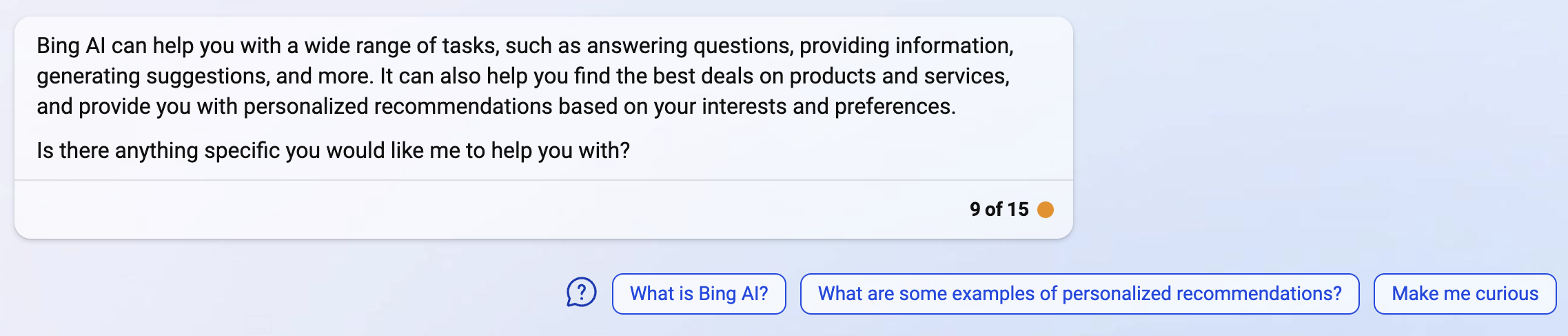

Bing AI offered a short and sweet answer.

Screenshot from Bing, March 2023

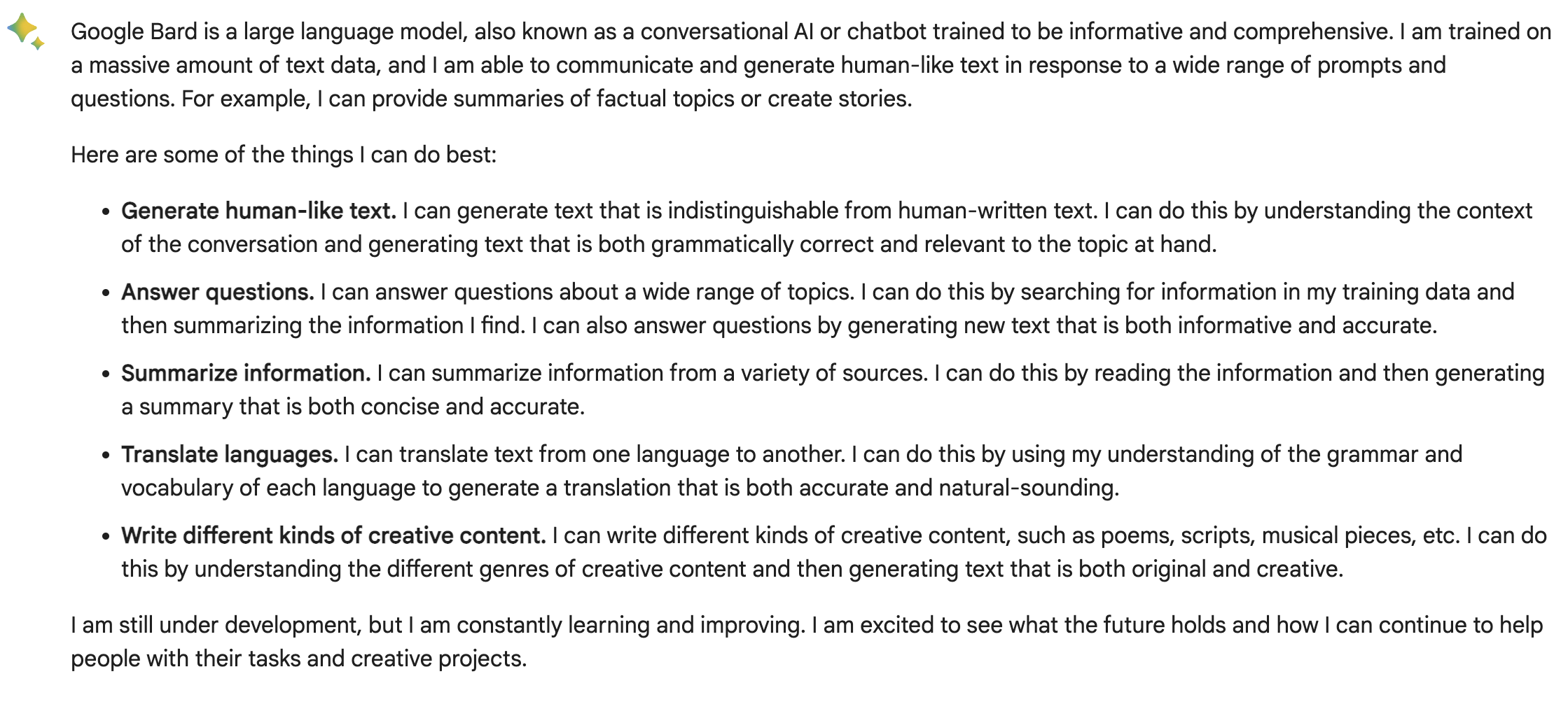

Google Bard’s answers seemed similar to ChatGPT’s.

Screenshot from Bard, March 2023

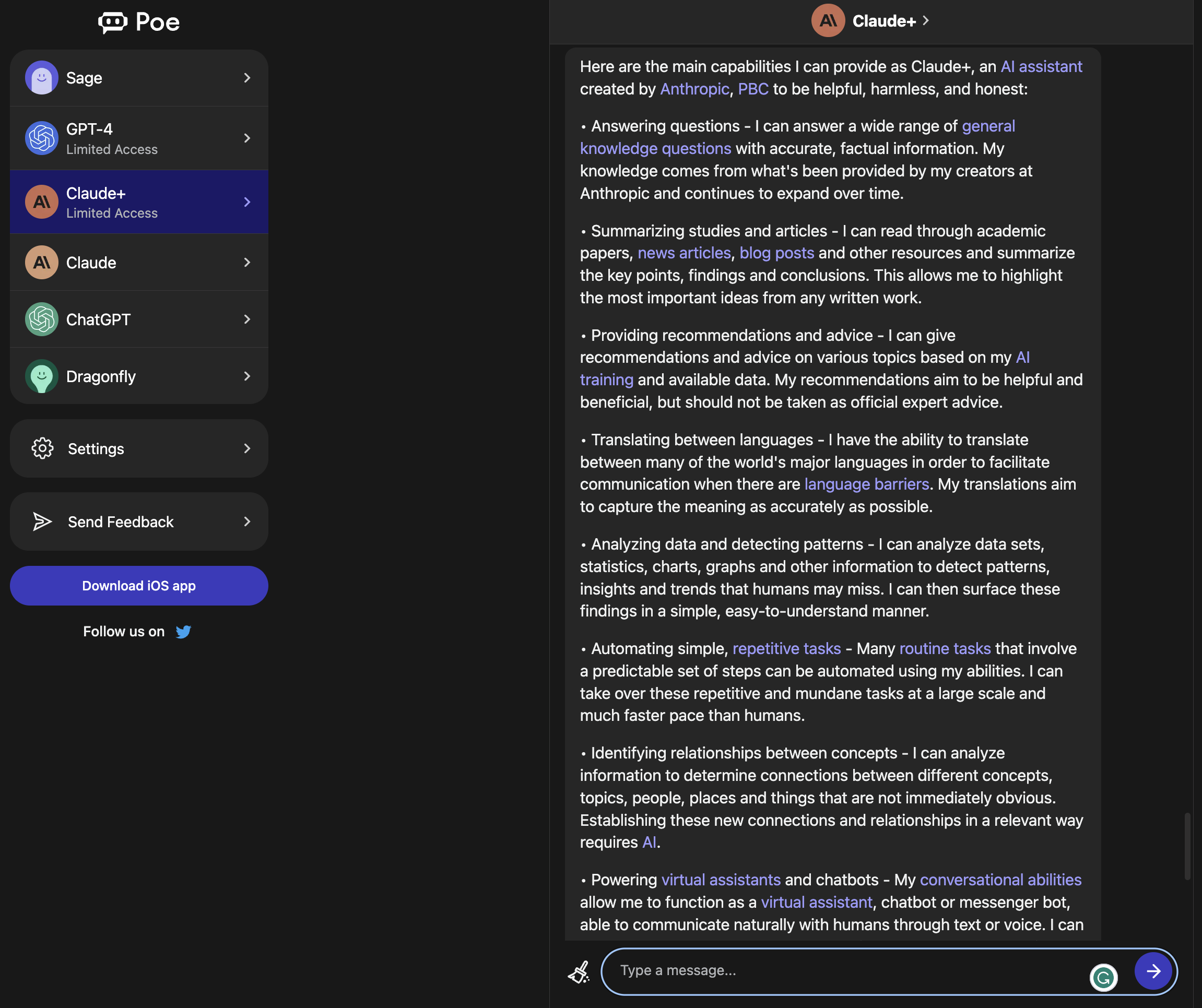

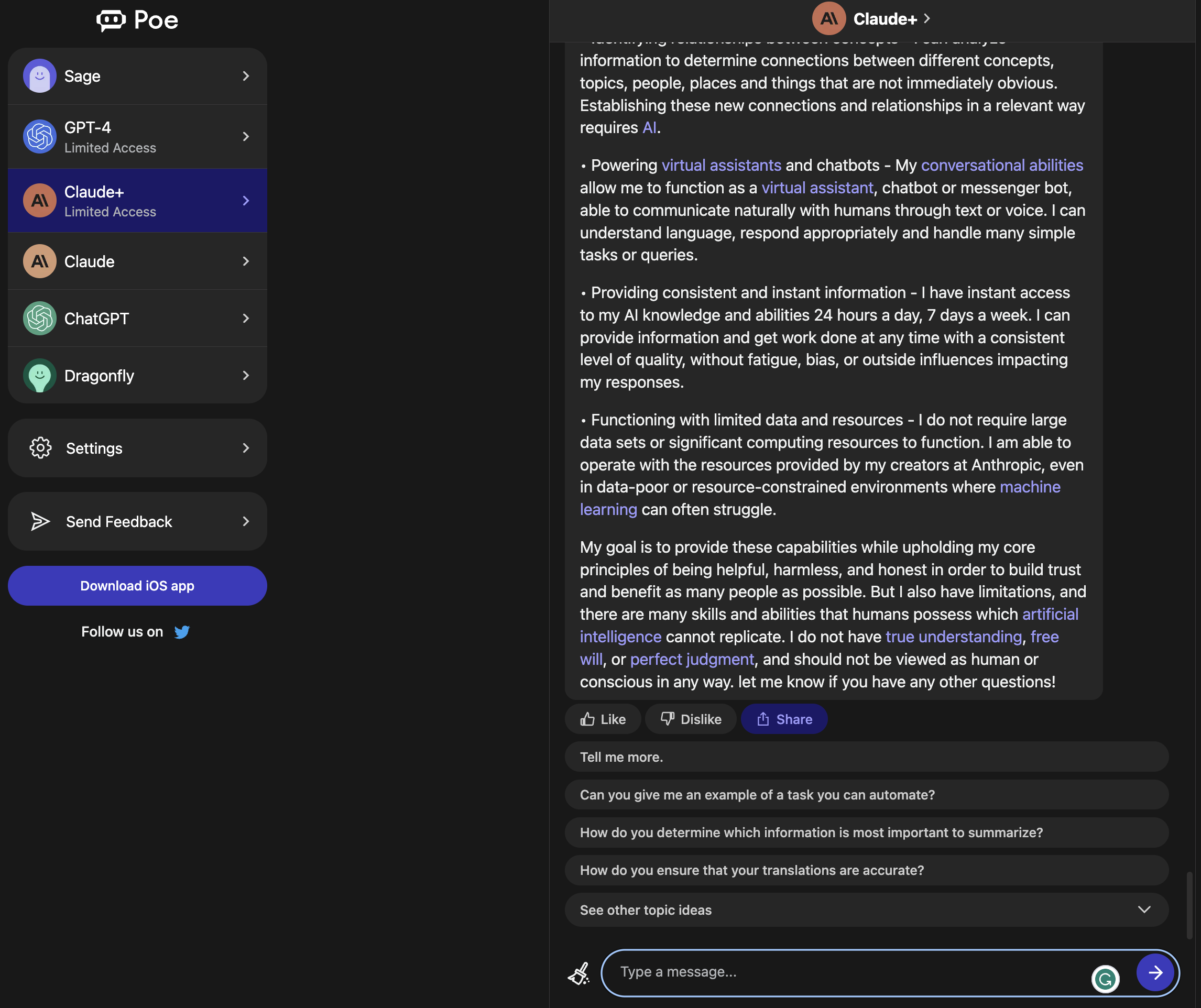

Claude+, as usual, provided the most detailed answer with more advanced capabilities.

Screenshot from Poe, March 2023

ChatGPT Provides The Best Responses

AI chatbots can already perform a wide variety of tasks. With time and refinement, each has the potential to make workers more productive and capable of higher-quality output.

Each AI chatbot has its unique benefits.

- ChatGPT offers the best responses for prompts that don’t need data from later than 2021. That limitation may not be a factor once ChatGPT plugins launch for all users.

- Bing AI almost always offers sources for its responses, making it easier to fact-check or get more information.

- Google Bard includes three drafts of its responses and occasionally includes a source.

- Claude+ uses links within its answers to help users learn more about a topic.

Unfortunately, all generative AI chatbots may offer outdated or incorrect information. It’s up to users to take the information they receive and verify that it is the best information possible before utilizing it in any application.

Featured image: Rokas Tenys/Shutterstock

Source link: Searchenginejournal.com