Can AI perform technical SEO analysis effectively?

Generative AI has made significant strides in various fields, including SEO.

This article explores a crucial question: Can AI effectively perform technical SEO analysis using raw crawl data?

We’ll examine the capabilities of ChatGPT in interpreting Screaming Frog crawl data and generating SEO recommendations.

Leveraging generative AI for technical SEO

My Search Engine Land articles often examine in-depth technical applications where SEO and AI can collaborate, resulting in increased efficiencies. For example, I have used AI to generate alt text from image files or product descriptions from PIM data.

However, many marketers are less technical and may struggle to blend OpenAI’s API, Python and spreadsheets. Some marketers would rather hand AI the tech data and receive clear, human-readable insights.

As such, I thought I would produce an article examining how effectively AI can interpret technical SEO data. Even if you’re not communicating with AI via programmatic API access, many AI assistants (ChatGPT, Google Gemini) offer user-friendly chat interfaces. We will explore ChatGPT in this article.

Lately, the capabilities of these AI-powered chat interfaces have been growing. For example, OpenAI’s ChatGPT has recently moved from GPT-4 to GPT-4o. The new GPT-4o model comes with many feature changes:

- GPT-4o is much faster than GPT-4. It offers accuracy similar to GPT-4 while responding at speeds closer to GPT-3.5-Turbo.

- GPT-4o is less reliant on plugins or custom GPTs to access web content.

- GPT-4o will seek web content far more frequently to generate its results, making the produced material “fresher.”

- However, the web is poorly curated and full of misinformation. Many feel that GPT-4’s refined data model, which relied on fewer web requests, actually produced better results than GPT-4o.

- There’s no arguing that GPT-4o is at least comparable to GPT-4. It is much faster and far more interactive. It can work with more types of attached data, produce more types of media and is web-capable.

With GPT-4o now more capable of searching web content, it could be more helpful for marketers, even those who lack technical skills. However, there are concerns about its reliance on web content, as past experiences with web-connected tools like Google’s Bard and Gemini produced inferior results.

To test GPT-4o, we’ll give it specific technical SEO crawl data instead of generic analysis tasks. This will help us see how much ChatGPT has improved and if it can be useful for technical SEO analysis.

Analyzing Screaming Frog crawl data for insights via ChatGPT

The “internal all” export from Screaming Frog is the bread-and-butter foundation of most technical SEO insights.

That single export may be pivoted in several ways to comment on metadata issues, canonical tag conflicts, hreflang issues, etc.
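To make "pivoting" concrete, here is a minimal sketch of the kind of grouping an analyst (human or AI) would do with that export. It assumes the column names `Address`, `Content Type`, `Status Code`, `Title 1` and `Meta Description 1` as they commonly appear in a Screaming Frog "internal all" CSV; check them against your own file. The sample rows are invented for illustration.

```python
import csv
import io
from collections import defaultdict

# Invented sample rows. Column names are assumed to match a typical
# Screaming Frog "internal all" export; verify against your own file.
SAMPLE = """Address,Content Type,Status Code,Title 1,Meta Description 1
https://example.com/,text/html; charset=utf-8,200,Home,Welcome
https://example.com/a,text/html; charset=utf-8,200,Dog food,
https://example.com/b,text/html; charset=utf-8,200,Dog food,Treats
https://example.com/c,text/html; charset=utf-8,200,,About us
https://example.com/logo.png,image/png,200,,
"""


def metadata_issues(rows):
    """Bucket HTML pages that returned 200 into simple metadata issue lists."""
    issues = defaultdict(list)
    urls_by_title = defaultdict(list)
    for row in rows:
        # Only HTML pages that loaded successfully carry titles worth checking.
        if not row["Content Type"].startswith("text/html") or row["Status Code"] != "200":
            continue
        url = row["Address"]
        title = row["Title 1"].strip()
        if title:
            urls_by_title[title].append(url)
        else:
            issues["missing title"].append(url)
        if not row["Meta Description 1"].strip():
            issues["missing meta description"].append(url)
    # A title shared by more than one URL is a duplicate-title candidate.
    for urls in urls_by_title.values():
        if len(urls) > 1:
            issues["duplicate title"].extend(urls)
    return dict(issues)


rows = list(csv.DictReader(io.StringIO(SAMPLE)))
for issue, urls in metadata_issues(rows).items():
    print(f"{issue}: {len(urls)} {urls}")
```

The same pattern extends to other column pairs in the export, such as canonicals or hreflang.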

Will AI pick up on such issues? Will AI hallucinate or misdiagnose issues where none are present? Let’s find out.

We will use the Butcher’s Food company website as a testbed. They are a UK dog food supplier, and their site is about the right size for our proposed activities.

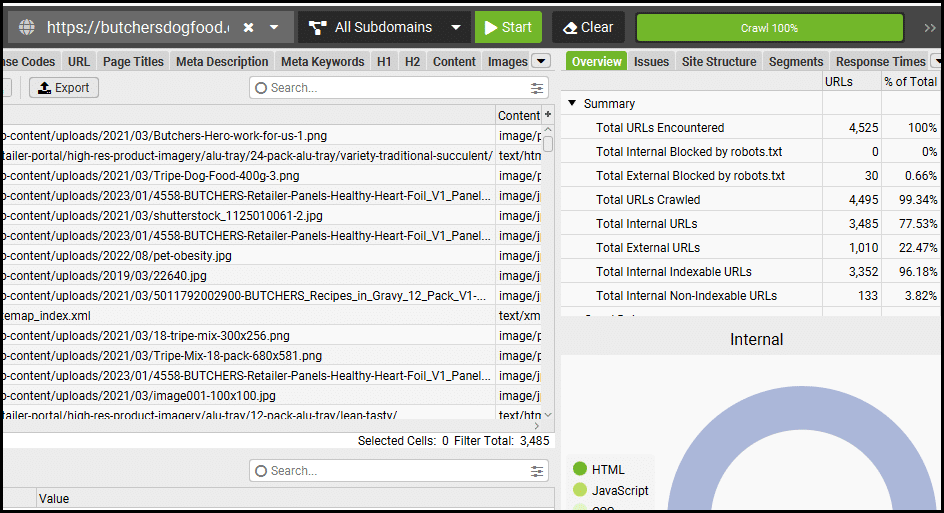

First, we will crawl Butchersdogfood.co.uk:

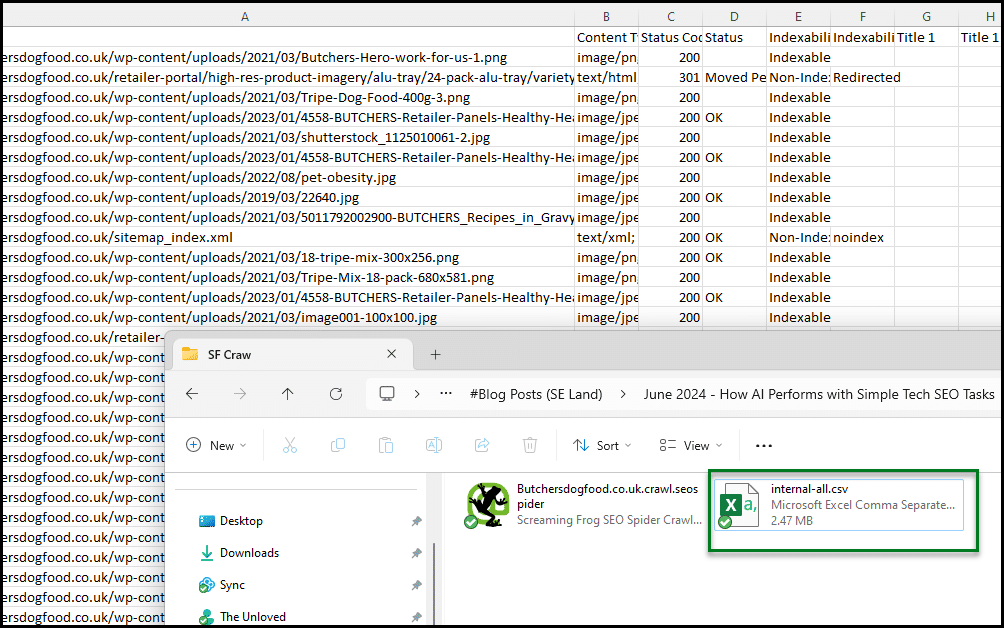

Over 3,000 internal addresses were discovered. Now, we can export the list of internal addresses to a CSV file:

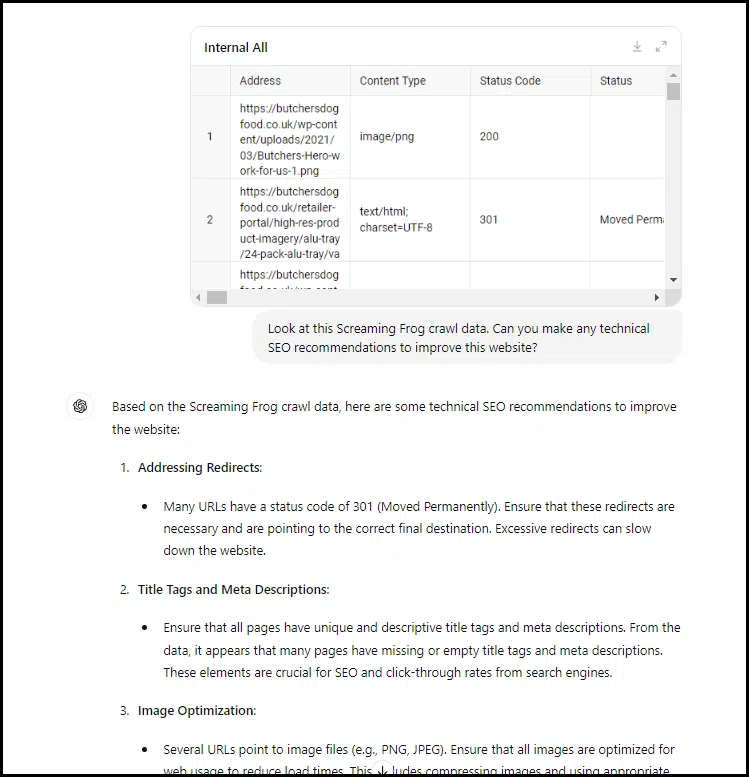
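With more than 3,000 rows, it can help to get a rough picture of the export before handing it to a chatbot, so you know what a good answer ought to mention. A minimal sketch, assuming `Content Type` and `Status Code` columns and invented sample rows:

```python
import csv
import io
from collections import Counter

# Invented sample rows; column names assumed from a typical "internal all" export.
SAMPLE = """Address,Content Type,Status Code
https://example.com/,text/html; charset=utf-8,200
https://example.com/old,text/html; charset=utf-8,301
https://example.com/logo.png,image/png,200
https://example.com/hero.jpg,image/jpeg,301
https://example.com/missing,text/html; charset=utf-8,404
"""


def crawl_overview(rows):
    """Count crawled addresses by (base content type, status code)."""
    counts = Counter()
    for row in rows:
        # Drop parameters such as "; charset=utf-8" so all HTML rows group together.
        base_type = row["Content Type"].split(";")[0].strip() or "(none)"
        counts[(base_type, row["Status Code"])] += 1
    return counts


overview = crawl_overview(csv.DictReader(io.StringIO(SAMPLE)))
for (content_type, status), count in overview.most_common():
    print(f"{status} {content_type}: {count}")
```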

Now, we can feed the CSV file to GPT-4o and ask for some recommendations. Most people don’t write prompts as complex as I do. They write shorter prompts and then refine them. As such, I will attempt to follow that behavior.

The response contained many recommendations, but most were disappointingly generic. For example, recommendations like this:

- “Several URLs point to image files (e.g., PNG, JPEG). Ensure that all images are optimized for web usage to reduce load times. This includes compressing images and using appropriate formats.”

This recommendation is disappointing because ChatGPT actually did have access to response-time and file-size data for each image address. So, the response could have been more specific.
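A more specific answer would have named the heavy files. Because the export records a size and response time per address, that list is easy to produce. A minimal sketch, assuming `Size (bytes)` and `Response Time` (seconds) columns, invented rows and a hypothetical 100 KB threshold:

```python
import csv
import io

# Invented sample rows; "Size (bytes)" and "Response Time" (seconds) are
# assumed column names from a typical "internal all" export.
SAMPLE = """Address,Content Type,Status Code,Size (bytes),Response Time
https://example.com/hero.jpg,image/jpeg,200,482000,0.91
https://example.com/logo.png,image/png,200,12000,0.08
https://example.com/banner.png,image/png,200,260000,0.44
https://example.com/,text/html; charset=utf-8,200,54000,0.30
"""

MAX_IMAGE_BYTES = 100_000  # hypothetical threshold; tune it for your site


def oversized_images(rows, max_bytes=MAX_IMAGE_BYTES):
    """Return (url, size_kb, response_seconds) for images over max_bytes, largest first."""
    flagged = []
    for row in rows:
        if not row["Content Type"].startswith("image/"):
            continue
        size = int(row["Size (bytes)"] or 0)
        if size > max_bytes:
            response = float(row["Response Time"] or 0)
            flagged.append((row["Address"], round(size / 1024), response))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


for url, size_kb, seconds in oversized_images(csv.DictReader(io.StringIO(SAMPLE))):
    print(f"{url}: {size_kb} KB, {seconds:.2f}s")
```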

Here’s another example of very generic guidance:

- “Ensure that canonical tags are correctly implemented to avoid duplicate content issues. Each page should have a self-referencing canonical tag unless it’s a deliberate duplicate.”

Since ChatGPT has access to the datasheet, it should be able to work out (roughly) which URLs are the main addresses and which are not (for example, those with parameters in the URL string).

Thus, ChatGPT should be able to isolate canonical tag problems rather than give general (and useless) guidance.
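As a rough illustration of that reasoning, the sketch below treats the query-string-free version of each HTML address as its "main" URL and flags canonicals that don’t fit. The `Canonical Link Element 1` column name and the sample rows are assumptions, and the rule is deliberately crude: some parameters (pagination, for instance) can legitimately self-canonicalize, so treat the output as a list to review rather than a list of errors.

```python
import csv
import io
from urllib.parse import urlsplit, urlunsplit

# Invented sample rows; column names assumed from a typical "internal all" export.
SAMPLE = """Address,Content Type,Status Code,Canonical Link Element 1
https://example.com/dog-food,text/html; charset=utf-8,200,https://example.com/dog-food
https://example.com/dog-food?sort=price,text/html; charset=utf-8,200,https://example.com/dog-food?sort=price
https://example.com/treats,text/html; charset=utf-8,200,
https://example.com/treats?utm_source=news,text/html; charset=utf-8,200,https://example.com/treats
"""


def main_address(url):
    """Approximate the 'main' URL by dropping the query string and fragment."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def canonical_problems(rows):
    """Return (url, note) pairs for canonicals that don't match the heuristic."""
    problems = []
    for row in rows:
        if not row["Content Type"].startswith("text/html") or row["Status Code"] != "200":
            continue
        url = row["Address"]
        canonical = row["Canonical Link Element 1"].strip()
        main = main_address(url)
        if url != main:
            # Parameterized variant: expected to point at the clean URL.
            if canonical != main:
                problems.append((url, f"parameter URL not canonicalized to {main}"))
        elif not canonical:
            problems.append((url, "missing self-referencing canonical"))
        elif canonical != url:
            problems.append((url, f"canonicalized elsewhere: {canonical}"))
    return problems


for url, note in canonical_problems(csv.DictReader(io.StringIO(SAMPLE))):
    print(f"{url} -> {note}")
```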

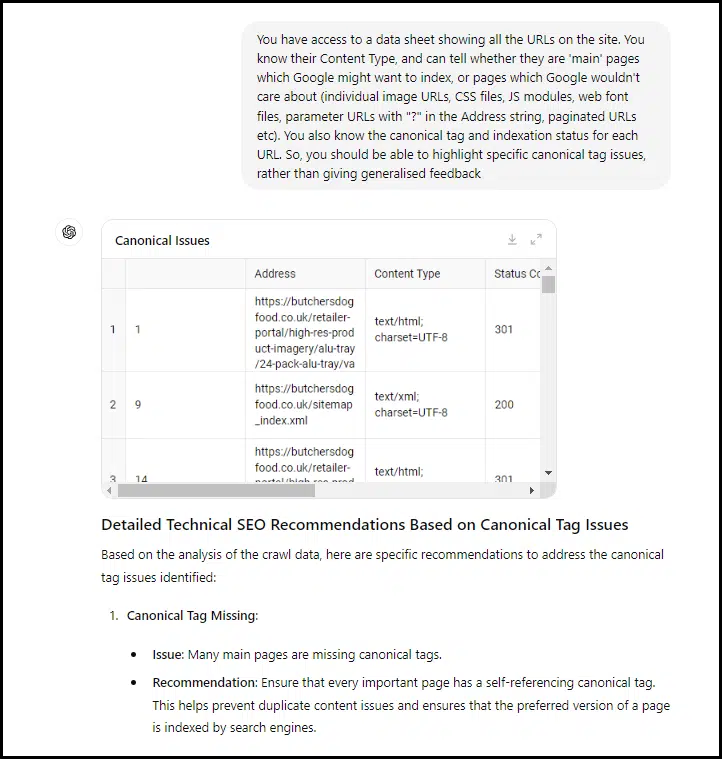

Let’s push it a bit harder:

The guidance is still relatively vague, even when we iterate and create a more detailed prompt. Some addresses, such as images, will always lack canonical tags because they are not HTML documents.

The response should have been more specific and detailed, explaining why certain addresses needed canonical tags but didn’t have them.
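The point about image addresses is easy to demonstrate. A naive count of blank canonical fields mixes HTML pages, which should carry a canonical, with images and other files that have no HTML `<head>` to hold one. A minimal sketch with invented rows, assuming `Content Type` and `Canonical Link Element 1` columns:

```python
import csv
import io

# Invented sample rows; column names assumed from a typical "internal all" export.
SAMPLE = """Address,Content Type,Canonical Link Element 1
https://example.com/treats,text/html; charset=utf-8,
https://example.com/dog-food,text/html; charset=utf-8,https://example.com/dog-food
https://example.com/hero.jpg,image/jpeg,
https://example.com/logo.png,image/png,
https://example.com/site.css,text/css,
"""


def split_missing_canonicals(rows):
    """Separate blank canonicals on HTML pages from blanks on non-HTML files."""
    html_missing, non_html_blank = [], []
    for row in rows:
        if row["Canonical Link Element 1"].strip():
            continue
        if row["Content Type"].startswith("text/html"):
            # An HTML page without a canonical element may genuinely need one.
            html_missing.append(row["Address"])
        else:
            # Images, stylesheets and similar files have no <head>, so a blank is expected.
            non_html_blank.append(row["Address"])
    return html_missing, non_html_blank


html_missing, non_html_blank = split_missing_canonicals(csv.DictReader(io.StringIO(SAMPLE)))
print(f"naive blank count: {len(html_missing) + len(non_html_blank)}")
print(f"HTML pages worth reviewing: {html_missing}")
```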

ChatGPT’s response to that more detailed prompt also included some more specific feedback:

Wow, these recommendations are a total mess.

In a way, they are impressive despite being totally wrong. I might expect an inexperienced junior SEO person who’s been thrown into the technical SEO deep end to make similar recommendations.

The advice would be wrong, but there are indeed signs of intelligence. You must remember that it still takes some degree of intelligence to go off on tangents and produce relevant yet inaccurate analysis. The work is unusable, but there are signs of a genuine attempt.

As humans, we’re prone to holding AI to unfair standards. If AI produces something that is wrong, we say that AI has failed to achieve human understanding. However, many of us (real humans) also fall short, and this happens often.

For me, the question is: Has AI failed in a way that demonstrates some human-like attempt at completing the task?

I think the signs are positive for the future of such applications through accessible, chat-based AI. For now, though, it would be disastrous to act on the recommendations given.

Identifying a redirecting URL as potentially important (even though it is a redirecting address) and then assuming that work may or may not need to occur on the final destination URL is some of the most woolly thinking I have personally encountered.
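Rather than guessing whether work "may or may not" be needed downstream, a redirecting address can simply be followed to its final destination, and that URL audited instead. A minimal sketch, assuming `Status Code` and `Redirect URL` columns and invented rows; chains that loop or exceed a hypothetical hop limit are marked for manual review:

```python
import csv
import io

# Invented sample rows; column names assumed from a typical "internal all" export.
SAMPLE = """Address,Status Code,Redirect URL
https://example.com/old-page,301,https://example.com/interim
https://example.com/interim,301,https://example.com/new-page
https://example.com/new-page,200,
https://example.com/loop-a,302,https://example.com/loop-b
https://example.com/loop-b,302,https://example.com/loop-a
"""


def resolve_redirects(rows, max_hops=10):
    """Map each redirecting address to (final_url, hops, final_status)."""
    rows = list(rows)
    status = {r["Address"]: r["Status Code"] for r in rows}
    redirects = {
        r["Address"]: r["Redirect URL"]
        for r in rows
        if r["Status Code"].startswith("3") and r["Redirect URL"]
    }
    resolved = {}
    for start in redirects:
        url, hops, seen = start, 0, {start}
        while url in redirects:
            url = redirects[url]
            hops += 1
            if url in seen or hops > max_hops:
                url = None  # loop or overly long chain: review by hand
                break
            seen.add(url)
        final_status = status.get(url, "not crawled") if url else "loop or long chain"
        resolved[start] = (url, hops, final_status)
    return resolved


for source, (final, hops, final_status) in resolve_redirects(csv.DictReader(io.StringIO(SAMPLE))).items():
    print(f"{source} -> {final} ({hops} hops, final status {final_status})")
```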

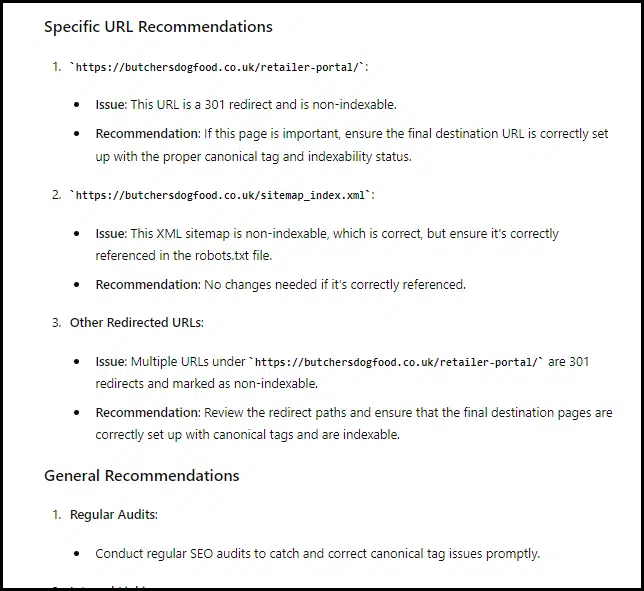

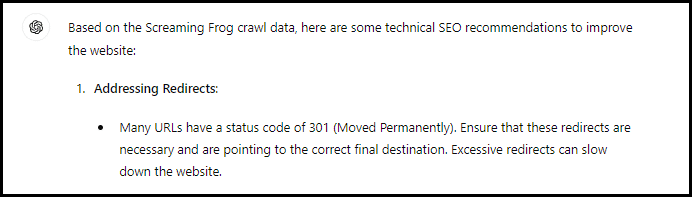

The only interesting pointer that ChatGPT was able to come up with was that a number of addresses on the site resulted in 301 redirects, and there may be some defective architecture:

The above response was given after our very first message before we began pushing AI to think in more specific terms. This was actually the first recommendation that ChatGPT gave, and in some ways it is interesting:

There are around 100 redirecting addresses. Many of these are redirected image addresses. Some seem like they were once true pages or perhaps are trailing slash redirects. Others revolve around pagination.
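Sorting those redirects into the buckets described above is a quick job once the source and target URLs are known. A minimal sketch with invented URL pairs; the image extensions and pagination patterns are assumptions about how such URLs typically look, not rules taken from the site:

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Assumed patterns; adjust to match the site's real URL structure.
IMAGE_EXTENSIONS = (".png", ".jpg", ".jpeg", ".gif", ".webp", ".svg")
PAGINATION = re.compile(r"/page/\d+|[?&]page=\d+", re.IGNORECASE)


def classify_redirect(source, target):
    """Put a (source, target) redirect pair into a rough bucket."""
    if urlsplit(source).path.lower().endswith(IMAGE_EXTENSIONS):
        return "image"
    if source != target and source.rstrip("/") == target.rstrip("/"):
        return "trailing slash"
    if PAGINATION.search(source):
        return "pagination"
    return "other (possibly a retired page)"


# Invented redirect pairs for illustration.
PAIRS = [
    ("https://example.com/img/hero.JPG", "https://example.com/img/hero.webp"),
    ("https://example.com/shop", "https://example.com/shop/"),
    ("https://example.com/blog/page/2", "https://example.com/blog/"),
    ("https://example.com/treats?page=3", "https://example.com/treats"),
    ("https://example.com/old-range", "https://example.com/range/"),
]

buckets = Counter(classify_redirect(src, dst) for src, dst in PAIRS)
print(buckets)
```

The "other" bucket is the one most worth a human look, since it may hold pages that once existed.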

This was an interesting lead, but most other recommendations were generic or misleading. ChatGPT’s attempt at specific advice was semi-intelligent, but the suggestions would have wasted time or caused more problems.

I’d grade this work a D or E, though many machines wouldn’t score that high on technical SEO from flat datasheets. I’m impressed, but I don’t recommend using AI for this level of technical SEO analysis yet.


Comparing AI insights to Ahrefs’ technical audit

Ahrefs has a sophisticated cloud-based SEO crawler. While Screaming Frog’s data can be more accurate, Ahrefs still provides solid data and better insights. I use both tools for site audits.

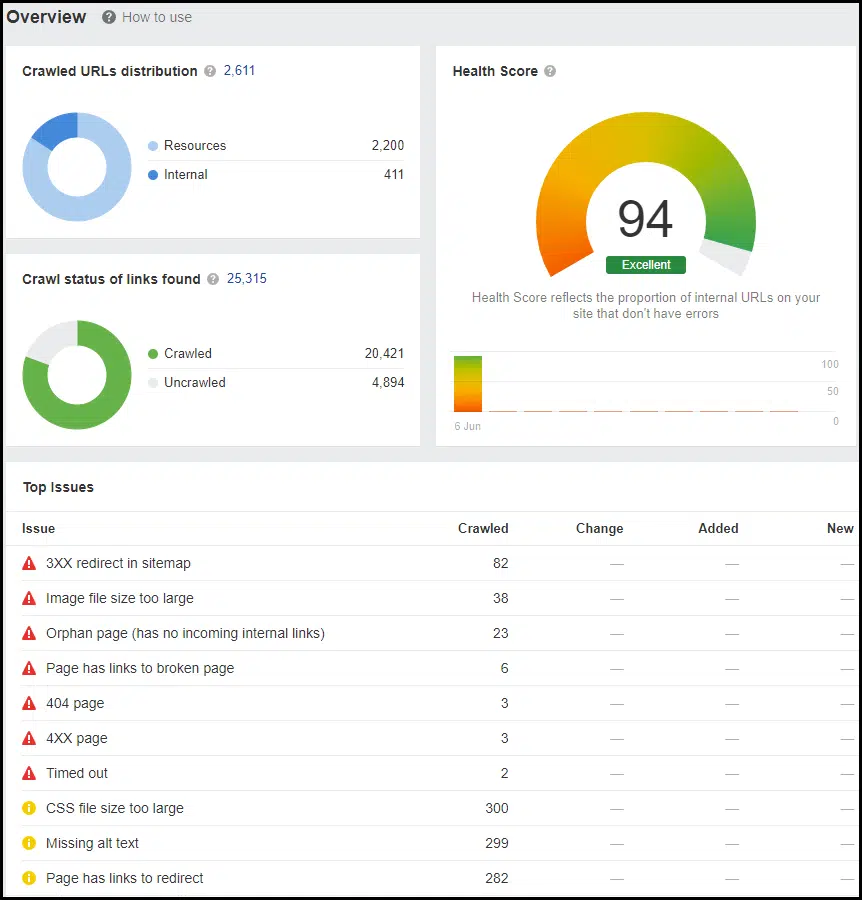

If we look at the output from a full Ahrefs technical SEO crawl, we may then be able to determine how close GPT-4o came to finding the truth. Let’s look at the Ahrefs overview:

Wow, that’s an excellent rating! Well done to the Butcher’s Food team.

Despite the high rating, some issues still persist. This is totally normal and is usually nothing to worry about.

What’s interesting here is that the remaining issues revolve around redirecting addresses and images whose file sizes may be too large. These were some of the top issues our AI assistant picked out from the Screaming Frog data.

What does this mean? Was the AI right all along, and perhaps we judged ChatGPT too harshly for “generic sounding” recommendations that were actually accurate?

Yes and no. Humans often feel the need to defend their roles against advancing technology, which can create a bias against it. This bias is common, even among those in the digital field.

However, this technology is not an immediate threat to technical SEO specialists. The advice given was not usable, but the AI’s mistakes were intelligent, similar to those of an inexperienced human who shows promise.

The general focus of the insights, like redirects and image compression, was accurate even if the specific advice wasn’t.

The final verdict

GPT-4o is a vast improvement over GPT-3.5-Turbo. However, I’m unconvinced that there is significant (or any) improvement over GPT-4, which seems to hallucinate less.

Currently, I see GPT-4o as a good middle ground between GPT-3.5-Turbo and GPT-4. To me, the results of GPT-4 seem superior. But GPT-4o is about as fast as GPT-3.5-Turbo and is more interactive.

I guess time will tell which model users will prefer. I’m willing to wait longer for GPT-4 to produce superior output, even if that output is limited by a (curated) data model.

ChatGPT’s advice was poor. It missed key points and offered generic, unhelpful suggestions. Its attempt at specific advice also failed.

However, there were signs of intelligence in its errors, similar to an inexperienced human making mistakes. This shows potential for improvement.

For now, don’t rely on this technology for technical SEO analysis, especially through simple chat interfaces. But keep an eye on it: an AI capable of providing valuable insights may be closer than we think.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.

Source link : Searchengineland.com
