Google Chrome uses a machine learning model for address bar autocomplete. This model, likely a multilayer perceptron (MLP), processes numerous input signals for each candidate suggestion and predicts a relevance score that is used to rank the suggestions.

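Here’s a breakdown of these signals. Each one is a float32 tensor of shape [-1,1], where -1 stands for the variable batch dimension; the bracketed pair is a shape, not a value range.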

Input Features:

User Browsing History:

- log_visit_count: (float32[-1,1]) Logarithmic count of user visits to the URL.

- log_typed_count: (float32[-1,1]) Logarithmic count of the URL being typed in the address bar.

- log_shortcut_visit_count: (float32[-1,1]) Logarithmic count of user visits to the URL via an address bar shortcut (previously typed text that led the user to select this URL), not a desktop shortcut.

- elapsed_time_last_visit_days: (float32[-1,1]) Days elapsed since the user last visited the URL.

- log_elapsed_time_last_visit_secs: (float32[-1,1]) Logarithmic seconds elapsed since the user last visited the URL.

- elapsed_time_last_shortcut_visit_days: (float32[-1,1]) Days elapsed since the user last visited the URL via an address bar shortcut.

- log_elapsed_time_last_shortcut_visit_sec: (float32[-1,1]) Logarithmic seconds elapsed since the user last visited the URL via an address bar shortcut.

- num_bookmarks_of_url: (float32[-1,1]) Count of bookmarks associated with the URL.

- shortest_shortcut_len: (float32[-1,1]) Length of the shortest address bar shortcut text recorded for the URL.
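
The history features above can be sketched as simple transforms of raw counters. This is only an illustration: the function name, the use of `log1p` (so that a count of zero stays finite), and the sample values are assumptions, not Chrome's actual preprocessing.

```python
import math

SECONDS_PER_DAY = 86_400


def history_features(visit_count, typed_count, secs_since_last_visit):
    """Turn raw history counters into model-style inputs (illustrative only)."""
    return {
        # Assumption: log1p is used so that a count of 0 maps to 0.0.
        "log_visit_count": math.log1p(visit_count),
        "log_typed_count": math.log1p(typed_count),
        # The same elapsed time is exposed twice: in days and as a log of seconds.
        "elapsed_time_last_visit_days": secs_since_last_visit / SECONDS_PER_DAY,
        "log_elapsed_time_last_visit_secs": math.log1p(secs_since_last_visit),
    }


# Hypothetical URL: visited 12 times, typed 3 times, last visited two days ago.
features = history_features(visit_count=12, typed_count=3,
                            secs_since_last_visit=172_800)
```

Exposing both a linear and a logarithmic view of the same quantity lets a small network pick whichever scale is more useful without having to learn the transform itself.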

Website Characteristics:

- length_of_url: (float32[-1,1]) Length of the URL string.

Match Characteristics:

- total_title_match_length: (float32[-1,1]) Total length of matches between the user’s input and the website title.

- total_bookmark_title_match_length: (float32[-1,1]) Total length of matches between the user’s input and the bookmark titles for the URL.

- total_host_match_length: (float32[-1,1]) Total length of matches between the user’s input and the URL host.

- total_path_match_length: (float32[-1,1]) Total length of matches between the user’s input and the URL path.

- total_query_or_ref_match_length: (float32[-1,1]) Total length of matches between the user’s input and the URL’s query or ref (fragment) component.

- first_url_match_position: (float32[-1,1]) Position of the first match between the user’s input and the URL.

- first_bookmark_title_match_position: (float32[-1,1]) Position of the first match between the user’s input and the bookmark titles for the URL.

- host_match_at_word_boundary: (float32[-1,1]) Boolean indicator of whether the host match occurs at a word boundary.

- has_non_scheme_www_match: (float32[-1,1]) Boolean indicator of whether the input matches some part of the URL other than the scheme (http/https) or the “www” prefix.

- is_host_only: (float32[-1,1]) Boolean indicator of whether the user’s input matches the host only.
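
As a rough illustration of the match features listed above, the sketch below computes a few of them with plain substring matching. The function name, the whitespace term splitting, the one-match-per-term rule, and the -1.0 placeholder for "no match" are all simplifying assumptions; Chrome's real matching logic is more involved.

```python
from urllib.parse import urlsplit


def match_features(user_input, url):
    """Simplified match signals: one case-insensitive substring match per term."""
    terms = user_input.lower().split()
    lowered = url.lower()
    parts = urlsplit(lowered)

    def total_match_length(text):
        # Sum the lengths of the input terms that occur somewhere in `text`.
        return float(sum(len(term) for term in terms if term in text))

    positions = [lowered.find(term) for term in terms if term in lowered]
    return {
        "length_of_url": float(len(url)),
        "total_host_match_length": total_match_length(parts.netloc),
        "total_path_match_length": total_match_length(parts.path),
        # Assumption: -1.0 stands in for "no term matched the URL".
        "first_url_match_position": float(min(positions)) if positions else -1.0,
    }


signals = match_features("exam docs", "https://www.example.com/docs/intro")
```

Here "exam" matches inside the host and "docs" inside the path, so both match lengths are 4, and the first match starts right after the "https://www." prefix.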

Model Processing:

These features are fed into the neural network. The network architecture, including specific layers and weights, is defined within the model file.

Output:

The model outputs a prediction score (float32[-1,1]) representing the relevance of each potential autocomplete suggestion. This score is used to rank suggestions, with higher scores appearing higher in the address bar dropdown.
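
The ranking step described above amounts to a sort by descending score. A minimal sketch, with made-up suggestions and scores and a hypothetical `rank_suggestions` helper:

```python
def rank_suggestions(scored):
    """Order (suggestion, score) pairs so the highest score comes first."""
    ordered = sorted(scored, key=lambda pair: pair[1], reverse=True)
    return [suggestion for suggestion, _ in ordered]


# Hypothetical candidates with hypothetical model scores.
candidates = [
    ("example.com/docs", 0.41),
    ("example.com", 0.87),
    ("example.org", 0.12),
]
ranked = rank_suggestions(candidates)
```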

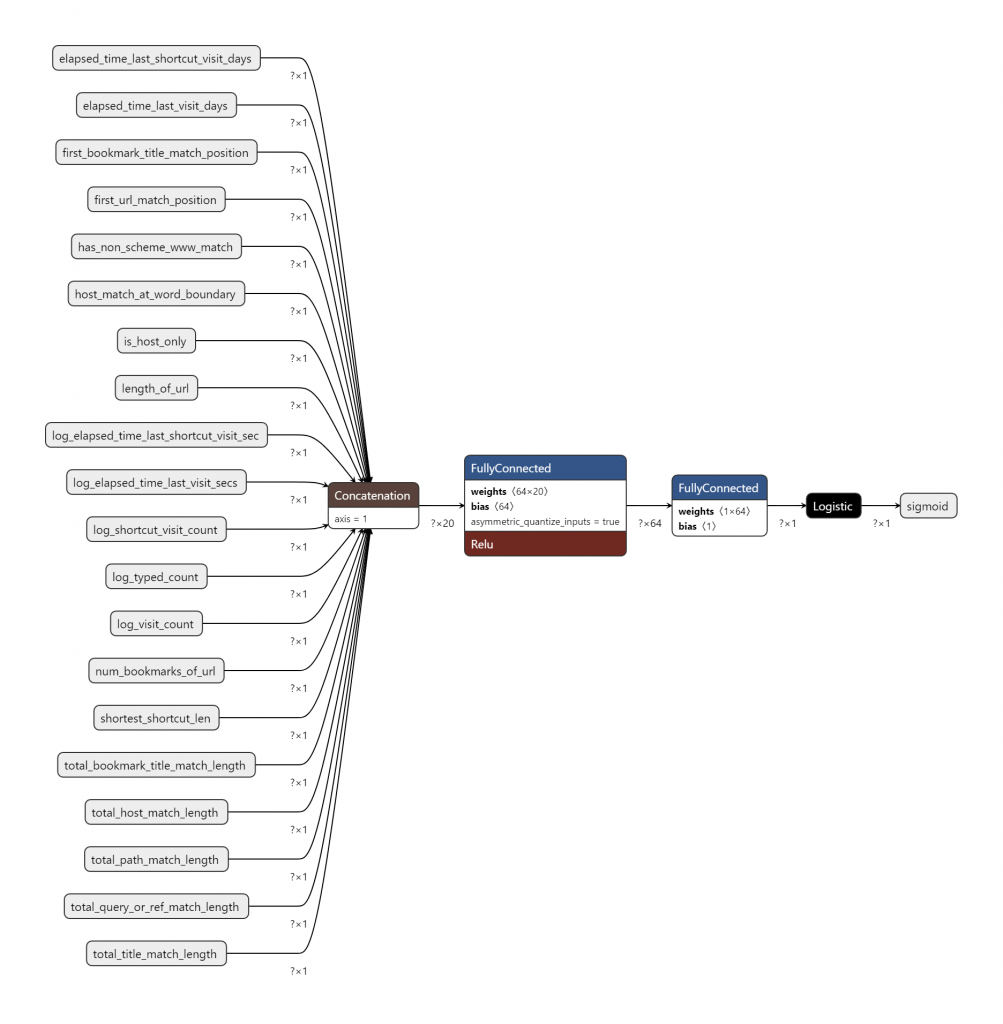

Model Architecture:

- Input Layer: 20 input features, each represented by a separate node (e.g., elapsed_time_last_shortcut_visit_days, log_visit_count, total_title_match_length).

- Concatenation Layer: All 20 input features are concatenated along axis 1, resulting in a single tensor of shape ? x 20. The “?” indicates a variable batch size.

- Dense Layer (FullyConnected): A fully connected layer with:

  - Weights: Shape 64 x 20, suggesting 64 neurons in this layer. The weights are quantized as int8 for efficiency.

  - Bias: Shape 64, a bias term for each neuron.

  - Activation Function: ReLU (Rectified Linear Unit).

  - Quantization: Asymmetric quantization of inputs is applied.

- Dense Layer (FullyConnected): Another fully connected layer with:

  - Weights: Shape 1 x 64, leading to a single output neuron.

  - Bias: Shape 1, a bias term for the output neuron.

- Logistic Layer: Applies the logistic (sigmoid) function to the output of the previous dense layer, producing a value between 0 and 1.

- Output Layer: A single output node (“sigmoid”) representing the predicted score.
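
The layer stack above can be written out as a forward pass for a single suggestion (batch size 1). This is a sketch in plain Python: the layer shapes come from the description above, but the weights here are random placeholders rather than the values stored in the model file, and quantization is ignored.

```python
import math
import random

NUM_FEATURES, HIDDEN_UNITS = 20, 64


def dense(x, weights, bias):
    """Fully connected layer; `weights` has shape (outputs x inputs)."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def forward(features, w1, b1, w2, b2):
    hidden = [max(0.0, h) for h in dense(features, w1, b1)]  # 64 units + ReLU
    logit = dense(hidden, w2, b2)[0]                          # single output unit
    return 1.0 / (1.0 + math.exp(-logit))                     # logistic (sigmoid)


# Placeholder weights: random values, NOT the trained parameters.
rng = random.Random(0)
w1 = [[rng.uniform(-0.1, 0.1) for _ in range(NUM_FEATURES)]
      for _ in range(HIDDEN_UNITS)]                           # shape 64 x 20
b1 = [0.0] * HIDDEN_UNITS                                     # shape 64
w2 = [[rng.uniform(-0.1, 0.1) for _ in range(HIDDEN_UNITS)]]  # shape 1 x 64
b2 = [0.0]                                                    # shape 1

features = [rng.random() for _ in range(NUM_FEATURES)]  # the 20 concatenated inputs
score = forward(features, w1, b1, w2, b2)
```

Whatever the weights are, the final logistic step keeps `score` strictly between 0 and 1.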

Key Observations:

- Simple Architecture: The model consists of a single hidden dense layer (64 units, ReLU activation) followed by a one-unit dense output layer with a sigmoid activation.

- Quantization: The model employs quantization to reduce size and improve performance, using int8 weights for the first dense layer.

- Feature Engineering: The input features are a combination of raw values and engineered features (e.g., logarithmic transformations, match lengths, boolean indicators).
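
To make the quantization observation concrete, the sketch below shows the usual affine int8 scheme, in which a real value is approximated as `scale * (q - zero_point)`. The `scale` and `zero_point` values are hypothetical; the real ones are stored alongside each tensor in the model file.

```python
def quantize(x, scale, zero_point):
    """Map a real value to an int8 code, clamped to the range [-128, 127]."""
    q = round(x / scale) + zero_point
    return max(-128, min(127, q))


def dequantize(q, scale, zero_point):
    """Recover the approximate real value from an int8 code."""
    return scale * (q - zero_point)


# Hypothetical quantization parameters for illustration only.
scale, zero_point = 0.02, -5

q = quantize(0.5, scale, zero_point)
approx = dequantize(q, scale, zero_point)
```

Each weight then takes one byte instead of four, at the cost of a rounding error of at most half a `scale` step for values inside the representable range.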